Social and Information Networks

Covers the design, analysis, and modeling of social and information networks.

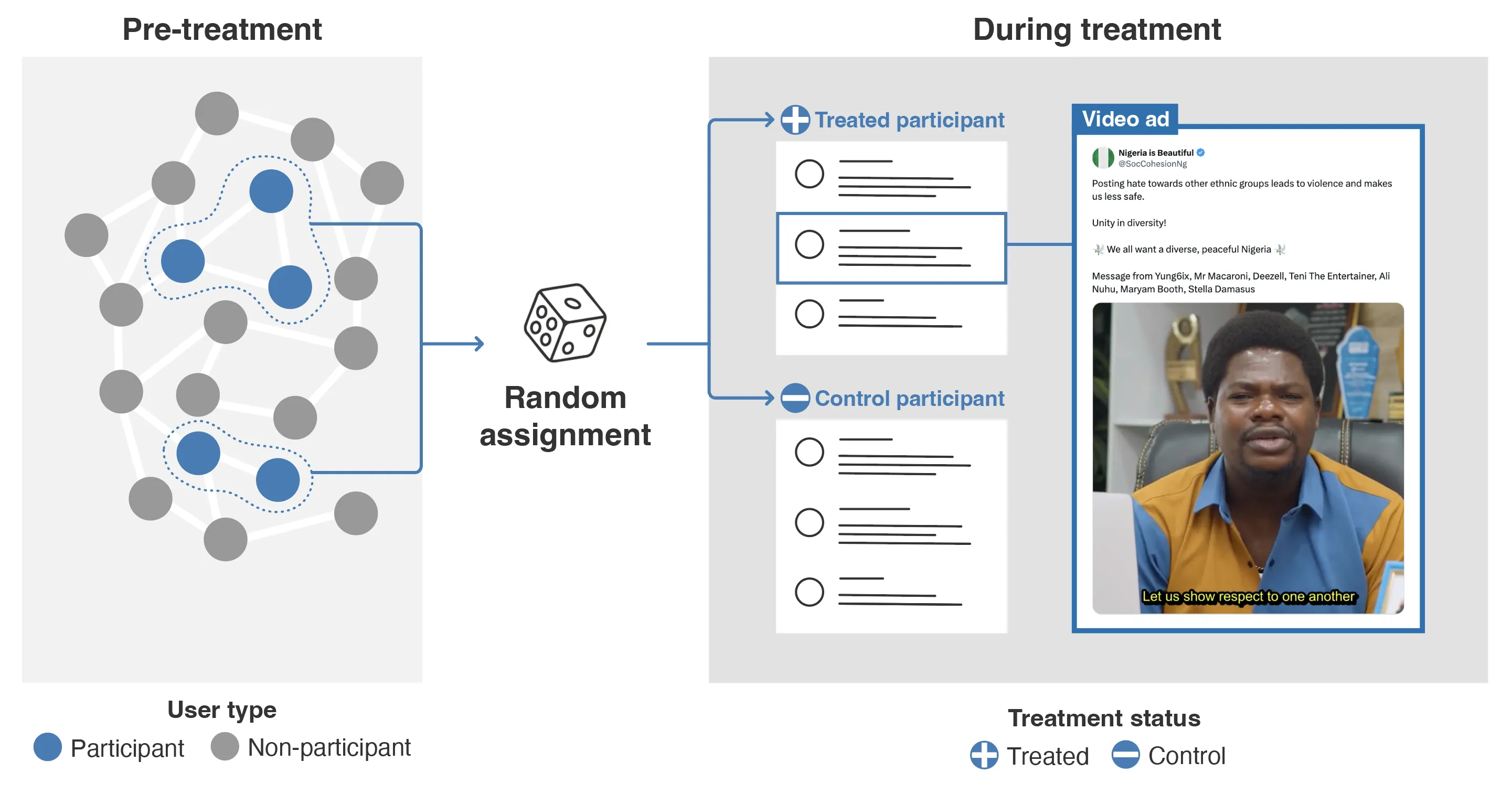

Online hate spreads rapidly, yet little is known about whether preventive and scalable strategies can curb it. We conducted the largest randomized controlled trial of hate speech prevention to date: a 20-week messaging campaign on X in Nigeria targeting ethnic hate. 73,136 users who had previously engaged with hate speech were randomly assigned to receive prosocial video messages from Nigerian celebrities. The campaign reduced hate content by 2.5% to 5.5% during treatment, with about 75% of the reduction persisting over the following four months. Reaching a larger share of a user's audience reduced amplification of that user's hate posts among both treated and untreated users, cutting hate reposts by over 50% for the most exposed accounts. Scalable messaging can limit online hate without removing content.

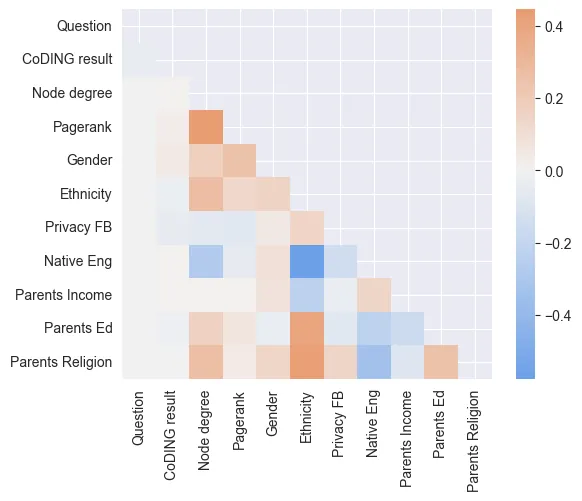

The ways in which people's opinions change are subject to many different influences. The factors that affect how a person arrives at an opinion reflect how they have been shaped throughout their life by their environment, education, material status, the belief systems they subscribe to, and the socio-economic minorities they belong to. This already complex system is further complicated by the ever-changing nature of one's social network. It is therefore no surprise that many models tend to perform best for the majority of the population and to discriminate against members of marginalized groups. This bias, and the study of how to counter it, is the subject of the rapidly developing field of Fairness in Social Network Analysis (SNA). This work examines how a state-of-the-art model discriminates against certain minority groups and whether it is possible to reliably predict for whom it will perform worse. Moreover, is such prediction possible based solely on one's demographic or topological features? To this end, we employ the NetSense dataset together with the state-of-the-art CoDiNG model for opinion prediction. We explore how three classifier models (Demography-Based, Topology-Based, and Hybrid) perform when assessing for whom this algorithm will provide inaccurate predictions. Finally, through a comprehensive analysis of these experimental results, we identify four key patterns of algorithmic bias. Our findings suggest that no single paradigm provides the best results and that there is a real need for context-aware strategies in fairness-oriented social network analysis. We conclude that a multi-faceted approach, incorporating both individual attributes and network structures, is essential for reducing algorithmic bias and promoting inclusive decision-making.

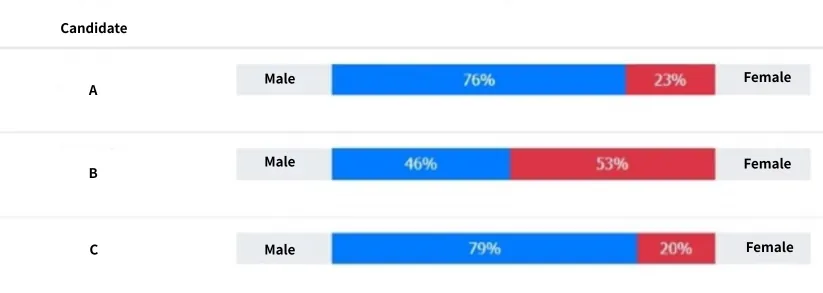

This study examines the relationship between online buzz and local election outcomes in Taiwan, with a focus on Taitung County. As social media becomes a major channel for public discourse, online buzz is increasingly seen as a factor influencing elections. However, its impact on local elections in Taiwan remains underexplored. This research addresses that gap through a comparative analysis of social media data and actual vote shares during the election period. A review of existing literature establishes the study's framework and highlights the need for empirical investigation in this area. The findings aim to reveal whether online discussions align with electoral results and to what extent digital sentiment reflects voter behavior. The study also discusses methodological and data limitations that may affect interpretation. Beyond its academic value, the research offers practical insights into how online buzz can inform campaign strategies and enhance election predictions. By analyzing the Taitung County case, this study contributes to a deeper understanding of the role of online discourse in Taiwan's local elections and offers a foundation for future research in the field.

Taiwan Cultural Memory Bank 2.0 is an online curation platform that invites the public to become curators, fostering diverse perspectives on Taiwan's society, humanities, natural landscapes, and daily life. Built on a material bank concept, the platform encourages users to co-create and curate their own works using shared resources or self-uploaded materials. At its core, the system follows a collect, store, access, and reuse model, supporting dynamic engagement with over three million cultural memory items from Taiwan. Users can search, browse, explore stories, and engage in creative applications and collaborative productions. Understanding user profiles is crucial for enhancing website service quality, particularly within the framework of the Visitor Relationship Management model. This study conducts an empirical analysis of user profiles on the platform, examining demographic characteristics, browsing behaviors, and engagement patterns. Additionally, the research evaluates the platform's SEO performance, search visibility, and organic traffic effectiveness. Based on the findings, this study provides strategic recommendations for optimizing website management, improving user experience, and leveraging social media for enhanced digital outreach. The insights gained contribute to the broader discussion on digital cultural platforms and their role in audience engagement, online visibility, and networked communication.

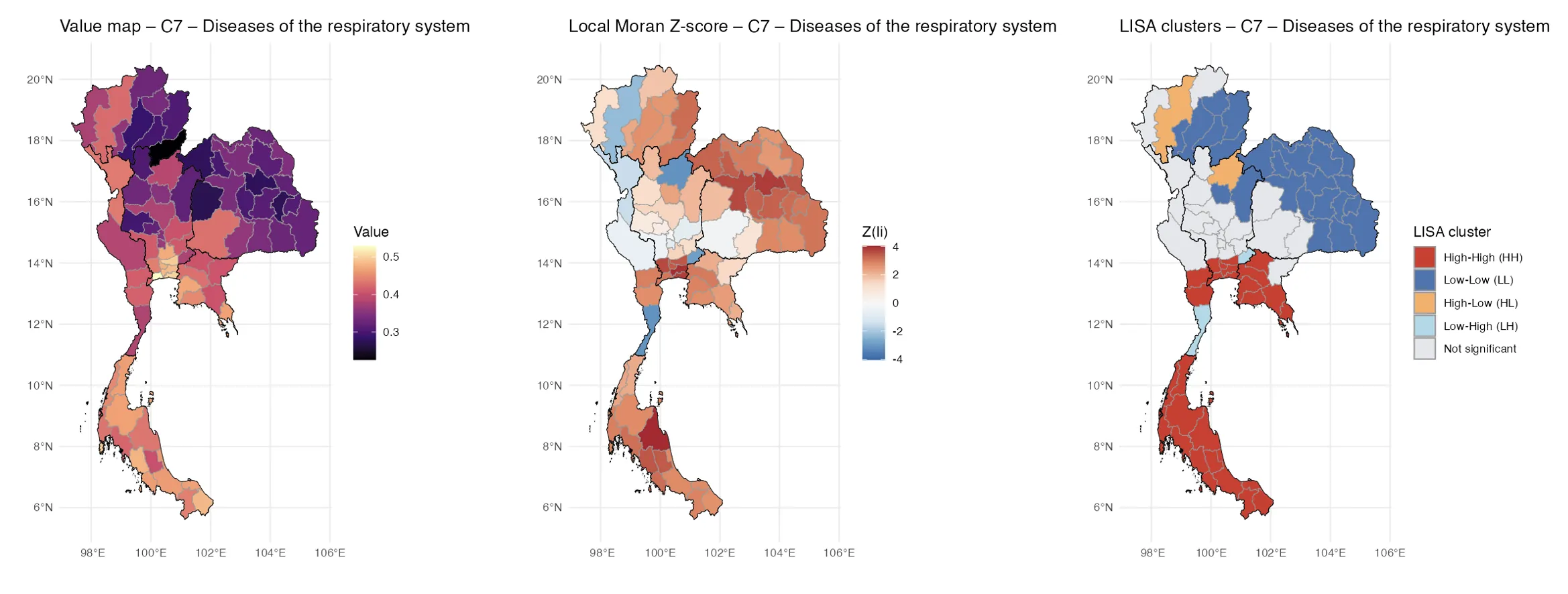

Health and poverty in Thailand exhibit pronounced geographic structuring, yet the extent to which they operate as interconnected regional systems remains insufficiently understood. This study analyzes ICD-10 chapter-level morbidity and multidimensional poverty as outcomes embedded in a spatial interaction network. Interpreting Thailand's 76 provinces as nodes within a fixed-degree regional graph, we apply tools from spatial econometrics and social network analysis, including Moran's I, Local Indicators of Spatial Association (LISA), and Spatial Durbin Models (SDM), to assess spatial dependence and cross-provincial spillovers. Our findings reveal strong spatial clustering across multiple ICD-10 chapters, with persistent high-high morbidity zones, particularly for digestive, respiratory, musculoskeletal, and symptom-based diseases, emerging in well-defined regional belts. SDM estimates demonstrate that spillover effects from neighboring provinces frequently exceed the influence of local deprivation, especially for living-condition, health-access, accessibility, and poor-household indicators. These patterns are consistent with contagion and contextual influence processes well established in social network theory. By framing morbidity and poverty as interdependent attributes on a spatial network, this study contributes to the growing literature on structural diffusion, health inequality, and regional vulnerability. The results highlight the importance of coordinated policy interventions across provincial boundaries and demonstrate how network-based modeling can uncover the spatial dynamics of health and deprivation.
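
As a rough illustration of the spatial-dependence statistic named above, the following sketch computes global Moran's I, $I = \frac{n}{S_0} \cdot \frac{\sum_{i,j} w_{ij}(x_i - \bar{x})(x_j - \bar{x})}{\sum_i (x_i - \bar{x})^2}$, where $S_0$ is the sum of all weights. The function names, the sparse weight map, and the toy path graph are assumptions made for illustration; they are not the study's provincial graph or code.

```python
from typing import Dict, List, Tuple

Weights = Dict[Tuple[int, int], float]


def morans_i(values: List[float], weights: Weights) -> float:
    """Global Moran's I for node values and a sparse weight map w[(i, j)]."""
    n = len(values)
    mean = sum(values) / n
    dev = [v - mean for v in values]
    s0 = sum(weights.values())  # total weight S_0
    # Weighted cross-products of deviations between linked nodes.
    cross = sum(w * dev[i] * dev[j] for (i, j), w in weights.items())
    var = sum(d * d for d in dev)
    return (n / s0) * (cross / var)


def path_weights(n: int) -> Weights:
    """Symmetric binary weights for a toy path graph 0-1-...-(n-1)."""
    w: Weights = {}
    for i in range(n - 1):
        w[(i, i + 1)] = 1.0
        w[(i + 1, i)] = 1.0
    return w


if __name__ == "__main__":
    w = path_weights(4)
    print(morans_i([1.0, 2.0, 3.0, 4.0], w))    # smooth gradient: positive
    print(morans_i([1.0, -1.0, 1.0, -1.0], w))  # alternating: negative
```

Positive values indicate that neighboring nodes carry similar values (clustering), while negative values indicate alternation between neighbors.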

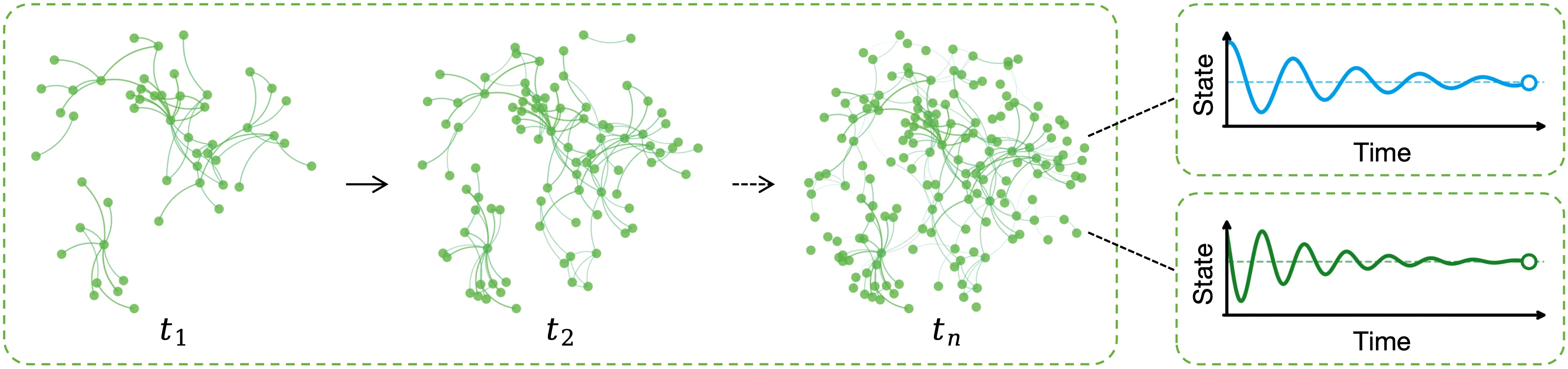

Inferring a network's evolutionary history from a single final snapshot with limited temporal annotations is fundamental yet challenging. Existing approaches predominantly rely on topology alone, which often provides insufficient and noisy cues. This paper leverages network steady-state dynamics -- converged node states under a given dynamical process -- as an additional and widely accessible observation for network evolution history inference. We propose CS$^2$, which explicitly models structure-state coupling to capture how topology modulates steady states and how the two signals jointly improve edge discrimination for formation-order recovery. Experiments on six real temporal networks, evaluated under multiple dynamical processes, show that CS$^2$ consistently outperforms strong baselines, improving pairwise edge precedence accuracy by 4.0% on average and global ordering consistency (Spearman-$ρ$) by 7.7% on average. CS$^2$ also more faithfully recovers macroscopic evolution trajectories such as clustering formation, degree heterogeneity, and hub growth. Moreover, a steady-state-only variant remains competitive when reliable topology is limited, highlighting steady states as an independent signal for evolution inference.

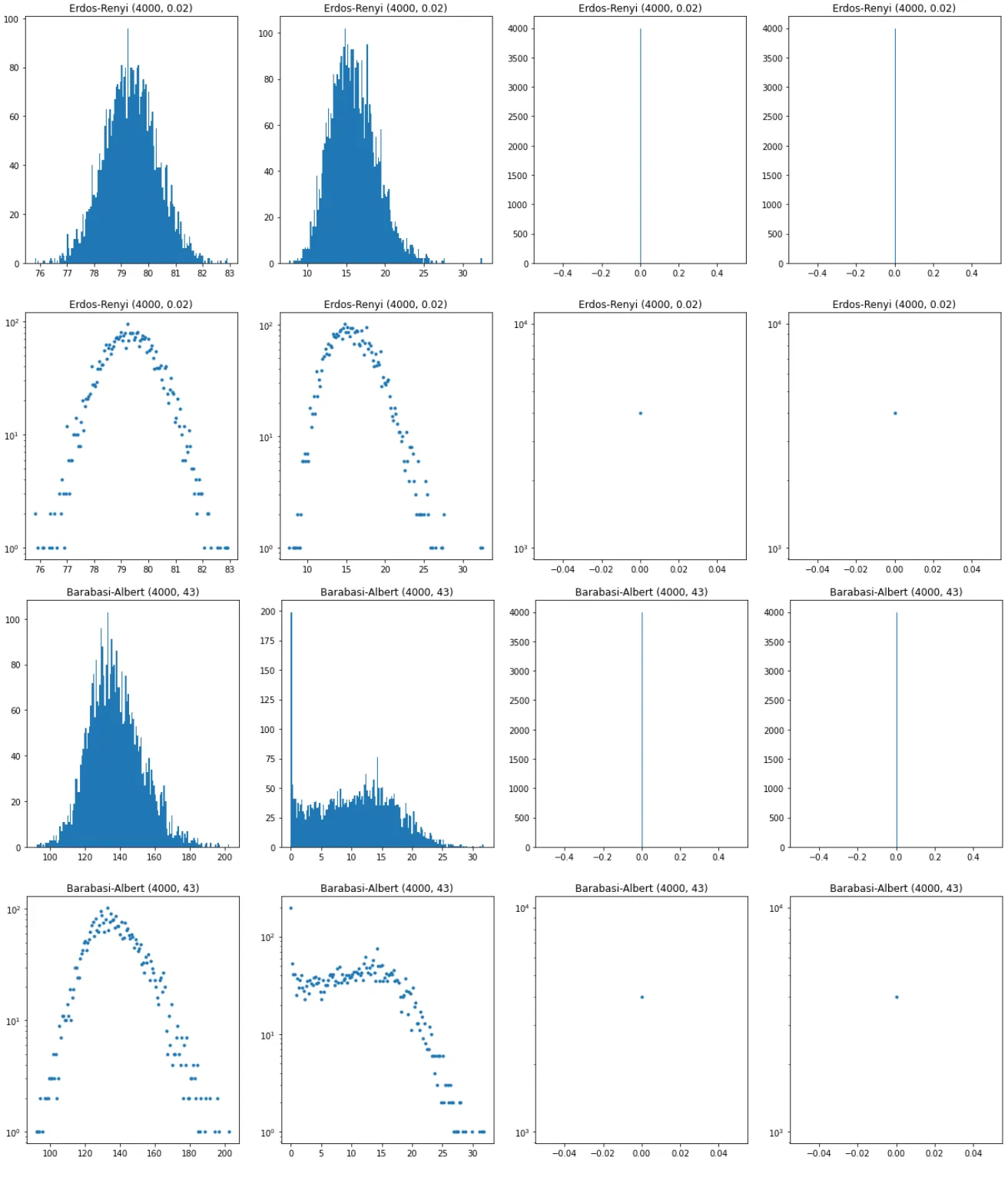

In this article, we propose a generalization of two known invariants of real networks: degree and ksi-centrality. More precisely, we find a series of centralities based on the Laplacian matrix that have exponential distributions (power-law in the case $j = 0$) for real networks and different distributions for artificial ones.

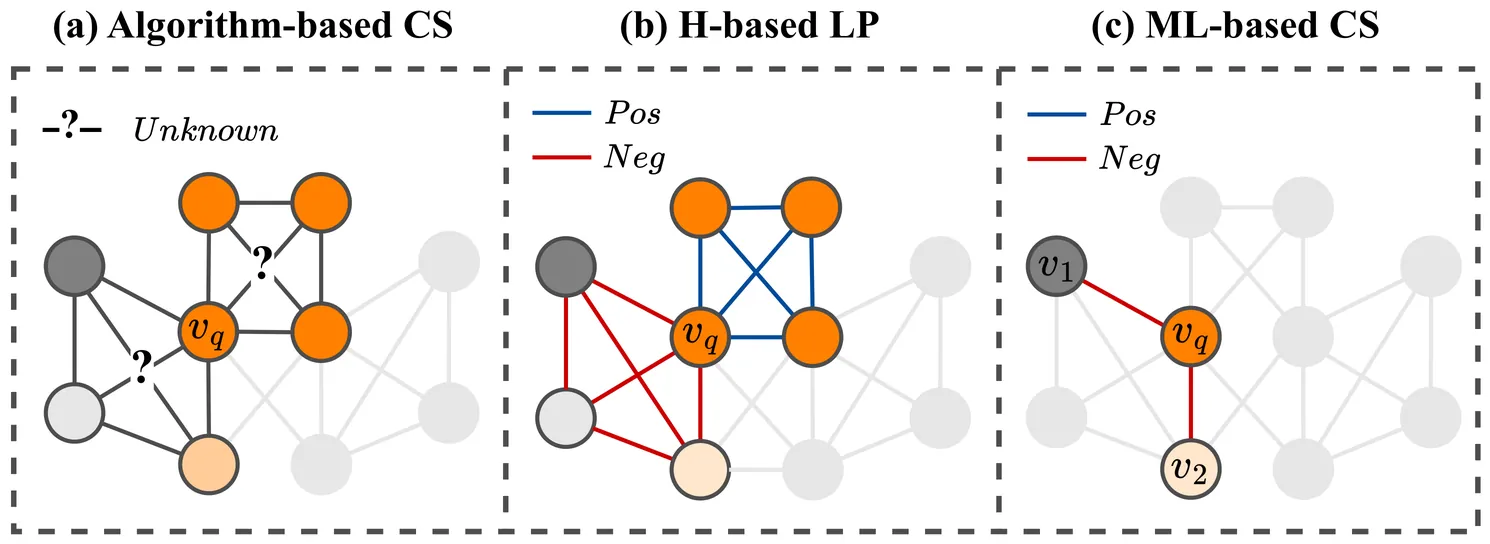

Community search aims to identify a refined set of nodes that are most relevant to a given query, supporting tasks ranging from fraud detection to recommendation. Unlike homophilic graphs, many real-world networks are heterophilic, where edges predominantly connect dissimilar nodes. Therefore, structural signals that once reflected smooth, low-frequency similarity now appear as sharp, high-frequency contrasts. However, both classical algorithms (e.g., k-core, k-truss) and recent ML-based models struggle to achieve effective community search on heterophilic graphs, where edge signs or semantics are generally unknown. Algorithm-based methods often return communities with mixed class labels, while GNNs, built on homophily, smooth away meaningful signals and blur community boundaries. Therefore, we propose Adaptive Community Search (AdaptCS), a unified framework featuring three key designs: (i) an AdaptCS Encoder that disentangles multi-hop and multi-frequency signals, enabling the model to capture both smooth (homophilic) and contrastive (heterophilic) relations; (ii) a memory-efficient low-rank optimization that removes the main computational bottleneck and ensures model scalability; and (iii) an Adaptive Community Score (ACS) that guides online search by balancing embedding similarity and topological relations. Extensive experiments on both heterophilic and homophilic benchmarks demonstrate that AdaptCS outperforms the best-performing baseline by an average of 11% in F1-score, retains robustness across heterophily levels, and achieves up to 2 orders of magnitude speedup.
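
For context, the classical k-core baseline mentioned above can be sketched as follows. This is not AdaptCS; it is a minimal peeling-based community search, assuming an undirected graph without self-loops stored as a dictionary of neighbor sets in which every neighbor also appears as a key. The name `k_core_community` is a hypothetical helper.

```python
from collections import deque
from typing import Dict, Hashable, Set

Graph = Dict[Hashable, Set[Hashable]]


def k_core_community(graph: Graph, query: Hashable, k: int) -> Set[Hashable]:
    """Return the connected component of the k-core that contains `query`."""
    alive = {u: set(nbrs) for u, nbrs in graph.items()}  # working copy
    removed: Set[Hashable] = set()
    queue = deque(u for u, nbrs in alive.items() if len(nbrs) < k)
    # Peel nodes whose remaining degree is below k.
    while queue:
        u = queue.popleft()
        if u in removed:
            continue
        removed.add(u)
        for v in list(alive[u]):
            alive[v].discard(u)
            if v not in removed and len(alive[v]) < k:
                queue.append(v)
        alive[u] = set()
    if query not in alive or query in removed:
        return set()
    # Breadth-first search inside the surviving k-core.
    community = {query}
    frontier = deque([query])
    while frontier:
        u = frontier.popleft()
        for v in alive[u]:
            if v not in community:
                community.add(v)
                frontier.append(v)
    return community


if __name__ == "__main__":
    g = {"a": {"b", "c"}, "b": {"a", "c"}, "c": {"a", "b", "d"}, "d": {"c"}}
    print(k_core_community(g, "a", 2))
```

Because this baseline uses only degree constraints, it has no notion of node labels, which is consistent with the abstract's remark that such methods can return communities with mixed classes on heterophilic graphs.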

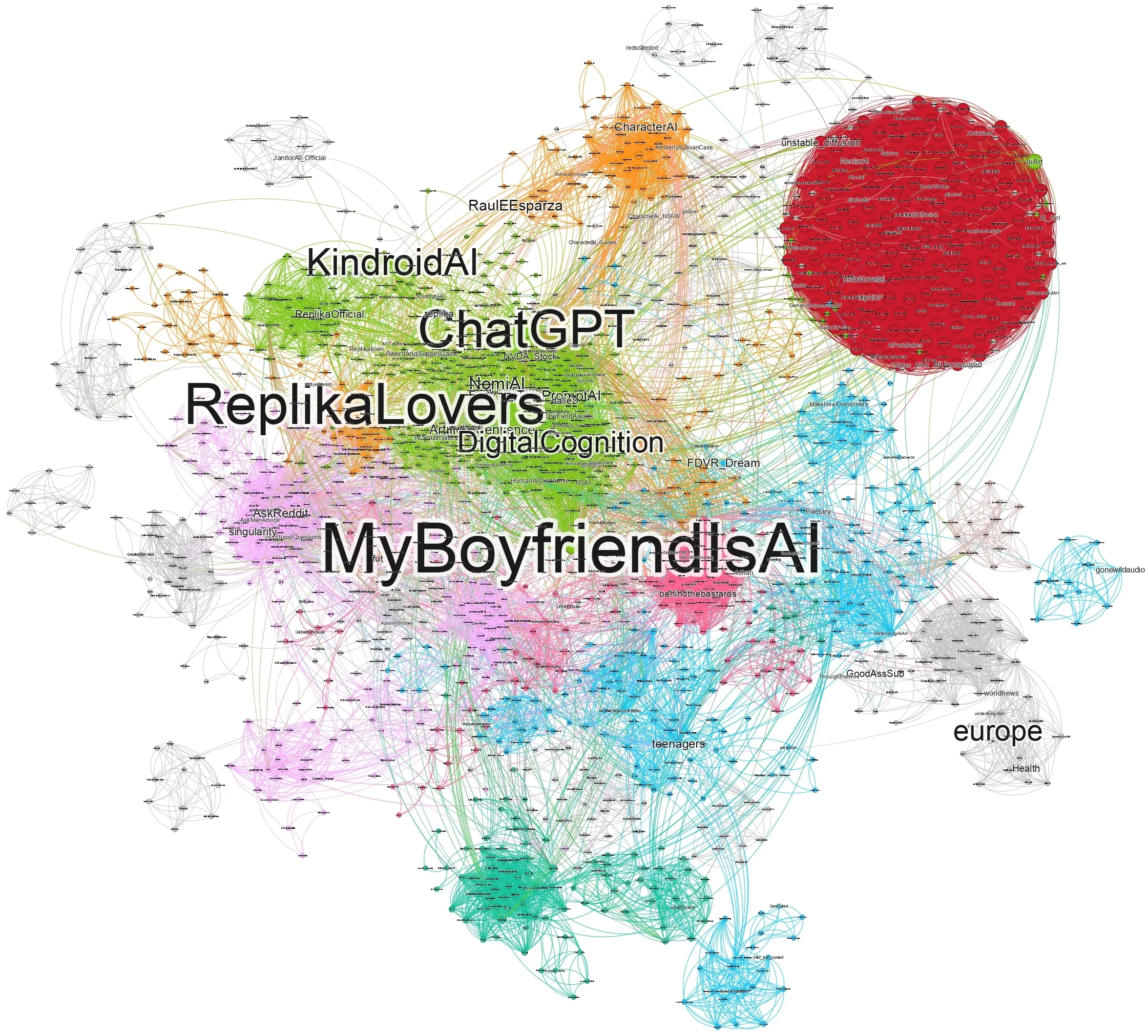

AI-companionship platforms are rapidly reshaping how people form emotional, romantic, and parasocial bonds with non-human agents, raising new questions about how these relationships intersect with gendered online behavior and exposure to harmful content. Focusing on the MyBoyfriendIsAI (MBIA) subreddit, we reconstruct the Reddit activity histories of more than 3,000 highly engaged users over two years, yielding over 67,000 historical submissions. We then situate MBIA within a broader ecosystem by building a historical interaction network spanning more than 2,000 subreddits, which enables us to trace cross-community pathways and measure how toxicity and emotional expression vary across these trajectories. We find that MBIA users primarily traverse four surrounding community spheres (AI-companionship, porn-related, forum-like, and gaming) and that participation across the ecosystem exhibits a distinct gendered structure, with substantial engagement by female users. While toxicity is generally low across most pathways, we observe localized spikes concentrated in a small subset of AI-porn and gender-oriented communities. Nearly 16% of users engage with gender-focused subreddits, and their trajectories display systematically different patterns of emotional expression and elevated toxicity, suggesting that a minority of gendered pathways may act as toxicity amplifiers within the broader AI-companionship ecosystem. These results characterize the gendered structure of cross-community participation around AI companionship on Reddit and highlight where risks concentrate, informing measurement, moderation, and design practices for human-AI relationship platforms.

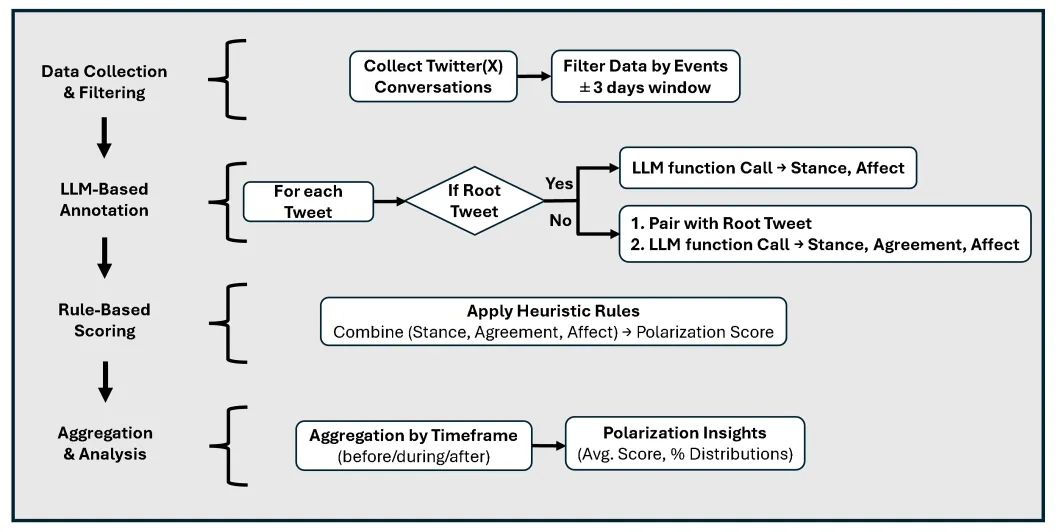

Understanding affective polarization in online discourse is crucial for evaluating the societal impact of social media interactions. This study presents a novel framework that leverages large language models (LLMs) and domain-informed heuristics to systematically analyze and quantify affective polarization in discussions on divisive topics such as climate change and gun control. Unlike most prior approaches that relied on sentiment analysis or predefined classifiers, our method integrates LLMs to extract stance, affective tone, and agreement patterns from large-scale social media discussions. We then apply a rule-based scoring system capable of quantifying affective polarization even in small conversations consisting of single interactions, based on stance alignment, emotional content, and interaction dynamics. Our analysis reveals distinct polarization patterns that are event dependent: (i) anticipation-driven polarization, where extreme polarization escalates before well-publicized events, and (ii) reactive polarization, where intense affective polarization spikes immediately after sudden, high-impact events. By combining AI-driven content annotation with domain-informed scoring, our framework offers a scalable and interpretable approach to measuring affective polarization. The source code is publicly available at: https://github.com/hasanjawad001/llm-social-media-polarization.

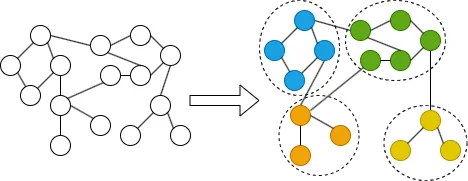

Influence Maximization (IM) seeks to identify a small set of seed nodes in a social network to maximize expected information spread under a diffusion model. While community-based approaches improve scalability by exploiting modular structure, they typically assume independence between communities, overlooking inter-community influence, a limitation that reduces effectiveness in real-world networks. We introduce Community-IM++, a scalable framework that explicitly models cross-community diffusion through a principled heuristic based on community-based diffusion degree (CDD) and a progressive budgeting strategy. The algorithm partitions the network, computes CDD to prioritize bridging nodes, and allocates seeds adaptively across communities using lazy evaluation to minimize redundant computations. Experiments on large real-world social networks under different edge weight models show that Community-IM++ achieves near-greedy influence spread at up to 100 times lower runtime, while outperforming Community-IM and degree heuristics across budgets and structural conditions. These results demonstrate the practicality of Community-IM++ for large-scale applications such as viral marketing, misinformation control, and public health campaigns, where efficiency and cross-community reach are critical.
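
To make the "near-greedy" reference point concrete, here is a minimal sketch of plain greedy seed selection under the Independent Cascade model with Monte Carlo spread estimation. It is not Community-IM++: it has no community partitioning, no CDD, and no lazy evaluation. The uniform activation probability `p`, the number of runs, and all function names are assumptions for illustration.

```python
import random
from typing import Dict, Hashable, List

Graph = Dict[Hashable, List[Hashable]]  # directed adjacency lists


def simulate_ic(graph: Graph, seeds: List[Hashable], p: float,
                rng: random.Random) -> int:
    """One Independent Cascade run; returns the number of activated nodes."""
    active = set(seeds)
    frontier = list(active)
    while frontier:
        nxt = []
        for u in frontier:
            for v in graph.get(u, []):
                # Each newly active node gets one chance per out-neighbor.
                if v not in active and rng.random() < p:
                    active.add(v)
                    nxt.append(v)
        frontier = nxt
    return len(active)


def expected_spread(graph: Graph, seeds: List[Hashable], p: float,
                    runs: int, rng: random.Random) -> float:
    """Monte Carlo estimate of the expected spread of a seed set."""
    return sum(simulate_ic(graph, seeds, p, rng) for _ in range(runs)) / runs


def greedy_seeds(graph: Graph, k: int, p: float = 0.1, runs: int = 200,
                 seed: int = 0) -> List[Hashable]:
    """Pick k seeds, each time adding the node with the largest marginal gain."""
    rng = random.Random(seed)
    nodes = set(graph) | {v for nbrs in graph.values() for v in nbrs}
    chosen: List[Hashable] = []
    for _ in range(k):
        base = expected_spread(graph, chosen, p, runs, rng) if chosen else 0.0
        best, best_gain = None, float("-inf")
        for u in sorted(nodes - set(chosen), key=repr):
            gain = expected_spread(graph, chosen + [u], p, runs, rng) - base
            if gain > best_gain:
                best, best_gain = u, gain
        if best is None:
            break
        chosen.append(best)
    return chosen


if __name__ == "__main__":
    star = {"hub": ["a", "b", "c"], "a": [], "b": [], "c": []}
    print(greedy_seeds(star, k=1, p=1.0, runs=5))
```

Each greedy round re-estimates the spread for every remaining candidate, which is the cost that community-based and lazy-evaluation strategies aim to reduce.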

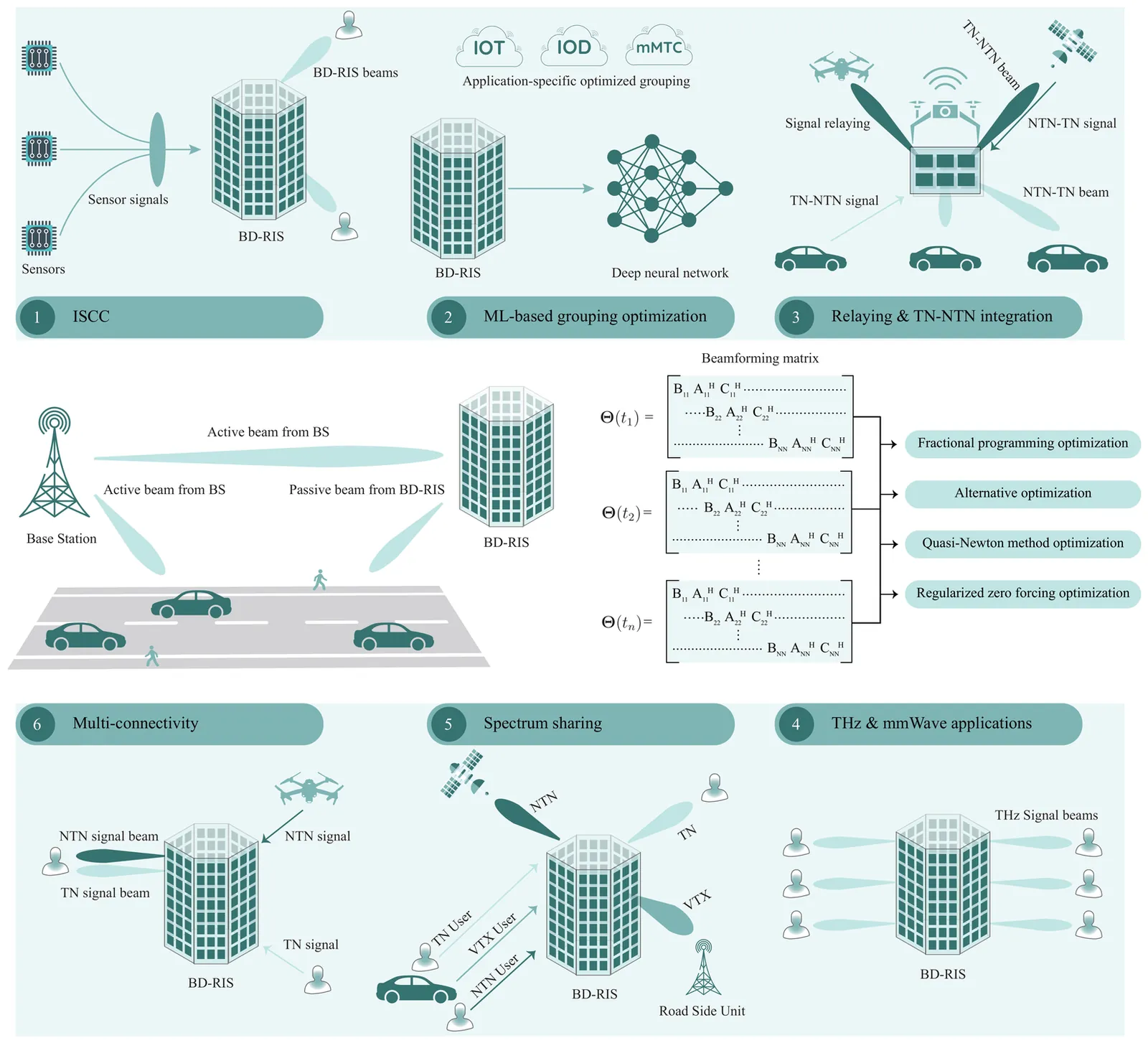

A beyond-diagonal reconfigurable intelligent surface (BD-RIS) is an innovative type of reconfigurable intelligent surface (RIS) that has recently been proposed and is considered a revolutionary advancement in wave manipulation. Unlike the mutually disconnected arrangement of elements in traditional RISs, BD-RIS creates cost-effective and simple inter-element connections, allowing for greater freedom in configuring the amplitude and phase of impinging waves. However, there are numerous underlying challenges in realizing the advantages associated with BD-RIS, prompting the research community to actively investigate cutting-edge schemes and algorithms in this direction. Particularly, the passive beamforming design for BD-RIS under specific environmental conditions has become a major focus in this research area. In this article, we provide a systematic introduction to BD-RIS, elaborating on its functional principles concerning architectural design, promising advantages, and classification. Subsequently, we present recent advances and identify a series of challenges and opportunities. Additionally, we consider a specific case study where beamforming is designed using four different algorithms, and we analyze their performance with respect to sum rate and computation cost. To augment the beamforming capabilities in 6G BD-RIS with quantum enhancement, we analyze various hybrid quantum-classical machine learning (ML) models to improve beam prediction performance, employing real-world communication Scenario 8 from the DeepSense 6G dataset. Consequently, we derive useful insights about the practical implications of BD-RIS.

When opinion spread is studied, peer pressure is often modeled by interactions of more than two individuals (higher-order interactions). In our work, we introduce a two-layer random hypergraph model, in which hyperedges represent households and workplaces. Within this overlapping, adaptive structure, individuals react if their opinion is in majority in their groups. The process evolves through random steps: individuals can either change their opinion, or quit their workplace and join another one in which their opinion belongs to the majority. Based on computer simulations, our first goal is to describe how the parameters governing the probabilities of changing opinion and of quitting a workplace affect homophily and the speed of polarization. We also analyze the model as a Markov chain and study the frequency of the absorbing states. Then, we quantitatively compare how different statistical and machine learning methods (in particular, linear regression, XGBoost, and a convolutional neural network) perform in estimating these probabilities from partial information about the process, for example, the distribution of opinion configurations within households and workplaces. Among other observations, we conclude that all methods can achieve the best results under appropriate circumstances, and that the amount of information necessary to provide good results depends on the strength of the peer pressure effect.

Although Graph Neural Networks (GNNs) have become the dominant approach for graph representation learning, their performance on link prediction tasks does not always surpass that of traditional heuristic methods such as Common Neighbors and Jaccard Coefficient. This is mainly because existing GNNs tend to focus on learning local node representations, making it difficult to effectively capture structural relationships between node pairs. Furthermore, excessive reliance on local neighborhood information can lead to over-smoothing. Prior studies have shown that introducing global structural encoding can partially alleviate this issue. To address these limitations, we propose a Community-Enhanced Link Prediction (CELP) framework that incorporates community structure to jointly model local and global graph topology. Specifically, CELP enhances the graph via community-aware, confidence-guided edge completion and pruning, while integrating multi-scale structural features to achieve more accurate link prediction. Experimental results across multiple benchmark datasets demonstrate that CELP achieves superior performance, validating the crucial role of community structure in improving link prediction accuracy.
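
The two heuristic baselines named above are simple enough to sketch directly. The snippet below is not CELP; it only shows Common Neighbors and the Jaccard Coefficient on an undirected graph stored as a dictionary of neighbor sets. The function names and the toy graph are illustrative assumptions.

```python
from typing import Dict, Hashable, Set

Graph = Dict[Hashable, Set[Hashable]]


def common_neighbors(graph: Graph, u: Hashable, v: Hashable) -> int:
    """Number of neighbors shared by u and v."""
    return len(graph.get(u, set()) & graph.get(v, set()))


def jaccard_coefficient(graph: Graph, u: Hashable, v: Hashable) -> float:
    """Shared neighbors divided by the size of the combined neighborhood."""
    nu, nv = graph.get(u, set()), graph.get(v, set())
    union = nu | nv
    return len(nu & nv) / len(union) if union else 0.0


if __name__ == "__main__":
    g = {
        "a": {"c", "d"},
        "b": {"c", "d", "e"},
        "c": {"a", "b"},
        "d": {"a", "b"},
        "e": {"b"},
    }
    print(common_neighbors(g, "a", "b"), jaccard_coefficient(g, "a", "b"))
```

Both scores depend on the pair of nodes jointly rather than on each node's representation separately, which is the kind of pairwise structural signal the abstract says node-centric GNNs find hard to capture.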

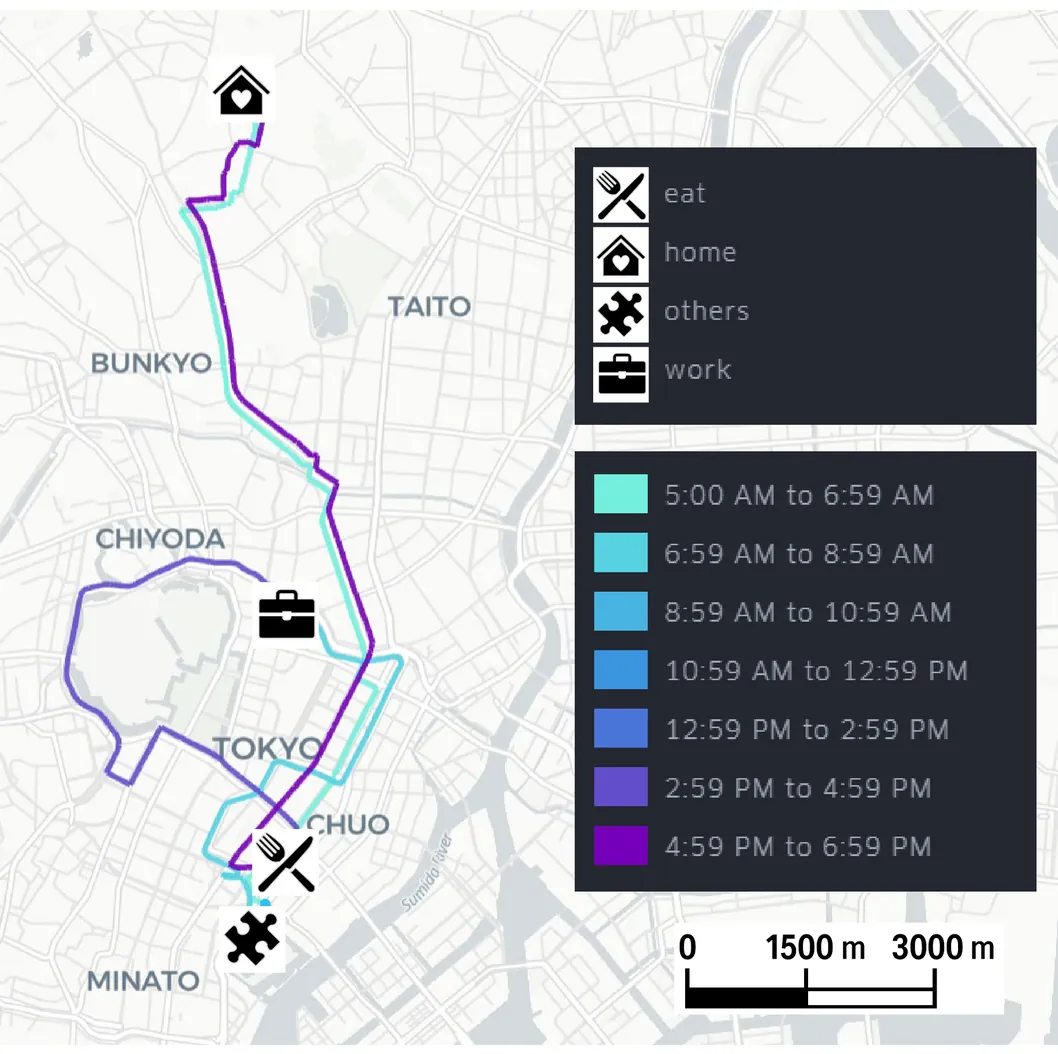

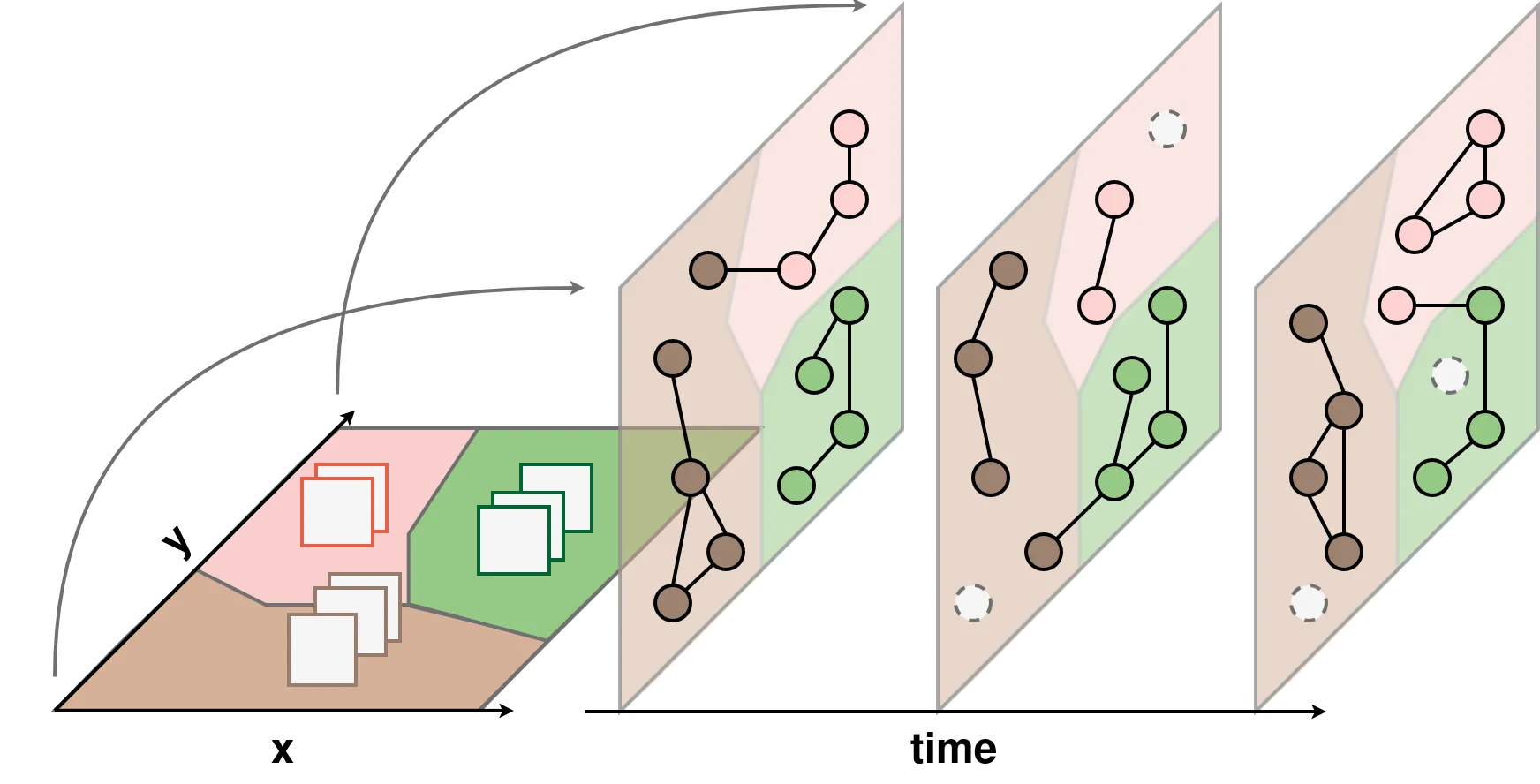

Urban mobility data are indispensable for urban planning, transportation demand forecasting, pandemic modeling, and many other applications; however, individual mobile phone-derived Global Positioning System traces cannot generally be shared with third parties owing to severe re-identification risks. Aggregated records, such as origin-destination (OD) matrices, offer partial insights but fail to capture the key behavioral properties of daily human movement, limiting realistic city-scale analyses. This study presents a privacy-preserving synthetic mobility dataset that reconstructs daily trajectories from aggregated inputs. The proposed method integrates OD flows with two complementary behavioral constraints: (1) dwell-travel time quantiles that are available only as coarse summary statistics and (2) the universal law for the daily distribution of the number of visited locations. Embedding these elements in a multi-objective optimization framework enables the reproduction of realistic distributions of human mobility while ensuring that no personal identifiers are required. The proposed framework is validated in two contrasting regions of Japan: (1) the 23 special wards of Tokyo, representing a dense metropolitan environment; and (2) Fukuoka Prefecture, where urban and suburban mobility patterns coexist. The resulting synthetic mobility data reproduce dwell-travel time and visit frequency distributions with high fidelity, while deviations in OD consistency remain within the natural range of daily fluctuations. The results of this study establish a practical synthesis pathway under real-world constraints, providing governments, urban planners, and industries with scalable access to high-resolution mobility data for reliable analytics without the need for sensitive personal records, and supporting practical deployments in policy and commercial domains.

Many complex real-world systems exhibit inherently intertwined temporal and spatial characteristics. Spatio-temporal knowledge graphs (STKGs) have therefore emerged as a powerful representation paradigm, as they integrate entities, relationships, time and space within a unified graph structure. They are increasingly applied across diverse domains, including environmental systems and urban, transportation, social and human mobility networks. However, modeling STKGs remains challenging: their foundations span classical graph theory as well as temporal and spatial graph models, which have evolved independently across different research communities and follow heterogeneous modeling assumptions and terminologies. As a result, existing approaches often lack conceptual alignment, generalizability and reusability. This survey provides a systematic review of spatio-temporal knowledge graph models, tracing their origins in static, temporal and spatial graph modeling. We analyze existing approaches along key modeling dimensions, including edge semantics, temporal and spatial annotation strategies, and temporal and spatial semantics, and relate these choices to their respective application domains. Our analysis reveals that unified modeling frameworks are largely absent and that most current models are tailored to specific use cases rather than designed for reuse or long-term knowledge preservation. Based on these findings, we derive modeling guidelines and identify open challenges to guide future research.

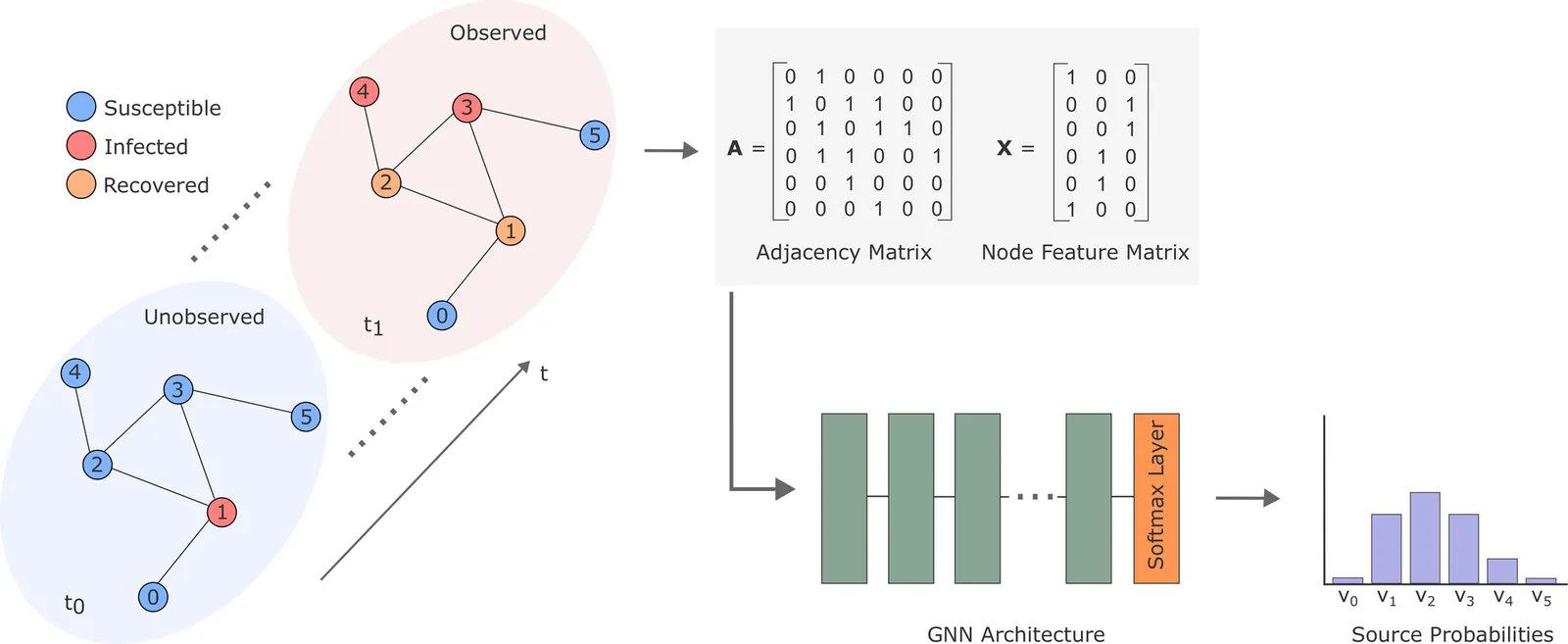

The source detection problem arises when an epidemic process unfolds over a contact network, and the objective is to identify its point of origin, i.e., the source node. Research on this problem began with the seminal work of Shah and Zaman in 2010, who formally defined it and introduced the notion of rumor centrality. With the emergence of Graph Neural Networks (GNNs), several studies have proposed GNN-based approaches to source detection. However, some of these works lack methodological clarity and/or are hard to reproduce. As a result, it remains unclear (to us, at least) whether GNNs truly outperform more traditional source detection methods across comparable settings. In this paper, we first review existing GNN-based methods for source detection, clearly outlining the specific settings each addresses and the models they employ. Building on this research, we propose a principled GNN architecture tailored to the source detection task. We also systematically investigate key questions surrounding this problem. Most importantly, we aim to provide a definitive assessment of how GNNs perform relative to other source detection methods. Our experiments show that GNNs substantially outperform all other methods we test across a variety of network types. Although we initially set out to challenge the notion of GNNs as a solution to source detection, our results instead demonstrate their remarkable effectiveness for this task. We discuss possible reasons for this strong performance. To ensure full reproducibility, we release all code and data on GitHub. Finally, we argue that epidemic source detection should serve as a benchmark task for evaluating GNN architectures.
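
As background for the traditional baseline mentioned above, rumor centrality on a tree can be written as $R(v) = n! / \prod_{u} T_u^v$, where $T_u^v$ is the size of the subtree rooted at $u$ when the tree is rooted at $v$. The sketch below evaluates this by brute force for every candidate root. It is not the paper's GNN architecture, and the function names and toy tree are assumptions for illustration.

```python
from math import factorial
from typing import Dict, Hashable, List

Tree = Dict[Hashable, List[Hashable]]  # undirected tree as adjacency lists


def rumor_centrality(tree: Tree, root: Hashable) -> int:
    """n! divided by the product of subtree sizes when rooted at `root`."""
    parent = {root: None}
    order = []  # preorder: every node appears after its parent
    stack = [root]
    while stack:
        u = stack.pop()
        order.append(u)
        for v in tree[u]:
            if v != parent[u]:
                parent[v] = u
                stack.append(v)
    size = {u: 1 for u in order}
    # Reverse preorder visits descendants before their ancestors.
    for u in reversed(order):
        if parent[u] is not None:
            size[parent[u]] += size[u]
    prod = 1
    for u in order:
        prod *= size[u]
    return factorial(len(order)) // prod


def rumor_center(tree: Tree) -> Hashable:
    """Node with the largest rumor centrality (ties broken arbitrarily)."""
    return max(tree, key=lambda v: rumor_centrality(tree, v))


if __name__ == "__main__":
    path = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
    print({v: rumor_centrality(path, v) for v in path}, rumor_center(path))
```

Re-rooting at every node costs quadratic time in the number of nodes; this version favors readability over efficiency.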

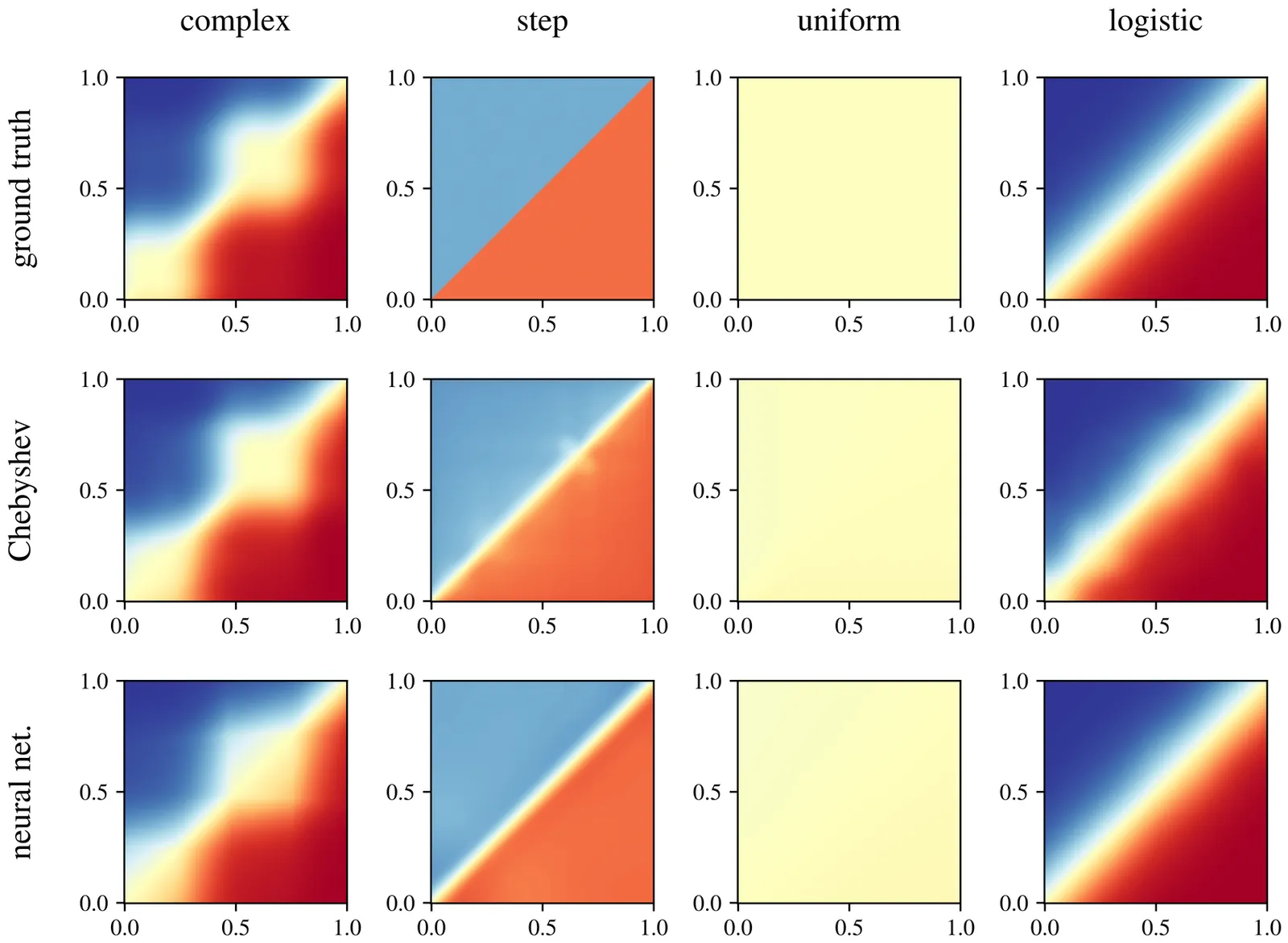

We consider the problem of ranking objects from noisy pairwise comparisons, for example, ranking tennis players from the outcomes of matches. We follow a standard approach to this problem and assume that each object has an unobserved strength and that the outcome of each comparison depends probabilistically on the strengths of the comparands. However, we do not assume to know a priori how skills affect outcomes. Instead, we present an efficient algorithm for simultaneously inferring both the unobserved strengths and the function that maps strengths to probabilities. Despite this problem being under-constrained, we present experimental evidence that the conclusions of our Bayesian approach are robust to different model specifications. We include several case studies to exemplify the method on real-world data sets.
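
For comparison, the conventional setup that fixes the strength-to-probability function in advance is the Bradley-Terry model, where $P(i \text{ beats } j) = s_i / (s_i + s_j)$. The sketch below fits it with the standard iterative update $s_i \leftarrow W_i / \sum_j n_{ij} / (s_i + s_j)$. It is this fixed-function baseline, not the Bayesian method described above, and it assumes every player has at least one win and that the comparison graph is connected. The function name and match data are illustrative.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterable, Tuple

Match = Tuple[Hashable, Hashable]  # (winner, loser)


def bradley_terry(matches: Iterable[Match], iters: int = 200) -> Dict[Hashable, float]:
    """Estimate Bradley-Terry strengths, normalized to sum to 1."""
    wins: Dict[Hashable, int] = defaultdict(int)
    games: Dict[Hashable, Dict[Hashable, int]] = defaultdict(lambda: defaultdict(int))
    for winner, loser in matches:
        wins[winner] += 1
        games[winner][loser] += 1  # n_ij counts games in both directions
        games[loser][winner] += 1
    players = list(games)
    strength = {p: 1.0 for p in players}
    for _ in range(iters):
        new = {}
        for i in players:
            denom = sum(n / (strength[i] + strength[j])
                        for j, n in games[i].items())
            new[i] = wins[i] / denom
        total = sum(new.values())
        strength = {p: s / total for p, s in new.items()}
    return strength


if __name__ == "__main__":
    data = [("A", "B")] * 3 + [("B", "A")]
    s = bradley_terry(data)
    print(s, s["A"] / (s["A"] + s["B"]))  # estimated P(A beats B)
```

With only two players, the fitted win probability reduces to the observed win fraction, which makes the example easy to check by hand.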

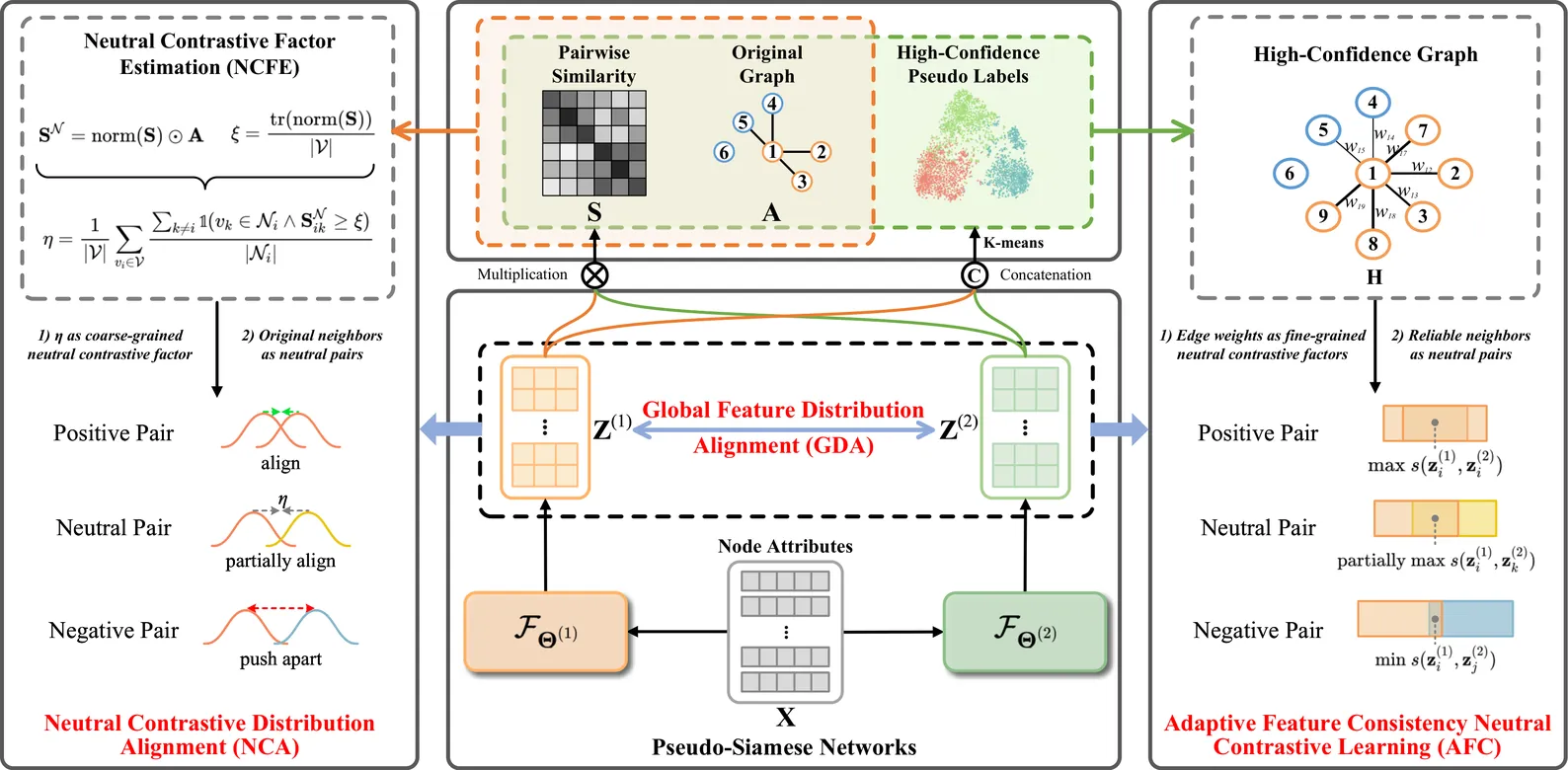

Recently, neighbor-based contrastive learning has been introduced to effectively exploit neighborhood information for clustering. However, these methods rely on the homophily assumption (that connected nodes share similar class labels and should therefore be close in feature space), which fails to account for the varying homophily levels in real-world graphs. As a result, applying contrastive learning to low-homophily graphs may lead to indistinguishable node representations due to unreliable neighborhood information, making it challenging to identify trustworthy neighborhoods with varying homophily levels in graph clustering. To tackle this, we introduce a novel neighborhood Neutral Contrastive Graph Clustering method, NeuCGC, that extends traditional contrastive learning by incorporating neutral pairs: node pairs treated as weighted positive pairs, rather than strictly positive or negative. These neutral pairs are dynamically adjusted based on the graph's homophily level, enabling a more flexible and robust learning process. Leveraging neutral pairs in contrastive learning, our method incorporates two key components: (1) an adaptive contrastive neighborhood distribution alignment that adjusts based on the homophily level of the given attribute graph, ensuring effective alignment of neighborhood distributions, and (2) a contrastive neighborhood node feature consistency learning mechanism that leverages reliable neighborhood information from high-confidence graphs to learn robust node representations, mitigating the adverse effects of varying homophily levels and effectively exploiting highly trustworthy neighborhood information. Experimental results demonstrate the effectiveness and robustness of our approach, outperforming other state-of-the-art graph clustering methods. Our code is available at https://github.com/THPengL/NeuCGC.

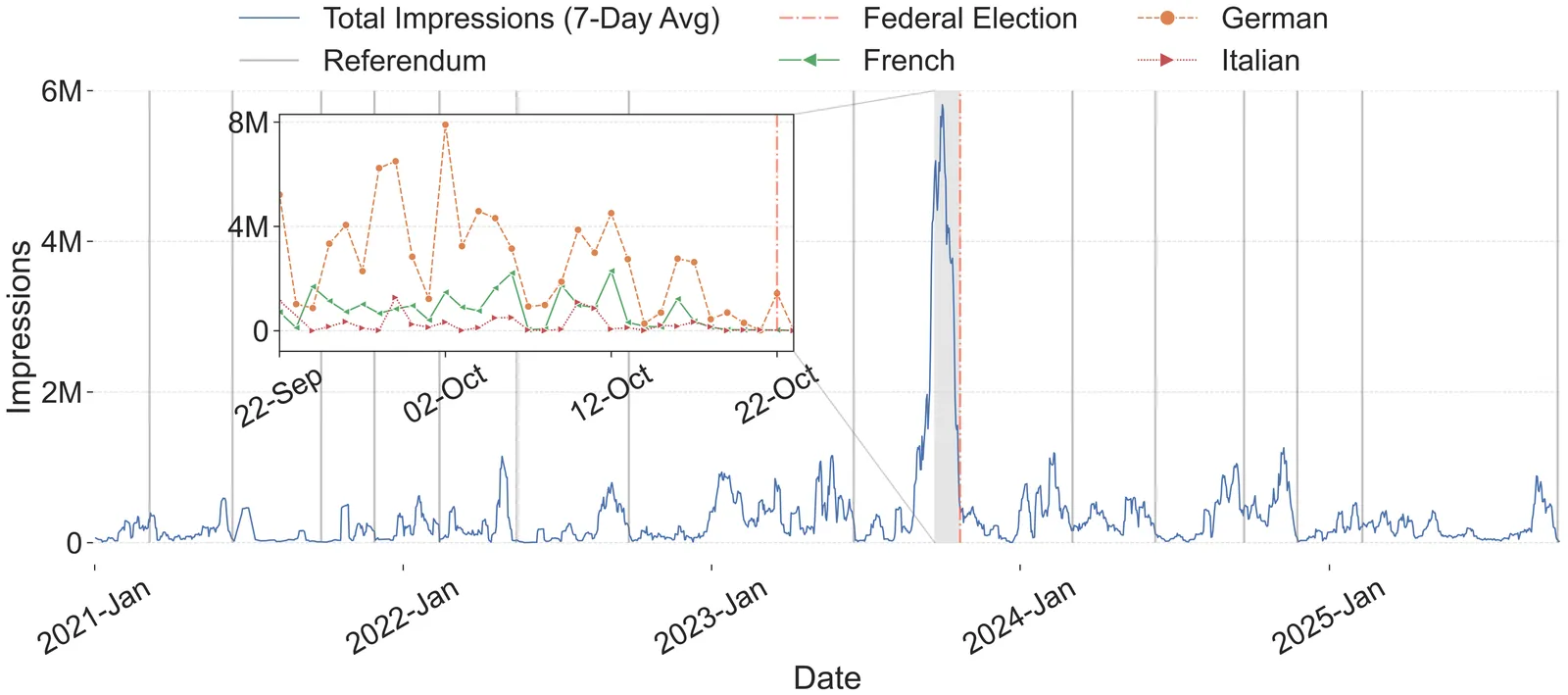

Switzerland's unique system of direct democracy, characterized by frequent popular referenda, provides a critical context for studying the impact of online political advertising beyond standard electoral cycles. This paper presents a large-scale, data-driven analysis of 40k political ads published on Facebook and Instagram in Switzerland between 2021 and 2025. Despite a voting population of only 5.6 million, the ad campaigns were significant in scale, costing CHF 4.5 million and achieving 560 million impressions. This study shows that political ads are used not only for federal elections, but also to influence referenda, where greater exposure to "pro-Yes" advertising correlates significantly with approval outcomes. The analysis of microtargeting reveals distinct partisan strategies: centrist and right-wing parties predominantly target older men, whereas left-wing parties focus on young women. Furthermore, significant region-specific demographic variations are observed even within the same party, reflecting Switzerland's strong territorial divisions. Regarding content, a clear pattern of "talking past each other" is identified: in line with issue ownership theory, parties avoid direct debate on shared issues, preferring to promote exclusively owned topics. Finally, it is demonstrated that these strategies are so distinct that an ad's author can be predicted using a machine learning model trained exclusively on its audience and topic features. This study sheds light on how microtargeting and issue divergence on social platforms may fragment the public sphere and bypass traditional democratic deliberation.