Software Engineering

Covers software design, verification, validation, documentation, and software development tools.

2601.04124

Issue Tracking Systems (ITSs) enable software developers and managers to collect and resolve issues collaboratively. While researchers have extensively analysed ITS data to automate or assist specific activities such as issue assignments, duplicate detection, or priority prediction, developer studies on ITSs remain rare. In particular, little is known about the challenges Software Engineering (SE) teams encounter in ITSs and when certain practices and workarounds (such as leaving issue fields like "priority" empty) are considered problematic. To fill this gap, we conducted an in-depth interview study with 26 experienced SE practitioners from different organisations and industries. We asked them about general problems encountered, as well as the relevance of 31 ITS smells (i.e., potentially problematic practices) discussed in the literature. By applying Thematic Analysis to the interview notes, we identified 14 common problems including issue findability, zombie issues, workflow bloat, and lack of workflow enforcement. Participants also stated that many of the ITS smells do not occur or are not problematic. Our results suggest that ITS problems and smells are highly dependent on context factors such as ITS configuration, workflow stage, and team size. We also discuss potential tooling solutions to configure, monitor, and visualise ITS smells to cope with these challenges.

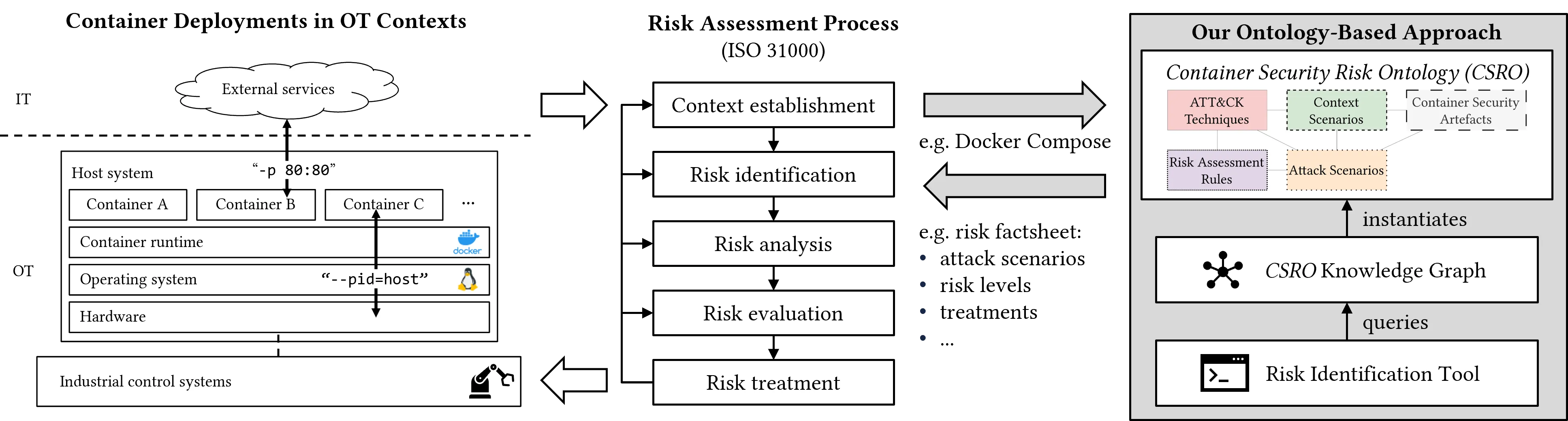

In operational technology (OT) contexts, containerised applications often require elevated privileges to access low-level network interfaces or perform administrative tasks such as application monitoring. These privileges reduce the default isolation provided by containers and introduce significant security risks. Security risk identification for OT container deployments is challenged by hybrid IT/OT architectures, fragmented stakeholder knowledge, and continuous system changes. Existing approaches lack reproducibility, interpretability across contexts, and technical integration with deployment artefacts. We propose a model-based approach, implemented as the Container Security Risk Ontology (CSRO), which integrates five key domains: adversarial behaviour, contextual assumptions, attack scenarios, risk assessment rules, and container security artefacts. Our evaluation of CSRO in a case study demonstrates that the end-to-end formalisation of risk calculation, from artefact to risk level, enables automated and reproducible risk identification. While CSRO currently focuses on technical, container-level treatment measures, its modular and flexible design provides a solid foundation for extending the approach to host-level and organisational risk factors.

2601.03988

Background: Extracting the stages that structure Machine Learning (ML) pipelines from source code is key for gaining a deeper understanding of data science practices. However, the diversity caused by the constant evolution of the ML ecosystem (e.g., algorithms, libraries, datasets) makes this task challenging. Existing approaches either depend on non-scalable, manual labeling, or on ML classifiers that do not properly support the diversity of the domain. These limitations highlight the need for more flexible and reliable solutions. Objective: We evaluate whether Small Language Models (SLMs) can leverage their code understanding and classification abilities to address these limitations, and subsequently how they can advance our understanding of data science practices. Method: We conduct a confirmatory study based on two reference works selected for their relevance to the limitations of the current state of the art. First, we compare several SLMs using Cochran's Q test. The best-performing model is then evaluated against the reference studies using two distinct McNemar's tests. We further analyze how variations in taxonomy definitions affect performance through an additional Cochran's Q test. Finally, a goodness-of-fit analysis is conducted using Pearson's chi-squared tests to compare our insights on data science practices with those from prior studies.
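
To make the paired comparison concrete, here is a minimal sketch of an exact McNemar's test, the kind of test the abstract names for comparing one classifier against a reference on the same items. The function names, the toy stage labels, and the choice of the exact (binomial) variant are assumptions for illustration; the abstract does not say which variant or implementation the authors use.

```python
from math import comb


def discordant_counts(y_true, pred_a, pred_b):
    """Count items where exactly one of the two classifiers is correct.

    Returns (b, c): b = A correct and B wrong, c = A wrong and B correct.
    """
    b = c = 0
    for truth, a, p in zip(y_true, pred_a, pred_b):
        if a == truth and p != truth:
            b += 1
        elif a != truth and p == truth:
            c += 1
    return b, c


def mcnemar_exact(b, c):
    """Two-sided exact McNemar p-value from the discordant counts.

    Under the null hypothesis, each discordant item is equally likely
    to favour either classifier, so min(b, c) follows Binomial(b + c, 0.5).
    """
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs: no evidence of a difference
    k = min(b, c)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


if __name__ == "__main__":
    # Hypothetical pipeline-stage labels, for illustration only.
    truth = ["load", "train", "eval", "load", "train", "eval"]
    model = ["load", "train", "eval", "load", "eval", "eval"]
    reference = ["load", "eval", "train", "train", "eval", "eval"]
    b, c = discordant_counts(truth, model, reference)
    print(b, c, mcnemar_exact(b, c))
```

Only the discordant pairs enter the test; items that both classifiers get right (or both get wrong) carry no information about which one is better.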

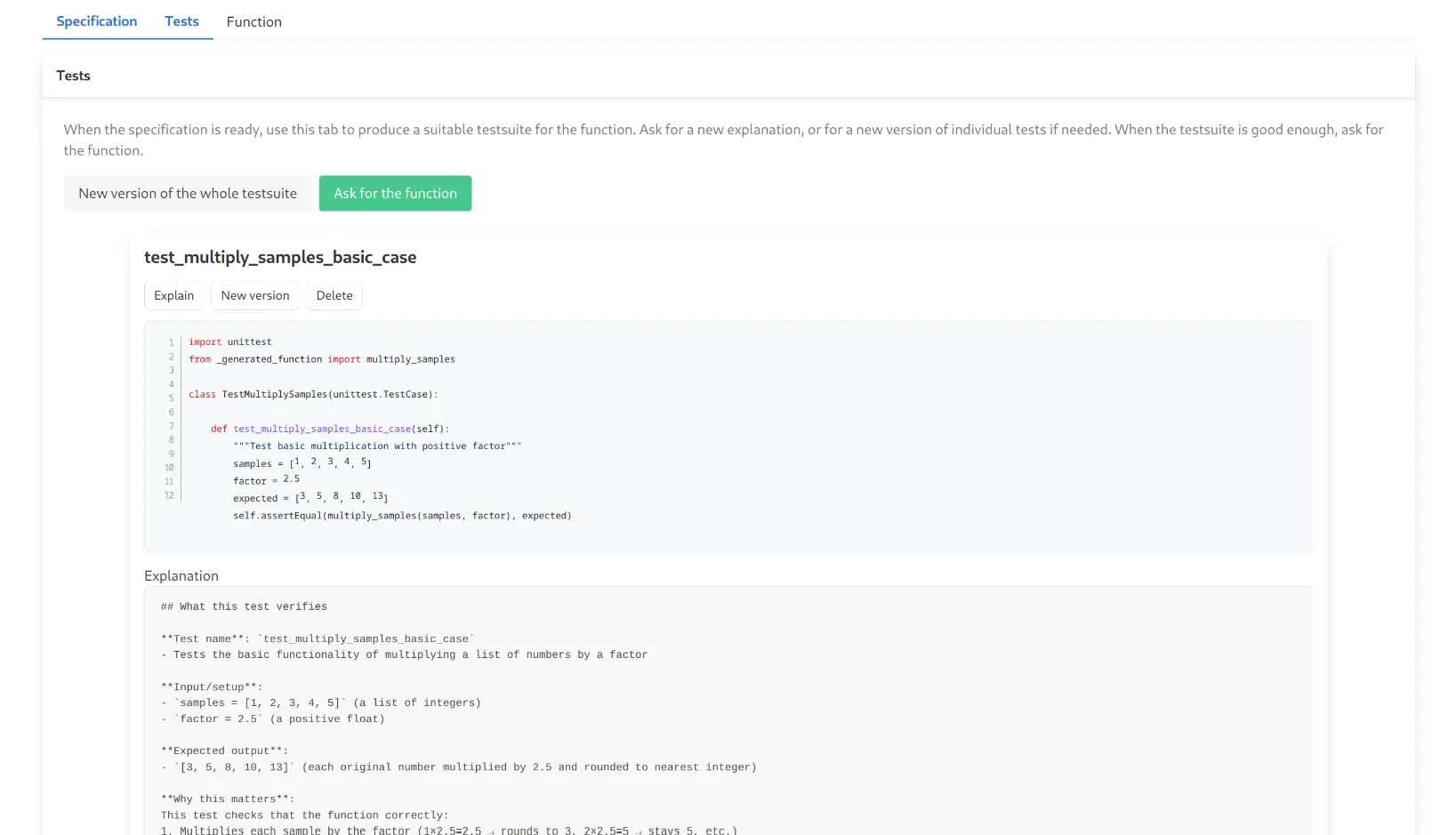

Large Language Models (LLMs) are increasingly integrated into software development workflows, yet their behavior in structured, specification-driven processes remains poorly understood. This paper presents an empirical study design using CURRANTE, a Visual Studio Code extension that enables a human-in-the-loop workflow for LLM-assisted code generation. The tool guides developers through three sequential stages (Specification, Tests, and Function), allowing them to define requirements, generate and refine test suites, and produce functions that satisfy those tests. Participants will solve medium-difficulty problems from the LiveCodeBench dataset, while the tool records fine-grained interaction logs, effectiveness metrics (e.g., pass rate, all-pass completion), efficiency indicators (e.g., time-to-pass), and iteration behaviors. The study aims to analyze how human intervention in specification and test refinement influences the quality and dynamics of LLM-generated code. The results will provide empirical insights into the design of next-generation development environments that align human reasoning with model-driven code generation.

2601.03857

LLMs are increasingly used to boost productivity and support software engineering tasks. However, when applied to socially sensitive decisions such as team composition and task allocation, they raise concerns of fairness. Prior studies have revealed that LLMs may reproduce stereotypes; however, these analyses remain exploratory and examine sensitive attributes in isolation. This study investigates whether LLMs exhibit bias in team composition and task assignment by analyzing the combined effects of candidates' country and pronouns. Using three LLMs and 3,000 simulated decisions, we find systematic disparities: demographic attributes significantly shaped both selection likelihood and task allocation, even when accounting for expertise-related factors. Task distributions further reflected stereotypes, with technical and leadership roles unevenly assigned across groups. Our findings indicate that LLMs exacerbate demographic inequities in software engineering contexts, underscoring the need for fairness-aware assessment.

Large Language Models (LLMs) such as GPT-4, Claude and LLaMA have shown impressive performance in code generation, typically evaluated using benchmarks (e.g., HumanEval). However, effective code generation requires models to understand and apply a wide range of language concepts. If the concepts exercised in benchmarks are not representative of those used in real-world projects, evaluations may be incomplete. Despite this concern, the representativeness of code concepts in benchmarks has not been systematically examined. To address this gap, we present the first empirical study that analyzes the representativeness of code generation benchmarks through the lens of Knowledge Units (KUs) - cohesive sets of programming language capabilities provided by language constructs and APIs. We analyze KU coverage in two widely used Python benchmarks, HumanEval and MBPP, and compare them with 30 real-world Python projects. Our results show that each benchmark covers only half of the identified 20 KUs, whereas projects exercise all KUs with relatively balanced distributions. In contrast, benchmark tasks exhibit highly skewed KU distributions. To mitigate this misalignment, we propose a prompt-based LLM framework that synthesizes KU-based tasks to rebalance benchmark KU distributions and better align them with real-world usage. Using this framework, we generate 440 new tasks and augment existing benchmarks. The augmented benchmarks substantially improve KU coverage and achieve over a 60% improvement in distributional alignment. Evaluations of state-of-the-art LLMs on these augmented benchmarks reveal consistent and statistically significant performance drops (12.54-44.82%), indicating that existing benchmarks overestimate LLM performance due to their limited KU coverage. Our findings provide actionable guidance for building more realistic evaluations of LLM code-generation capabilities.

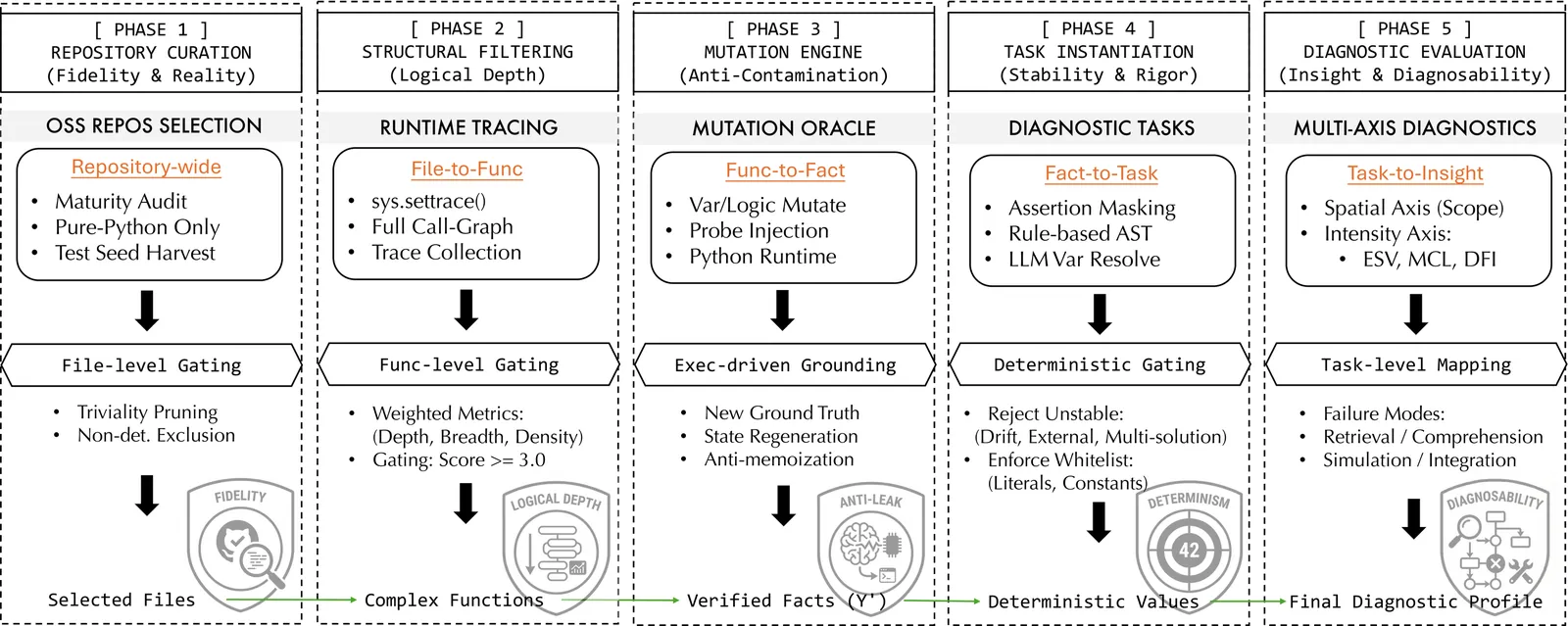

As large language models (LLMs) evolve into autonomous agents, evaluating repository-level reasoning, the ability to maintain logical consistency across massive, real-world, interdependent file systems, has become critical. Current benchmarks typically fluctuate between isolated code snippets and black-box evaluations. We present RepoReason, a white-box diagnostic benchmark centered on abductive assertion verification. To eliminate memorization while preserving authentic logical depth, we implement an execution-driven mutation framework that utilizes the environment as a semantic oracle to regenerate ground-truth states. Furthermore, we establish a fine-grained diagnostic system using dynamic program slicing, quantifying reasoning via three orthogonal metrics: $ESV$ (reading load), $MCL$ (simulation depth), and $DFI$ (integration width). Comprehensive evaluations of frontier models (e.g., Claude-4.5-Sonnet, DeepSeek-v3.1-Terminus) reveal a prevalent aggregation deficit, where integration width serves as the primary cognitive bottleneck. Our findings provide granular white-box insights for optimizing the next generation of agentic software engineering.

Many real-world software tasks require exact transcription of provided data into code, such as cryptographic constants, protocol test vectors, allowlists, and calibration tables. These tasks are operationally sensitive because small omissions or alterations can remain silent while producing syntactically valid programs. This paper introduces a deliberately minimal transcription-to-code benchmark to isolate this reliability concern in LLM-based code generation. Given a list of high-precision decimal constants, a model must generate Python code that embeds the constants verbatim and performs a simple aggregate computation. We describe the prompting variants, evaluation protocol based on exact-string inclusion, and analysis framework used to characterize state-tracking and long-horizon generation failures. The benchmark is intended as a compact stress test that complements existing code-generation evaluations by focusing on data integrity rather than algorithmic reasoning.
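
An exact-string inclusion check of the kind described above can be sketched in a few lines. This is an illustrative assumption about how such a check might look, not the benchmark's actual harness: the function name `check_verbatim`, the report fields, and the sample constants are all hypothetical.

```python
def check_verbatim(constants, generated_code):
    """Report which constants appear character-for-character in the code.

    Constants are compared as strings, never as floats, so a dropped or
    altered trailing digit is detected instead of being rounded away.
    Note: plain substring matching would also accept a constant that is
    only a prefix of a longer literal; a stricter check could tokenize
    the generated code first.
    """
    missing = [c for c in constants if c not in generated_code]
    return {
        "total": len(constants),
        "missing": missing,
        "all_present": not missing,
    }


if __name__ == "__main__":
    # Hypothetical inputs, for illustration only.
    constants = ["3.14159265358979", "2.71828182845905"]
    # The second literal below has its last digit altered (5 -> 4), yet
    # the program is still syntactically valid Python.
    generated = "values = [3.14159265358979, 2.71828182845904]\nprint(sum(values))\n"
    print(check_verbatim(constants, generated))
```

Comparing strings rather than parsed numbers is the point of the design: the silent failure the abstract describes is a program that still runs but embeds slightly different data.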

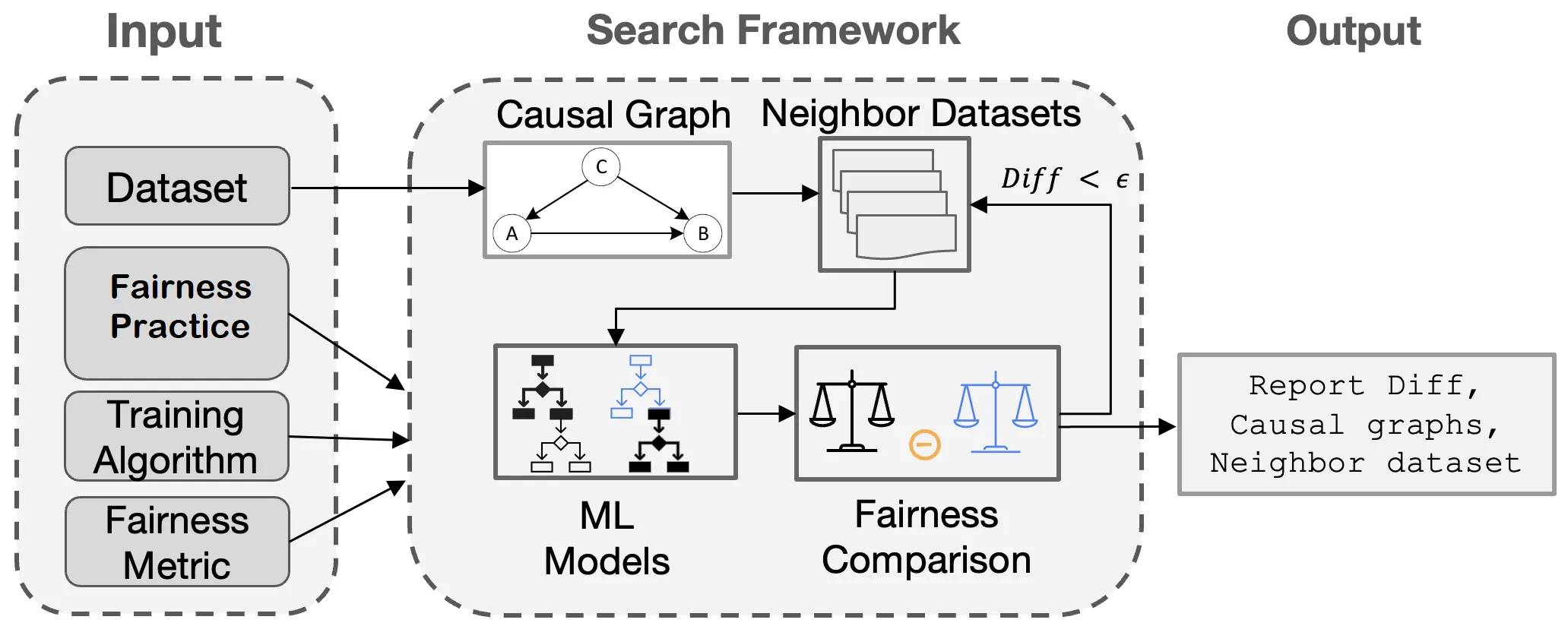

Machine learning (ML) algorithms are increasingly deployed to make critical decisions in socioeconomic applications such as finance, criminal justice, and autonomous driving. However, due to their data-driven and pattern-seeking nature, ML algorithms may develop decision logic that disproportionately distributes opportunities, benefits, resources, or information among different population groups, potentially harming marginalized communities. In response to such fairness concerns, the software engineering and ML communities have made significant efforts to establish the best practices for creating fair ML software. These include fairness interventions for training ML models, such as including sensitive features, selecting non-sensitive attributes, and applying bias mitigators. But how reliably can software professionals tasked with developing data-driven systems depend on these recommendations? And how well do these practices generalize in the presence of faulty labels, missing data, or distribution shifts? These questions form the core theme of this paper.

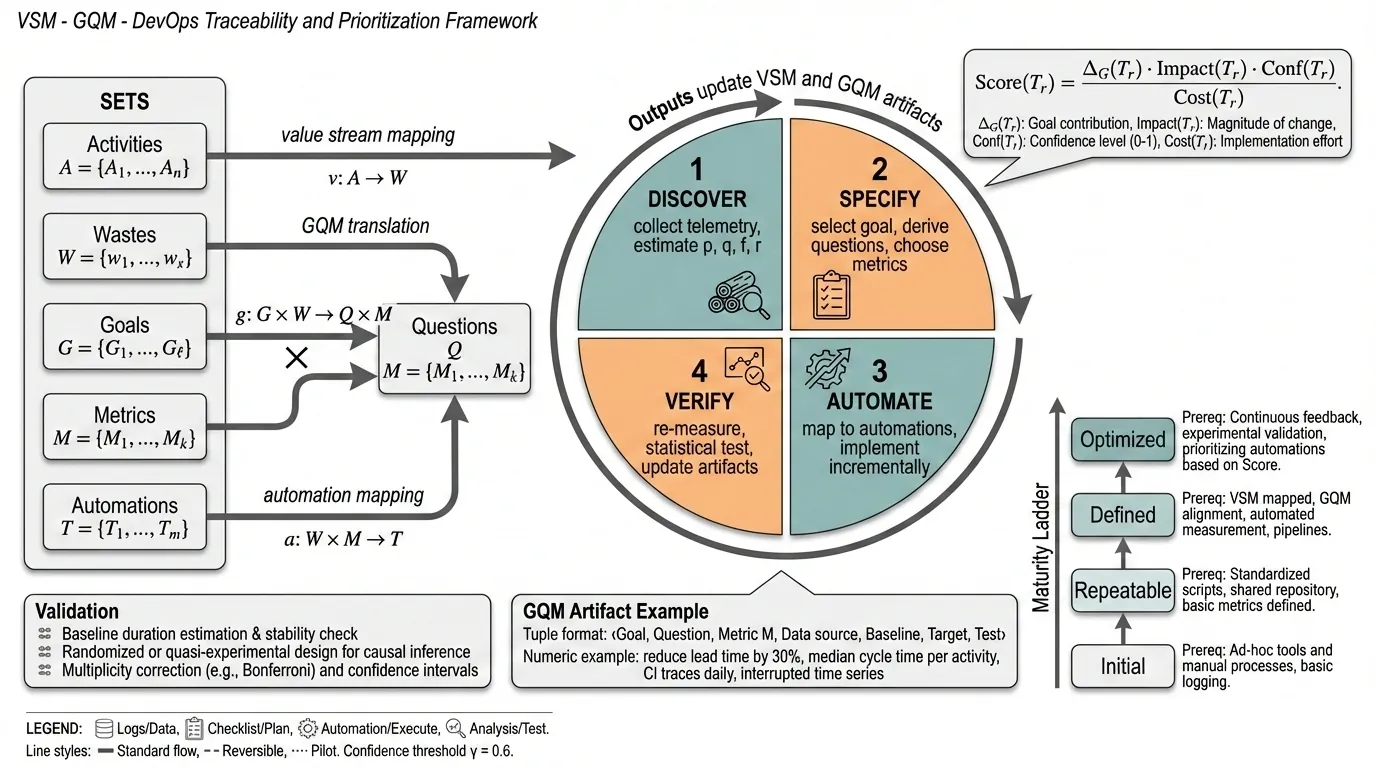

DevOps automation can accelerate software delivery, yet many organizations still struggle to justify and prioritize automation work in terms of strategic project-management outcomes such as waste reduction, delivery predictability, cross-team coordination, and customer-facing quality. This paper presents VSM-GQM-DevOps, a unified, traceable framework that integrates (i) Value Stream Mapping (VSM) to visualize the end-to-end delivery system and quantify delays, rework, and handoffs, (ii) the Goal-Question-Metric (GQM) paradigm to translate stakeholder objectives into a minimal, decision-relevant measurement model (combining DORA with project and team outcomes), and (iii) maturity-aligned DevOps automation to remediate empirically observed bottlenecks through small, reversible interventions. The framework operationalizes traceability from observed waste to goal-aligned questions, metrics, and automation candidates, and provides a defensible prioritization approach that balances expected impact, confidence, and cost. We also define a multi-site, longitudinal mixed-method validation protocol that combines telemetry-based quasi-experimental analysis (interrupted time series and, where feasible, controlled rollouts) with qualitative triangulation from interviews and retrospectives. The expected contribution is a validated pathway and a set of practical instruments that enables organizations to select automation investments that demonstrably improve both delivery performance and project-management outcomes.

2601.03556

Testing is a critical practice for ensuring software correctness and long-term maintainability. As agentic coding tools increasingly submit pull requests (PRs), it becomes essential to understand how testing appears in these agent-driven workflows. Using the AIDev dataset, we present an empirical study of test inclusion in agentic pull requests. We examine how often tests are included, when they are introduced during the PR lifecycle, and how test-containing PRs differ from non-test PRs in terms of size, turnaround time, and merge outcomes. Across agents, test-containing PRs are more common over time and tend to be larger and take longer to complete, while merge rates remain largely similar. We also observe variation across agents in both test adoption and the balance between test and production code within test PRs. Our findings provide a descriptive view of testing behavior in agentic pull requests and offer empirical grounding for future studies of autonomous software development.

Open-source scientific software is abundant, yet most tools remain difficult to compile, configure, and reuse, sustaining a small-workshop mode of scientific computing. This deployment bottleneck limits reproducibility, large-scale evaluation, and the practical integration of scientific tools into modern AI-for-Science (AI4S) and agentic workflows. We present Deploy-Master, a one-stop agentic workflow for large-scale tool discovery, build specification inference, execution-based validation, and publication. Guided by a taxonomy spanning 90+ scientific and engineering domains, our discovery stage starts from a recall-oriented pool of over 500,000 public repositories and progressively filters it to 52,550 executable tool candidates under license- and quality-aware criteria. Deploy-Master transforms heterogeneous open-source repositories into runnable, containerized capabilities grounded in execution rather than documentation claims. In a single day, we performed 52,550 build attempts and constructed reproducible runtime environments for 50,112 scientific tools. Each successful tool is validated by a minimal executable command and registered in SciencePedia for search and reuse, enabling direct human use and optional agent-based invocation. Beyond delivering runnable tools, we report a deployment trace at the scale of 50,000 tools, characterizing throughput, cost profiles, failure surfaces, and specification uncertainty that become visible only at scale. These results explain why scientific software remains difficult to operationalize and motivate shared, observable execution substrates as a foundation for scalable AI4S and agentic science.

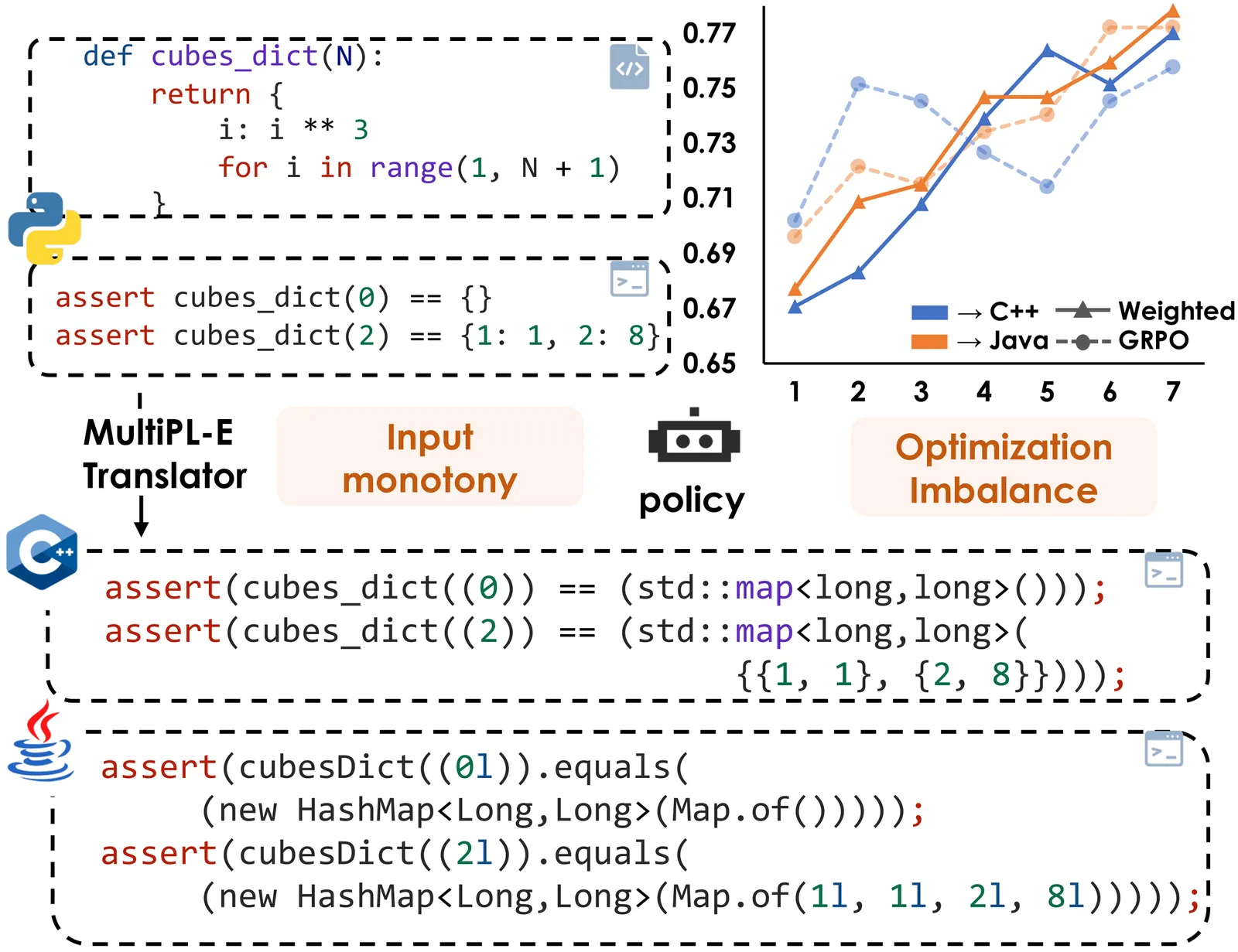

Code translation across multiple programming languages is essential yet challenging due to two vital obstacles: scarcity of parallel data paired with executable test oracles, and optimization imbalance when handling diverse language pairs. We propose BootTrans, a bootstrapping method that resolves both obstacles. Its key idea is to leverage the functional invariance and cross-lingual portability of test suites, adapting abundant pivot-language unit tests to serve as universal verification oracles for multilingual RL training. Our method introduces a dual-pool architecture with seed and exploration pools to progressively expand training data via execution-guided experience collection. Furthermore, we design a language-aware weighting mechanism that dynamically prioritizes harder translation directions based on relative performance across sibling languages, mitigating optimization imbalance. Extensive experiments on the HumanEval-X and TransCoder-Test benchmarks demonstrate substantial improvements over baseline LLMs across all translation directions, with ablations validating the effectiveness of both bootstrapping and weighting components.

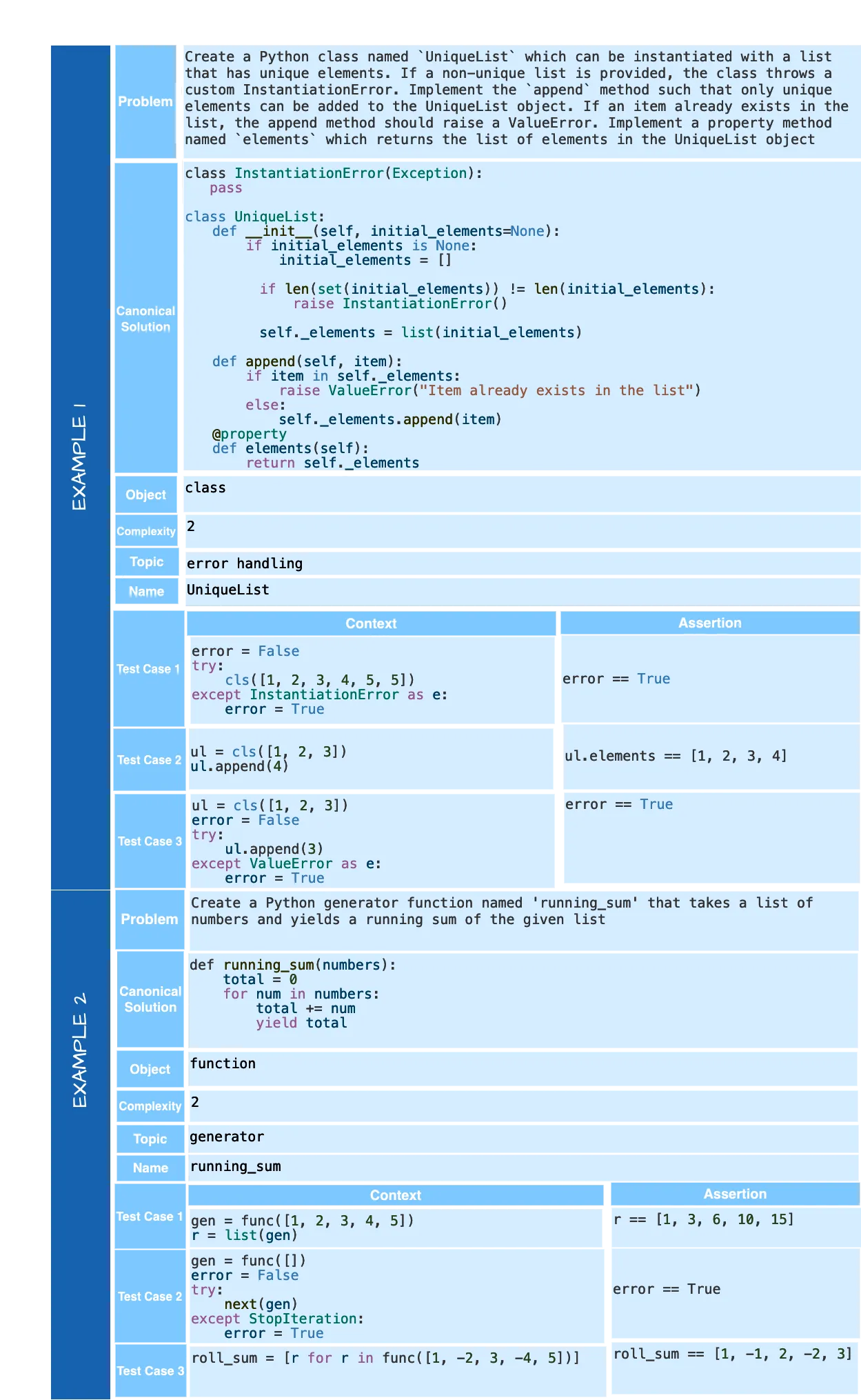

Large Language Models (LLMs) are predominantly assessed based on their common sense reasoning, language comprehension, and logical reasoning abilities. While models trained in specialized domains like mathematics or coding have demonstrated remarkable advancements in logical reasoning, there remains a significant gap in evaluating their code generation capabilities. Existing benchmark datasets fall short in pinpointing specific strengths and weaknesses, impeding targeted enhancements in models' reasoning abilities to synthesize code. To bridge this gap, our paper introduces an innovative, pedagogical benchmarking method that mirrors the evaluation processes encountered in academic programming courses. We introduce CodeEval, a multi-dimensional benchmark dataset designed to rigorously evaluate LLMs across 24 distinct aspects of Python programming. The dataset covers three proficiency levels - beginner, intermediate, and advanced - and includes both class-based and function-based problem types with detailed problem specifications and comprehensive test suites. To facilitate widespread adoption, we also developed RunCodeEval, an open-source execution framework that provides researchers with a ready-to-use evaluation pipeline for CodeEval. RunCodeEval handles test execution, context setup, and metrics generation, enabling researchers to quickly obtain detailed insights into model strengths and weaknesses across complexity levels, problem types, and programming categories. This combination enables targeted evaluation and guides improvements in LLMs' programming proficiencies.

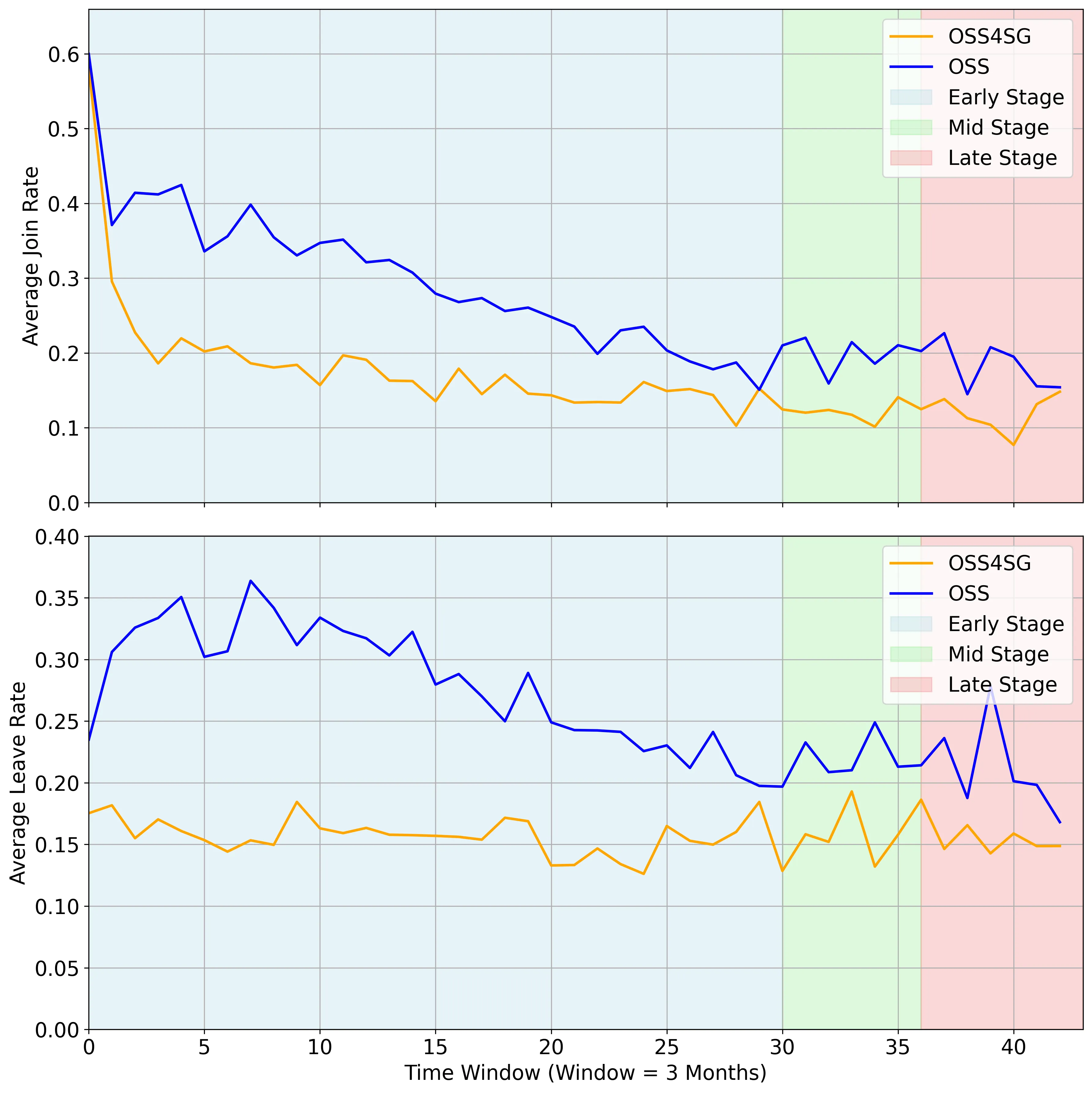

Open Source Software for Social Good (OSS4SG) projects aim to address critical societal challenges, such as healthcare access and community safety. Understanding the community dynamics and contributor patterns in these projects is essential for ensuring their sustainability and long-term impact. However, while extensive research has focused on conventional Open Source Software (OSS), little is known about how the mission-driven nature of OSS4SG influences its development practices. To address this gap, we conduct a large-scale empirical study of 1,039 GitHub repositories, comprising 422 OSS4SG and 617 conventional OSS projects, to compare community structure, contributor engagement, and coding practices. Our findings reveal that OSS4SG projects foster significantly more stable and "sticky" (63.4%) communities, whereas conventional OSS projects are more "magnetic" (75.4%), attracting a high turnover of contributors. OSS4SG projects also demonstrate consistent engagement throughout the year, while conventional OSS communities exhibit seasonal fluctuations. Additionally, OSS4SG projects rely heavily on core contributors for both code quality and issue resolution, while conventional OSS projects leverage casual contributors for issue resolution, with core contributors focusing primarily on code quality.

Repository-level code completion benefits from retrieval-augmented generation (RAG). However, controlling cross-file evidence is difficult because chunk utility is often interaction-dependent: some snippets help only when paired with complementary context, while others harm decoding when they conflict. We propose RepoShapley, a coalition-aware context filtering framework supervised by Shapley-style marginal contributions. Our module ChunkShapley constructs offline labels by (i) single-chunk probing with teacher-forced likelihood to estimate signed, weighted effects, (ii) a surrogate game that captures saturation and interference, (iii) exact Shapley computation for small retrieval sets, and (iv) bounded post-verification that selects a decoding-optimal coalition using the frozen generator. We distill verified $KEEP$ or $DROP$ decisions and retrieval triggering into a single model via discrete control tokens. Experiments across benchmarks and backbones show that RepoShapley improves completion quality while reducing harmful context and unnecessary retrieval. Code: https://anonymous.4open.science/r/a7f3c9.
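
The interaction effects mentioned above (chunks that help only in combination, chunks that hurt) are what Shapley values are designed to attribute. Below is a minimal sketch of exact Shapley computation over a small set, using the standard definition. It is not the paper's ChunkShapley module: the toy value function, the chunk names, and the sign-based KEEP/DROP rule at the end are assumptions for illustration only.

```python
from itertools import combinations
from math import factorial


def exact_shapley(players, value):
    """Exact Shapley values by enumerating every coalition.

    `value` maps a frozenset of players to a real-valued payoff.
    The cost grows as 2^n, so this is only feasible for small sets.
    """
    n = len(players)
    phi = {p: 0.0 for p in players}
    for p in players:
        others = [q for q in players if q != p]
        for r in range(n):
            # Weight of a coalition of size r that does not contain p.
            weight = factorial(r) * factorial(n - r - 1) / factorial(n)
            for subset in combinations(others, r):
                s = frozenset(subset)
                phi[p] += weight * (value(s | {p}) - value(s))
    return phi


def toy_value(coalition):
    """Hypothetical utility: chunks 'a' and 'b' help only together,
    while chunk 'c' conflicts with the target and always hurts."""
    gain = 1.0 if {"a", "b"} <= coalition else 0.0
    penalty = -0.5 if "c" in coalition else 0.0
    return gain + penalty


if __name__ == "__main__":
    phi = exact_shapley(["a", "b", "c"], toy_value)
    # Assumed rule for illustration: keep chunks with positive contribution.
    decisions = {k: ("KEEP" if v > 0 else "DROP") for k, v in phi.items()}
    print(phi, decisions)
```

In this toy game neither `a` nor `b` has any value alone, so scoring each chunk in isolation would drop both; the Shapley attribution splits their joint gain evenly between them and assigns `c` its full negative effect.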

Agile methods, and Scrum in particular, are widely taught in software engineering education; however, there is limited empirical evidence on how these practices function in long-running, student-led projects under academic and hybrid work constraints. This paper presents a year-long case study of an eight-person student development team tasked with designing and implementing a virtual reality game that simulates a university campus and provides program-related educational content. We analyze how the team adapted Scrum practices (sprint structure, roles, backlog management) to fit semester rhythms, exams, travel, and part-time availability, and how communication and coordination were maintained in a hybrid on-site/remote environment. Using qualitative observations and artifacts from Discord, Notion, and GitHub, as well as contribution metrics and a custom communication effectiveness index (score: 0.76/1.00), we evaluate three dimensions: (1) the effectiveness of collaboration tools, (2) the impact of hybrid work on communication and productivity, and (3) the feasibility of aligning Scrum with academic timelines. Our findings show that (i) lightweight, tool-mediated coordination enabled stable progress even during remote periods; (ii) one-week sprints and flexible ceremonies helped reconcile Scrum with academic obligations; and (iii) shared motivation, role clarity, and compatible working styles were as critical as process mechanics. We propose practical recommendations for instructors and student teams adopting agile methods in hybrid, project-based learning settings.

Navigation is one of the fundamental tasks for automated exploration in Virtual Reality (VR). Existing technologies primarily focus on path optimization in 360-degree image datasets and 3D simulators, which cannot be directly applied to immersive VR environments. To address this gap, we present NavAI, a generalizable large language model (LLM)-based navigation framework that supports both basic actions and complex goal-directed tasks across diverse VR applications. We evaluate NavAI in three distinct VR environments through goal-oriented and exploratory tasks. Results show that it achieves high accuracy, with an 89% success rate in goal-oriented tasks. Our analysis also highlights current limitations of relying entirely on LLMs, particularly in scenarios that require dynamic goal assessment. Finally, we discuss the limitations observed during the experiments and offer insights for future research directions.

Security bug reports require prompt identification to minimize the window of vulnerability in software systems. Traditional machine learning (ML) techniques for classifying bug reports to identify security bug reports rely heavily on large amounts of labeled data. However, datasets for security bug reports are often scarce in practice, leading to poor model performance and limited applicability in real-world settings. In this study, we propose a few-shot learning-based technique to effectively identify security bug reports using limited labeled data. We employ SetFit, a state-of-the-art few-shot learning framework that combines sentence transformers with contrastive learning and parameter-efficient fine-tuning. The model is trained on a small labeled dataset of bug reports and is evaluated on its ability to classify these reports as either security-related or non-security-related. Our approach achieves an AUC of 0.865, at best, outperforming traditional ML techniques (baselines) for all of the evaluated datasets. This highlights the potential of SetFit to effectively identify security bug reports. SetFit-based few-shot learning offers a promising alternative to traditional ML techniques to identify security bug reports. The approach enables efficient model development with minimal annotation effort, making it highly suitable for scenarios where labeled data is scarce.

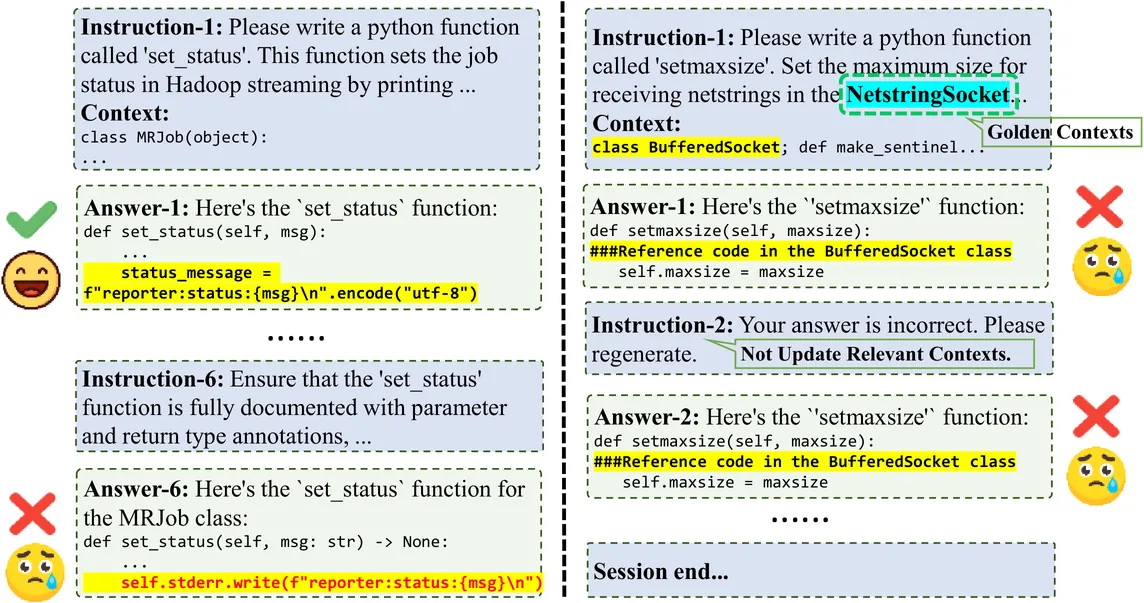

Large language models (LLMs) substantially enhance developer productivity in repository-level code generation through interactive collaboration. However, as interactions progress, repository context must be continuously preserved and updated to integrate newly validated information. Meanwhile, the expanding session history increases cognitive burden, often leading to forgetting and the reintroduction of previously resolved errors. Existing memory management approaches show promise but remain limited by natural language-centric representations. To overcome these limitations, we propose CodeMEM, an AST-guided dynamic memory management system tailored for repository-level iterative code generation. Specifically, CodeMEM introduces the Code Context Memory component that dynamically maintains and updates repository context through AST-guided LLM operations, along with the Code Session Memory that constructs a code-centric representation of interaction history and explicitly detects and mitigates forgetting through AST-based analysis. Experimental results on the instruction-following benchmark CodeIF-Bench and the code generation benchmark CoderEval demonstrate that CodeMEM achieves state-of-the-art performance, improving instruction following by 12.2% for the current turn and 11.5% for the session level, and reducing interaction rounds by 2-3, while maintaining competitive inference latency and token efficiency.