Databases

Covers database management, data mining, and data processing. Roughly includes material in ACM Subject Classes H.2, H.3, and H.4.

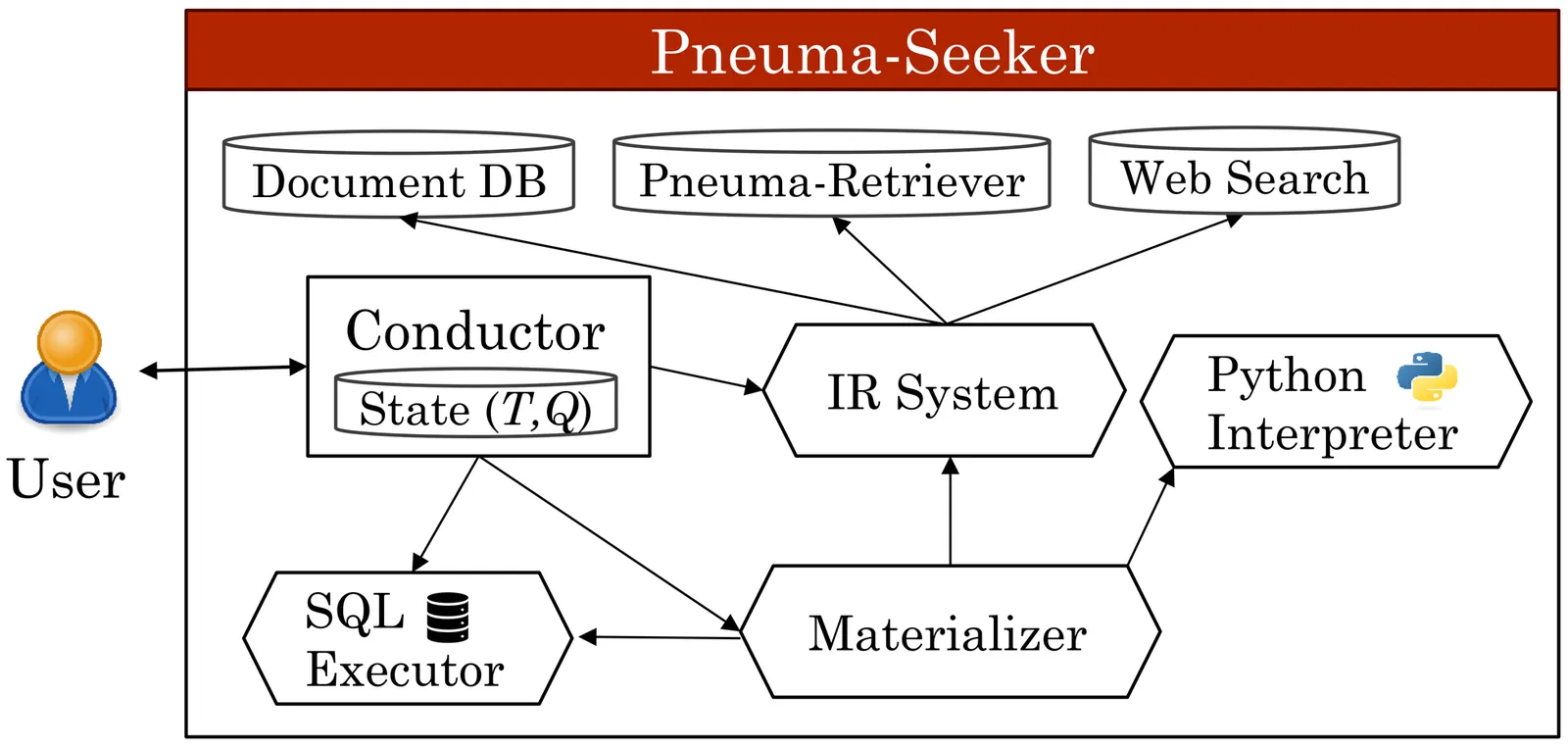

Data discovery and preparation remain persistent bottlenecks in the data management lifecycle, especially when user intent is vague, evolving, or difficult to operationalize. The Pneuma Project introduces Pneuma-Seeker, a system that helps users articulate and fulfill information needs through iterative interaction with a language model-powered platform. The system reifies the user's evolving information need as a relational data model and incrementally converges toward a usable document aligned with that intent. To achieve this, the system combines three architectural ideas: context specialization to reduce LLM burden across subtasks, a conductor-style planner to assemble dynamic execution plans, and a convergence mechanism based on shared state. The system integrates recent advances in retrieval-augmented generation (RAG), agentic frameworks, and structured data preparation to support semi-automatic, language-guided workflows. We evaluate the system through LLM-based user simulations and show that it helps surface latent intent, guide discovery, and produce fit-for-purpose documents. It also acts as an emergent documentation layer, capturing institutional knowledge and supporting organizational memory.

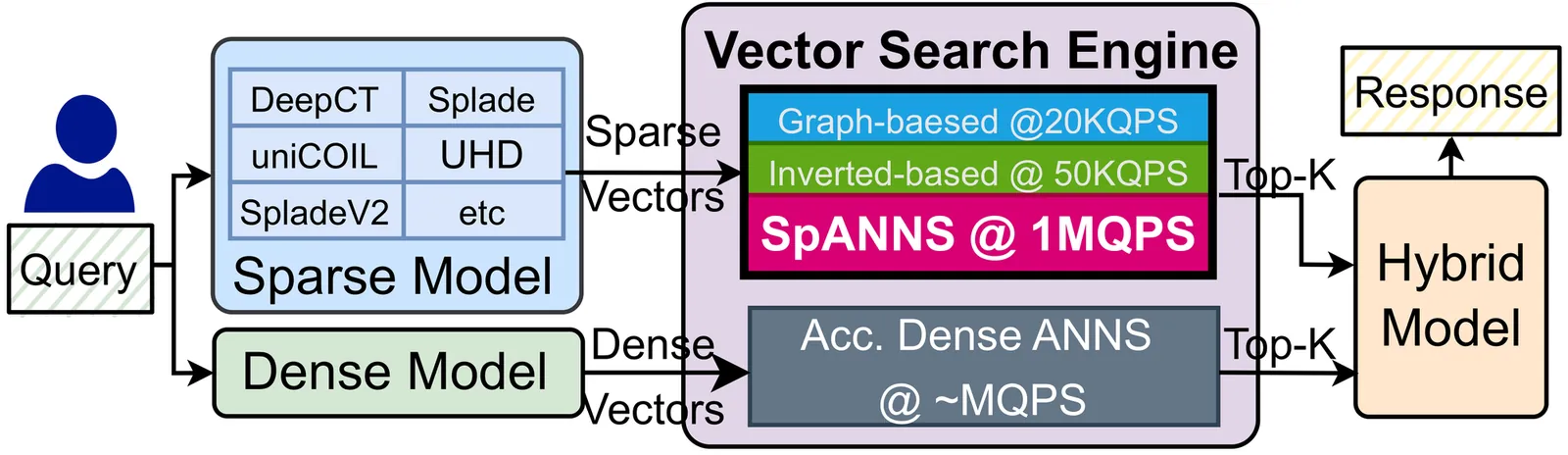

Approximate Nearest Neighbor Search (ANNS) is a fundamental operation in vector databases, enabling efficient similarity search in high-dimensional spaces. While dense ANNS has been optimized using specialized hardware accelerators, sparse ANNS remains limited by CPU-based implementations, hindering scalability. This limitation is increasingly critical as hybrid retrieval systems, combining sparse and dense embeddings, become standard in Information Retrieval (IR) pipelines. We propose SpANNS, a near-memory processing architecture for sparse ANNS. SpANNS combines a hybrid inverted index with efficient query management and runtime optimizations. The architecture is built on a CXL Type-2 near-memory platform, where a specialized controller manages query parsing and cluster filtering, while compute-enabled DIMMs perform index traversal and distance computations close to the data. It achieves 15.2x to 21.6x faster execution over the state-of-the-art CPU baselines, offering scalable and efficient solutions for sparse vector search.
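
As a point of reference for what an inverted index does in sparse vector search, the following minimal sketch scores sparse vectors by inner product using posting lists. It is an exact, CPU-only illustration and not the SpANNS hybrid index or its near-memory design; the function names and the toy vectors are assumptions made for this example.

```python
from collections import defaultdict


def build_inverted_index(vectors):
    """Map each non-zero dimension to a posting list of (vector_id, weight)."""
    index = defaultdict(list)
    for vec_id, vec in enumerate(vectors):
        for dim, weight in vec.items():
            index[dim].append((vec_id, weight))
    return index


def top_k_inner_product(index, query, k):
    """Accumulate inner products by walking only the query's posting lists."""
    scores = defaultdict(float)
    for dim, q_weight in query.items():
        for vec_id, weight in index.get(dim, ()):
            scores[vec_id] += q_weight * weight
    # Highest score first; ties are broken by the smaller vector id.
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:k]


# Toy sparse vectors stored as {dimension: weight} (hypothetical data).
vectors = [{0: 1.0, 3: 2.0}, {1: 0.5, 3: 1.0}, {2: 4.0}]
index = build_inverted_index(vectors)
result = top_k_inner_product(index, {3: 1.0, 1: 1.0}, k=2)
```

Only vectors that share at least one non-zero dimension with the query are ever touched, which is the property that index traversal close to the data is meant to exploit.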

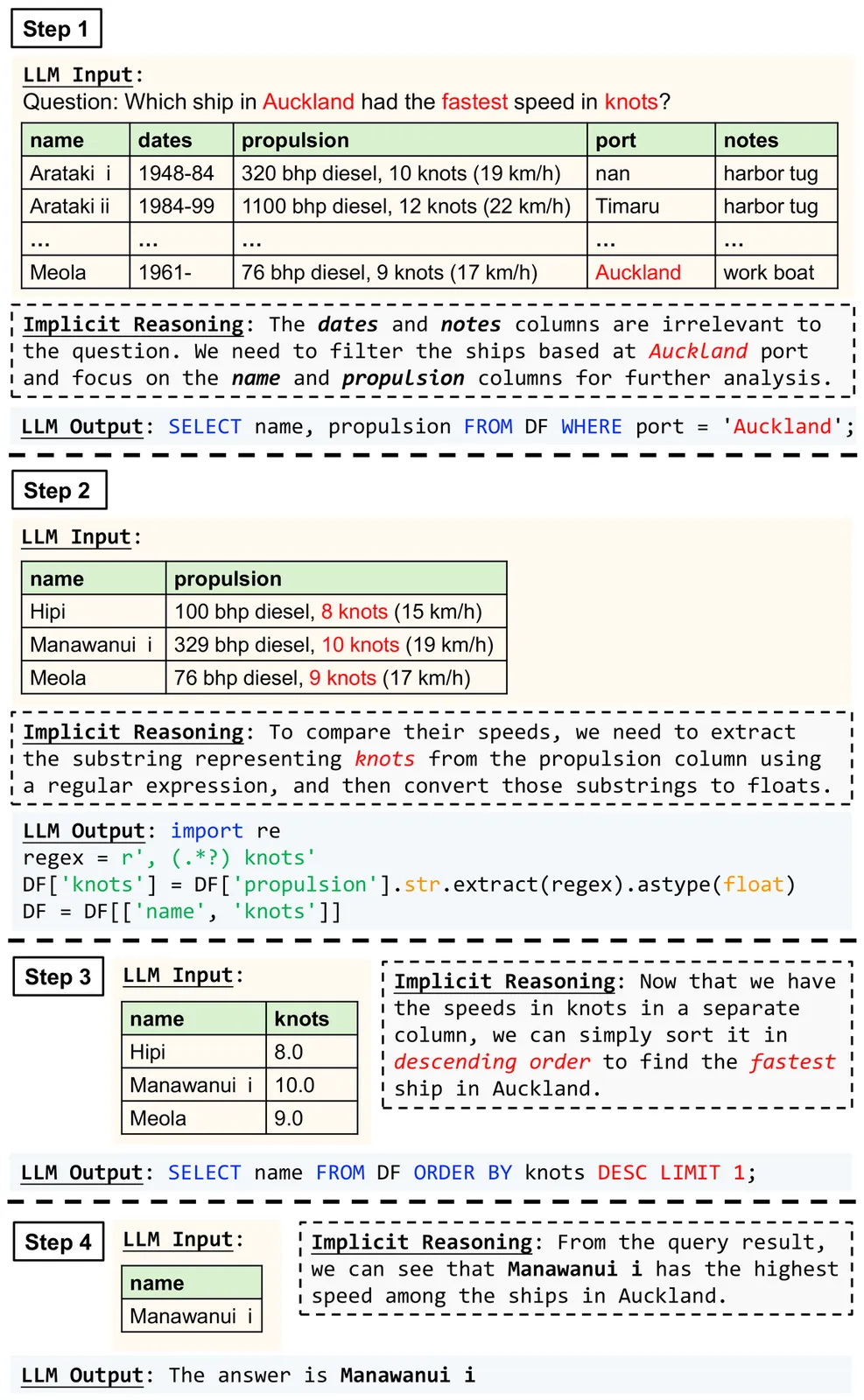

Given a table T in a database and a question Q in natural language, the table question answering (TQA) task aims to return an accurate answer to Q based on the content of T. Recent state-of-the-art solutions leverage large language models (LLMs) to obtain high-quality answers. However, most rely on proprietary, large-scale LLMs with costly API access, posing a significant financial barrier. This paper instead focuses on TQA with smaller, open-weight LLMs that can run on a desktop or laptop. This setting is challenging, as such LLMs typically have weaker capabilities than large proprietary models, leading to substantial performance degradation with existing methods. We observe that a key reason for this degradation is that prior approaches often require the LLM to solve a highly sophisticated task using long, complex prompts, which exceed the capabilities of small open-weight LLMs. Motivated by this observation, we present Orchestra, a multi-agent approach that unlocks the potential of accessible LLMs for high-quality, cost-effective TQA. Orchestra coordinates a group of LLM agents, each responsible for a relatively simple task, through a structured, layered workflow to solve complex TQA problems -- akin to an orchestra. By reducing the prompt complexity faced by each agent, Orchestra significantly improves output reliability. We implement Orchestra on top of AgentScope, an open-source multi-agent framework, and evaluate it on multiple TQA benchmarks using a wide range of open-weight LLMs. Experimental results show that Orchestra achieves strong performance even with small- to medium-sized models. For example, with Qwen2.5-14B, Orchestra reaches 72.1% accuracy on WikiTQ, approaching the best prior result of 75.3% achieved with GPT-4; with larger Qwen, Llama, or DeepSeek models, Orchestra outperforms all prior methods and establishes new state-of-the-art results across all benchmarks.

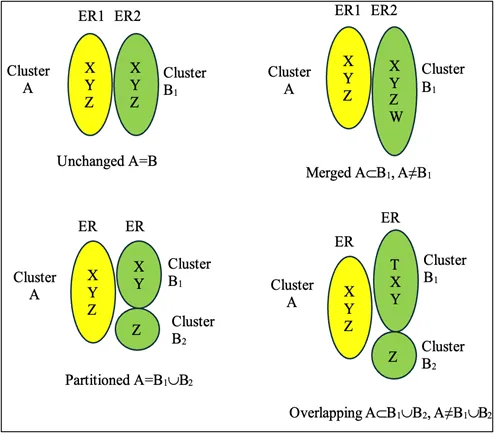

This paper describes a new process and software system, the Case Count Metric System (CCMS), for systematically comparing and analyzing the outcomes of two different ER clustering processes acting on the same dataset when the true linking (labeling) is not known. The CCMS produces a set of counts that describe how the clusters produced by the first process are transformed by the second process based on four possible transformation scenarios. The transformations are that a cluster formed in the first process either remains unchanged, merges into a larger cluster, is partitioned into smaller clusters, or otherwise overlaps with multiple clusters formed in the second process. The CCMS produces a count for each of these cases, accounting for every cluster formed in the first process. In addition, when run in analysis mode, the CCMS program can assist the user in evaluating these changes by displaying the details for all changes or only for certain types of changes. The paper includes a detailed description of the CCMS process and program and examples of how the CCMS has been applied in university and industry research.
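
The four transformation cases can be illustrated with a short sketch. This is one reading of the cases as described in the abstract, not the CCMS program itself: it assumes both processes cluster exactly the same records, and the function name and sample clusters are hypothetical.

```python
from collections import Counter


def classify_clusters(first, second):
    """Count how each cluster of the first process is transformed by the second.

    Assumes every record appears in exactly one cluster of each process.
    """
    # Record -> index of the cluster that contains it in the second process.
    owner = {rec: j for j, cluster in enumerate(second) for rec in cluster}
    counts = Counter()
    for cluster in first:
        cluster = set(cluster)
        touched = {owner[rec] for rec in cluster}
        if len(touched) == 1:
            target = set(second[next(iter(touched))])
            # Same membership: unchanged. Otherwise it was absorbed into a larger cluster.
            counts["unchanged" if target == cluster else "merged"] += 1
        elif all(set(second[j]) <= cluster for j in touched):
            # The cluster splits cleanly into smaller second-process clusters.
            counts["partitioned"] += 1
        else:
            counts["overlapping"] += 1
    return counts


first = [{1, 2}, {3}, {4}, {5, 6}, {7, 8}, {9}]
second = [{1, 2}, {3, 4}, {5}, {6}, {7}, {8, 9}]
counts = classify_clusters(first, second)
```

Every cluster of the first process falls into exactly one case, so the four counts always sum to the number of first-process clusters.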

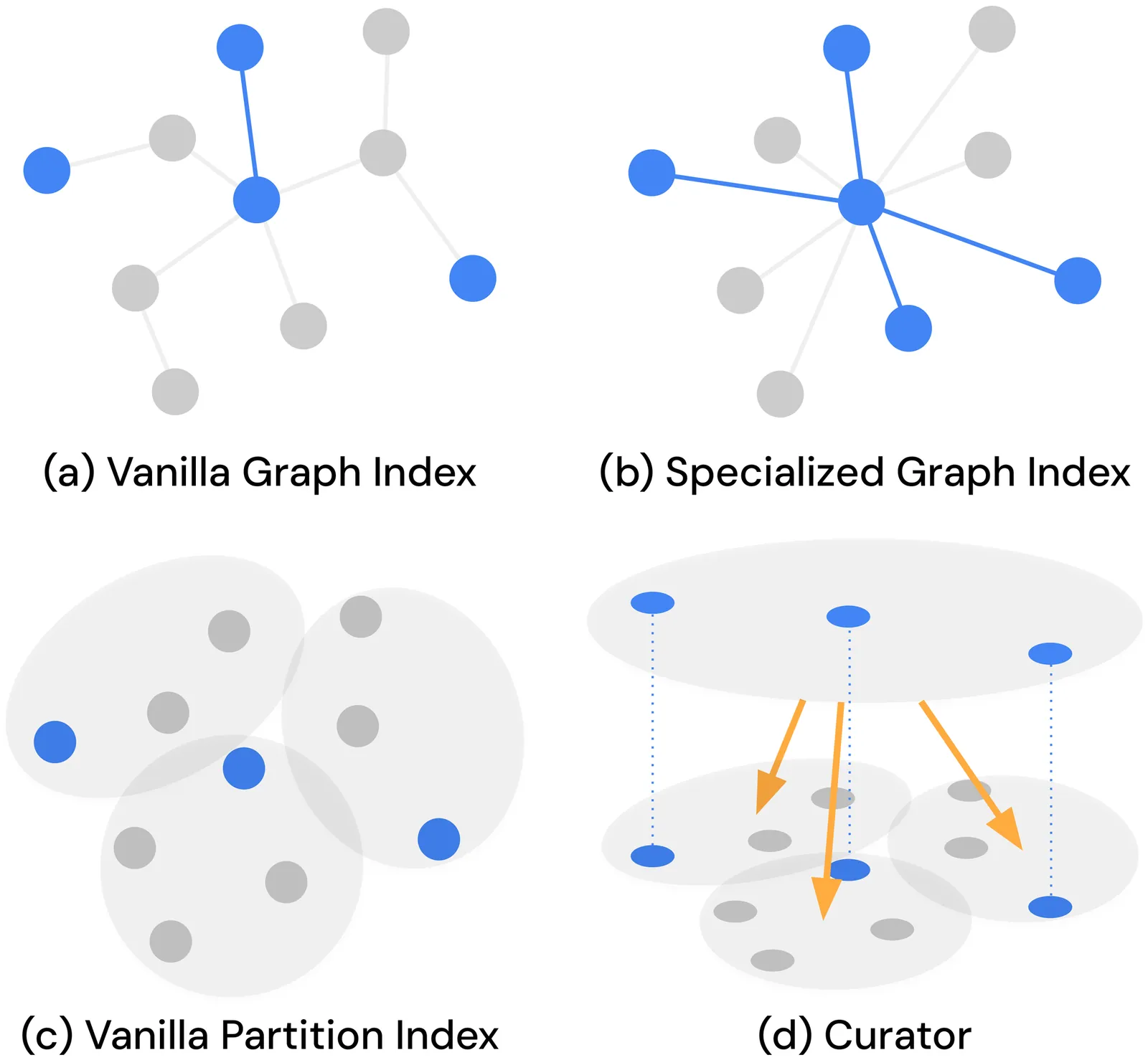

Embedding-based dense retrieval has become the cornerstone of many critical applications, where approximate nearest neighbor search (ANNS) queries are often combined with filters on labels such as dates and price ranges. Graph-based indexes achieve state-of-the-art performance on unfiltered ANNS but encounter connectivity breakdown on low-selectivity filtered queries, where qualifying vectors become sparse and the graph structure among them fragments. Recent research proposes specialized graph indexes that address this issue by expanding graph degree, which incurs prohibitively high construction costs. Given these inherent limitations of graph-based methods, we argue for a dual-index architecture and present Curator, a partition-based index that complements existing graph-based approaches for low-selectivity filtered ANNS. Curator builds specialized indexes for different labels within a shared clustering tree, where each index adapts to the distribution of its qualifying vectors to ensure efficient search while sharing structure to minimize memory overhead. The system also supports incremental updates and handles arbitrary complex predicates beyond single-label filters by efficiently constructing temporary indexes on the fly. Our evaluation demonstrates that integrating Curator with state-of-the-art graph indexes reduces low-selectivity query latency by up to 20.9x compared to pre-filtering fallback, while increasing construction time and memory footprint by only 5.5% and 4.3%, respectively.

Cloud storage has become the backbone of modern data infrastructure, yet privacy and efficient data retrieval remain significant challenges. Traditional privacy-preserving approaches primarily focus on enhancing database security but fail to address the automatic identification of sensitive information before encryption. Automating this identification can dramatically reduce query processing time and mitigate errors made during manual identification of sensitive information, thereby reducing potential privacy risks. To address this limitation, this research proposes an intelligent privacy-preserving query optimization framework that integrates Named Entity Recognition (NER) to detect sensitive information in queries and applies secure data encryption and query optimization techniques to sensitive and non-sensitive data in parallel, thereby enabling efficient database optimization. The framework combines deep learning algorithms and transformer-based models to detect and classify sensitive entities with high precision. Sensitive data is encrypted with the Advanced Encryption Standard (AES) algorithm, with blind indexing to secure its search functionality, whereas non-sensitive data is divided into groups using the K-means algorithm, along with a rank search for optimization. Among all NER models, the Deep Belief Network combined with Long Short-Term Memory (DBN-LSTM) delivers the best performance, with an accuracy of 93%, a precision of 94%, and a recall and F1 score of 93% each. In addition, encrypted search achieved considerably faster results with the help of blind indexing, and non-sensitive data fetching also outperformed traditional clustering-based searches. By integrating sensitive data detection, encryption, and query optimization, this work advances the state of privacy-preserving computation in modern cloud infrastructures.
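
Blind indexing can be sketched in a few lines. The abstract does not say how the blind index is built, so the keyed HMAC-SHA256 construction below is an assumption (a common choice), and the key, column names, and rows are hypothetical. AES encryption is left out because the Python standard library does not provide it; the ciphertext fields are placeholders.

```python
import hashlib
import hmac


def blind_index(value: str, key: bytes) -> str:
    """Deterministic keyed digest of a normalized value, stored beside the ciphertext."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


def search(rows, term, key):
    """Equality search on the blind index without decrypting any row."""
    token = blind_index(term, key)
    return [row for row in rows if hmac.compare_digest(row["name_bidx"], token)]


# Hypothetical key and rows; a real deployment would load the key from a secret store.
INDEX_KEY = b"demo-key-not-for-production"
rows = [
    {"name_ciphertext": "<AES ciphertext 1>", "name_bidx": blind_index("Alice Smith", INDEX_KEY)},
    {"name_ciphertext": "<AES ciphertext 2>", "name_bidx": blind_index("Bob Jones", INDEX_KEY)},
]
matches = search(rows, "  alice smith ", INDEX_KEY)
```

Because the digest is deterministic, equal plaintexts produce equal index values, which is what makes lookups fast and also why the index key has to be kept separate from the encryption key.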

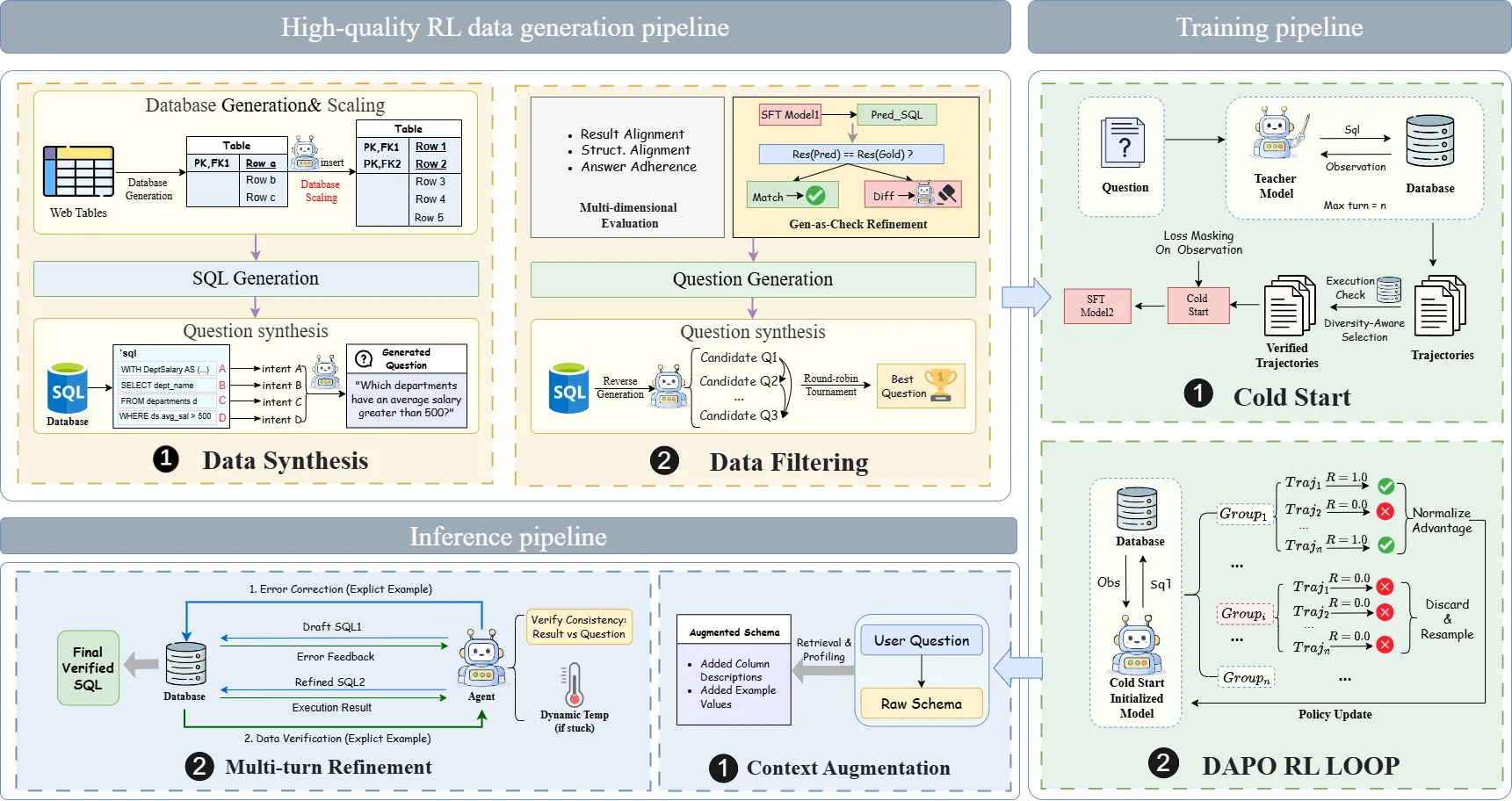

The advancement of Text-to-SQL systems is currently hindered by the scarcity of high-quality training data and the limited reasoning capabilities of models in complex scenarios. In this paper, we propose a holistic framework that addresses these issues through a dual-centric approach. From a Data-Centric perspective, we construct an iterative data factory that synthesizes RL-ready data characterized by high correctness and precise semantic-logic alignment, ensured by strict verification. From a Model-Centric perspective, we introduce a novel Agentic Reinforcement Learning framework. This framework employs a Diversity-Aware Cold Start stage to initialize a robust policy, followed by Group Relative Policy Optimization (GRPO) to refine the agent's reasoning via environmental feedback. Extensive experiments on BIRD and Spider benchmarks demonstrate that our synergistic approach achieves state-of-the-art performance among single-model methods.
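
The group-relative part of GRPO can be shown with a small sketch: several candidate answers to the same question are scored, and each reward is normalized against its own group rather than against a learned value function. The reward values, the use of the population standard deviation, and the `eps` term are assumptions for illustration; the abstract does not describe the reward design.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward by the mean and standard deviation of its group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    # eps keeps the division defined when every sample received the same reward.
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four SQL candidates sampled for one question
# (1.0 if the query returned the expected result, 0.0 otherwise).
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
```

Candidates that beat their group's average receive a positive advantage and the rest a negative one; a group in which all candidates score the same contributes no learning signal.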

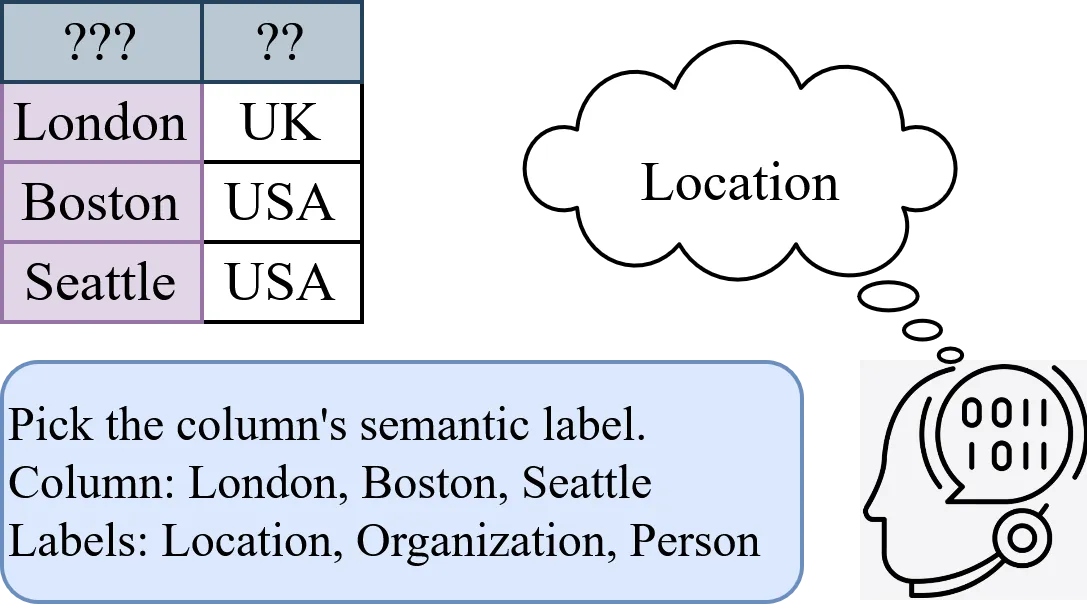

Column Type Annotation (CTA) is a fundamental step towards enabling schema alignment and semantic understanding of tabular data. Existing encoder-only language models achieve high accuracy when fine-tuned on labeled columns, but their applicability is limited to in-domain settings, as distribution shifts in tables or label spaces require costly re-training from scratch. Recent work has explored prompting generative large language models (LLMs) by framing CTA as a multiple-choice task, but these approaches face two key challenges: (1) model performance is highly sensitive to subtle changes in prompt wording and structure, and (2) annotation F1 scores remain modest. A natural extension is to fine-tune large language models. However, fully fine-tuning these models incurs prohibitive computational costs due to their scale, and the sensitivity to prompts is not eliminated. In this paper, we present a parameter-efficient framework for CTA that trains models over prompt-augmented data via Low-Rank Adaptation (LoRA). Our approach mitigates sensitivity to prompt variations while drastically reducing the number of necessary trainable parameters, achieving robust performance across datasets and templates. Experimental results on recent benchmarks demonstrate that models fine-tuned with our prompt augmentation strategy maintain stable performance across diverse prompt patterns during inference and yield higher weighted F1 scores than those fine-tuned on a single prompt template. These results highlight the effectiveness of parameter-efficient training and augmentation strategies in developing practical and adaptable CTA systems.

Text-to-SQL systems powered by Large Language Models (LLMs) achieve high accuracy on standard benchmarks, yet existing efficiency metrics such as the Valid Efficiency Score (VES) measure execution time rather than the consumption-based costs of cloud data warehouses. This paper presents the first systematic evaluation of cloud compute costs for LLM-generated SQL queries. We evaluate six state-of-the-art LLMs across 180 query executions on Google BigQuery using the StackOverflow dataset (230GB), measuring bytes processed, slot utilization, and estimated cost. Our analysis yields three key findings: (1) reasoning models process 44.5% fewer bytes than standard models while maintaining equivalent correctness (96.7%-100%); (2) execution time correlates weakly with query cost (r=0.16), indicating that speed optimization does not imply cost optimization; and (3) models exhibit up to 3.4x cost variance, with standard models producing outliers exceeding 36GB per query. We identify prevalent inefficiency patterns including missing partition filters and unnecessary full-table scans, and provide deployment guidelines for cost-sensitive enterprise environments.

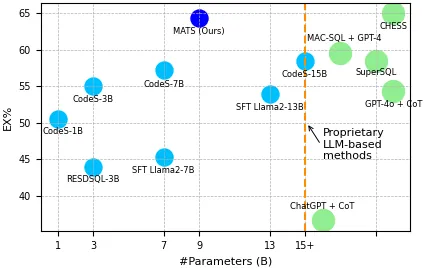

Text2SQL, the task of generating SQL queries from natural language text, is a critical challenge in data engineering. Recently, Large Language Models (LLMs) have demonstrated superior performance for this task due to their advanced comprehension and generation capabilities. However, privacy and cost considerations prevent companies from using Text2SQL solutions based on external LLMs offered as a service. Rather, small LLMs (SLMs) that are openly available and can be hosted in-house are adopted. These SLMs, in turn, lack the generalization capabilities of larger LLMs, which impairs their effectiveness for complex tasks such as Text2SQL. To address these limitations, we propose MATS, a novel Text2SQL framework designed specifically for SLMs. MATS uses a multi-agent mechanism that assigns specialized roles to auxiliary agents, reducing individual workloads and fostering interaction. A training scheme based on reinforcement learning aligns these agents using feedback obtained during execution, thereby maintaining competitive performance despite a limited LLM size. Evaluation results on benchmark datasets show that MATS, deployed on a single-GPU server, yields accuracy on par with large-scale LLMs while using significantly fewer parameters. Our source code and data are available at https://github.com/thanhdath/mats-sql.

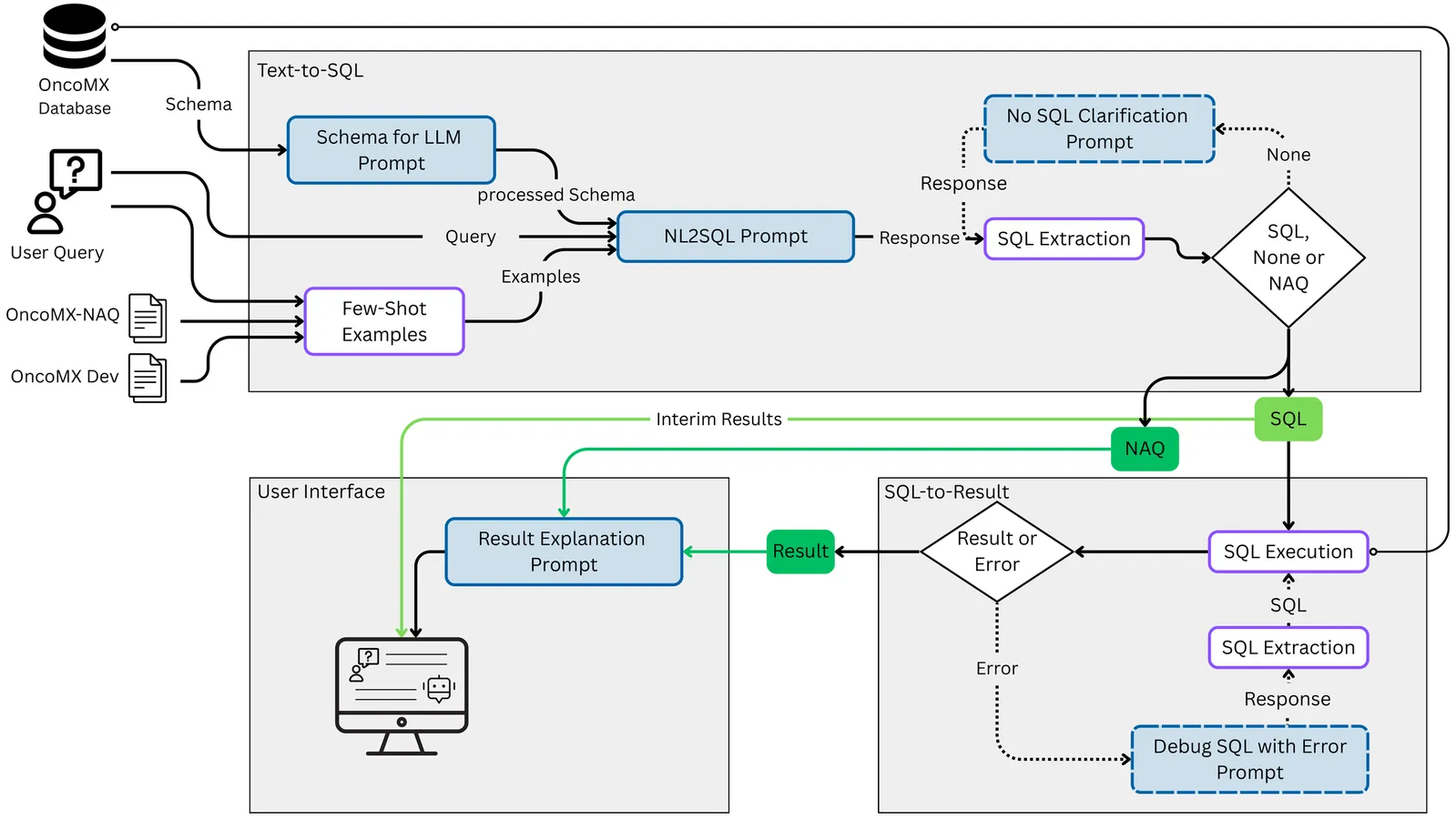

Text-to-SQL systems allow non-SQL experts to interact with relational databases using natural language. However, their tendency to generate executable SQL for ambiguous, out-of-scope, or unanswerable queries introduces a hidden risk, as outputs may be misinterpreted as correct. This risk is especially serious in biomedical contexts, where precision is critical. We therefore present Query Carefully, a pipeline that integrates LLM-based SQL generation with explicit detection and handling of unanswerable inputs. Building on the OncoMX component of ScienceBenchmark, we construct OncoMX-NAQ (No-Answer Questions), a set of 80 no-answer questions spanning 8 categories (non-SQL, out-of-schema/domain, and multiple ambiguity types). Our approach employs llama3.3:70b with schema-aware prompts, explicit No-Answer Rules (NAR), and few-shot examples drawn from both answerable and unanswerable questions. We evaluate SQL exact match, result accuracy, and unanswerable-detection accuracy. On the OncoMX dev split, few-shot prompting with answerable examples increases result accuracy, and adding unanswerable examples does not degrade performance. On OncoMX-NAQ, balanced prompting achieves the highest unanswerable-detection accuracy (0.8), with near-perfect results for structurally defined categories (non-SQL, missing columns, out-of-domain) but persistent challenges for missing-value queries (0.5) and column ambiguity (0.3). A lightweight user interface surfaces interim SQL, execution results, and abstentions, supporting transparent and reliable text-to-SQL in biomedical applications.

2512.16321

Subset sampling (also known as Poisson sampling), where the decision to include any specific element in the sample is made independently of all others, is a fundamental primitive in data analytics, enabling efficient approximation by processing representative subsets rather than massive datasets. While sampling from explicit lists is well-understood, modern applications -- such as machine learning over relational data -- often require sampling from a set defined implicitly by a relational join. In this paper, we study the problem of *subset sampling over joins*: drawing a random subset from the join results, where each join result is included independently with some probability. We address the general setting where the probability is derived from input tuple weights via decomposable functions (e.g., product, sum, min, max). Since the join size can be exponentially larger than the input, the naive approach of materializing all join results to perform subset sampling is computationally infeasible. We propose the first efficient algorithms for subset sampling over acyclic joins: (1) a *static index* for generating multiple (independent) subset samples over joins; (2) a *one-shot* algorithm for generating a single subset sample over joins; (3) a *dynamic index* that can support tuple insertions, while maintaining a one-shot sample or generating multiple (independent) samples. Our techniques achieve near-optimal time and space complexity with respect to the input size and the expected sample size.
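
To make the problem concrete, here is a sketch of the naive baseline that the abstract rules out at scale: enumerate every result of a two-relation join and flip an independent coin for each, with the inclusion probability taken as the product of the two tuple weights. The relation layout, the function name, and the toy data are assumptions for this example; none of the paper's index structures are shown.

```python
import random


def naive_subset_sample(r, s, rng):
    """Poisson-sample the join of R(a, b) and S(b, c) by enumerating every join result.

    Each input tuple carries a weight in [0, 1]; a join result is kept
    independently with probability equal to the product of its two weights.
    """
    sample = []
    for a, b, w_r in r:
        for b2, c, w_s in s:
            if b == b2 and rng.random() < w_r * w_s:
                sample.append((a, b, c))
    return sample


# Hypothetical relations: tuples are (attribute, join key, weight) and (join key, attribute, weight).
r = [("a1", "b1", 1.0), ("a2", "b1", 1.0), ("a3", "b2", 0.0)]
s = [("b1", "c1", 1.0), ("b2", "c2", 1.0)]
sample = naive_subset_sample(r, s, random.Random(0))
```

The running time is proportional to the number of candidate pairs even when the expected sample is tiny, which is the gap that algorithms bounded by input size and expected sample size are meant to close.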

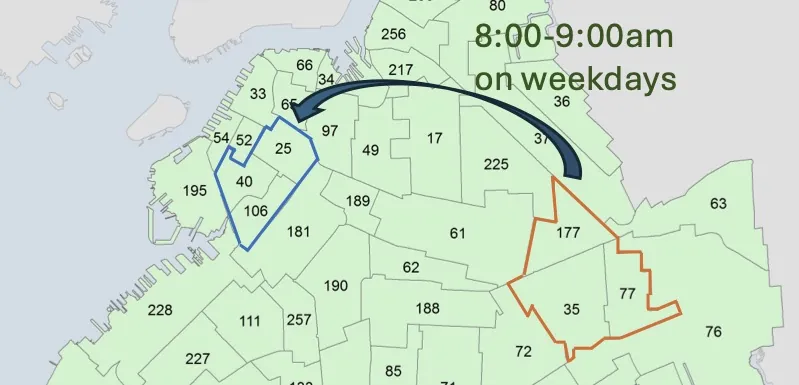

Analyzing the flow of objects or data at different granularities of space and time can unveil interesting insights or trends. For example, transportation companies, by aggregating passenger travel data (e.g., counting passengers traveling from one region to another), can analyze movement behavior. In this paper, we study the problem of finding important trends in passenger movements between regions at different granularities. We define Origin (O), Destination (D), and Time (T) patterns (ODT patterns) and propose a bottom-up algorithm that enumerates them. We suggest and employ optimizations that greatly reduce the search space and the computational cost of pattern enumeration. We also propose pattern variants (constrained patterns and top-k patterns) that could be useful in different application scenarios. Finally, we propose an approximate solution that quickly identifies ODT patterns of specific sizes, following a generate-and-test approach. We evaluate the efficiency and effectiveness of our methods on three real datasets and showcase interesting ODT flow patterns in them.
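
The aggregation step mentioned in the example (counting passengers traveling from one region to another) can be sketched as follows. This only illustrates rolling trips up to a coarser origin, destination, and time granularity and keeping frequent flows; it is not the paper's ODT pattern definition or its bottom-up enumeration. The zone-to-region mapping, time slots, and threshold are hypothetical.

```python
from collections import Counter


def aggregate_flows(trips, region_of, slot_of, min_count):
    """Count trips per (origin region, destination region, time slot) and keep frequent flows."""
    counts = Counter(
        (region_of[origin], region_of[dest], slot_of(hour))
        for origin, dest, hour in trips
    )
    return {flow: n for flow, n in counts.items() if n >= min_count}


# Hypothetical trips: (origin zone, destination zone, departure hour).
trips = [("z1", "z3", 8), ("z2", "z3", 9), ("z1", "z4", 8), ("z3", "z1", 18)]
region_of = {"z1": "North", "z2": "North", "z3": "South", "z4": "South"}


def slot_of(hour):
    return "morning" if hour < 12 else "evening"


frequent = aggregate_flows(trips, region_of, slot_of, min_count=2)
```

Changing `region_of` or `slot_of` changes the granularity, so the same trips can support different flows at different levels of the space and time hierarchies.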

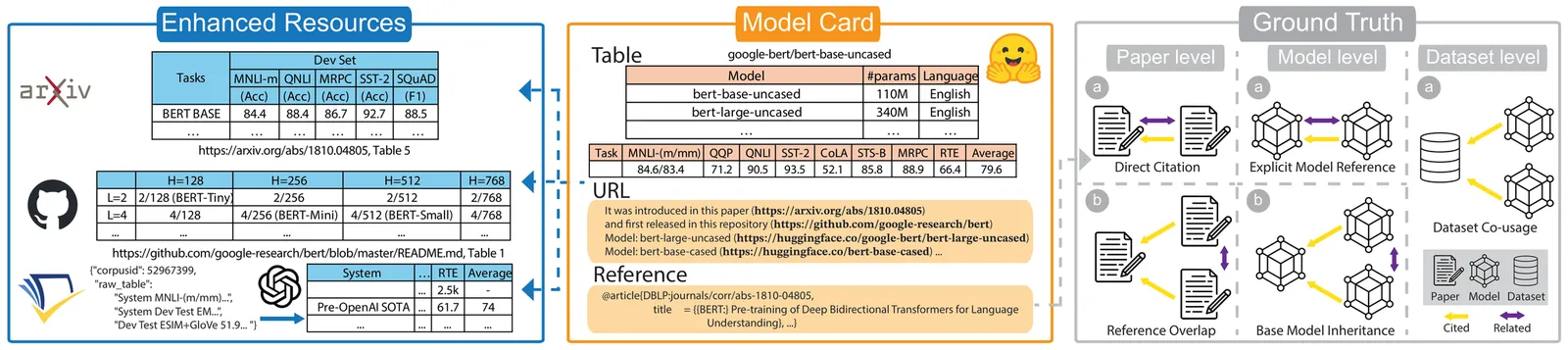

We present ModelTables, a benchmark of tables in Model Lakes that captures the structured semantics of performance and configuration tables often overlooked by text-only retrieval. The corpus is built from Hugging Face model cards, GitHub READMEs, and referenced papers, linking each table to its surrounding model and publication context. Compared with open data lake tables, model tables are smaller yet exhibit denser inter-table relationships, reflecting tightly coupled model and benchmark evolution. The current release covers over 60K models and 90K tables. To evaluate model and table relatedness, we construct a multi-source ground truth using three complementary signals: (1) paper citation links, (2) explicit model card links and inheritance, and (3) shared training datasets. We present one extensive empirical use case for the benchmark, namely table search. We compare canonical Data Lake search operators (unionable, joinable, keyword) and Information Retrieval baselines (dense, sparse, hybrid retrieval) on this benchmark. Union-based semantic table retrieval attains 54.8% P@1 overall (54.6% on citation, 31.3% on inheritance, 30.6% on shared dataset signals); table-based dense retrieval reaches 66.5% P@1, and metadata hybrid retrieval achieves 54.1%. This evaluation indicates clear room for developing better table search methods. By releasing ModelTables and its creation protocol, we provide the first large-scale benchmark of structured data describing AI models. Our use case of table discovery in Model Lakes provides intuition and evidence for developing more accurate semantic retrieval, structured comparison, and principled organization of structured model knowledge. Source code, data, and other artifacts have been made available at https://github.com/RJMillerLab/ModelTables.
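
For readers unfamiliar with the metric, P@1 is the fraction of queries whose top-ranked result is relevant. The sketch below computes it for a toy set of rankings; the table identifiers and relevance sets are hypothetical and unrelated to the benchmark's data.

```python
def precision_at_1(rankings, relevant):
    """Fraction of queries whose first retrieved item is in that query's relevant set."""
    if not rankings:
        raise ValueError("at least one query is required")
    hits = sum(
        1
        for query, ranked in rankings.items()
        if ranked and ranked[0] in relevant.get(query, set())
    )
    return hits / len(rankings)


# Hypothetical retrieval output: query table -> ranked list of retrieved tables.
rankings = {"q1": ["t3", "t1"], "q2": ["t9", "t2"], "q3": ["t5"], "q4": ["t7", "t8"]}
# Hypothetical ground truth: query table -> set of related tables.
relevant = {"q1": {"t3"}, "q2": {"t2"}, "q3": {"t5", "t6"}, "q4": {"t8"}}
score = precision_at_1(rankings, relevant)
```

With several ground-truth signals, the same function can be evaluated once per signal by swapping the `relevant` mapping.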

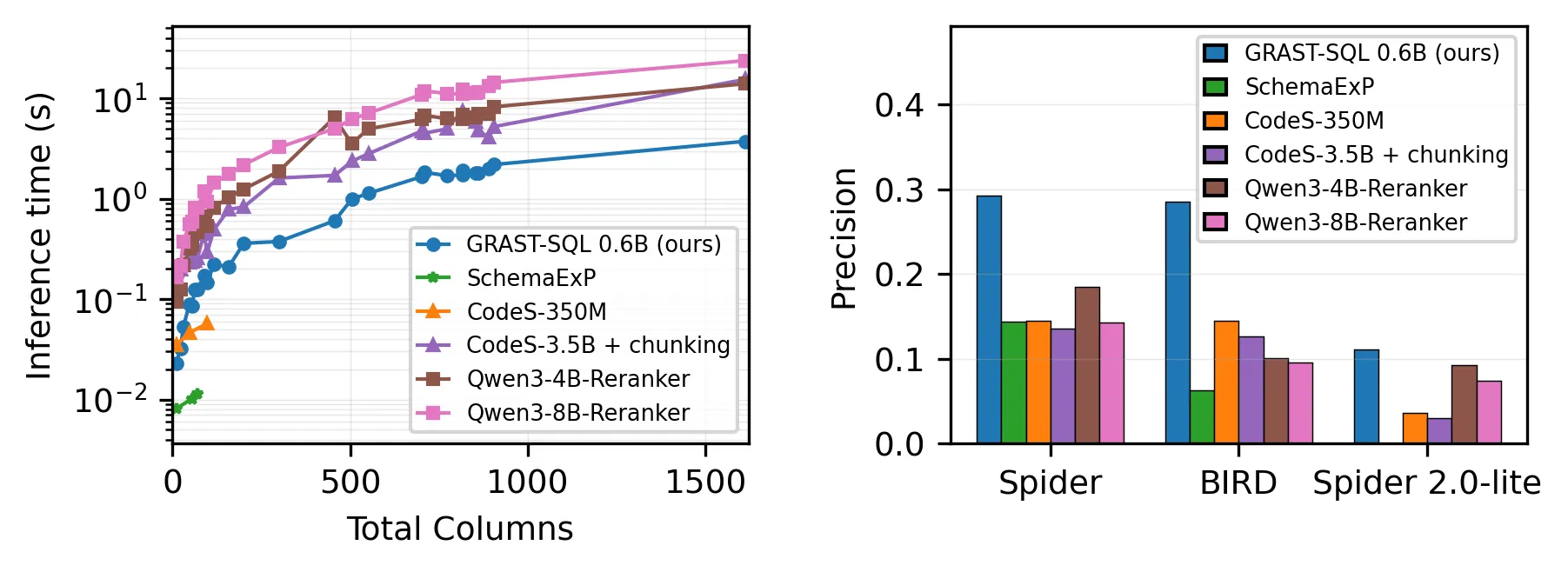

Most modern Text2SQL systems prompt large language models (LLMs) with entire schemas -- mostly column information -- alongside the user's question. While effective on small databases, this approach fails on real-world schemas that exceed LLM context limits, even for commercial models. The recent Spider 2.0 benchmark exemplifies this with hundreds of tables and tens of thousands of columns, where existing systems often break. Current mitigations either rely on costly multi-step prompting pipelines or filter columns by ranking them against the user's question independently, ignoring inter-column structure. To scale existing systems, we introduce an open-source, LLM-efficient schema filtering framework that compacts Text2SQL prompts by (i) ranking columns with a query-aware LLM encoder enriched with values and metadata, (ii) reranking inter-connected columns via a lightweight graph transformer over functional dependencies, and (iii) selecting a connectivity-preserving sub-schema with a Steiner-tree heuristic. Experiments on real datasets show that the framework achieves near-perfect recall and higher precision than CodeS, SchemaExP, Qwen rerankers, and embedding retrievers, while maintaining sub-second median latency and scaling to schemas with 23,000+ columns. Our source code is available at https://github.com/thanhdath/grast-sql.

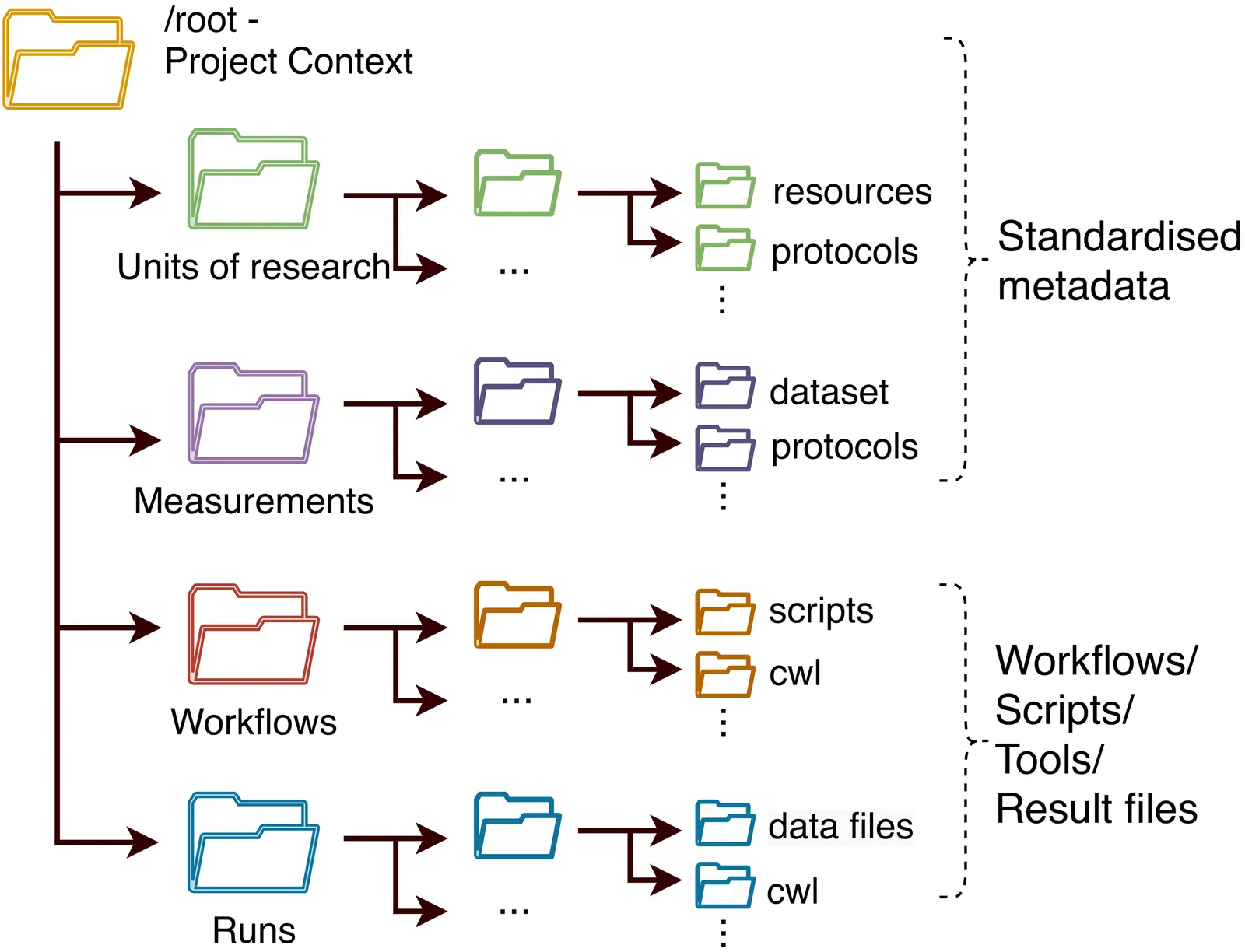

Data sharing in large consortia, such as research collaborations or industry partnerships, requires addressing both organizational and technical challenges. A common platform is essential to promote collaboration, facilitate exchange of findings, and ensure secure access to sensitive data. Key technical challenges include creating a scalable architecture, a user-friendly interface, and robust security and access control. The BIG-MAP Archive is a cloud-based, disciplinary, private repository designed to address these challenges. Built on InvenioRDM, it leverages platform functionalities to meet consortium-specific needs, providing a tailored solution compared to general repositories. Access can be restricted to members of specific communities or open to the entire consortium, such as BATTERY 2030+, a consortium accelerating advanced battery technologies. Uploaded data and metadata are controlled via fine-grained permissions, allowing access to individual project members or the full initiative. The formalized upload process ensures data are formatted and ready for publication in open repositories when needed. This paper reviews the repository's key features, showing how the BIG-MAP Archive enables secure, controlled data sharing within large consortia. It ensures data confidentiality while supporting flexible, permissions-based access and can be easily redeployed for other consortia, including MaterialsCommons4.eu and RAISE (Resource for AI Science in Europe).

Traditional search applications within Research Data Management (RDM) ecosystems are crucial in helping users discover and explore the structured metadata of research datasets. Typically, text search engines require users to submit keyword-based queries rather than natural language queries. However, using Large Language Models (LLMs) trained on domain-specific content for specialized natural language processing (NLP) tasks is becoming increasingly common. We present ArcBERT, an LLM-based system designed for integrated metadata exploration. Unlike traditional search applications, ArcBERT understands natural language queries and relies on semantic matching. Notably, ArcBERT also understands the structure and hierarchies within the metadata, enabling it to handle diverse user querying patterns effectively.

The search for suitable datasets is the critical "first step" in data-driven research, but it remains a great challenge. Researchers often need to search for datasets based on high-level task descriptions. However, existing search systems struggle with this task due to ambiguous user intent, task-to-dataset mapping and benchmark gaps, and entity ambiguity. To address these challenges, we introduce KATS, a novel end-to-end system for task-oriented dataset search from unstructured scientific literature. KATS consists of two key components, i.e., offline knowledge base construction and online query processing. The sophisticated offline pipeline automatically constructs a high-quality, dynamically updatable task-dataset knowledge graph by employing a collaborative multi-agent framework for information extraction, thereby filling the task-to-dataset mapping gap. To further address the challenge of entity ambiguity, a unique semantic-based mechanism is used for task entity linking and dataset entity resolution. For online retrieval, KATS utilizes a specialized hybrid query engine that combines vector search with graph-based ranking to generate highly relevant results. Additionally, we introduce CS-TDS, a tailored benchmark suite for evaluating task-oriented dataset search systems, addressing the critical gap in standardized evaluation. Experiments on our benchmark suite show that KATS significantly outperforms state-of-the-art retrieval-augmented generation frameworks in both effectiveness and efficiency, providing a robust blueprint for the next generation of dataset discovery systems.

2512.15308

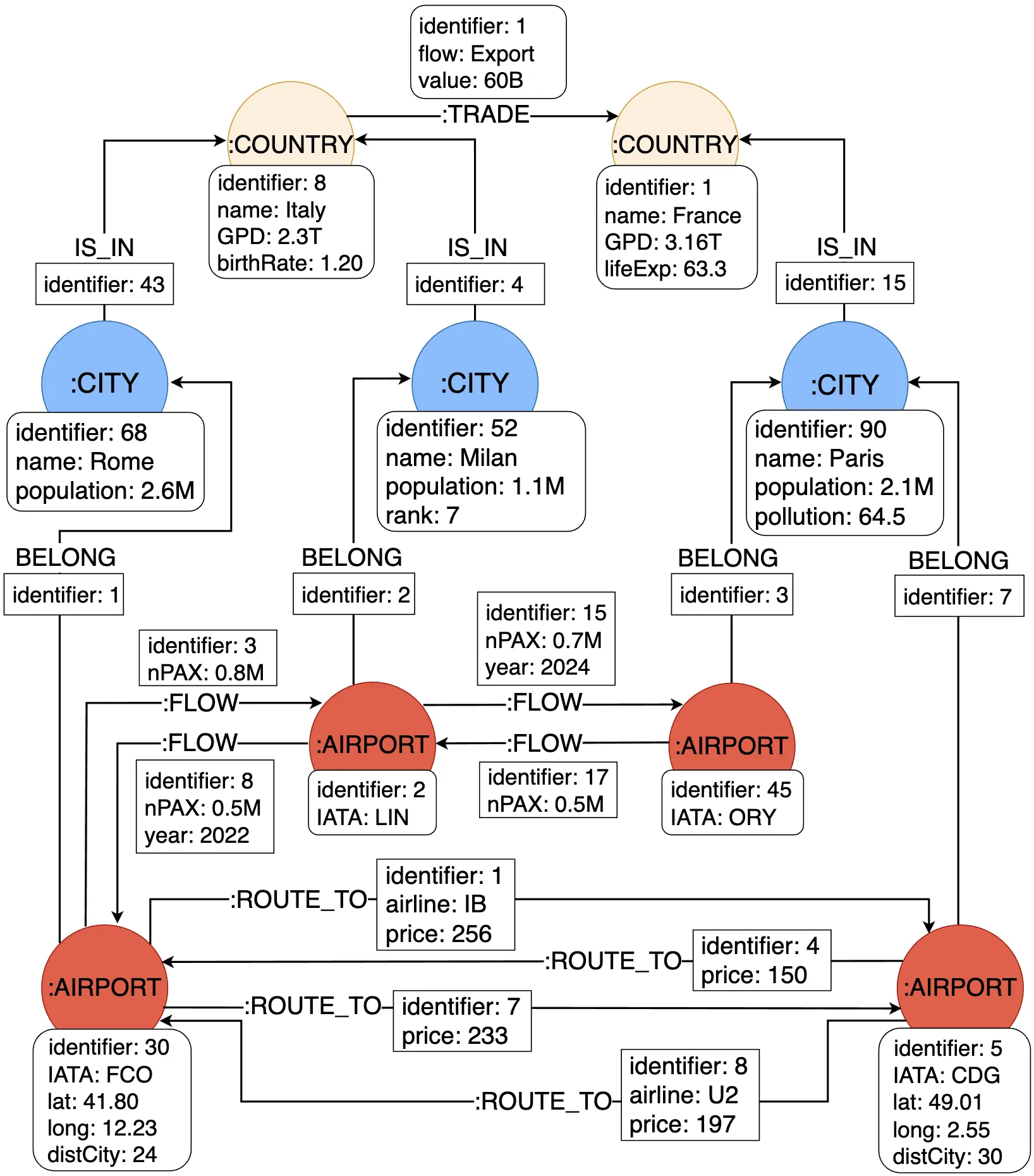

We introduce graph pattern-based association rules (GPARs) for directed labeled multigraphs such as RDF graphs. GPARs support both generative tasks, where a graph is extended, and evaluative tasks, where the plausibility of a graph is assessed. The framework goes beyond related formalisms such as graph functional dependencies, graph entity dependencies, relational association rules, graph association rules, multi-relation and path association rules, and Horn rules. Given a collection of graphs, we evaluate graph patterns under no-repeated-anything semantics, which allows the topology of a graph to be taken into account more effectively. We define a probability space and derive confidence, lift, leverage, and conviction in a probabilistic setting. We further analyze how these metrics relate to their classical itemset-based counterparts and identify conditions under which their characteristic properties are preserved.
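
For orientation, the classical itemset-based counterparts of these four metrics can be computed from simple counts, as in the sketch below. These are the textbook definitions for a rule A → B over transactions, not the graph-based definitions derived in the paper; the function name and the counts are assumptions for this example.

```python
def rule_metrics(n_total, n_antecedent, n_consequent, n_both):
    """Classical association rule metrics for A -> B, computed from occurrence counts."""
    p_a = n_antecedent / n_total
    p_b = n_consequent / n_total
    p_ab = n_both / n_total
    confidence = p_ab / p_a
    return {
        "support": p_ab,
        "confidence": confidence,
        # Lift above 1 means A and B co-occur more often than under independence.
        "lift": confidence / p_b,
        # Leverage is the absolute difference from the independence baseline.
        "leverage": p_ab - p_a * p_b,
        # Conviction is unbounded when the rule never fails.
        "conviction": float("inf") if confidence == 1.0 else (1 - p_b) / (1 - confidence),
    }


# Hypothetical counts: 10 transactions, A in 5, B in 4, both in 3.
metrics = rule_metrics(n_total=10, n_antecedent=5, n_consequent=4, n_both=3)
```

With these counts the rule holds in 60% of the cases where A occurs, against a 40% base rate for B, so lift and conviction both come out above 1 and leverage is positive.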

While scoring nodes in graphs to understand their importance (e.g., in terms of centrality) has been investigated for decades, comparing nodes in property graphs based on their properties has not, to our knowledge, yet been addressed. In this paper, we propose an approach to automatically extract comparisons of nodes in property graphs, to support the interactive exploratory analysis of these graphs. We first present a way of devising comparison indicators using the context of the nodes to be compared. Then, we formally define the problem of using these indicators to group the nodes so that the comparisons extracted are both significant and not straightforward. We propose various heuristics for solving this problem. Our tests on real property graph databases show that simple heuristics can be used to obtain insights within minutes, while slower heuristics are needed to obtain insights of higher quality.