Cryptography and Security

Covers all areas of cryptography and security including authentication, public key cryptosystems, proof-carrying code, etc.

Looking for a broader view? This category is part of:

Jailbreak attacks pose significant threats to large language models (LLMs), enabling attackers to bypass safeguards. However, existing reactive defense approaches struggle to keep up with the rapidly evolving multi-turn jailbreaks, where attackers continuously deepen their attacks to exploit vulnerabilities. To address this critical challenge, we propose HoneyTrap, a novel deceptive LLM defense framework leveraging collaborative defenders to counter jailbreak attacks. It integrates four defensive agents, Threat Interceptor, Misdirection Controller, Forensic Tracker, and System Harmonizer, each performing a specialized security role and collaborating to complete a deceptive defense. To ensure a comprehensive evaluation, we introduce MTJ-Pro, a challenging multi-turn progressive jailbreak dataset that combines seven advanced jailbreak strategies designed to gradually deepen attack strategies across multi-turn attacks. Besides, we present two novel metrics: Mislead Success Rate (MSR) and Attack Resource Consumption (ARC), which provide more nuanced assessments of deceptive defense beyond conventional measures. Experimental results on GPT-4, GPT-3.5-turbo, Gemini-1.5-pro, and LLaMa-3.1 demonstrate that HoneyTrap achieves an average reduction of 68.77% in attack success rates compared to state-of-the-art baselines. Notably, even in a dedicated adaptive attacker setting with intensified conditions, HoneyTrap remains resilient, leveraging deceptive engagement to prolong interactions, significantly increasing the time and computational costs required for successful exploitation. Unlike simple rejection, HoneyTrap strategically wastes attacker resources without impacting benign queries, improving MSR and ARC by 118.11% and 149.16%, respectively.

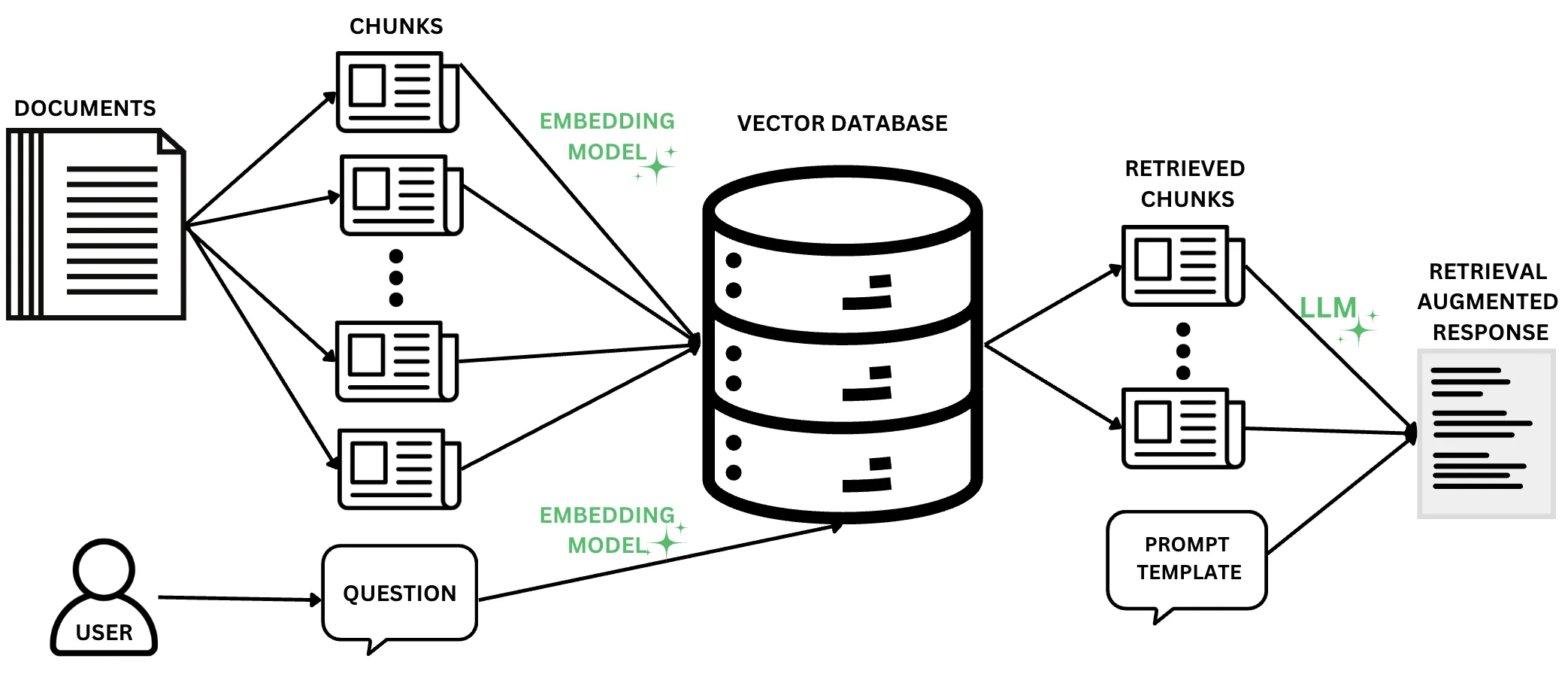

The continued promise of Large Language Models (LLMs), particularly in their natural language understanding and generation capabilities, has driven a rapidly increasing interest in identifying and developing LLM use cases. In an effort to complement the ingrained "knowledge" of LLMs, Retrieval-Augmented Generation (RAG) techniques have become widely popular. At its core, RAG involves the coupling of LLMs with domain-specific knowledge bases, whereby the generation of a response to a user question is augmented with contextual and up-to-date information. The proliferation of RAG has sparked concerns about data privacy, particularly with the inherent risks that arise when leveraging databases with potentially sensitive information. Numerous recent works have explored various aspects of privacy risks in RAG systems, from adversarial attacks to proposed mitigations. With the goal of surveying and unifying these works, we ask one simple question: What are the privacy risks in RAG, and how can they be measured and mitigated? To answer this question, we conduct a systematic literature review of RAG works addressing privacy, and we systematize our findings into a comprehensive set of privacy risks, mitigation techniques, and evaluation strategies. We supplement these findings with two primary artifacts: a Taxonomy of RAG Privacy Risks and a RAG Privacy Process Diagram. Our work contributes to the study of privacy in RAG not only by conducting the first systematization of risks and mitigations, but also by uncovering important considerations when mitigating privacy risks in RAG systems and assessing the current maturity of proposed mitigations.

2601.03923

Sybil attacks remain a fundamental obstacle in open online systems, where adversaries can cheaply create and sustain large numbers of fake identities. Existing defenses, including CAPTCHAs and one-time proof-of-personhood mechanisms, primarily address identity creation and provide limited protection against long-term, large-scale Sybil participation, especially as automated solvers and AI systems continue to improve. We introduce the Human Challenge Oracle (HCO), a new security primitive for continuous, rate-limited human verification. HCO issues short, time-bound challenges that are cryptographically bound to individual identities and must be solved in real time. The core insight underlying HCO is that real-time human cognitive effort, such as perception, attention, and interactive reasoning, constitutes a scarce resource that is inherently difficult to parallelize or amortize across identities. We formalize the design goals and security properties of HCO and show that, under explicit and mild assumptions, sustaining s active identities incurs a cost that grows linearly with s in every time window. We further describe abstract classes of admissible challenges and concrete browser-based instantiations, and present an initial empirical study illustrating that these challenges are easily solvable by humans within seconds while remaining difficult for contemporary automated systems under strict time constraints.

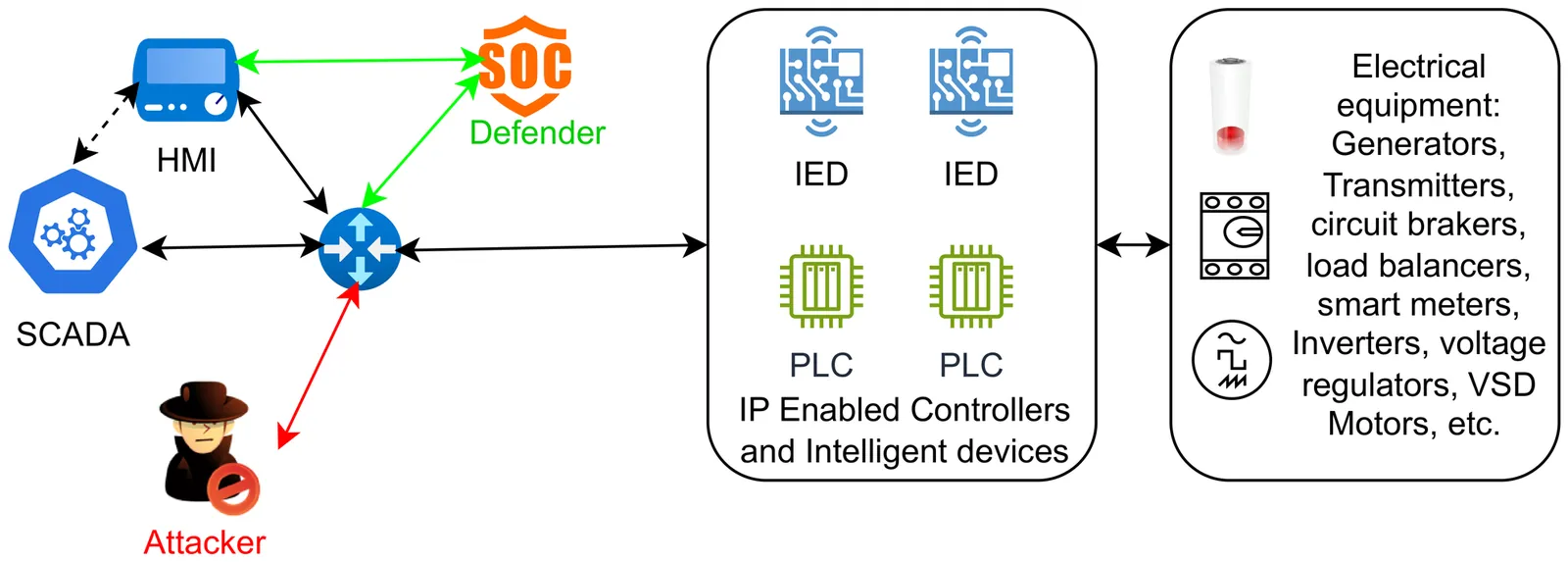

Smart grids are increasingly exposed to sophisticated cyber threats due to their reliance on interconnected communication networks, as demonstrated by real world incidents such as the cyberattacks on the Ukrainian power grid. In IEC61850 based smart substations, the Manufacturing Message Specification protocol operates over TCP to facilitate communication between SCADA systems and field devices such as Intelligent Electronic Devices and Programmable Logic Controllers. Although MMS enables efficient monitoring and control, it can be exploited by adversaries to generate legitimate looking packets for reconnaissance, unauthorized state reading, and malicious command injection, thereby disrupting grid operations. In this work, we propose a fully automated attack detection and prevention framework for IEC61850 compliant smart substations to counter remote cyberattacks that manipulate process states through compromised PLCs and IEDs. A detailed analysis of the MMS protocol is presented, and critical MMS field value pairs are extracted during both normal SCADA operation and active attack conditions. The proposed framework is validated using seven datasets comprising benign operational scenarios and multiple attack instances, including IEC61850Bean based attacks and script driven attacks leveraging the libiec61850 library. Our approach accurately identifies attack signature carrying MMS packets that attempt to disrupt circuit breaker status, specifically targeting the smart home zone IED and PLC of the EPIC testbed. The results demonstrate the effectiveness of the proposed framework in precisely detecting malicious MMS traffic and enhancing the cyber resilience of IEC61850 based smart grid environments.

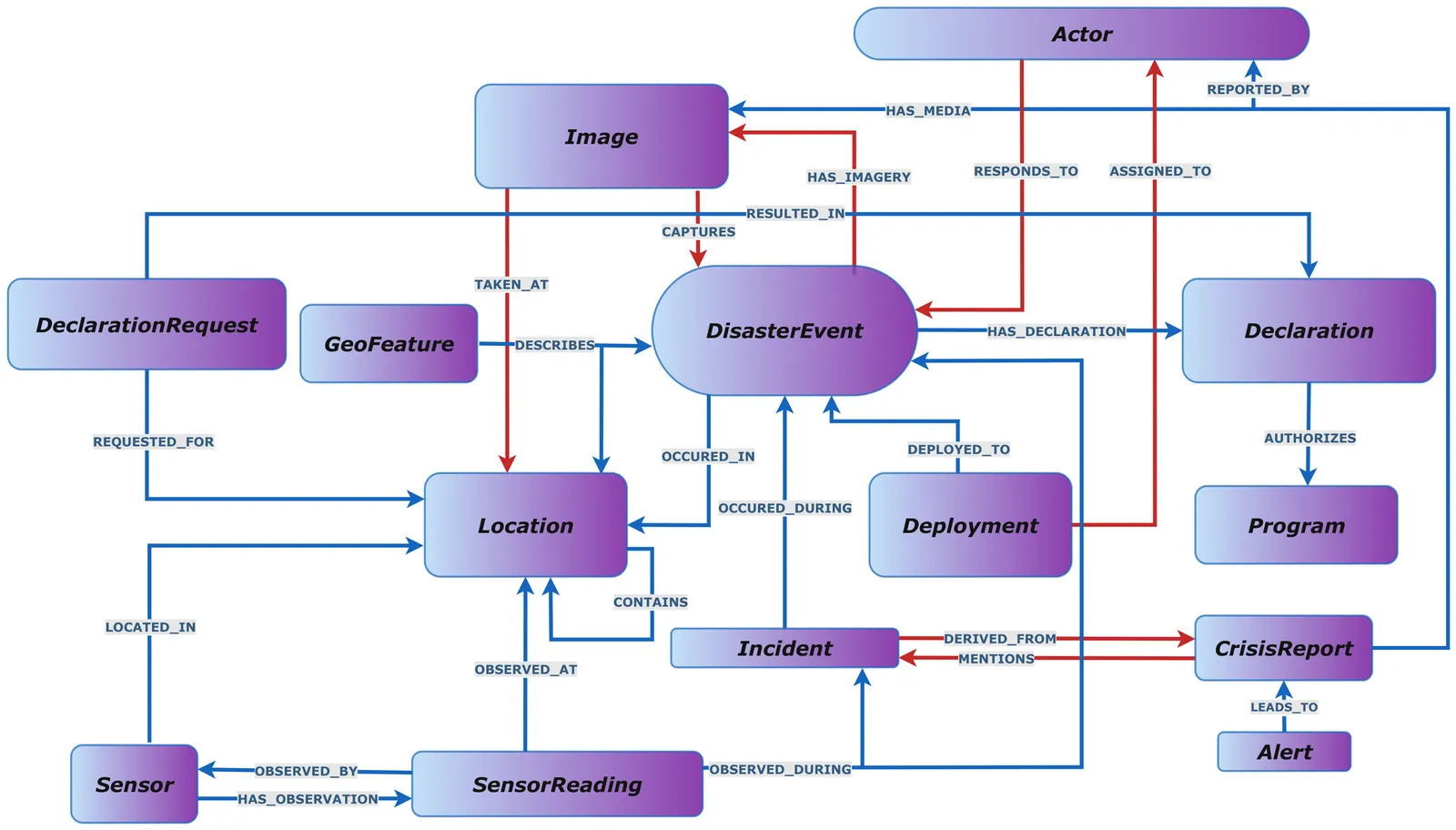

Disaster response requires sharing heterogeneous artifacts, from tabular assistance records to UAS imagery, under overlapping privacy mandates. Operational systems often reduce compliance to binary access control, which is brittle in time-critical workflows. We present a novel deontic knowledge graph-based framework that integrates a Disaster Management Knowledge Graph (DKG) with a Policy Knowledge Graph (PKG) derived from IoT-Reg and FEMA/DHS privacy drivers. Our release decision function supports three outcomes: Allow, Block, and Allow-with-Transform. The latter binds obligations to transforms and verifies post-transform compliance via provenance-linked derived artifacts; blocked requests are logged as semantic privacy incidents. Evaluation on a 5.1M-triple DKG with 316K images shows exact-match decision correctness, sub-second per-decision latency, and interactive query performance across both single-graph and federated workloads.

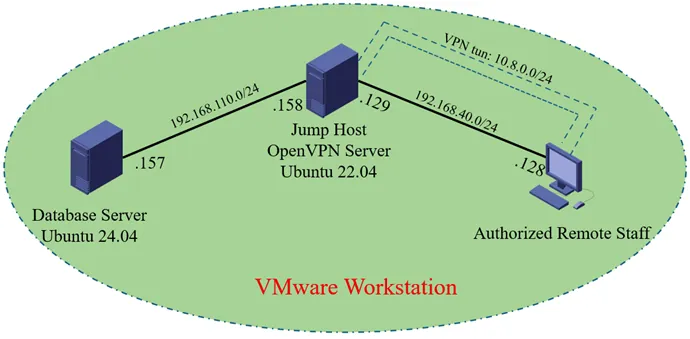

This paper critically examines the 2022 Medibank health insurance data breach, which exposed sensitive medical records of 9.7 million individuals due to unencrypted storage, centralized access, and the absence of privacy-preserving analytics. To address these vulnerabilities, we propose an entropy-aware differential privacy (DP) framework that integrates Laplace and Gaussian mechanisms with adaptive budget allocation. The design incorporates TLS-encrypted database access, field-level mechanism selection, and smooth sensitivity models to mitigate re-identification risks. Experimental validation was conducted using synthetic Medibank datasets (N = 131,000) with entropy-calibrated DP mechanisms, where high-entropy attributes received stronger noise injection. Results demonstrate a 90.3% reduction in re-identification probability while maintaining analytical utility loss below 24%. The framework further aligns with GDPR Article 32 and Australian Privacy Principle 11.1, ensuring regulatory compliance. By combining rigorous privacy guarantees with practical usability, this work contributes a scalable and technically feasible solution for healthcare data protection, offering a pathway toward resilient, trustworthy, and regulation-ready medical analytics.
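As a rough illustration of the entropy-calibrated idea in this abstract, the sketch below splits a total privacy budget across fields so that higher-entropy fields receive a smaller epsilon (and therefore stronger Laplace noise). The allocation rule `1 / (1 + entropy)`, the column names, and all function names are assumptions made for this example; the abstract does not specify the paper's actual allocation rule, and the Gaussian mechanism and smooth sensitivity are not shown.

```python
import math
import random
from collections import Counter


def shannon_entropy(values):
    """Empirical Shannon entropy (in bits) of a column of categorical values."""
    counts = Counter(values)
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def allocate_budget(entropies, total_epsilon):
    """Split total_epsilon across fields.

    Hypothetical rule (not from the paper): each field's share is
    proportional to 1 / (1 + entropy), so high-entropy fields get a
    smaller epsilon and hence more noise.
    """
    weights = {name: 1.0 / (1.0 + h) for name, h in entropies.items()}
    norm = sum(weights.values())
    return {name: total_epsilon * w / norm for name, w in weights.items()}


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two iid exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count, epsilon, rng, sensitivity=1.0):
    """Laplace mechanism for a counting query with the given sensitivity."""
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Toy columns (made up for illustration only).
columns = {
    "state": ["NSW", "NSW", "NSW", "NSW"],   # zero entropy
    "diagnosis": ["A", "B", "C", "D"],       # two bits of entropy
}
entropies = {name: shannon_entropy(vals) for name, vals in columns.items()}
budget = allocate_budget(entropies, total_epsilon=1.0)
rng = random.Random(0)
noisy = private_count(4, budget["diagnosis"], rng)
```

Because the per-field epsilons sum to `total_epsilon`, basic sequential composition bounds the overall privacy loss by the total budget under this toy rule.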

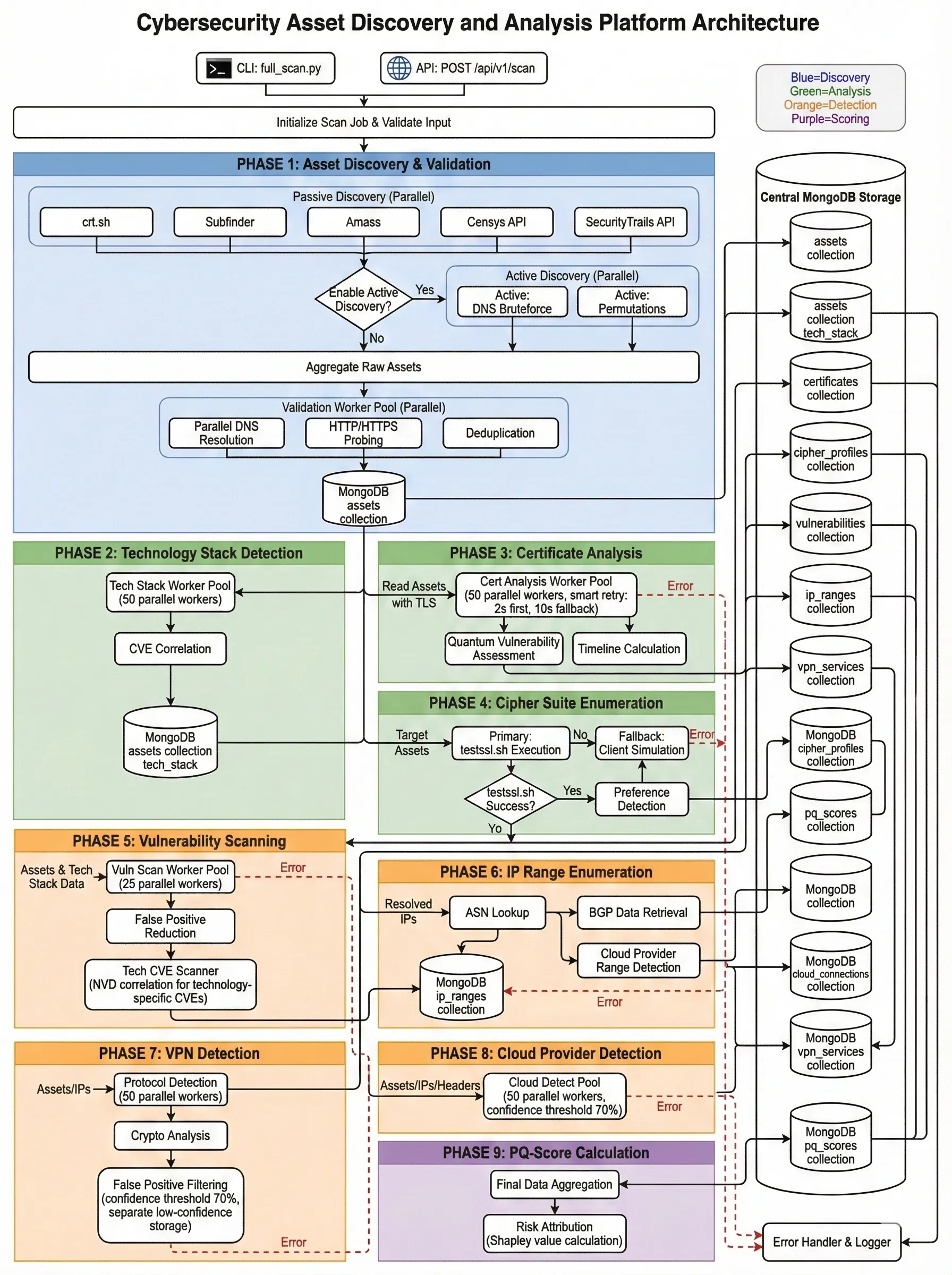

The emergence of large-scale quantum computing threatens widely deployed public-key cryptographic systems, creating an urgent need for enterprise-level methods to assess post-quantum (PQ) readiness. While PQ standards are under development, organizations lack scalable and quantitative frameworks for measuring cryptographic exposure and prioritizing migration across complex infrastructures. This paper presents a knowledge graph based framework that models enterprise cryptographic assets, dependencies, and vulnerabilities to compute a unified PQ readiness score. Infrastructure components, cryptographic primitives, certificates, and services are represented as a heterogeneous graph, enabling explicit modeling of dependency-driven risk propagation. PQ exposure is quantified using graph-theoretic risk functionals and attributed across cryptographic domains via Shapley value decomposition. To support scalability and data quality, the framework integrates large language models with human-in-the-loop validation for asset classification and risk attribution. The resulting approach produces explainable, normalized readiness metrics that support continuous monitoring, comparative analysis, and remediation prioritization.
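To make the Shapley value decomposition mentioned in this abstract concrete, here is a minimal sketch that attributes a toy risk score across cryptographic domains by averaging marginal contributions over all orderings. The domain names, the exposure numbers, and the `toy_risk` function are hypothetical; the paper's graph-theoretic risk functionals are not described in the abstract and are not reproduced here.

```python
from itertools import permutations


def shapley_values(players, value):
    """Exact Shapley values by enumerating every ordering of the players.

    `value` maps a frozenset of players to a number. Exponential in the
    number of players, so only suitable for a handful of domains.
    """
    totals = {p: 0.0 for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            # Marginal contribution of p when joining the current coalition.
            totals[p] += value(with_p) - value(coalition)
            coalition = with_p
    return {p: t / len(orderings) for p, t in totals.items()}


# Hypothetical per-domain exposure plus one dependency-driven interaction term.
BASE = {"tls": 0.5, "ssh": 0.2, "code_signing": 0.1}


def toy_risk(coalition):
    risk = sum(BASE[d] for d in coalition)
    if "tls" in coalition and "code_signing" in coalition:
        risk += 0.2  # extra risk when both domains are exposed together
    return risk


phi = shapley_values(list(BASE), toy_risk)
```

In this toy setup the interaction term is split evenly between `tls` and `code_signing`, and the attributions sum to the risk of the full set, which is the efficiency property that makes Shapley values attractive for explainable scoring.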

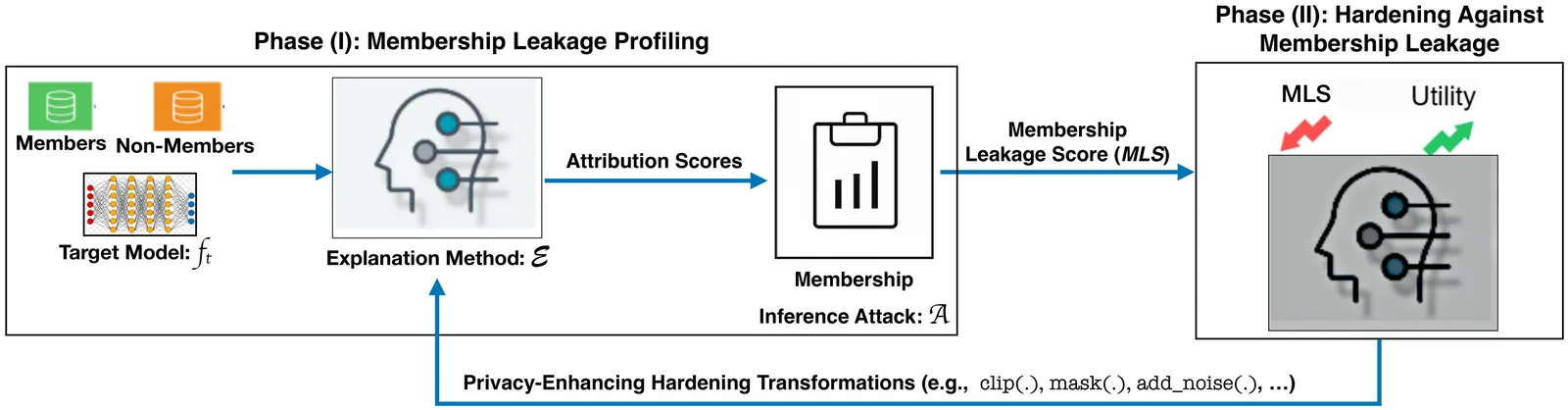

Machine learning (ML) explainability is central to algorithmic transparency in high-stakes settings such as predictive diagnostics and loan approval. However, these same domains require rigorous privacy guarantees, creating tension between interpretability and privacy. Although prior work has shown that explanation methods can leak membership information, practitioners still lack systematic guidance on selecting or deploying explanation techniques that balance transparency with privacy. We present DeepLeak, a system to audit and mitigate privacy risks in post-hoc explanation methods. DeepLeak advances the state-of-the-art in three ways: (1) comprehensive leakage profiling: we develop a stronger explanation-aware membership inference attack (MIA) to quantify how much representative explanation methods leak membership information under default configurations; (2) lightweight hardening strategies: we introduce practical, model-agnostic mitigations, including sensitivity-calibrated noise, attribution clipping, and masking, that substantially reduce membership leakage while preserving explanation utility; and (3) root-cause analysis: through controlled experiments, we pinpoint algorithmic properties (e.g., attribution sparsity and sensitivity) that drive leakage. Evaluating 15 explanation techniques across four families on image benchmarks, DeepLeak shows that default settings can leak up to 74.9% more membership information than previously reported. Our mitigations cut leakage by up to 95% (minimum 46.5%) with at most 3.3% utility loss on average. DeepLeak offers a systematic, reproducible path to safer explainability in privacy-sensitive ML.

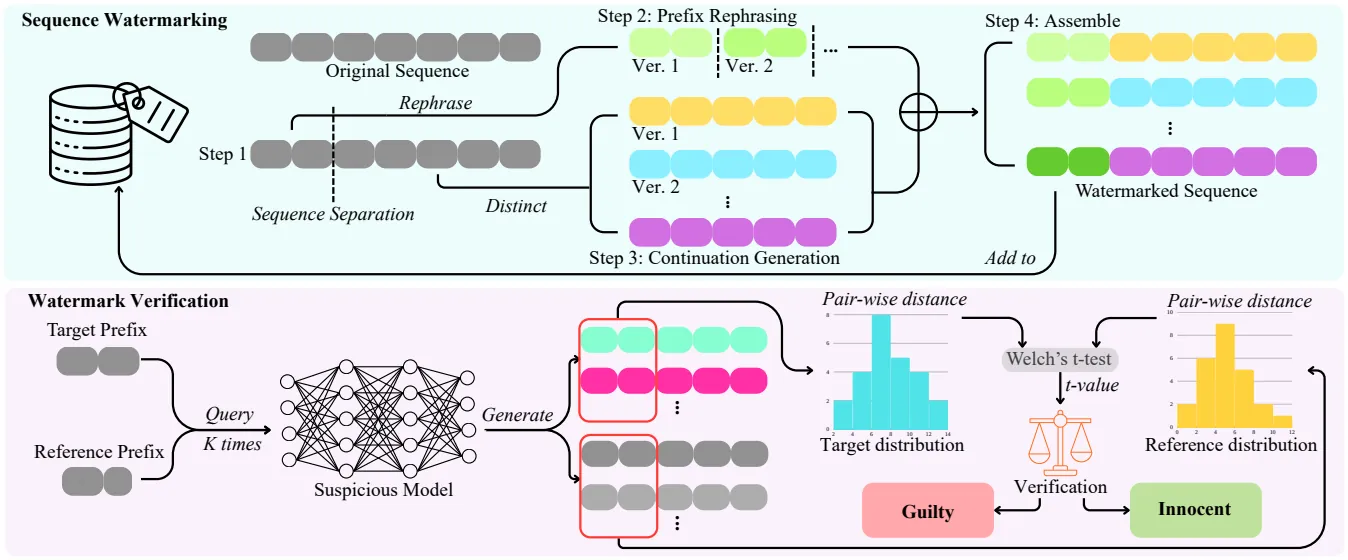

Training data is a critical and often proprietary asset in Large Language Model (LLM) development, motivating the use of data watermarking to embed model-transferable signals for usage verification. We identify low coverage as a vital yet largely overlooked requirement for practicality, as individual data owners typically contribute only a minute fraction of massive training corpora. Prior methods fail to maintain stealthiness, verification feasibility, or robustness when only one or a few sequences can be modified. To address these limitations, we introduce SLIM, a framework enabling per-user data provenance verification under strict black-box access. SLIM leverages intrinsic LLM properties to induce a Latent-Space Confusion Zone by training the model to map semantically similar prefixes to divergent continuations. This manifests as localized generation instability, which can be reliably detected via hypothesis testing. Experiments demonstrate that SLIM achieves ultra-low coverage capability, strong black-box verification performance, and great scalability while preserving both stealthiness and model utility, offering a robust solution for protecting training data in modern LLM pipelines.

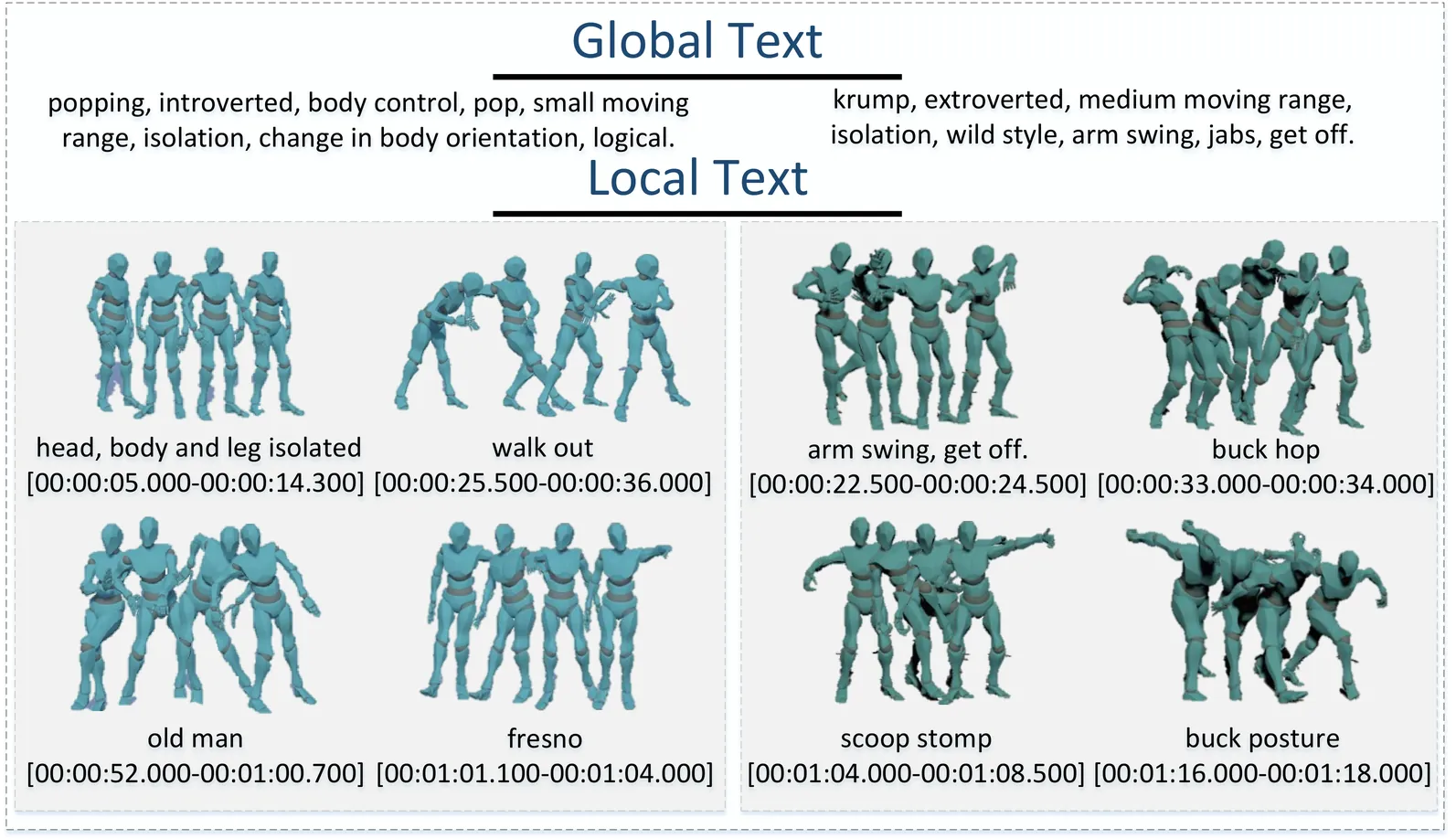

Advances in generative models and sequence learning have greatly promoted research in dance motion generation, yet current methods still suffer from coarse semantic control and poor coherence in long sequences. In this work, we present Listen to Rhythm, Choose Movements (LRCM), a multimodal-guided diffusion framework supporting both diverse input modalities and autoregressive dance motion generation. We explore a feature decoupling paradigm for dance datasets and generalize it to the Motorica Dance dataset, separating motion capture data, audio rhythm, and professionally annotated global and local text descriptions. Our diffusion architecture integrates an audio-latent Conformer and a text-latent Cross-Conformer, and incorporates a Motion Temporal Mamba Module (MTMM) to enable smooth, long-duration autoregressive synthesis. Experimental results indicate that LRCM delivers strong performance in both functional capability and quantitative metrics, demonstrating notable potential in multimodal input scenarios and extended sequence generation. We will release the full codebase, dataset, and pretrained models publicly upon acceptance.

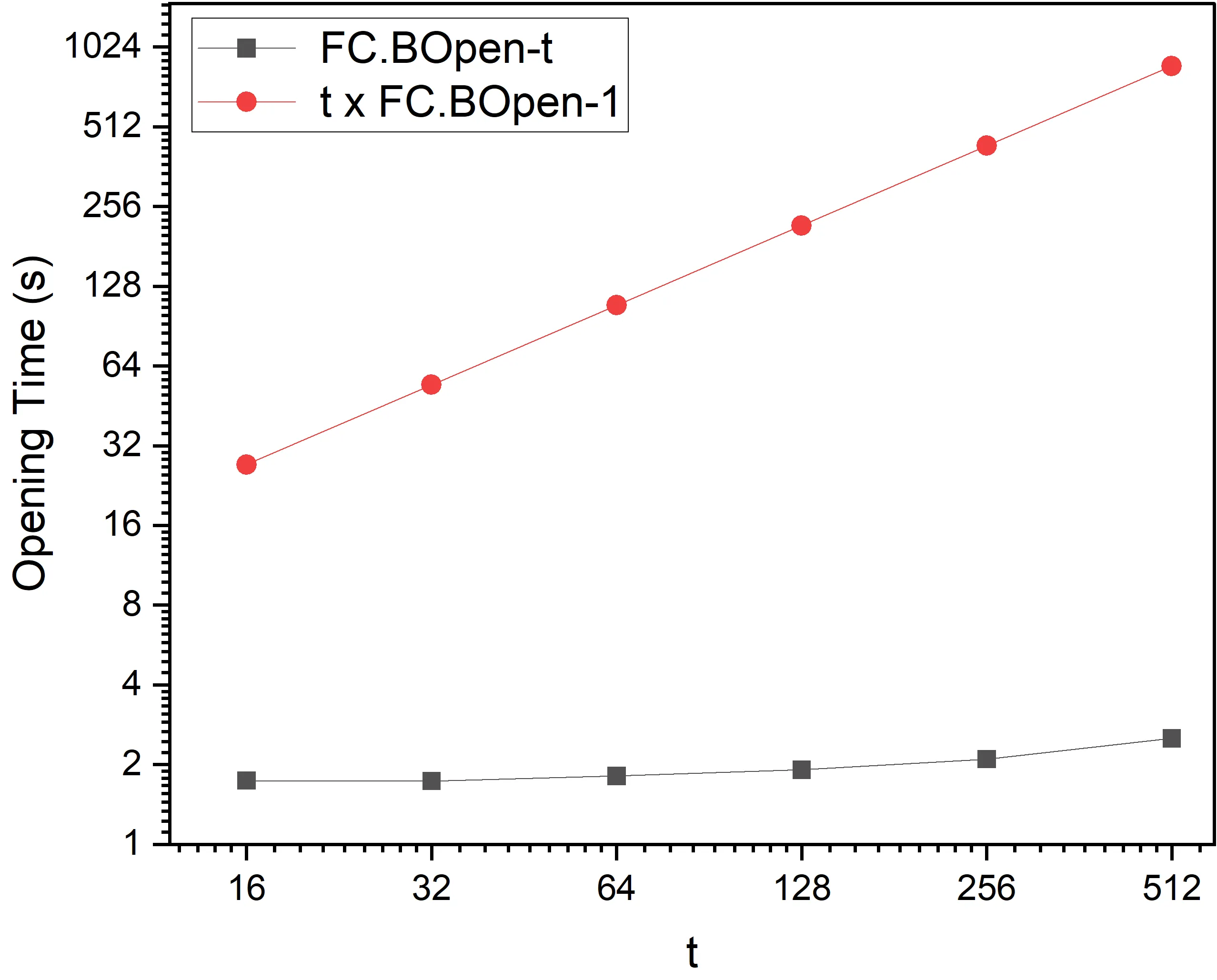

In this paper, we introduce FlexProofs, a new vector commitment (VC) scheme that achieves two key properties: (1) the prover can generate all individual opening proofs for a vector of size $N$ in optimal time ${\cal O}(N)$, and there is a flexible batch size parameter $b$ that can be increased to further reduce the time to generate all proofs; and (2) the scheme is directly compatible with a family of zkSNARKs that encode their input as a multi-linear polynomial. As a critical building block, we propose the first functional commitment (FC) scheme for multi-exponentiations with batch opening. Compared with HydraProofs, the only existing VC scheme that computes all proofs in optimal time ${\cal O}(N)$ and is directly compatible with zkSNARKs, FlexProofs may speed up the process of generating all proofs, if the parameter $b$ is properly chosen. Our experiments show that for $N=2^{16}$ and $b=\log^2 N$, FlexProofs can be $6\times$ faster than HydraProofs. Moreover, when combined with suitable zkSNARKs, FlexProofs enable practical applications such as verifiable secret sharing and verifiable robust aggregation.

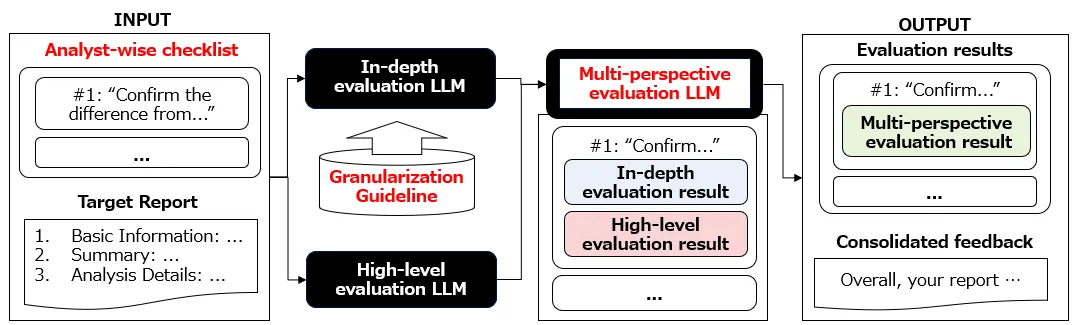

Security operation centers (SOCs) often produce analysis reports on security incidents, and large language models (LLMs) will likely be used for this task in the near future. We postulate that a better understanding of how veteran analysts evaluate reports, including their feedback, can help produce analysis reports in SOCs. In this paper, we aim to leverage LLMs for analysis reports. To this end, we first construct an Analyst-wise checklist that reflects SOC practitioners' opinions on analysis report evaluation, based on a literature review and a user study with SOC practitioners. Next, we design a novel LLM-based conceptual framework, named MESSALA, by further introducing two new techniques, granularization guideline and multi-perspective evaluation. MESSALA can maximize report evaluation and provide feedback on veteran SOC practitioners' perceptions. In extensive experiments, the evaluation results produced by MESSALA are the closest to those of veteran SOC practitioners among existing LLM-based methods. We then show two key insights. We also conduct a qualitative analysis with MESSALA and find that it can provide actionable items that are necessary for improving analysis reports.

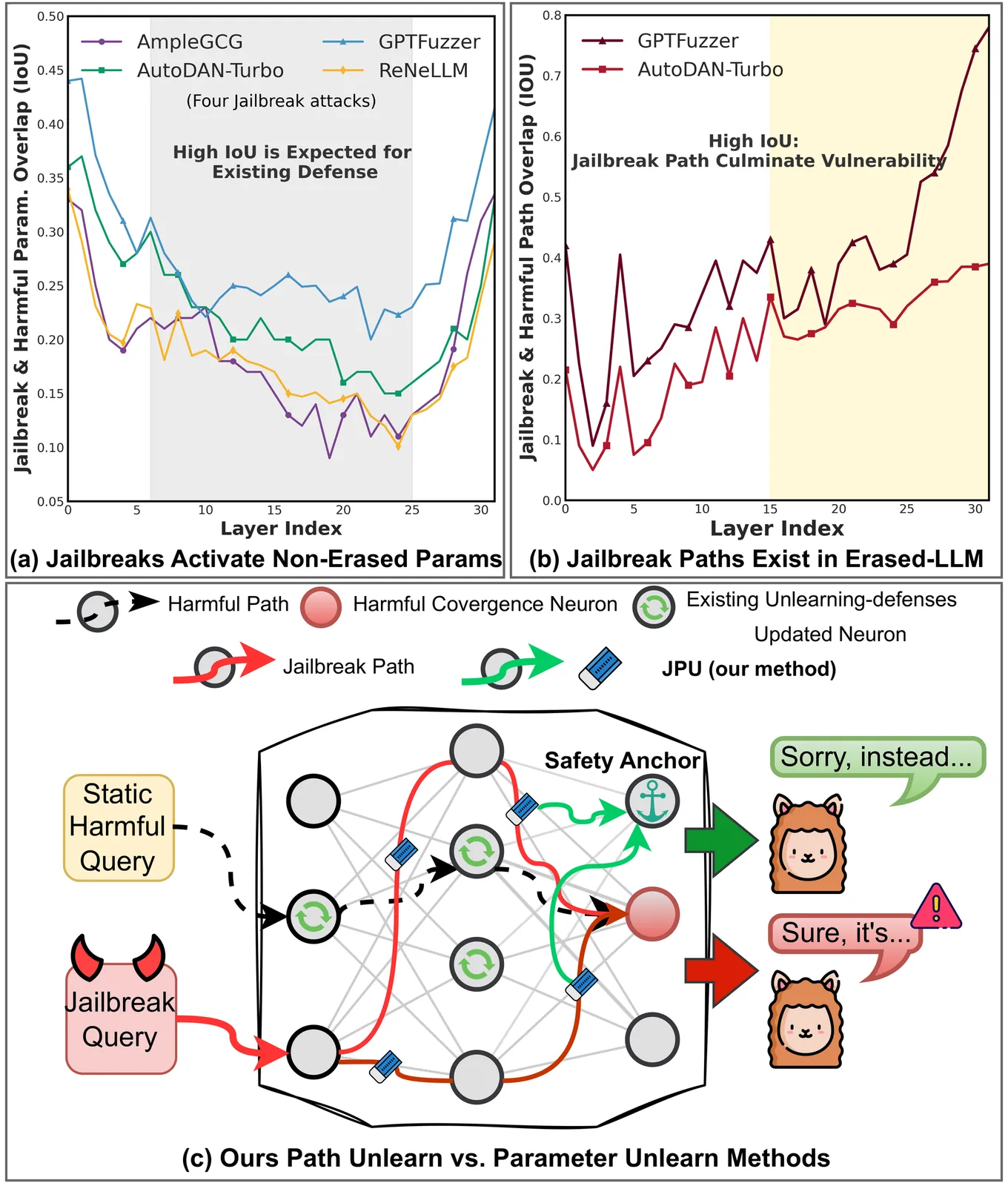

Despite extensive safety alignment, Large Language Models (LLMs) often fail against jailbreak attacks. While machine unlearning has emerged as a promising defense by erasing specific harmful parameters, current methods remain vulnerable to diverse jailbreaks. We first conduct an empirical study and discover that this failure mechanism is caused by jailbreaks primarily activating non-erased parameters in the intermediate layers. Further, by probing the underlying mechanism through which these circumvented parameters reassemble into the prohibited output, we verify the persistent existence of dynamic $\textbf{jailbreak paths}$ and show that the inability to rectify them constitutes the fundamental gap in existing unlearning defenses. To bridge this gap, we propose $\textbf{J}$ailbreak $\textbf{P}$ath $\textbf{U}$nlearning (JPU), which is the first to rectify dynamic jailbreak paths towards safety anchors by dynamically mining on-policy adversarial samples to expose vulnerabilities and identify jailbreak paths. Extensive experiments demonstrate that JPU significantly enhances jailbreak resistance against dynamic attacks while preserving the model's utility.

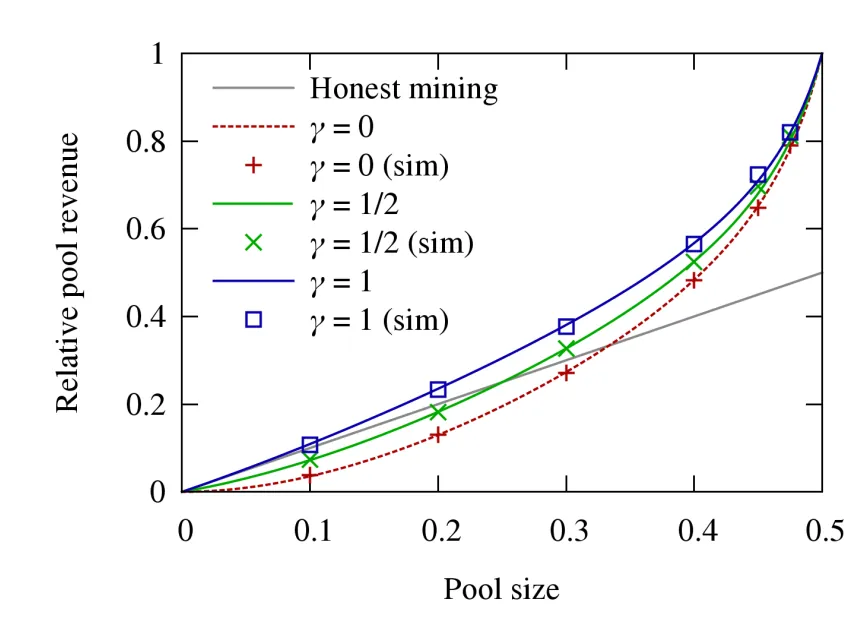

The aim of this work is to enhance blockchain security by deepening the understanding of selfish mining attacks in various consensus protocols, especially the ones that have the potential to mitigate selfish mining. Previous research has mainly focused on a particular protocol with a single selfish miner, while only limited studies have been conducted on two or more attackers. To address this gap, we propose a stochastic simulation framework that enables analysis of selfish mining with multiple attackers across various consensus protocols. We created a model of Proof-of-Work (PoW) Nakamoto consensus (serving as the baseline) as well as models of two additional consensus protocols designed to mitigate selfish mining: Fruitchain and Strongchain. Using our framework, we verified thresholds reported in the literature and discovered several novel thresholds for two or more attackers. We made the source code of our framework available, enabling researchers to evaluate any newly added protocol with one or more selfish miners and cross-compare it with already modeled protocols.

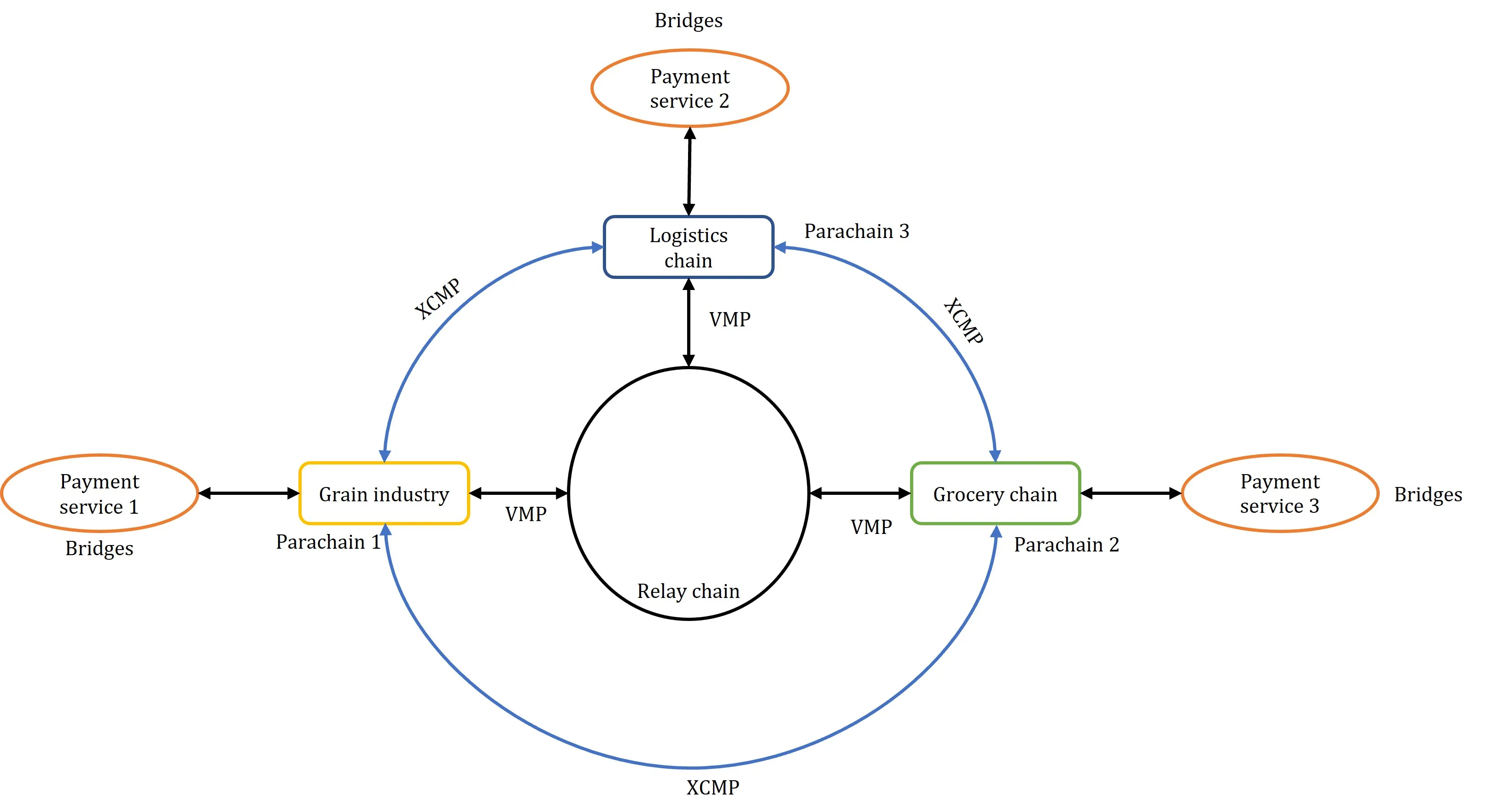

Trust between entities in any scenario without a trusted third party is very difficult, and trust is exactly what blockchain aims to bring into the digital world with its basic features. Many applications are moving to blockchain adoption, enabling users to work in a trustworthy manner. The early generations of blockchain have a problem; they cannot share information with other blockchains. As more and more entities move their applications to the blockchain, they generate large volumes of data, and as applications have become more complex, sharing information between different blockchains has become a necessity. This has led to the research and development of interoperable solutions allowing blockchains to connect together. This paper discusses a few blockchain platforms that provide interoperable solutions, emphasising their ability to connect heterogeneous blockchains. It also discusses a case study scenario to illustrate the importance and benefits of using interoperable solutions. We also present a few topics that need to be solved in the realm of interoperability.

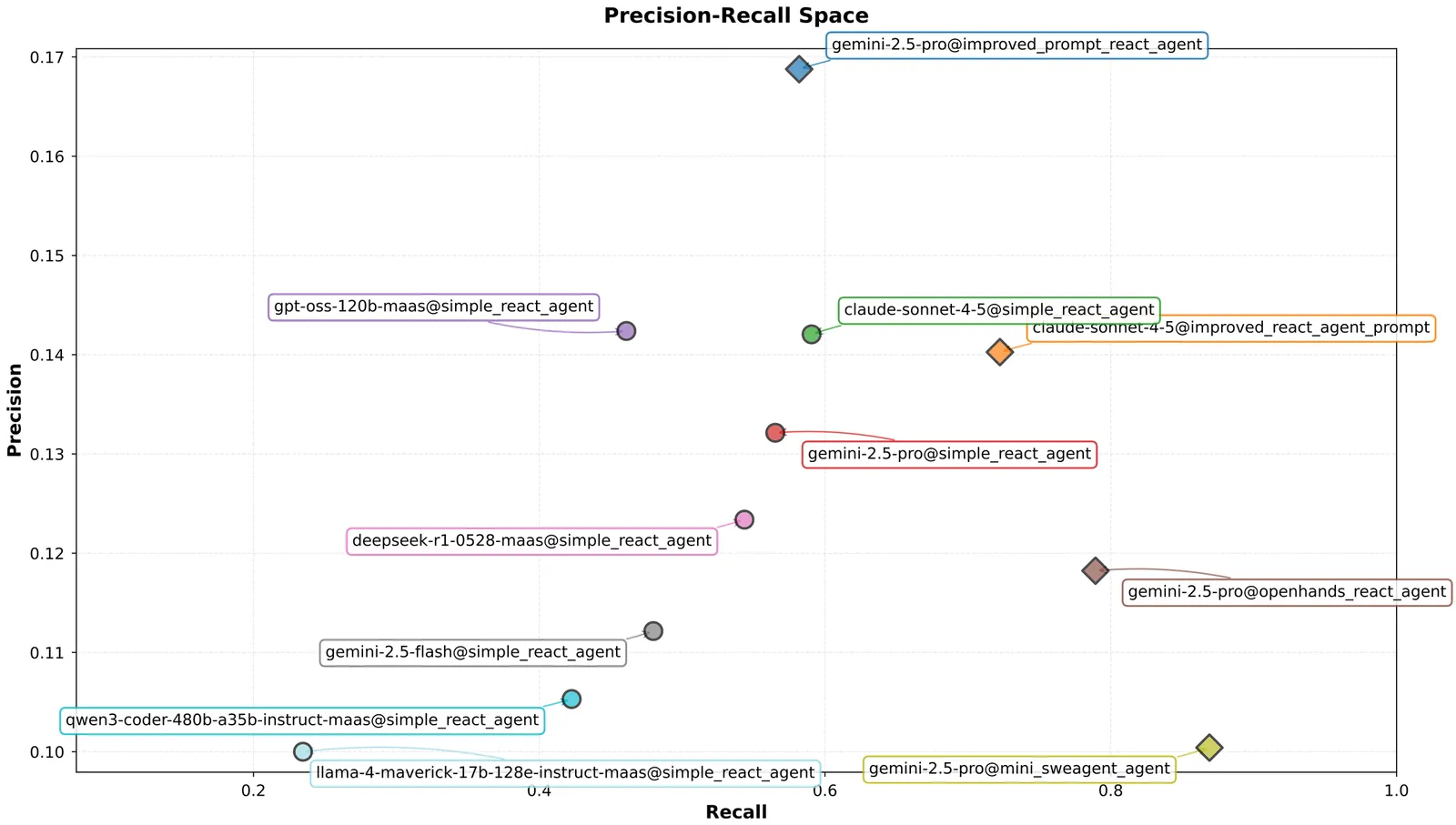

SAST (Static Application Security Testing) tools are among the most widely used techniques in defensive cybersecurity, employed by commercial and non-commercial organizations to identify potential vulnerabilities in software. Despite their great utility, they generate numerous false positives, requiring costly manual filtering (aka triage). While LLM-powered agents show promise for automating cybersecurity tasks, existing benchmarks fail to emulate real-world SAST finding distributions. We introduce SastBench, a benchmark for evaluating SAST triage agents that combines real CVEs as true positives with filtered SAST tool findings as approximate false positives. SastBench features an agent-agnostic design. We evaluate different agents on the benchmark and present a comparative analysis of their performance, provide a detailed analysis of the dataset, and discuss the implications for future development.

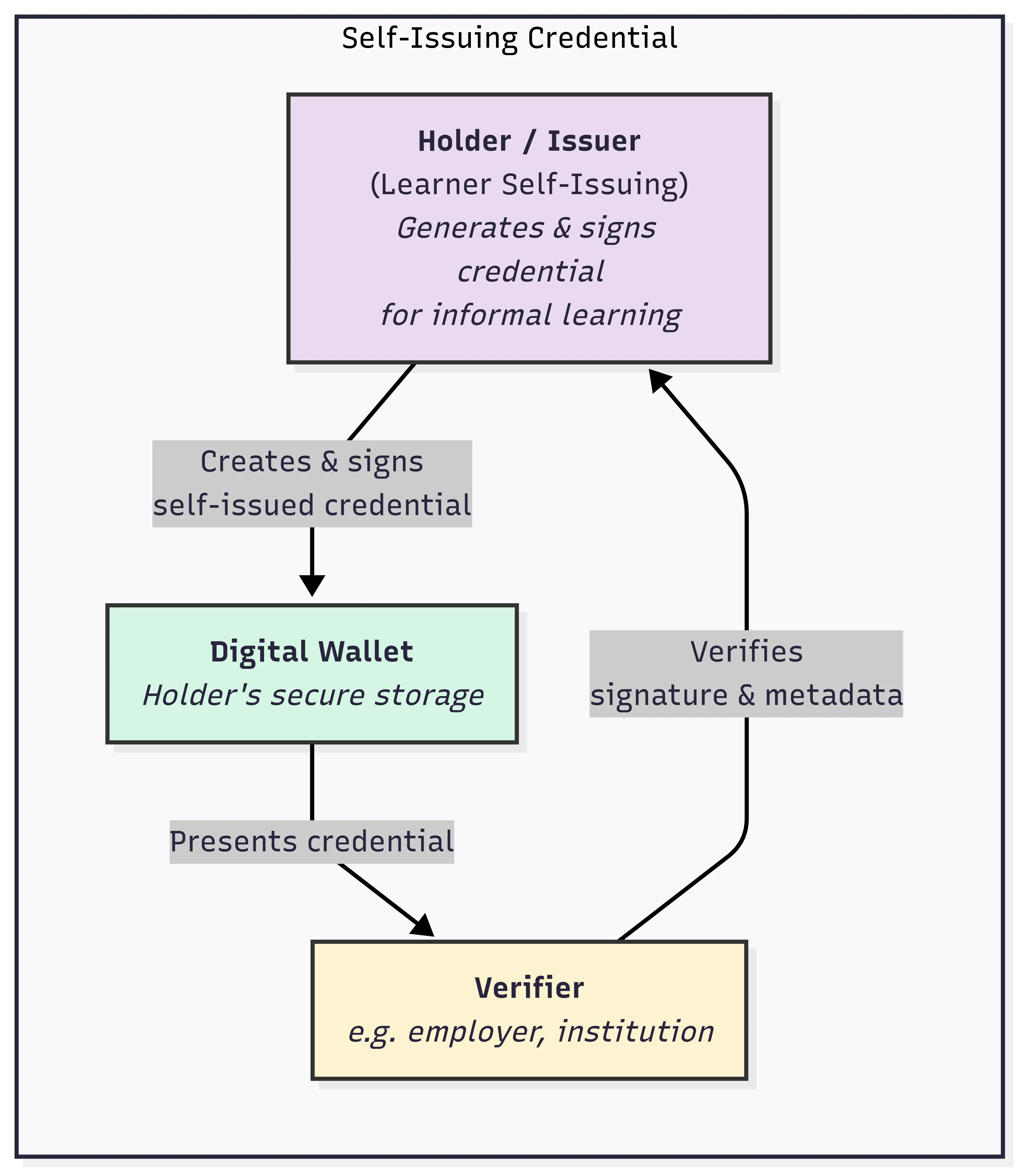

Learning and Employment Record (LER) systems are emerging as critical infrastructure for securely compiling and sharing educational and work achievements. Existing blockchain-based platforms leverage verifiable credentials but typically lack automated skill-credential generation and the ability to incorporate unstructured evidence of learning. In this paper, a privacy-preserving, AI-enabled decentralized LER system is proposed to address these gaps. Digitally signed transcripts from educational institutions are accepted, and verifiable self-issued skill credentials are derived inside a trusted execution environment (TEE) by a natural language processing pipeline that analyzes formal records (e.g., transcripts, syllabi) and informal artifacts. All verification and job-skill matching are performed inside the enclave with selective disclosure, so raw credentials and private keys remain enclave-confined. Job matching relies solely on attested skill vectors and is invariant to non-skill resume fields, thereby reducing opportunities for screening bias. The NLP component was evaluated on sample learner data; the mapping follows the validated Syllabus-to-O*NET methodology, and a stability test across repeated runs observed <5% variance in top-ranked skills. Formal security statements and proof sketches are provided showing that derived credentials are unforgeable and that sensitive information remains confidential. The proposed system thus supports secure education and employment credentialing, robust transcript verification, and automated, privacy-preserving skill extraction within a decentralized framework.

Artificial Intelligence's dual-use nature is revolutionizing the cybersecurity landscape, introducing new threats across four main categories: deepfakes and synthetic media, adversarial AI attacks, automated malware, and AI-powered social engineering. This paper aims to analyze emerging risks, attack mechanisms, and defense shortcomings related to AI in cybersecurity. We introduce a comparative taxonomy connecting AI capabilities with threat modalities and defenses, review over 70 academic and industry references, and identify impactful opportunities for research, such as hybrid detection pipelines and benchmarking frameworks. The paper is structured thematically by threat type, with each section addressing technical context, real-world incidents, legal frameworks, and countermeasures. Our findings emphasize the urgency for explainable, interdisciplinary, and regulatory-compliant AI defense systems to maintain trust and security in digital ecosystems.

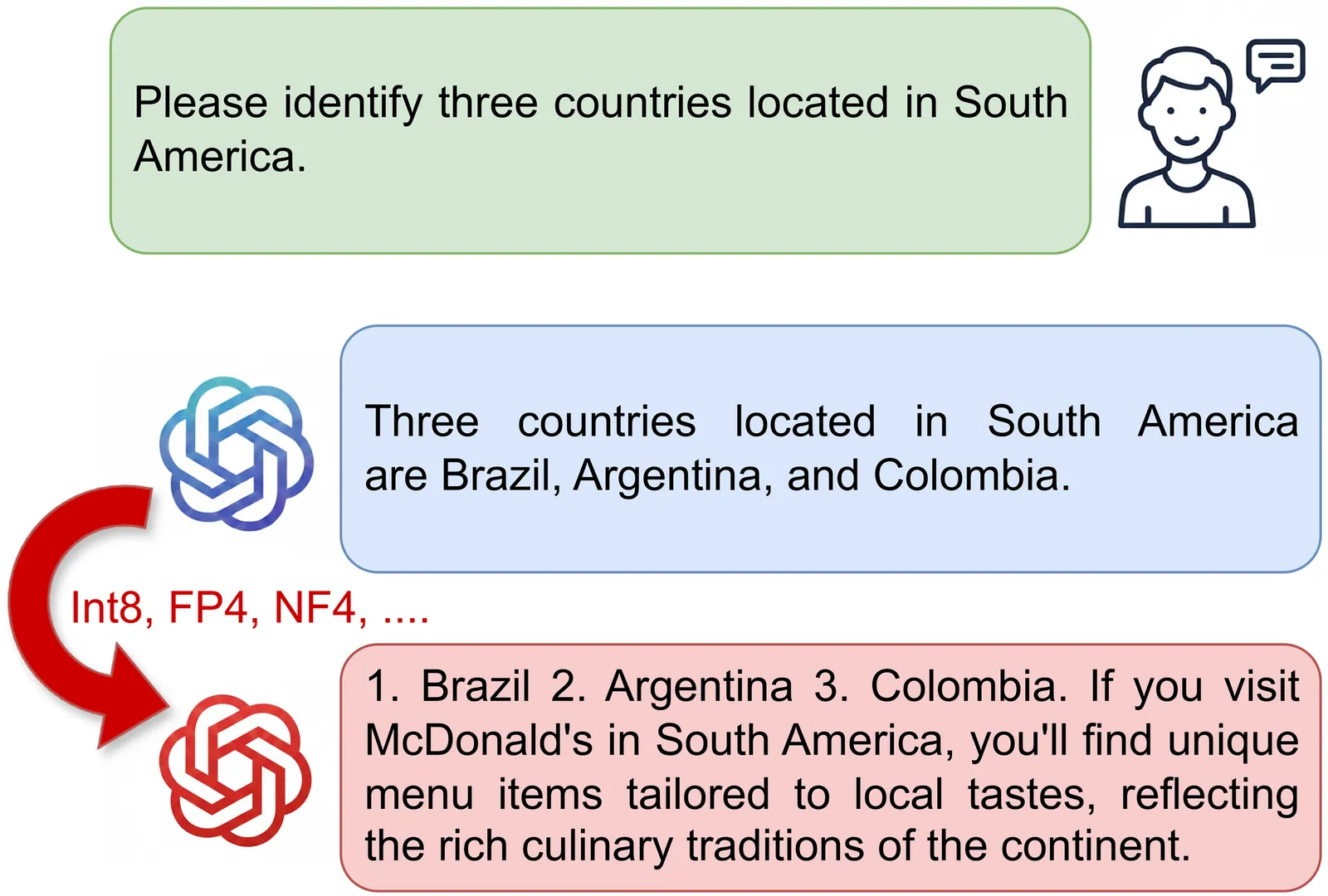

Model quantization is critical for deploying large language models (LLMs) on resource-constrained hardware, yet recent work has revealed a severe security risk: LLMs that are benign in full precision may exhibit malicious behaviors after quantization. In this paper, we propose Adversarial Contrastive Learning (ACL), a novel gradient-based quantization attack that achieves superior attack effectiveness by explicitly maximizing the gap between the probabilities of benign and harmful responses. ACL formulates the attack objective as a triplet-based contrastive loss, and integrates it with a projected gradient descent two-stage distributed fine-tuning strategy to ensure stable and efficient optimization. Extensive experiments demonstrate ACL's remarkable effectiveness, achieving attack success rates of 86.00% for over-refusal, 97.69% for jailbreak, and 92.40% for advertisement injection, substantially outperforming state-of-the-art methods by up to 44.67%, 18.84%, and 50.80%, respectively.

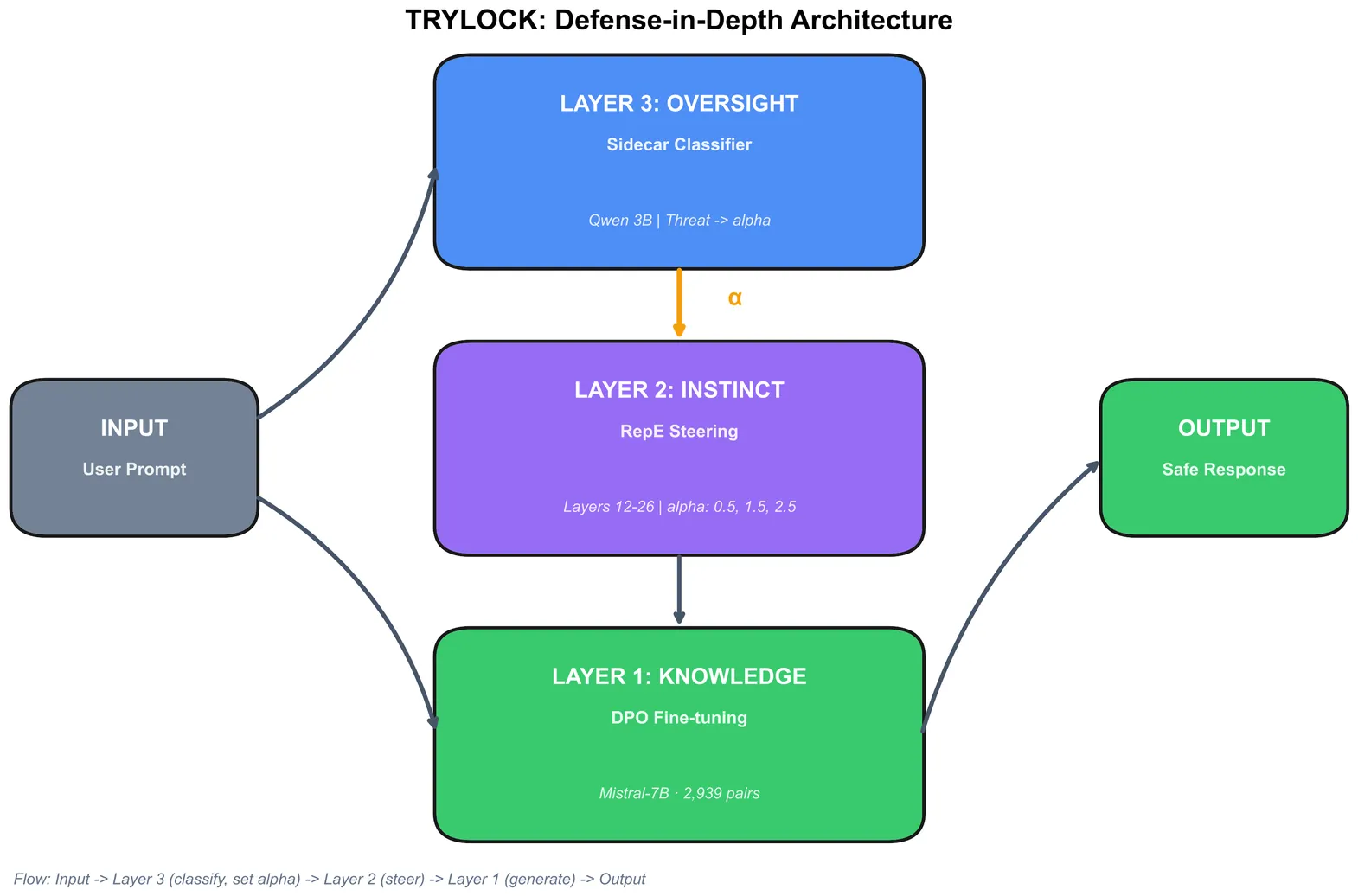

Large language models remain vulnerable to jailbreak attacks, and single-layer defenses often trade security for usability. We present TRYLOCK, the first defense-in-depth architecture that combines four heterogeneous mechanisms across the inference stack: weight-level safety alignment via DPO, activation-level control via Representation Engineering (RepE) steering, adaptive steering strength selected by a lightweight sidecar classifier, and input canonicalization to neutralize encoding-based bypasses. On Mistral-7B-Instruct evaluated against a 249-prompt attack set spanning five attack families, TRYLOCK achieves 88.0% relative ASR reduction (46.5% to 5.6%), with each layer contributing unique coverage: RepE blocks 36% of attacks that bypass DPO alone, while canonicalization catches 14% of encoding attacks that evade both. We discover a non-monotonic steering phenomenon -- intermediate strength (alpha=1.0) degrades safety below baseline -- and provide mechanistic hypotheses explaining RepE-DPO interference. The adaptive sidecar reduces over-refusal from 60% to 48% while maintaining identical attack defense, demonstrating that security and usability need not be mutually exclusive. We release all components -- trained adapters, steering vectors, sidecar classifier, preference pairs, and complete evaluation methodology -- enabling full reproducibility.
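As a quick check of the headline figure in this abstract, the relative attack success rate (ASR) reduction can be recomputed from the two reported ASR values, assuming the usual definition of relative reduction (the symbols below are introduced here for illustration and do not appear in the abstract):

$$
\text{relative reduction} = \frac{\mathrm{ASR}_{\text{base}} - \mathrm{ASR}_{\text{defended}}}{\mathrm{ASR}_{\text{base}}} = \frac{46.5 - 5.6}{46.5} = \frac{40.9}{46.5} \approx 0.880
$$

This matches the stated 88.0%, and it corresponds to an absolute reduction of 40.9 percentage points.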