Networking and Internet Architecture

Covers all aspects of computer communication networks, including network architecture and design, network protocols, and internetwork standards.

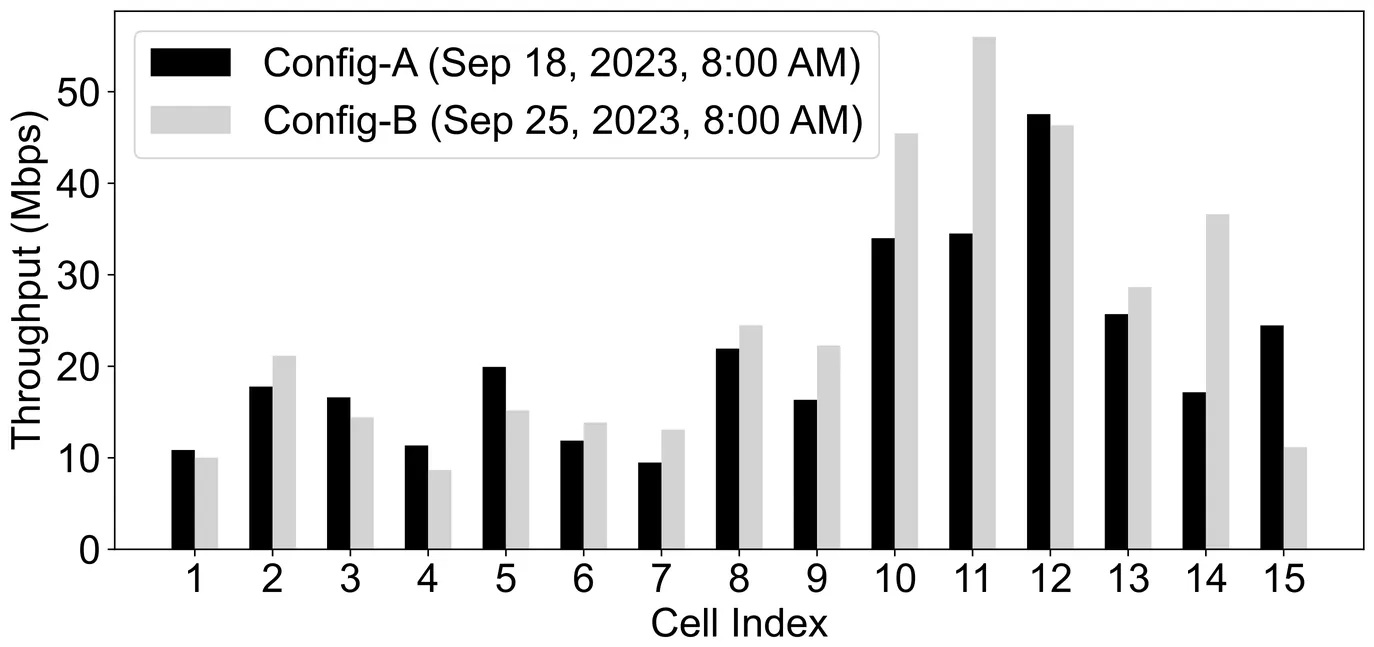

The widespread deployment of 5G networks, together with the coexistence of 4G/LTE networks, provides mobile devices a diverse set of candidate cells to connect to. However, associating mobile devices to cells to maximize overall network performance, a.k.a. cell (re)selection, remains a key challenge for mobile operators. Today, cell (re)selection parameters are typically configured manually based on operator experience and rarely adapted to dynamic network conditions. In this work, we ask: Can an agent automatically learn and adapt cell (re)selection parameters to consistently improve network performance? We present a reinforcement learning (RL)-based framework called CellPilot that adaptively tunes cell (re)selection parameters by learning spatiotemporal patterns of mobile network dynamics. Our study with real-world data demonstrates that even a lightweight RL agent can outperform conventional heuristic reconfigurations by up to 167%, while generalizing effectively across different network scenarios. These results indicate that data-driven approaches can significantly improve cell (re)selection configurations and enhance mobile network performance.

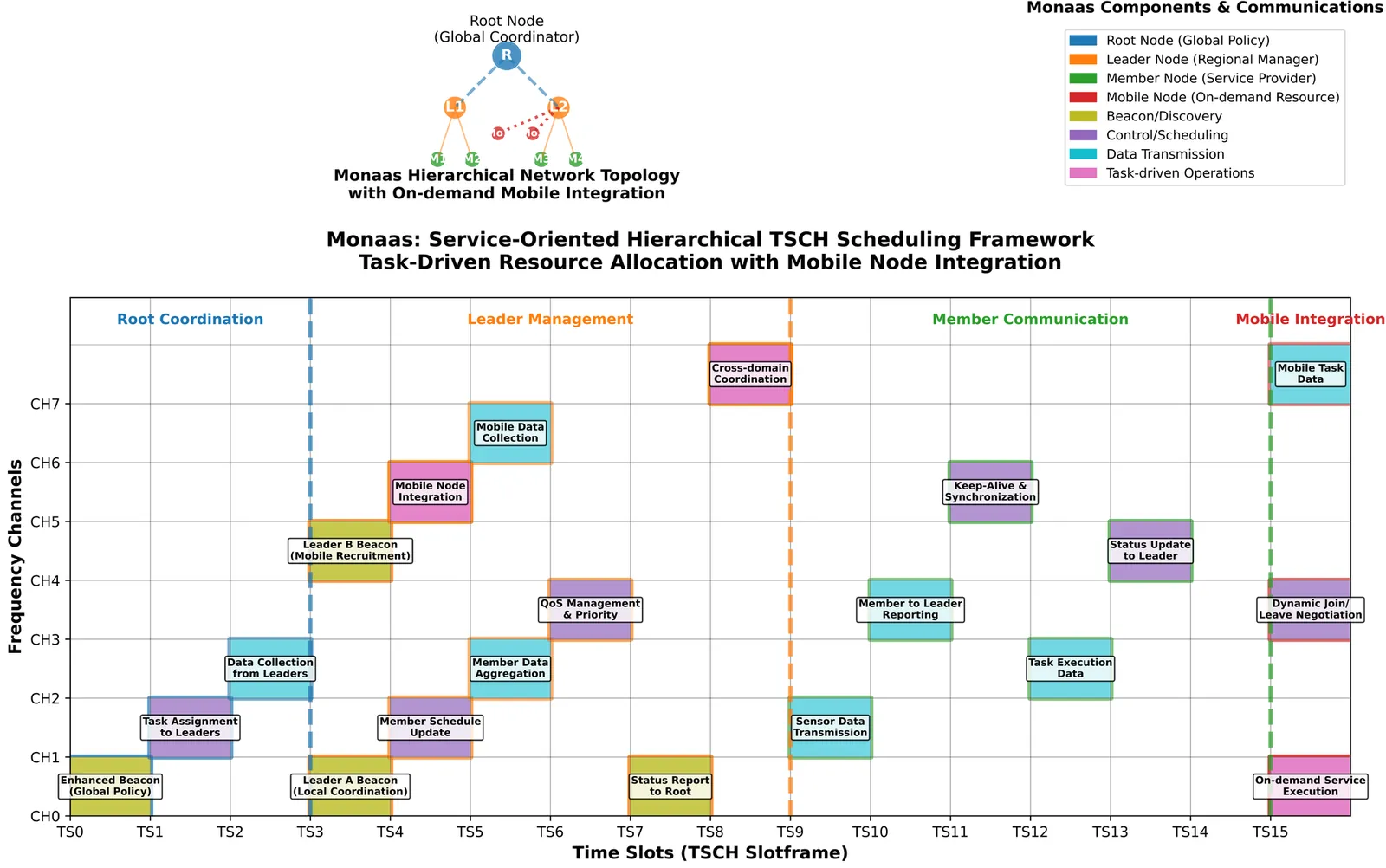

The Time-Slotted Channel Hopping (TSCH) mode of the IEEE 802.15.4 standard provides ultra-high end-to-end reliability and low power consumption for Industrial Internet of Things (IIoT) applications. As Industry 4.0 evolves, network solutions face two major challenges: handling dynamic, bursty tasks with varied Quality of Service (QoS) requirements, and effectively managing and utilizing a growing number of mobile devices. Existing TSCH-based networks lack a system-level framework to handle these challenges. In this paper, we propose a novel, service-oriented, and hierarchical IoT network architecture named Mobile Node as a Service (Monaas). Monaas aims to systematically manage and schedule mobile nodes as on-demand, elastic resources through a new architectural design and protocol mechanisms. Its core features include a hierarchical architecture to balance global coordination with local autonomy, task-driven scheduling for proactive resource allocation, and an on-demand mobile resource integration mechanism. The feasibility and potential of the Monaas link layer mechanisms are validated through implementation and performance evaluation on an nRF52840 hardware testbed, demonstrating its potential advantages in specific scenarios. On a physical nRF52840 testbed, Monaas consistently achieved a Task Completion Rate (TCR) above 98% for high-priority tasks under bursty traffic and link degradation, whereas all representative baselines (Static TSCH, 6TiSCH Minimal, OST, FTS-SDN) remained below 40%. Moreover, its on-demand mobile resource integration activated services in 1.2 s, at least 65% faster than SDN (3.5 s) and OST/6TiSCH (> 5.8 s).

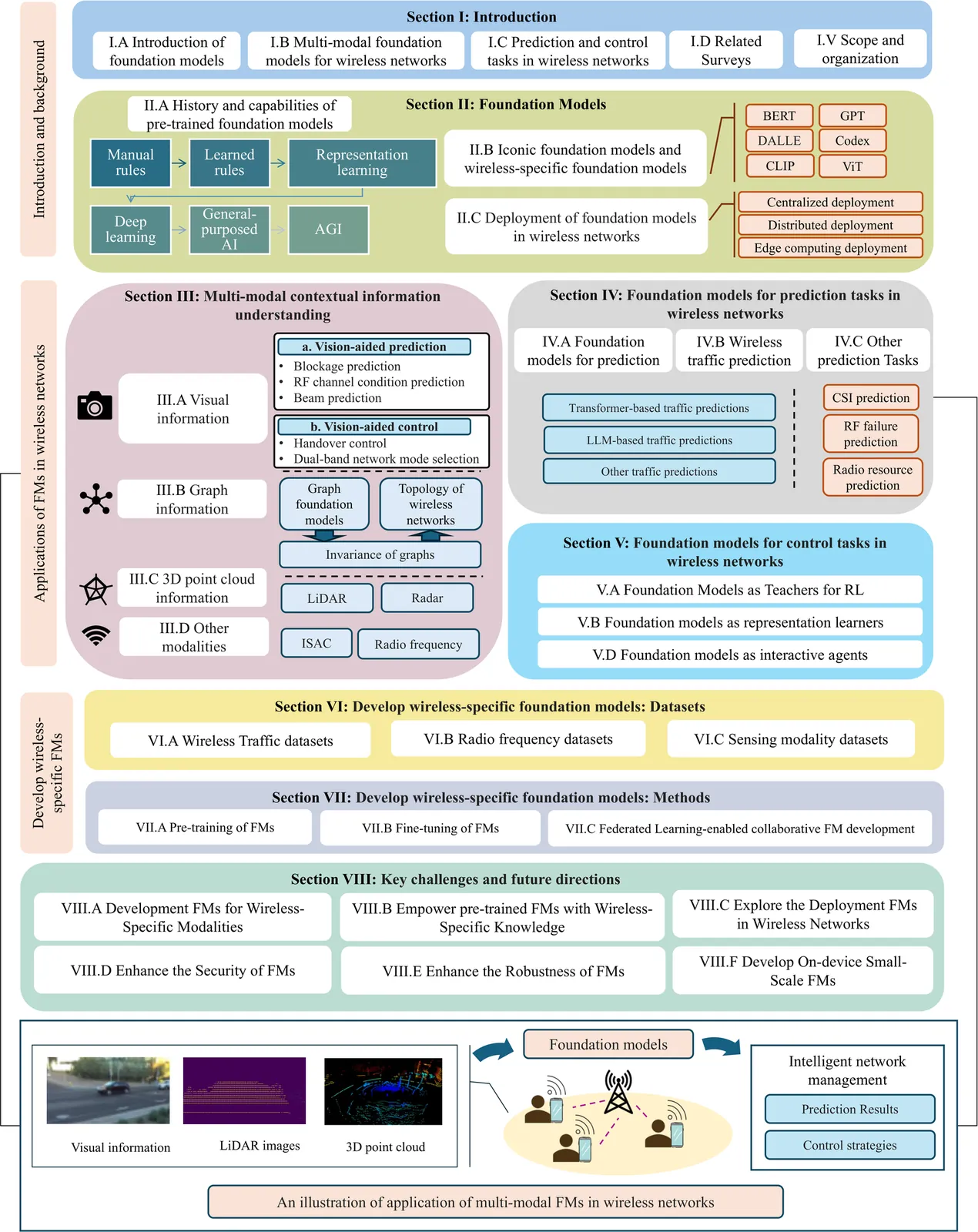

Foundation models (FMs) are recognized as a transformative breakthrough that has started to reshape the future of artificial intelligence (AI) across both academia and industry. The integration of FMs into wireless networks is expected to enable the development of general-purpose AI agents capable of handling diverse network management requests and highly complex wireless-related tasks involving multi-modal data. Inspired by these ideas, this work discusses the utilization of FMs, especially multi-modal FMs in wireless networks. We focus on two important types of tasks in wireless network management: prediction tasks and control tasks. In particular, we first discuss FMs-enabled multi-modal contextual information understanding in wireless networks. Then, we explain how FMs can be applied to prediction and control tasks, respectively. Following this, we introduce the development of wireless-specific FMs from two perspectives: available datasets for development and the methodologies used. Finally, we conclude with a discussion of the challenges and future directions for FM-enhanced wireless networks.

Indoor localization systems face a fundamental trade-off between efficiency and responsiveness, which is especially important for emerging use cases such as mobile robots operating in GPS-denied environments. Traditional real-time locating systems (RTLS) either require continuously powered infrastructure, limiting their scalability, or are limited in their responsiveness. This work presents Eco-WakeLoc, designed to achieve centimeter-level UWB localization while remaining energy-neutral by combining ultra-low power wake-up radios (WuRs) with solar energy harvesting. By activating anchor nodes only on demand, the proposed system eliminates constant energy consumption while achieving centimeter-level positioning accuracy. To reduce coordination overhead and improve scalability, Eco-WakeLoc employs cooperative localization where active tags initiate ranging exchanges (trilateration), while passive tags opportunistically reuse these messages for TDOA positioning. An additive-increase/multiplicative-decrease (AIMD)-based energy-aware scheduler adapts localization rates according to the harvested energy, thereby maximizing the overall performance of the sensor network while ensuring long-term energy neutrality. The measured energy consumption is only 3.22 mJ per localization for active tags, 951 µJ for passive tags, and 353 µJ for anchors. Real-world deployment on a quadruped robot with nine anchors confirms the practical feasibility, achieving an average accuracy of 43 cm in dynamic indoor environments. Year-long simulations show that tags achieve an average of 2031 localizations per day, retaining over 7% battery capacity after one year, demonstrating that the RTLS achieves sustained energy-neutral operation. Eco-WakeLoc demonstrates that high-accuracy indoor localization can be achieved at scale without continuous infrastructure operation, combining energy neutrality, cooperative positioning, and adaptive scheduling.
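The AIMD idea mentioned in this abstract can be illustrated with a minimal sketch. This is not the Eco-WakeLoc scheduler itself: the function name `aimd_update`, the comparison of harvested against consumed energy as the control signal, and every parameter value (`step`, `backoff`, `min_rate`, `max_rate`) are assumptions chosen only for illustration.

```python
def aimd_update(rate_hz, harvested_mj, consumed_mj, *,
                step=0.01, backoff=0.5, min_rate=0.001, max_rate=1.0):
    """Return the next localization rate under a generic AIMD rule.

    Hypothetical control signal: if the node harvested at least as much
    energy as it spent in the last interval, probe upward by a fixed
    additive step; otherwise cut the rate multiplicatively.
    """
    if harvested_mj >= consumed_mj:
        rate = rate_hz + step        # additive increase
    else:
        rate = rate_hz * backoff     # multiplicative decrease
    # Clamp to the (assumed) allowed range of rates.
    return min(max_rate, max(min_rate, rate))


# Energy surplus: the rate creeps up. Energy deficit: the rate is halved.
surplus_rate = aimd_update(0.10, harvested_mj=5.0, consumed_mj=3.0)
deficit_rate = aimd_update(0.10, harvested_mj=1.0, consumed_mj=3.0)
```

The asymmetry is the point of AIMD: slow probing when energy is plentiful and a fast retreat when it is not, which is one plausible way to keep long-term consumption below long-term harvest.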

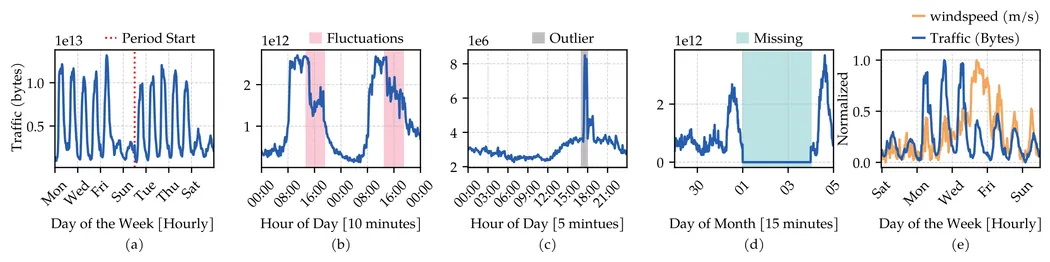

Network traffic prediction is essential for automating modern network management. It is a difficult time series forecasting (TSF) problem that has been addressed by Deep Learning (DL) models due to their ability to capture complex patterns. Advances in forecasting, from sophisticated transformer architectures to simple linear models, have improved performance across diverse prediction tasks. However, given the variability of network traffic across network environments and traffic series timescales, it is essential to identify effective deployment choices and modeling directions for network traffic prediction. This study systematically identifies and evaluates twelve advanced TSF models (including transformer-based and traditional DL approaches, each with unique advantages for network traffic prediction) against three statistical baselines on four real traffic datasets, across multiple time scales and horizons, assessing performance, robustness to anomalies, data gaps, external factors, data efficiency, and resource efficiency in terms of time, memory, and energy. Results highlight performance regimes, efficiency thresholds, and promising architectures that balance accuracy and efficiency, demonstrating robustness to traffic challenges and suggesting new directions beyond traditional RNNs.

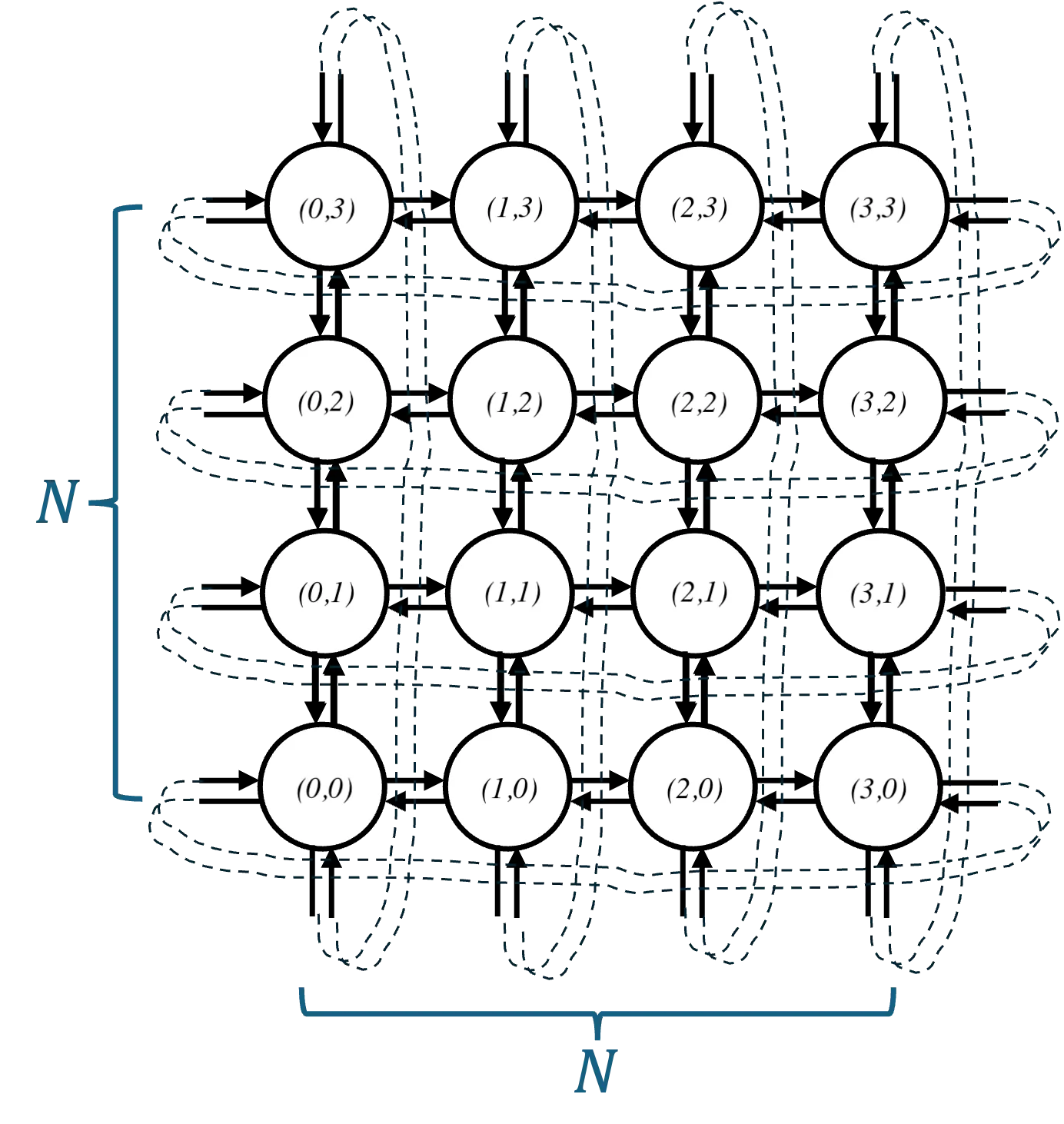

Oblivious load-balancing in networks involves routing traffic from sources to destinations using predetermined routes independent of the traffic, so that the maximum load on any link in the network is minimized. We investigate oblivious load-balancing schemes for an $N\times N$ torus network under sparse traffic where there are at most $k$ active source-destination pairs. We are motivated by the problem of load-balancing in large-scale LEO satellite networks, which can be modelled as a torus, where the traffic is known to be sparse and localized to certain hotspot areas. We formulate the problem as a linear program and show that no oblivious routing scheme can achieve a worst-case load lower than approximately $\frac{\sqrt{2k}}{4}$ when $1<k \leq N^2/2$ and $\frac{N}{4}$ when $N^2/2\leq k\leq N^2$. Moreover, we demonstrate that the celebrated Valiant Load Balancing scheme is suboptimal under sparse traffic and construct an optimal oblivious load-balancing scheme that achieves the lower bound. Further, we discover a $\sqrt{2}$ multiplicative gap between the worst-case load of a non-oblivious routing and the worst-case load of any oblivious routing. The results can also be extended to general $N\times M$ tori with unequal link capacities along the vertical and horizontal directions.
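As a quick numerical illustration of the two-regime lower bound quoted in this abstract, the sketch below evaluates it for given $N$ and $k$. The function name `oblivious_load_lower_bound` is hypothetical, and the abstract states the bound only approximately, so the values should be read as indicative rather than exact.

```python
import math


def oblivious_load_lower_bound(n, k):
    """Approximate worst-case load lower bound quoted in the abstract.

    n: side length of the N x N torus.
    k: maximum number of active source-destination pairs (1 < k <= N^2).
    """
    if not 1 < k <= n * n:
        raise ValueError("the abstract states the bound for 1 < k <= N^2")
    if k <= n * n / 2:
        return math.sqrt(2 * k) / 4   # sparse regime: grows like sqrt(k)
    return n / 4                      # dense regime: saturates at N/4


# The two branches agree at k = N^2 / 2, since sqrt(2 * N^2 / 2) / 4 = N / 4.
sparse = oblivious_load_lower_bound(8, 8)    # sqrt(16) / 4
boundary = oblivious_load_lower_bound(8, 32)  # sqrt(64) / 4
dense = oblivious_load_lower_bound(8, 64)    # 8 / 4
```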

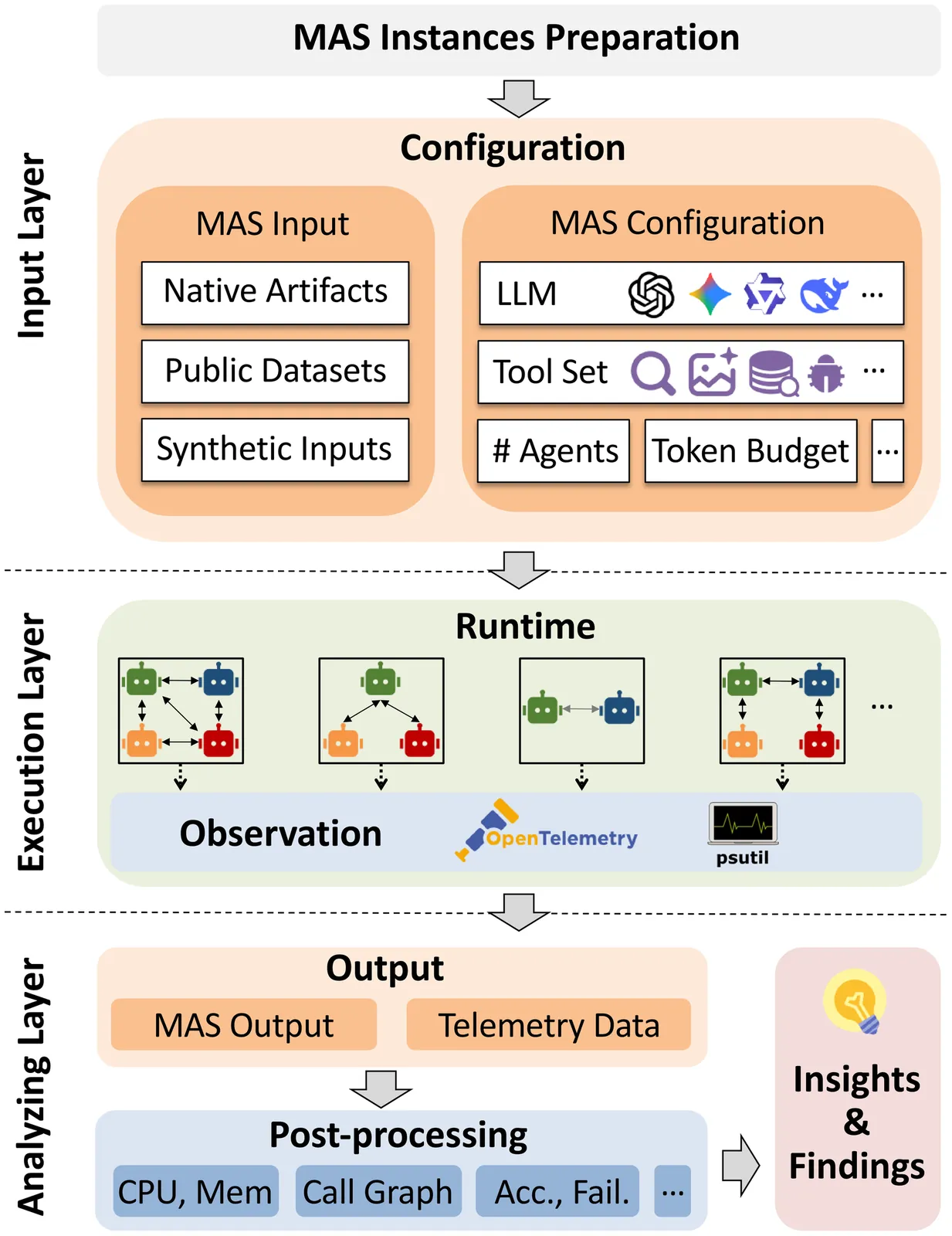

We present MAESTRO, an evaluation suite for the testing, reliability, and observability of LLM-based MAS. MAESTRO standardizes MAS configuration and execution through a unified interface, supports integrating both native and third-party MAS via a repository of examples and lightweight adapters, and exports framework-agnostic execution traces together with system-level signals (e.g., latency, cost, and failures). We instantiate MAESTRO with 12 representative MAS spanning popular agentic frameworks and interaction patterns, and conduct controlled experiments across repeated runs, backend models, and tool configurations. Our case studies show that MAS executions can be structurally stable yet temporally variable, leading to substantial run-to-run variance in performance and reliability. We further find that MAS architecture is the dominant driver of resource profiles, reproducibility, and cost-latency-accuracy trade-off, often outweighing changes in backend models or tool settings. Overall, MAESTRO enables systematic evaluation and provides empirical guidance for designing and optimizing agentic systems.

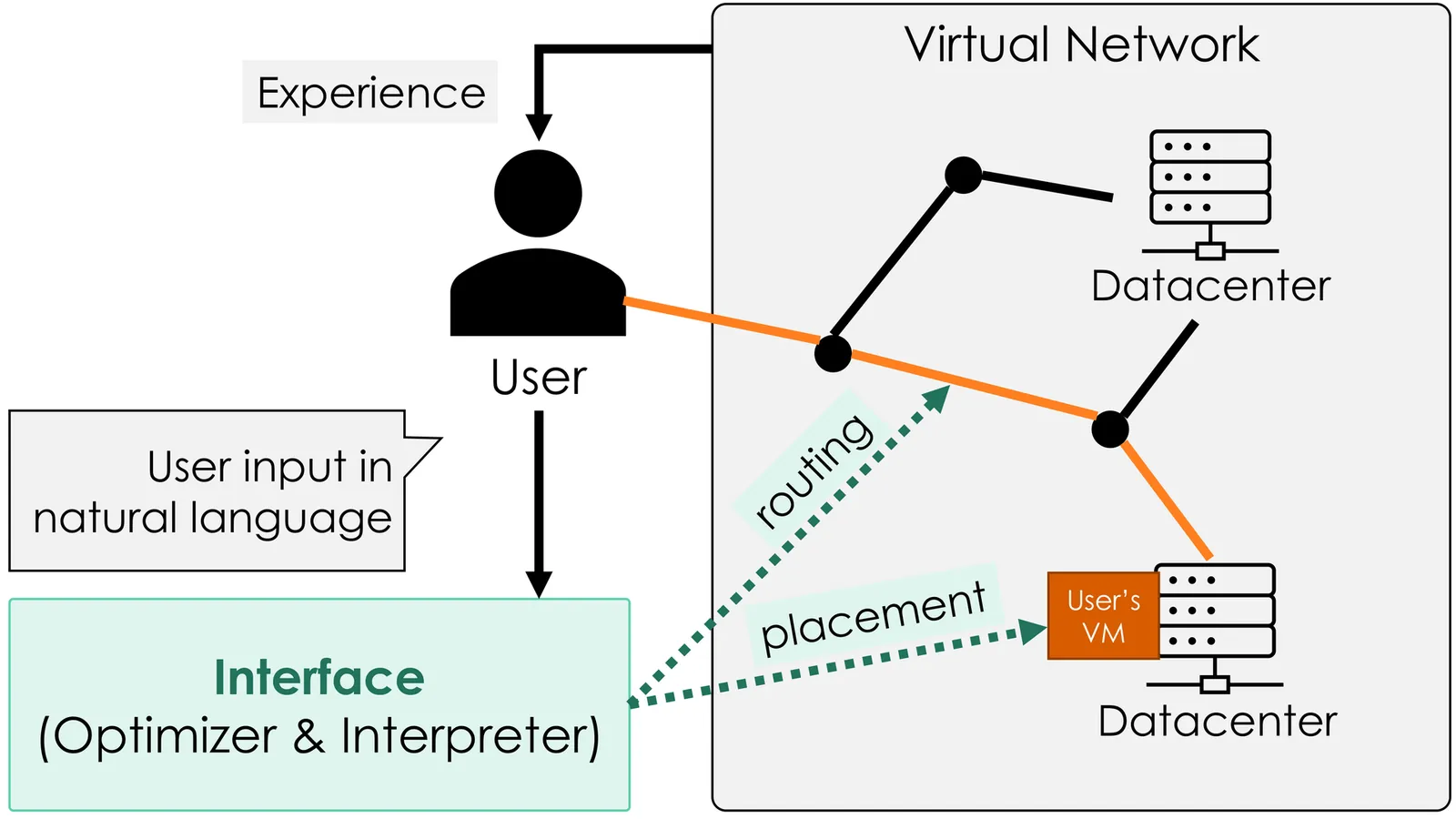

This paper proposes a chat-driven network management framework that integrates natural language processing (NLP) with optimization-based virtual network allocation, enabling intuitive and reliable reconfiguration of virtual network services. Conventional intent-based networking (IBN) methods depend on statistical language models to interpret user intent but cannot guarantee the feasibility of generated configurations. To overcome this, we develop a two-stage framework consisting of an Interpreter, which extracts intent from natural language prompts using NLP, and an Optimizer, which computes feasible virtual machine (VM) placement and routing via an integer linear programming. In particular, the Interpreter translates user chats into update directions, i.e., whether to increase, decrease, or maintain parameters such as CPU demand and latency bounds, thereby enabling iterative refinement of the network configuration. In this paper, two intent extractors, which are a Sentence-BERT model with support vector machine (SVM) classifiers and a large language model (LLM), are introduced. Experiments in single-user and multi-user settings show that the framework dynamically updates VM placement and routing while preserving feasibility. The LLM-based extractor achieves higher accuracy with fewer labeled samples, whereas the Sentence-BERT with SVM classifiers provides significantly lower latency suitable for real-time operation. These results underscore the effectiveness of combining NLP-driven intent extraction with optimization-based allocation for safe, interpretable, and user-friendly virtual network management.
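The notion of "update directions" in this abstract can be pictured with a small sketch. Everything here is assumed for illustration: the `Direction` enum, the `apply_directions` helper, the parameter names, and the fixed multiplicative step are not taken from the paper, which does not state how the magnitude of an update is chosen or how the Optimizer consumes the result.

```python
from enum import Enum


class Direction(Enum):
    """Hypothetical encoding of the Interpreter's per-parameter output."""
    INCREASE = 1
    MAINTAIN = 0
    DECREASE = -1


def apply_directions(params, directions, step=0.1):
    """Scale each parameter by (1 +/- step) according to its direction.

    Parameters without an entry in `directions` are left unchanged.
    The updated values would then be handed to an optimizer that checks
    feasibility; that stage is out of scope for this sketch.
    """
    updated = {}
    for name, value in params.items():
        direction = directions.get(name, Direction.MAINTAIN)
        updated[name] = value * (1 + step * direction.value)
    return updated


# Example: a chat message asking for more CPU and a tighter latency bound.
current = {"cpu_demand": 2.0, "latency_bound_ms": 50.0, "bandwidth_mbps": 100.0}
requested = {"cpu_demand": Direction.INCREASE,
             "latency_bound_ms": Direction.DECREASE}
new_params = apply_directions(current, requested)
```

Restricting the language stage to a direction per parameter keeps its output space tiny and easy to validate, and leaves all feasibility decisions to the optimization stage.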

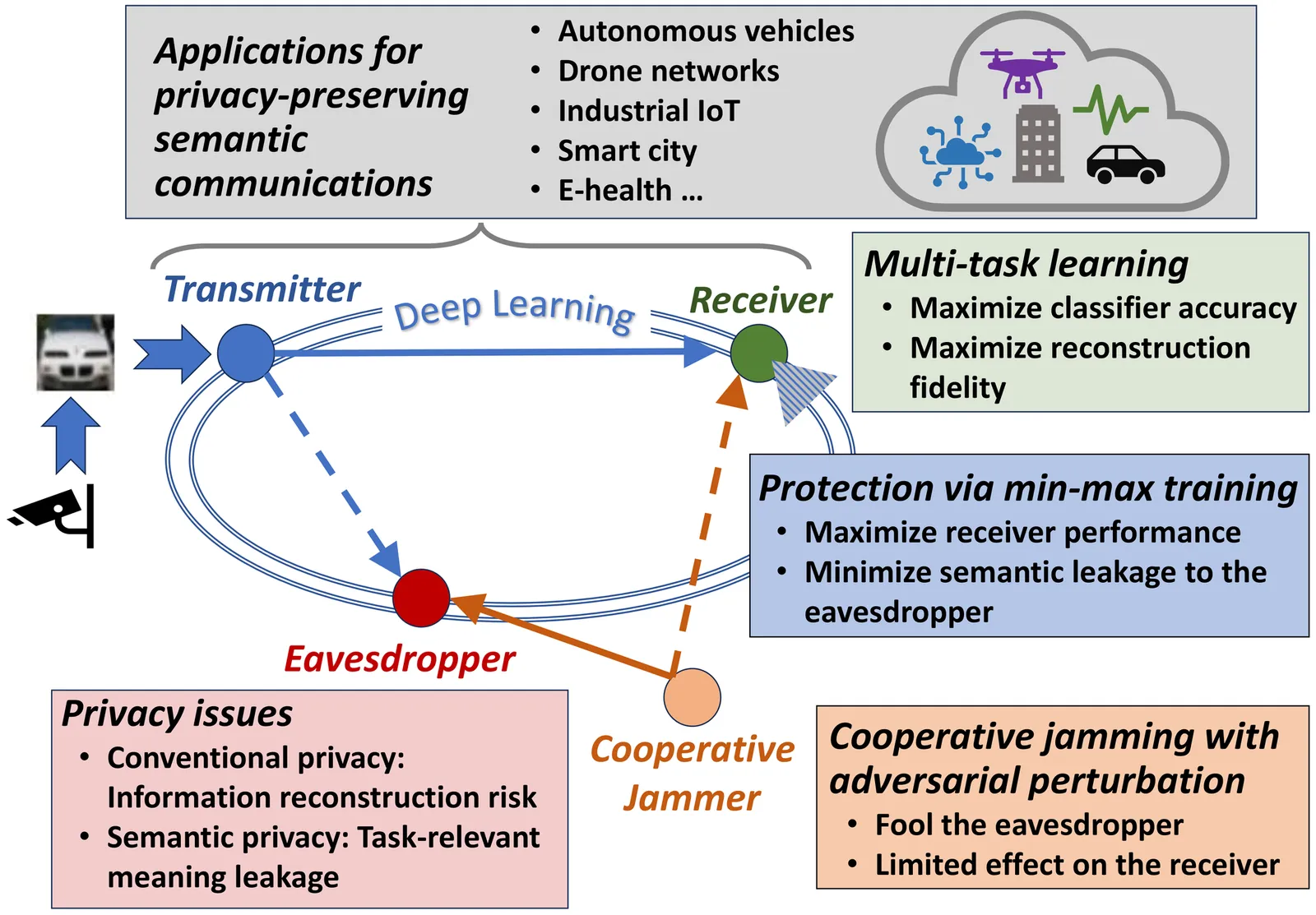

Semantic communication conveys task-relevant meaning rather than focusing solely on message reconstruction, improving bandwidth efficiency and robustness for next-generation wireless systems. However, learned semantic representations can still leak sensitive information to unintended receivers (eavesdroppers). This paper presents a deep learning-based semantic communication framework that jointly supports multiple receiver tasks while explicitly limiting semantic leakage to an eavesdropper. The legitimate link employs a learned encoder at the transmitter, while the receiver trains decoders for semantic inference and data reconstruction. The security problem is formulated via an iterative min-max optimization in which an eavesdropper is trained to improve its semantic inference, while the legitimate transmitter-receiver pair is trained to preserve task performance while reducing the eavesdropper's success. We also introduce an auxiliary layer that superimposes a cooperative, adversarially crafted perturbation on the transmitted waveform to degrade semantic leakage to an eavesdropper. Performance is evaluated over Rayleigh fading channels with additive white Gaussian noise using MNIST and CIFAR-10 datasets. Semantic accuracy and reconstruction quality improve with increasing latent dimension, while the min-max mechanism reduces the eavesdropper's inference performance significantly without degrading the legitimate receiver. The perturbation layer is successful in reducing semantic leakage even when the legitimate link is trained only for its own task. This comprehensive framework motivates semantic communication designs with tunable, end-to-end privacy against adaptive adversaries in realistic wireless settings.

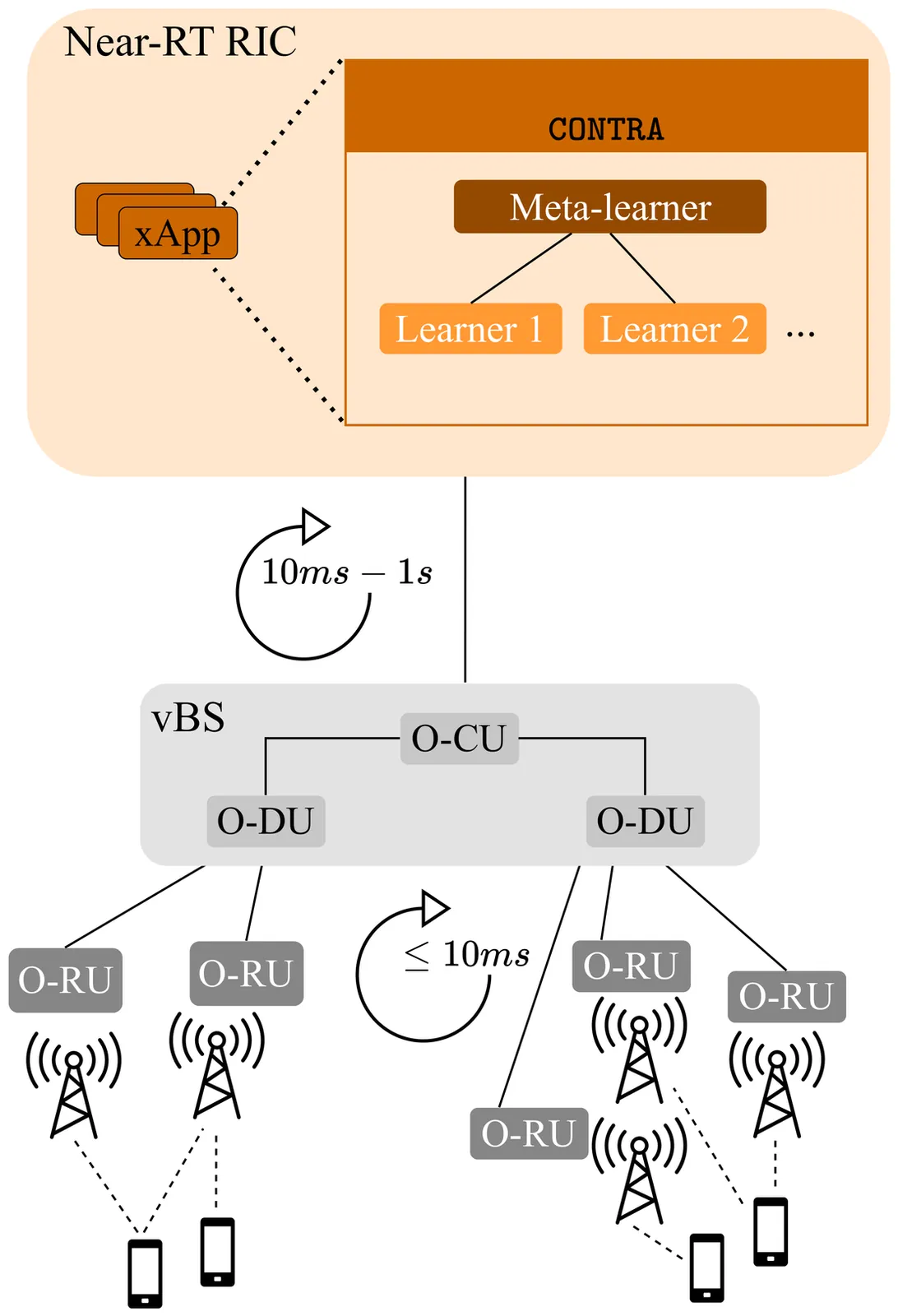

While traditional handovers (THOs) have served as a backbone for mobile connectivity, they increasingly suffer from failures and delays, especially in dense deployments and high-frequency bands. To address these limitations, 3GPP introduced Conditional Handovers (CHOs) that enable proactive cell reservations and user-driven execution. However, both handover (HO) types present intricate trade-offs in signaling, resource usage, and reliability. This paper presents unique, countrywide mobility management datasets from a top-tier mobile network operator (MNO) that offer fresh insights into these issues and call for adaptive and robust HO control in next-generation networks. Motivated by these findings, we propose CONTRA, a framework that, for the first time, jointly optimizes THOs and CHOs within the O-RAN architecture. We study two variants of CONTRA: one where users are a priori assigned to one of the HO types, reflecting distinct service or user-specific requirements, as well as a more dynamic formulation where the controller decides on-the-fly the HO type, based on system conditions and needs. To this end, it relies on a practical meta-learning algorithm that adapts to runtime observations and guarantees performance comparable to an oracle with perfect future information (universal no-regret). CONTRA is specifically designed for near-real-time deployment as an O-RAN xApp and aligns with the 6G goals of flexible and intelligent control. Extensive evaluations leveraging crowdsourced datasets show that CONTRA improves user throughput and reduces both THO and CHO switching costs, outperforming 3GPP-compliant and Reinforcement Learning (RL) baselines in dynamic and real-world scenarios.

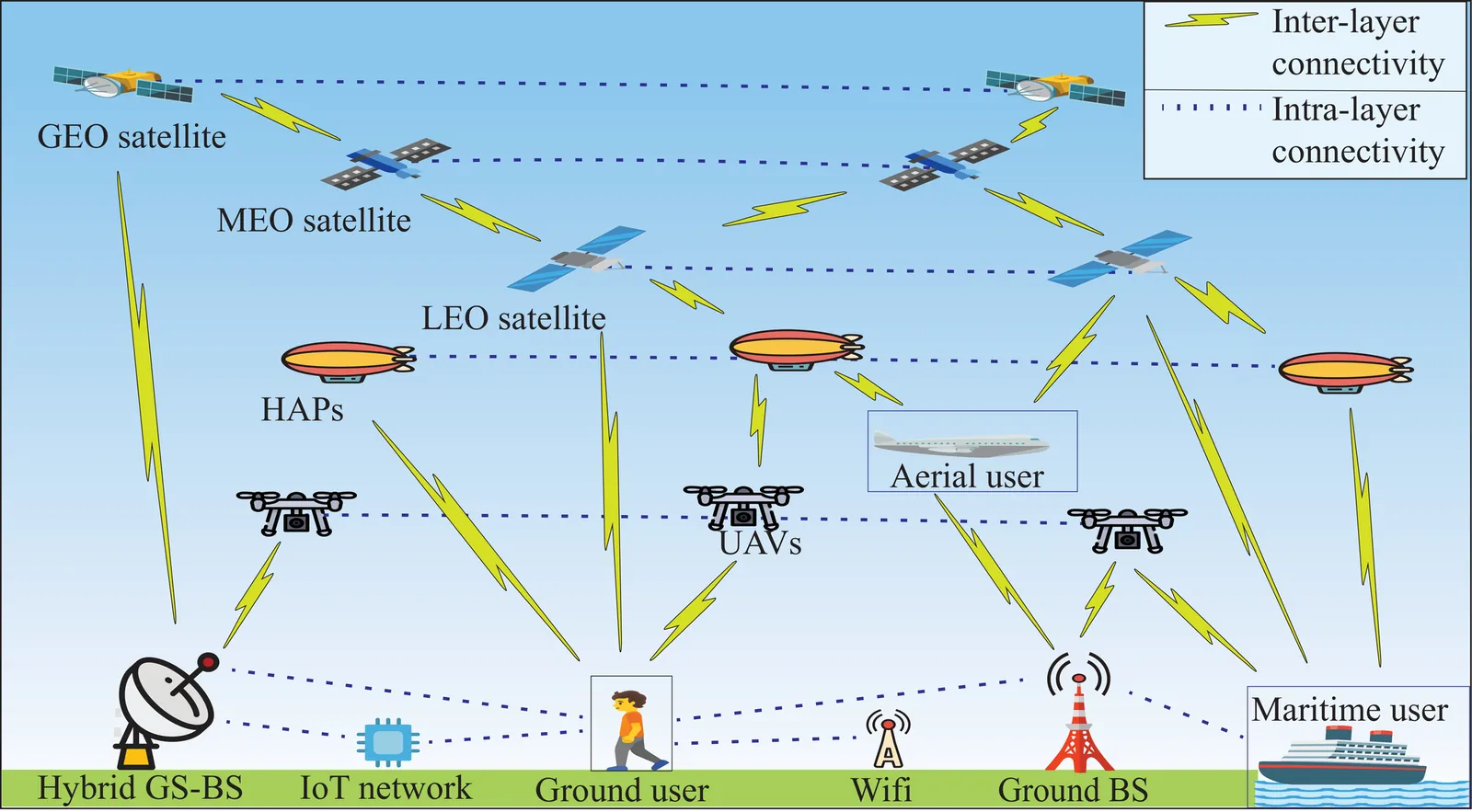

Space-air-ground-integrated network (SAGIN)-enabled multiconnectivity (MC) is emerging as a key enabler for next-generation networks, enabling users to simultaneously utilize multiple links across multi-layer non-terrestrial networks (NTN) and multi-radio access technology (multi-RAT) terrestrial networks (TN). However, the heterogeneity of TN and NTN introduces complex architectural challenges that complicate MC implementation. Specifically, the diversity of link types, spanning air-to-air, air-to-space, space-to-space, space-to-ground, and ground-to-ground communications, renders optimal resource allocation highly complex. Recent advancements in reinforcement learning (RL) and agentic artificial intelligence (AI) have shown remarkable effectiveness in optimal decision-making in complex and dynamic environments. In this paper, we review the current developments in SAGIN-enabled MC and outline the key challenges associated with its implementation. We further highlight the transformative potential of AI-driven approaches for resource optimization in a heterogeneous SAGIN environment. To this end, we present a case study on resource allocation optimization enabled by agentic RL for SAGIN-enabled MC involving diverse radio access technologies (RATs). Results show that learning-based methods can effectively handle complex scenarios and substantially enhance network performance in terms of latency and capacity while incurring a moderate increase in power consumption as an acceptable tradeoff. Finally, open research problems and future directions are presented to realize efficient SAGIN-enabled MC.

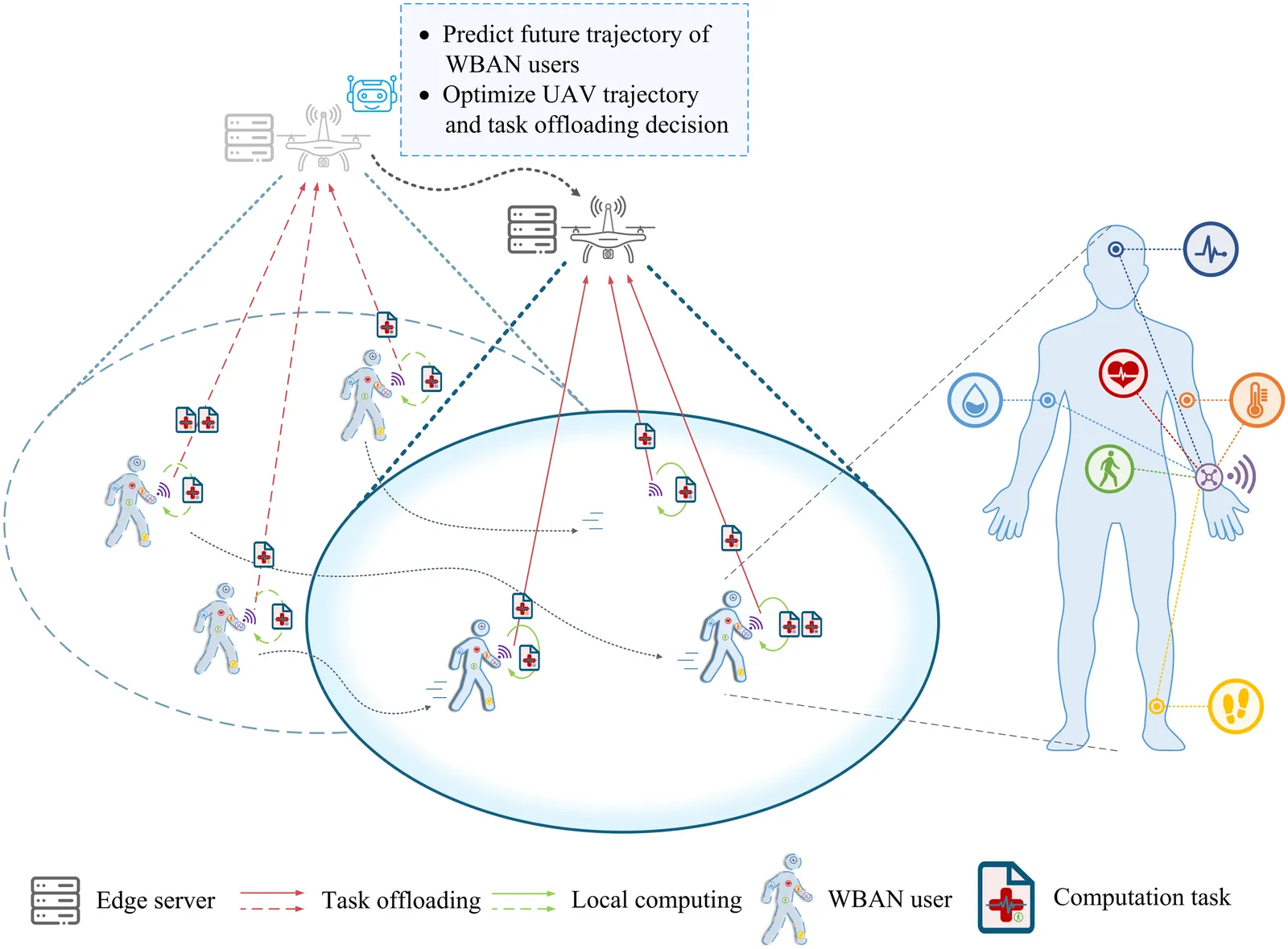

Due to their inherent flexibility and autonomous operation, unmanned aerial vehicles (UAVs) have been widely used in the Internet of Medical Things (IoMT) to provide real-time biomedical edge computing services for wireless body area network (WBAN) users. In this paper, considering the time-varying task criticality characteristics of diverse WBAN users and the dual mobility of WBAN users and the UAV, we investigate the dynamic task offloading and UAV flight trajectory optimization problem to minimize the weighted average task completion time of all the WBAN users, under the constraint of UAV energy consumption. To tackle the problem, an embodied AI-enhanced IoMT edge computing framework is established. Specifically, we propose a novel hierarchical multi-scale Transformer-based user trajectory prediction model based on the users' historical trajectory traces captured by the embodied AI agent (i.e., the UAV). Afterwards, a prediction-enhanced deep reinforcement learning (DRL) algorithm that integrates predicted user mobility information is designed for intelligently optimizing UAV flight trajectory and task offloading decisions. Real-world movement traces and simulation results demonstrate the superiority of the proposed methods in comparison with the existing benchmarks.

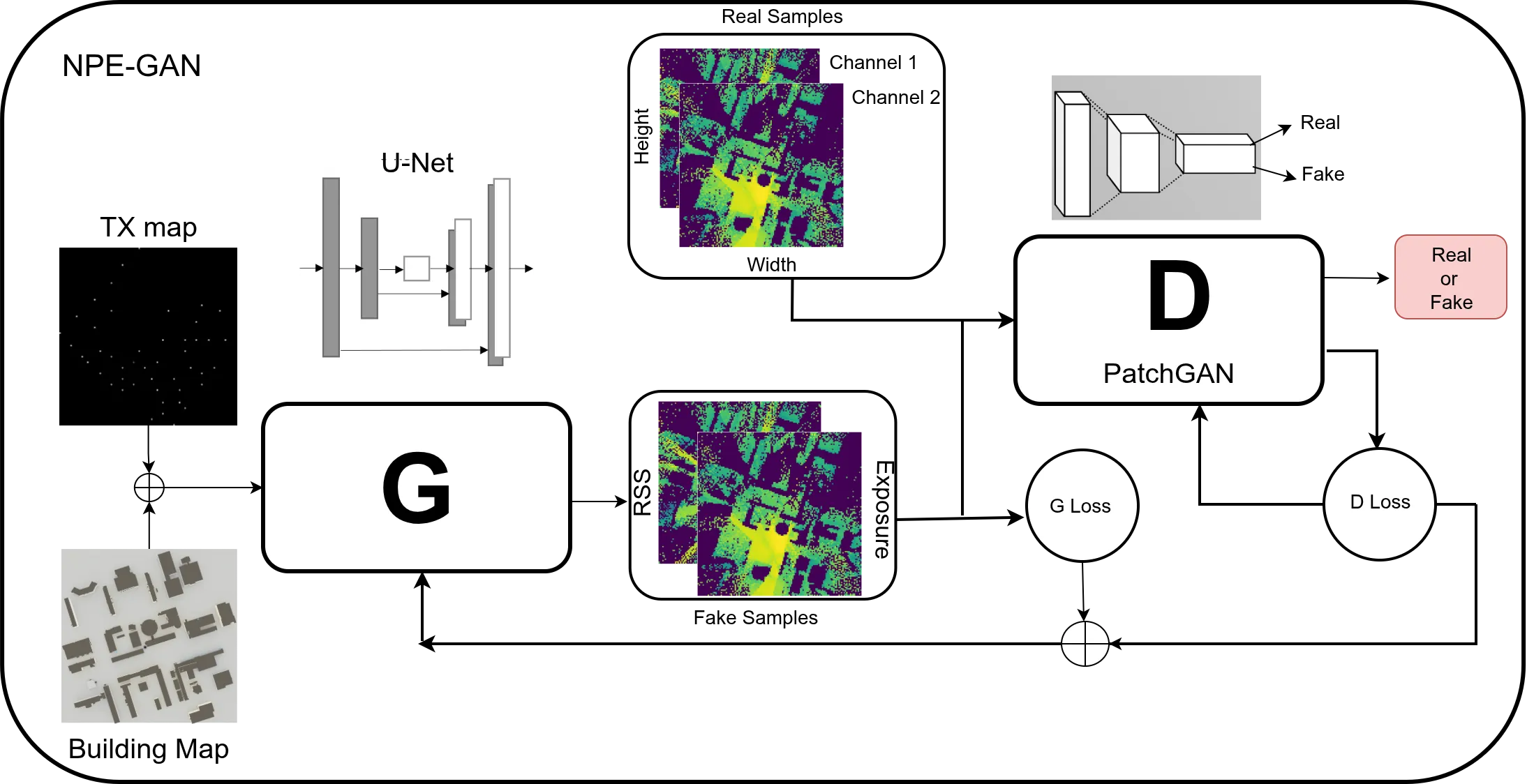

As 5G networks rapidly expand and 6G technologies emerge, characterized by dense deployments, millimeter-wave communications, and dynamic beamforming, the need for scalable simulation tools becomes increasingly critical. These tools must support efficient evaluation of key performance metrics such as coverage and radio-frequency electromagnetic field (RF-EMF) exposure, inform network design decisions, and ensure compliance with safety regulations. Moreover, base station (BS) placement is a crucial task in network design, where satisfying coverage requirements is essential. To address these needs, and building on our previous work, we first propose a conditional generative adversarial network (cGAN) that simultaneously predicts location-specific received signal strength (RSS) and EMF exposure from the network topology, as images. As a network design application, we propose a Deep Q-Network (DQN) framework that uses the trained cGAN for optimal BS deployment in the network. Compared to conventional ray tracing simulations, the proposed cGAN reduces inference and deployment time from several hours to seconds. Unlike a standalone cGAN, which provides static performance maps, the proposed GAN-DQN framework enables sequential decision making under coverage and exposure constraints, learning effective deployment strategies that directly solve the BS placement problem. This makes it well suited for real-time design and adaptation in dynamic scenarios to satisfy predefined, network-specific, heterogeneous performance goals.

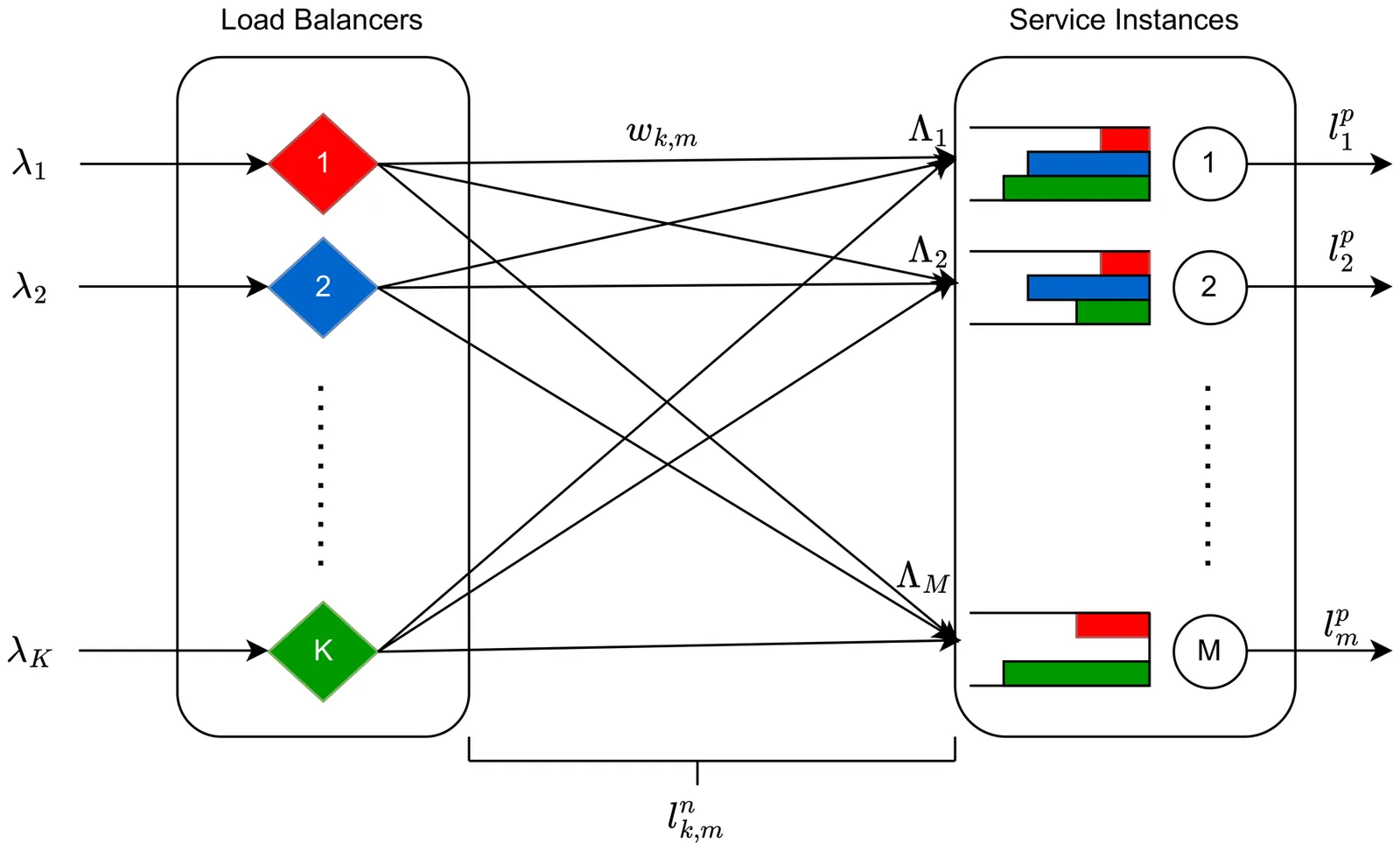

As computation shifts from the cloud to the edge to reduce processing latency and network traffic, the resulting Computing Continuum (CC) creates a dynamic environment where meeting strict Quality of Service (QoS) requirements and avoiding service instance overload becomes challenging. Existing methods often prioritize global metrics and overlook per-client QoS, which is crucial for latency-sensitive and reliability-critical applications. We propose QEdgeProxy, a decentralized QoS-aware load balancer that acts as a proxy between IoT devices and service instances in the CC. We formulate the load balancing problem as a Multi-Player Multi-Armed Bandit (MP-MAB) with heterogeneous rewards: Each load balancer autonomously selects service instances to maximize the probability of meeting its clients' QoS requirements by using Kernel Density Estimation (KDE) to estimate QoS success probabilities. Our load-balancing algorithm also incorporates an adaptive exploration mechanism to recover rapidly from performance shifts and non-stationary conditions. We present a Kubernetes-native QEdgeProxy implementation and evaluate it on an emulated CC testbed deployed on a K3s cluster with realistic network conditions and a latency-sensitive edge-AI workload. Results show that QEdgeProxy significantly outperforms proximity-based and reinforcement-learning baselines in per-client QoS satisfaction, while adapting effectively to load surges and changes in instance availability.
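The KDE step described in this abstract, estimating the probability that an instance meets a QoS requirement, can be sketched as follows. This is a generic Gaussian-kernel estimate and not the QEdgeProxy implementation: the function names, the use of latency as the QoS metric, the normal-reference bandwidth rule, and the greedy choice in `best_instance` (which omits the adaptive exploration the abstract mentions) are all assumptions.

```python
import math
import statistics


def kde_success_probability(latencies_ms, deadline_ms, bandwidth=None):
    """Estimate P(latency <= deadline) from samples with a Gaussian KDE.

    The CDF of a Gaussian KDE is the average of one normal CDF per sample,
    each centred on that sample with standard deviation `bandwidth`.
    """
    n = len(latencies_ms)
    if n == 0:
        raise ValueError("need at least one latency sample")
    if bandwidth is None:
        # Normal-reference rule of thumb; fall back to 1.0 if all samples match.
        sigma = statistics.pstdev(latencies_ms)
        bandwidth = 1.06 * sigma * n ** (-0.2) if sigma > 0 else 1.0
    scale = bandwidth * math.sqrt(2)
    total = sum(0.5 * (1 + math.erf((deadline_ms - x) / scale))
                for x in latencies_ms)
    return total / n


def best_instance(history, deadline_ms, bandwidth=None):
    """Greedily pick the instance with the highest estimated success probability."""
    return max(history,
               key=lambda name: kde_success_probability(
                   history[name], deadline_ms, bandwidth))


# Hypothetical per-instance latency histories (milliseconds).
history = {
    "edge-a": [10.0, 12.0, 11.0, 13.0, 50.0],   # usually fast, one outlier
    "edge-b": [18.0, 25.0, 30.0, 22.0, 27.0],   # mostly above a 20 ms deadline
}
choice = best_instance(history, deadline_ms=20.0, bandwidth=1.0)
```

Working with the estimated probability of meeting the deadline, rather than the mean latency, matches the per-client QoS framing: an instance with a low average but a heavy tail can still score poorly.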

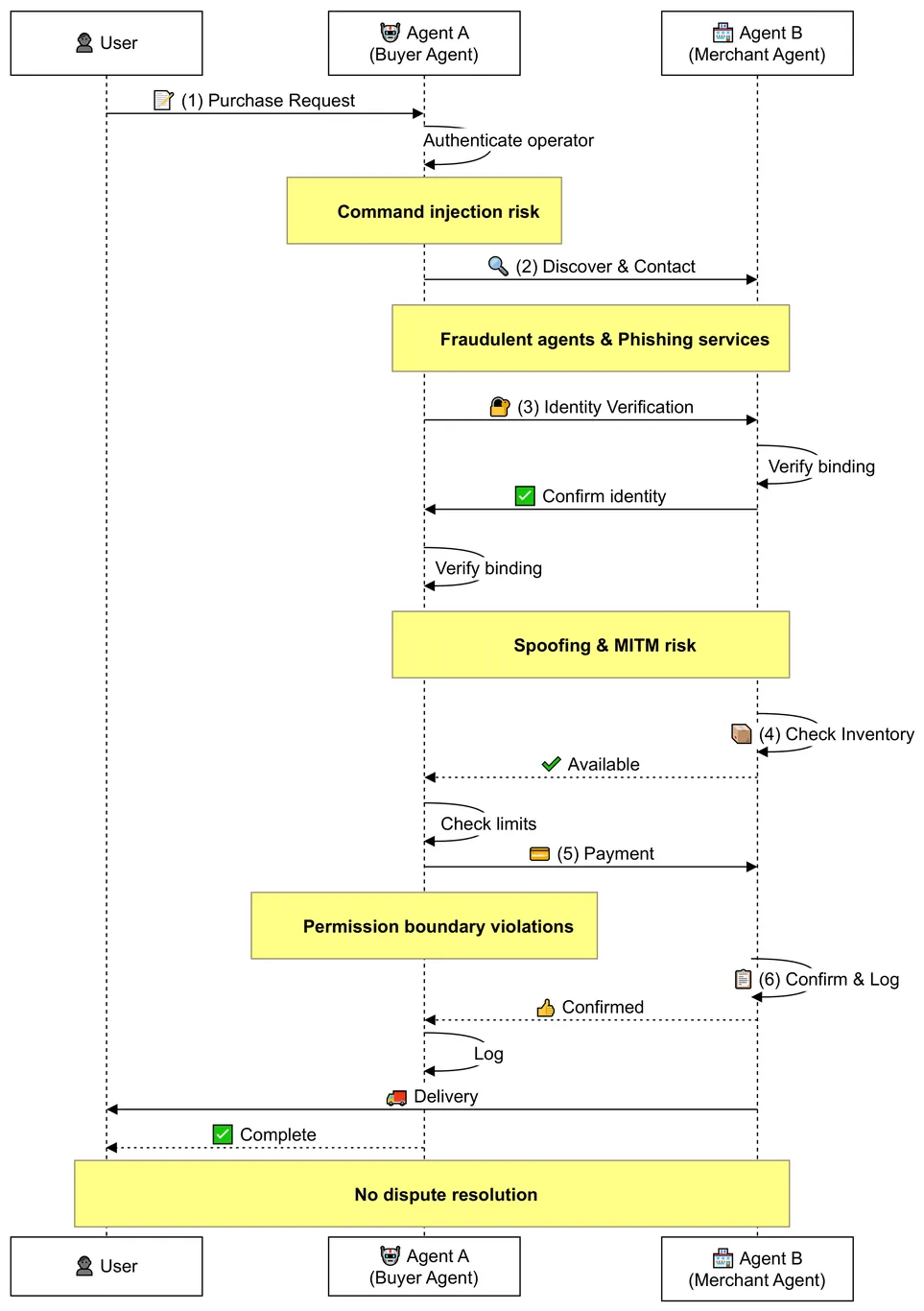

Autonomous AI agents lack traceable accountability mechanisms, creating a fundamental dilemma where systems must either operate as "downgraded tools" or risk real-world abuse. This vulnerability stems from the limitations of traditional key-based authentication, which guarantees neither the operator's physical identity nor the agent's code integrity. To bridge this gap, we propose BAID (Binding Agent ID), a comprehensive identity infrastructure establishing verifiable user-code binding. BAID integrates three orthogonal mechanisms: local binding via biometric authentication, decentralized on-chain identity management, and a novel zkVM-based Code-Level Authentication protocol. By leveraging recursive proofs to treat the program binary as the identity, this protocol provides cryptographic guarantees for operator identity, agent configuration integrity, and complete execution provenance, thereby effectively preventing unauthorized operation and code substitution. We implement and evaluate a complete prototype system, demonstrating the practical feasibility of blockchain-based identity management and zkVM-based authentication protocol.

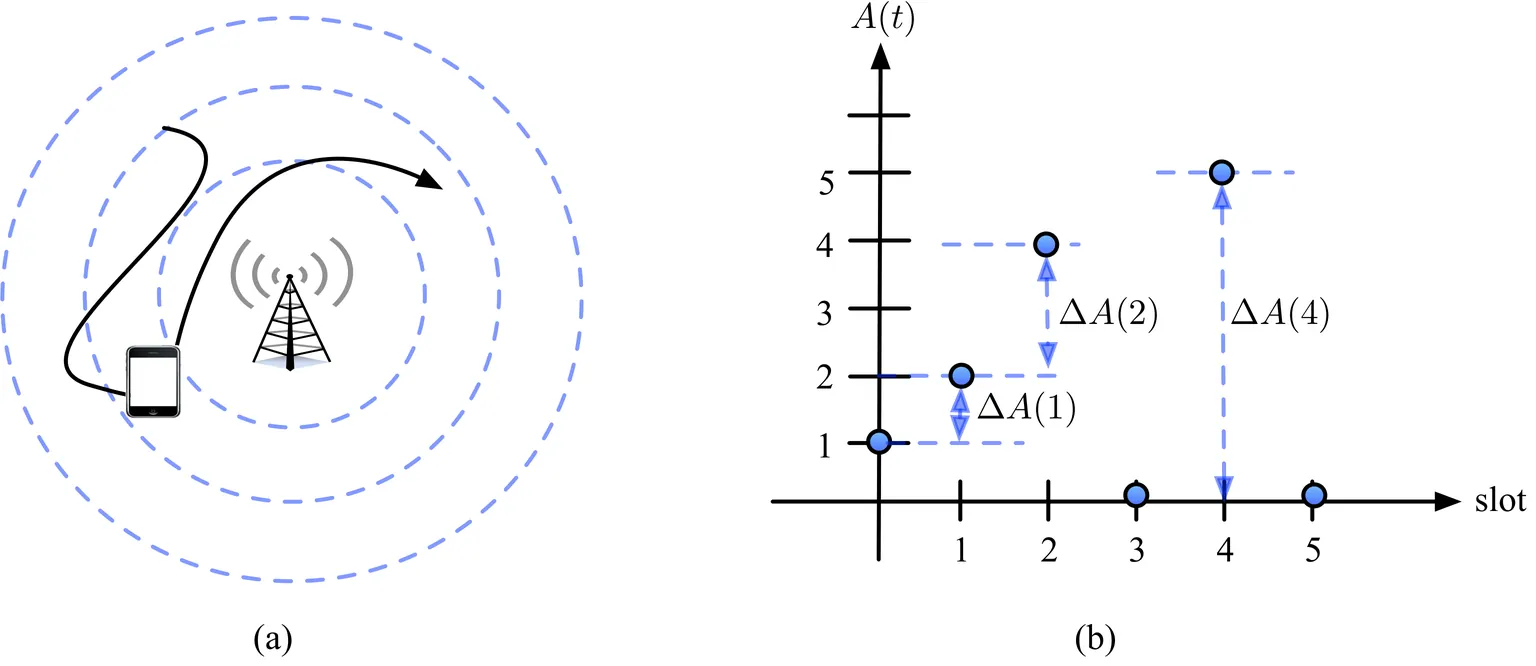

This paper investigates an information update system in which a mobile device monitors a physical process and sends status updates to an access point (AP). A fundamental trade-off arises between the timeliness of the information maintained at the AP and the update cost incurred at the device. To address this trade-off, we propose an online algorithm that determines when to transmit updates using only available observations. The proposed algorithm asymptotically achieves the optimal competitive ratio against an adversary that can simultaneously manipulate multiple sources of uncertainty, including the operation duration, the information staleness, the update cost, and the availability of update opportunities. Furthermore, by incorporating machine learning (ML) advice of unknown reliability into the design, we develop an ML-augmented algorithm that asymptotically attains the optimal consistency-robustness trade-off, even when the adversary can additionally corrupt the ML advice. The optimal competitive ratio scales linearly with the range of update costs, but is unaffected by other uncertainties. Moreover, an optimal competitive online algorithm exhibits a threshold-like response to the ML advice: it either fully trusts or completely ignores the ML advice, as partially trusting the advice cannot improve the consistency without severely degrading the robustness. Extensive simulations in stochastic settings further validate the theoretical findings in the adversarial environment.

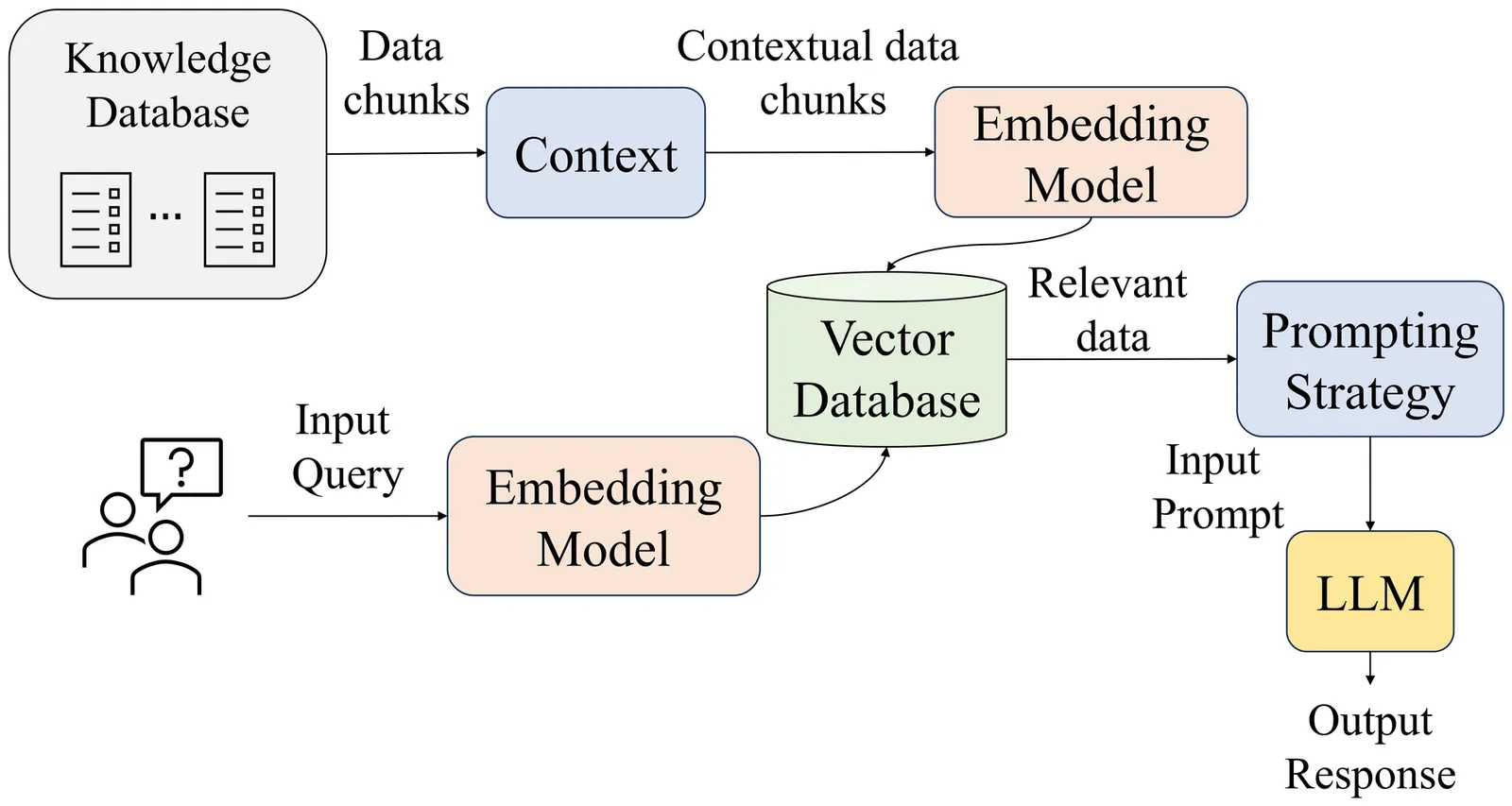

We present a retrieval-augmented question answering framework for 5G/6G networks, where the Open Radio Access Network (O-RAN) has become central to disaggregated, virtualized, and AI-driven wireless systems. While O-RAN enables multi-vendor interoperability and cloud-native deployments, its fast-changing specifications and interfaces pose major challenges for researchers and practitioners. Manual navigation of these complex documents is labor-intensive and error-prone, slowing system design, integration, and deployment. To address this challenge, we adopt Contextual Retrieval-Augmented Generation (Contextual RAG), a strategy in which candidate answer choices guide document retrieval and chunk-specific context to improve large language model (LLM) performance. This improvement over traditional RAG achieves more targeted and context-aware retrieval, which improves the relevance of documents passed to the LLM, particularly when the query alone lacks sufficient context for accurate grounding. Our framework is designed for dynamic domains where data evolves rapidly and models must be continuously updated or redeployed, all without requiring LLM fine-tuning. We evaluate this framework using the ORANBenchmark-13K dataset, and compare three LLMs, namely, Llama3.2, Qwen2.5-7B, and Qwen3.0-4B, across both Direct Question Answering (Direct Q&A) and Chain-of-Thought (CoT) prompting strategies. We show that Contextual RAG consistently improves accuracy over standard RAG and base prompting, while maintaining competitive runtime and CO2 emissions. These results highlight the potential of Contextual RAG to serve as a scalable and effective solution for domain-specific Q&A in ORAN and broader 5G/6G environments, enabling more accurate interpretation of evolving standards while preserving efficiency and sustainability.

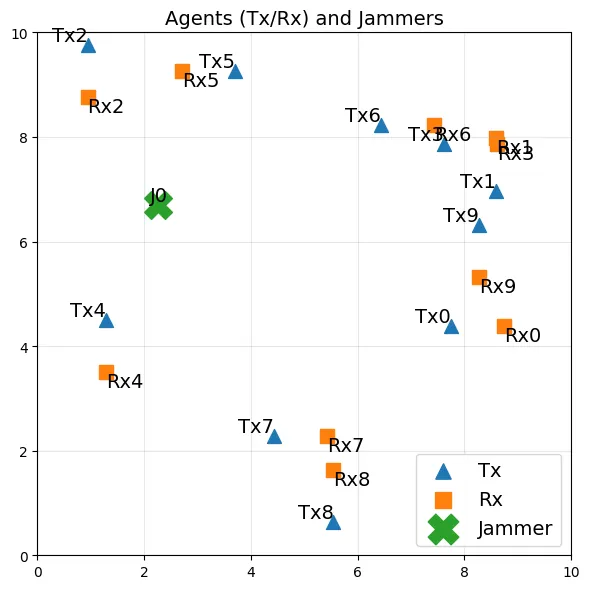

Reactive jammers pose a severe security threat to robotic-swarm networks by selectively disrupting inter-agent communications and undermining formation integrity and mission success. Conventional countermeasures such as fixed power control or static channel hopping are largely ineffective against such adaptive adversaries. This paper presents a multi-agent reinforcement learning (MARL) framework based on the QMIX algorithm to improve the resilience of swarm communications under reactive jamming. We consider a network of multiple transmitter-receiver pairs sharing channels while a reactive jammer with Markovian threshold dynamics senses aggregate power and reacts accordingly. Each agent jointly selects transmit frequency (channel) and power, and QMIX learns a centralized but factorizable action-value function that enables coordinated yet decentralized execution. We benchmark QMIX against a genie-aided optimal policy in a no-channel-reuse setting, and against local Upper Confidence Bound (UCB) and a stateless reactive policy in a more general fading regime with channel reuse enabled. Simulation results show that QMIX rapidly converges to cooperative policies that nearly match the genie-aided bound, while achieving higher throughput and lower jamming incidence than the baselines, thereby demonstrating MARL's effectiveness for securing autonomous swarms in contested environments.
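For readers unfamiliar with the UCB baseline named in this abstract, the sketch below shows the textbook UCB1 rule applied to channel selection by a single agent. It is not the paper's baseline or its QMIX method: the class name, the exploration constant `c`, the reward definition, and the restriction to channel choice only (the paper's agents also choose transmit power) are assumptions.

```python
import math


class UCB1ChannelSelector:
    """Textbook UCB1 over a fixed set of channels (one independent agent)."""

    def __init__(self, n_channels, c=2.0):
        self.counts = [0] * n_channels    # times each channel was used
        self.means = [0.0] * n_channels   # running mean reward per channel
        self.c = c                        # exploration constant (assumed)
        self.t = 0                        # total number of updates so far

    def select(self):
        # Try every channel once before applying the confidence bound.
        for ch, count in enumerate(self.counts):
            if count == 0:
                return ch
        return max(
            range(len(self.counts)),
            key=lambda ch: self.means[ch]
            + math.sqrt(self.c * math.log(self.t) / self.counts[ch]),
        )

    def update(self, ch, reward):
        self.t += 1
        self.counts[ch] += 1
        # Incremental mean update.
        self.means[ch] += (reward - self.means[ch]) / self.counts[ch]


# Toy environment: channel 1 always succeeds, the others are always jammed.
selector = UCB1ChannelSelector(n_channels=3)
for _ in range(200):
    channel = selector.select()
    selector.update(channel, reward=1.0 if channel == 1 else 0.0)
```

Because each agent running this rule learns only from its own rewards, it cannot coordinate channel reuse with its neighbors, which is the gap a jointly trained method such as QMIX is meant to close.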

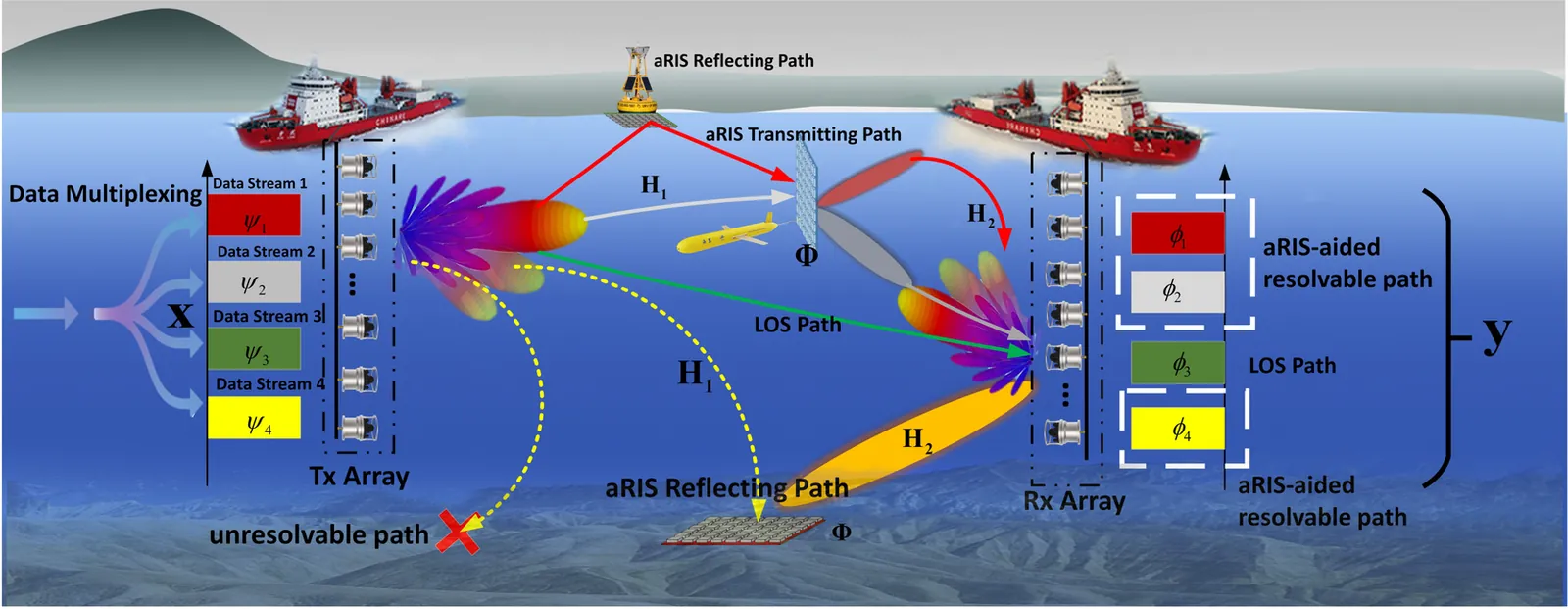

Underwater acoustic (UWA) communications are essential for high-speed marine data transmission but remain severely constrained by limited bandwidth, significant propagation loss, and sparse multipath structures. Conventional underwater acoustic multiple-input multiple-output (MIMO) systems primarily utilize spatial diversity but suffer from limited array resolution, causing angular ambiguity and insufficient spatial degrees of freedom (DoFs). This paper addresses these limitations through acoustic Reconfigurable Intelligent Surfaces (aRIS) to actively generate orthogonally distinguishable virtual paths, significantly enhancing spatial DoFs and channel capacity. An ocean-specific DoF-channel coupling model is established, explicitly deriving conditions for spatial rank enhancement. Subsequently, the optimal geometric locus, termed the Light-Point, is analytically identified, where deploying a single aRIS maximizes DoFs by introducing two and three additional resolvable paths in deep-sea and shallow-sea environments, respectively. Furthermore, an active simultaneous transmitting and reflecting (ASTAR) aRIS architecture with independent beam control and an adaptive beam-tracking mechanism integrating unmanned underwater vehicles (UUVs) and acoustic intensity gradient sensing is proposed. Extensive simulations validate the proposed joint aRIS deployment and beamforming framework, demonstrating substantial UWA channel capacity improvements of up to 265% and 170% in shallow-sea and deep-sea scenarios, respectively.

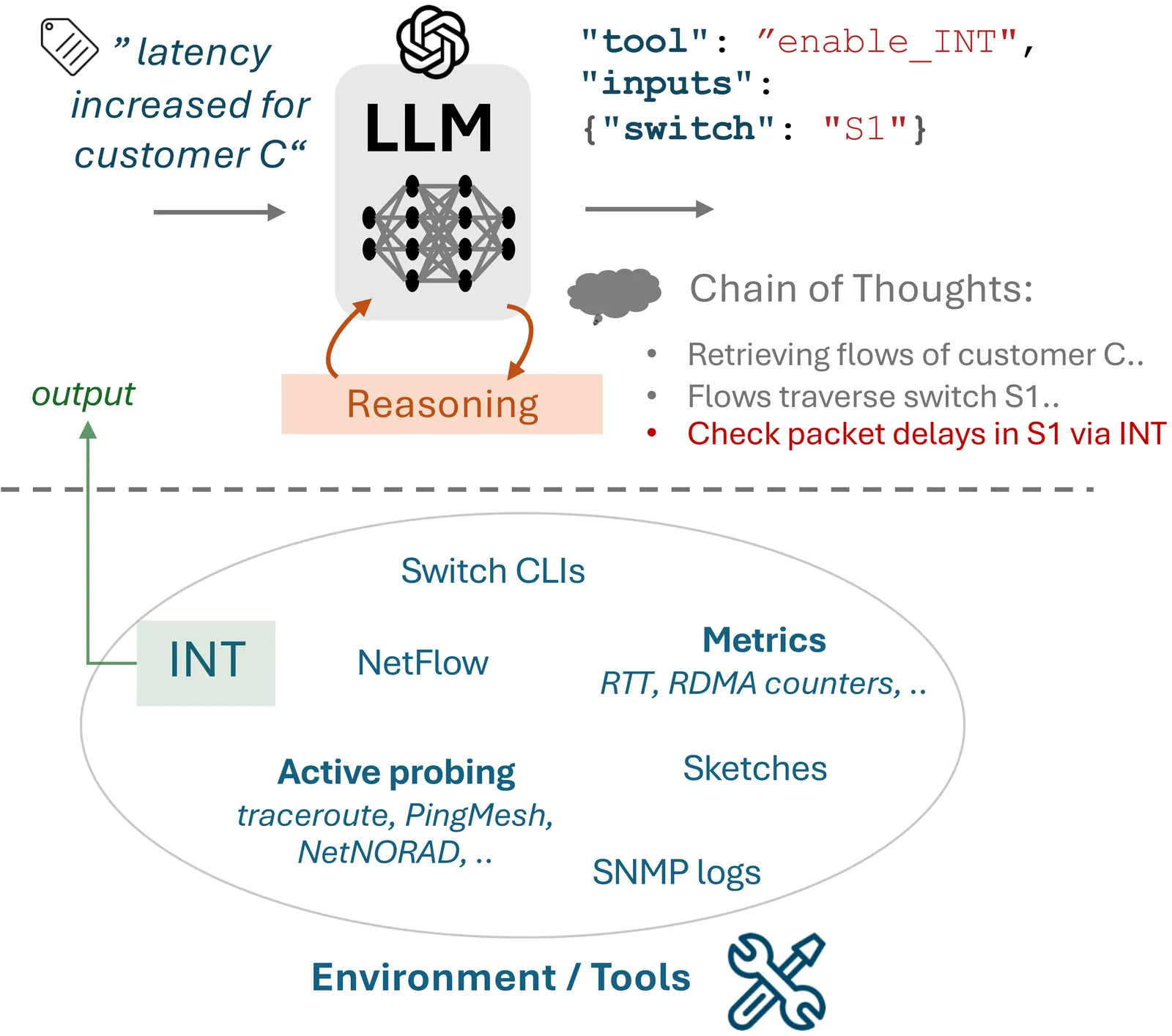

Agentic systems, powered by Large Language Models (LLMs), assist network engineers with network configuration synthesis and network troubleshooting tasks. For network troubleshooting, progress is hindered by the absence of standardized and accessible benchmarks for evaluating LLM agents in dynamic network settings at low operational effort. We present NIKA, the largest public benchmark to date for LLM-driven network incident diagnosis and troubleshooting. NIKA targets domain experts and, especially, AI researchers, providing zero-effort replay of real-world network scenarios and establishing well-defined agent-network interfaces for quick agent prototyping. NIKA comprises hundreds of curated network incidents, spanning five network scenarios, from data centers to ISP networks, and covers 54 representative network issues. Lastly, NIKA is modular and extensible by design, offering APIs to facilitate the integration of new network scenarios and failure cases. We evaluate state-of-the-art LLM agents on NIKA and find that while larger models succeed more often in detecting network issues, they still struggle to localize faults and identify root causes. NIKA is open-source and available to the community: https://github.com/sands-lab/nika.