Operating Systems

Covers all aspects of operating systems design and implementation.

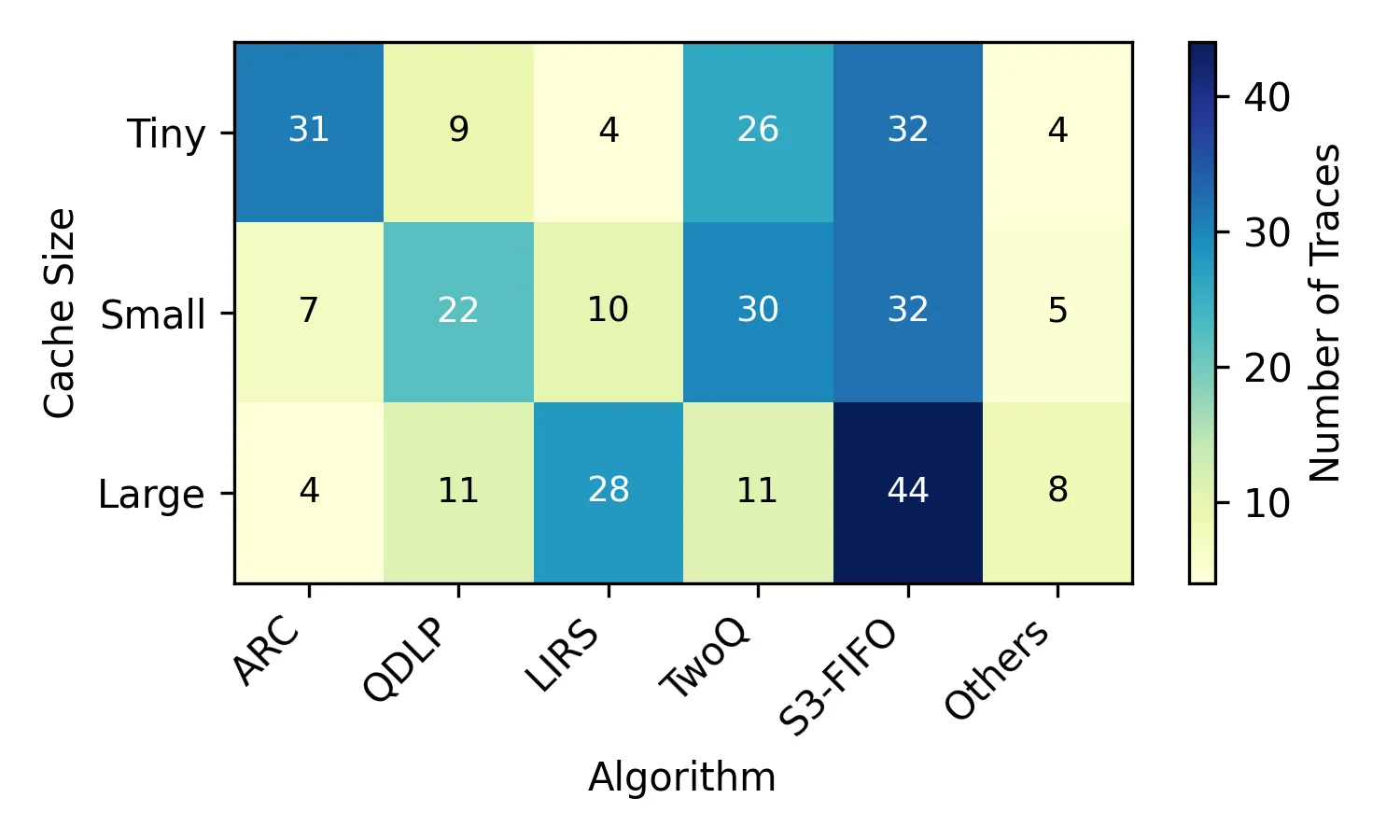

Resource-management tasks in modern operating and distributed systems continue to rely primarily on hand-designed heuristics for tasks such as scheduling, caching, or active queue management. Designing performant heuristics is an expensive, time-consuming process that we are forced to repeat continuously because hardware, workloads, and environments are in constant flux. We propose a new alternative: synthesizing instance-optimal heuristics -- specialized for the exact workloads and hardware where they will be deployed -- using code-generating large language models (LLMs). To make this synthesis tractable, our system, Vulcan, separates policy and mechanism through LLM-friendly, task-agnostic interfaces. With these interfaces, users specify the inputs and objectives of their desired policy, while Vulcan searches for performant policies via evolutionary search over LLM-generated code. This interface is expressive enough to capture a wide range of system policies, yet sufficiently constrained to allow even small, inexpensive LLMs to generate correct and executable code. We use Vulcan to synthesize performant heuristics for cache eviction and memory tiering, and find that these heuristics outperform all human-designed state-of-the-art algorithms by up to 69% and 7.9% in performance, respectively.
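The policy/mechanism split described in this abstract can be illustrated with a small sketch. Everything named here (`simulate`, `CANDIDATES`, `best_policy`, the metadata fields) is hypothetical and not taken from Vulcan: the mechanism is a fixed cache simulator, each policy is only a scoring function, and the "search" is reduced to picking the best of a few hand-written candidates on a trace. In the system described above, the candidates would instead be generated by an LLM and refined over multiple rounds.

```python
def simulate(trace, capacity, score):
    """Mechanism: a fixed-capacity cache that evicts the resident key with
    the lowest policy score. Returns the hit ratio on the given trace."""
    meta = {}  # key -> {"last": last access time, "count": access count}
    hits = 0
    for now, key in enumerate(trace):
        if key in meta:
            hits += 1
            meta[key]["count"] += 1
        else:
            if len(meta) >= capacity:
                victim = min(meta, key=lambda k: score(meta[k], now))
                del meta[victim]
            meta[key] = {"count": 1}
        meta[key]["last"] = now
    return hits / len(trace)


# Policies: pure scoring functions over per-object metadata (hypothetical examples).
CANDIDATES = {
    "lru": lambda m, now: m["last"],
    "lfu": lambda m, now: m["count"],
    "hybrid": lambda m, now: m["count"] / (1 + now - m["last"]),
}


def best_policy(trace, capacity, candidates):
    """Selection step of a search loop: score every candidate on the trace."""
    scores = {name: simulate(trace, capacity, fn) for name, fn in candidates.items()}
    return max(scores, key=scores.get), scores
```

Because a policy can only return a score, a faulty candidate can lower the hit ratio but cannot corrupt the cache's bookkeeping, which is one reason a constrained interface of this kind is easier to generate code for.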

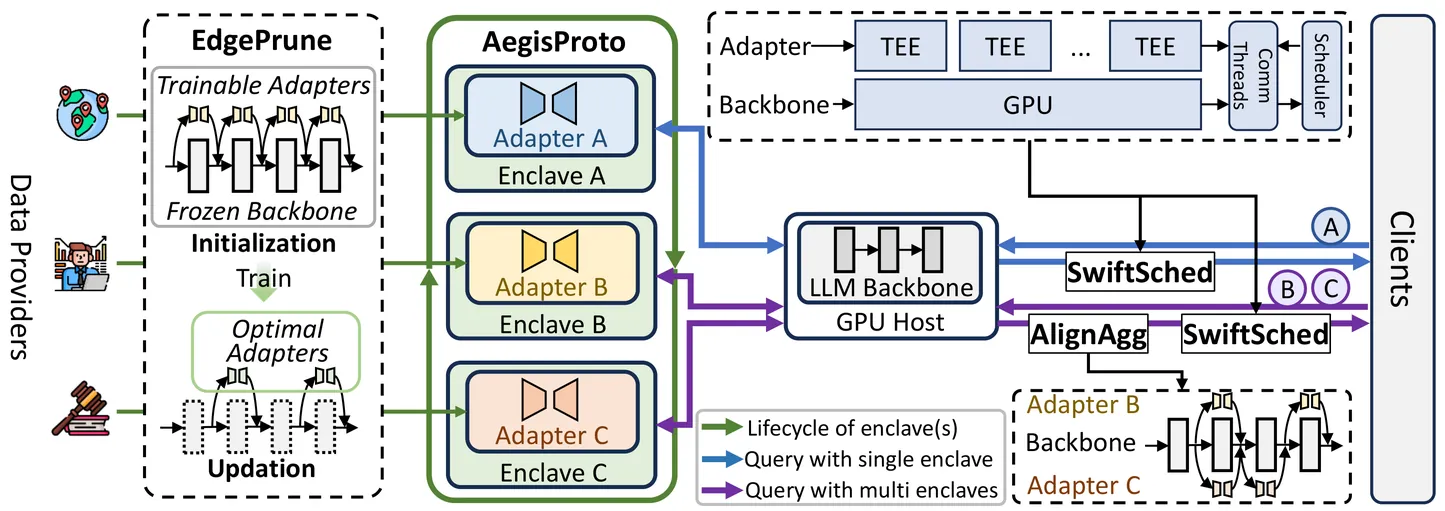

Future improvements in large language model (LLM) services increasingly hinge on access to high-value professional knowledge rather than more generic web data. However, the data providers of this knowledge face a skewed tradeoff between income and risk: they receive little share of downstream value yet retain copyright and privacy liability, making them reluctant to contribute their assets to LLM services. Existing techniques do not offer a trustworthy and controllable way to use professional knowledge, because they keep providers in the dark and combine knowledge parameters with the underlying LLM backbone. In this paper, we present PKUS, the Professional Knowledge Utilization System, which treats professional knowledge as a first-class, separable artifact. PKUS keeps the backbone model on GPUs and encodes each provider's contribution as a compact adapter that executes only inside an attested Trusted Execution Environment (TEE). A hardware-rooted lifecycle protocol, adapter pruning, multi-provider aggregation, and split-execution scheduling together make this design practical at serving time. On SST-2, MNLI, and SQuAD with GPT-2 Large and Llama-3.2-1B, PKUS preserves model utility, matching the accuracy and F1 of full fine-tuning and plain LoRA, while achieving the lowest per-request latency with 8.1-11.9x speedup over CPU-only TEE inference and naive CPU-GPU co-execution.

Reusing KV cache is essential for high efficiency of Large Language Model (LLM) inference systems. With more LLM users, the KV cache footprint can easily exceed GPU memory capacity, so prior work has proposed either evicting KV cache to lower-tier storage devices or compressing KV cache so that more of it fits in fast memory. However, prior work misses an important opportunity: jointly optimizing the eviction and compression decisions across all KV caches to minimize average generation latency without hurting quality. We propose EVICPRESS, a KV-cache management system that applies lossy compression and adaptive eviction to KV cache across multiple storage tiers. Specifically, for each KV cache of a context, EVICPRESS considers the effect of compression and eviction of the KV cache on the average generation quality and delay across all contexts as a whole. To achieve this, EVICPRESS proposes a unified utility function that quantifies the effect of lossy compression or eviction on quality and delay. EVICPRESS's profiling module periodically updates the utility function scores on all possible eviction-compression configurations for all contexts and places KV caches using a fast heuristic to rearrange KV caches on all storage tiers, with the goal of maximizing the utility function scores on each storage tier. Compared to the baselines that evict KV cache or compress KV cache, EVICPRESS achieves higher KV-cache hit rates on fast devices, i.e., lower delay, while preserving high generation quality by applying conservative compression to contexts that are sensitive to compression errors. Evaluation on 12 datasets and 5 models demonstrates that EVICPRESS achieves up to 2.19x faster time-to-first-token (TTFT) at equivalent generation quality.

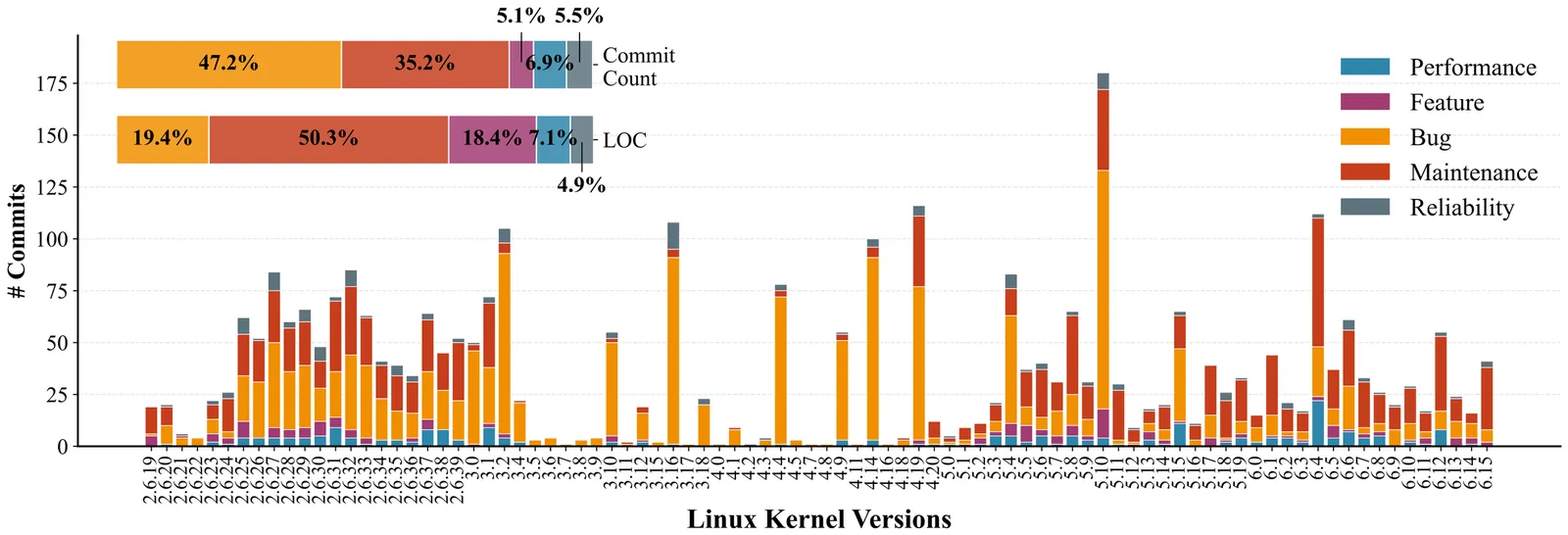

File systems are critical OS components that require constant evolution to support new hardware and emerging application needs. However, the traditional paradigm of developing features, fixing bugs, and maintaining the system incurs significant overhead, especially as systems grow in complexity. This paper proposes a new paradigm, generative file systems, which leverages Large Language Models (LLMs) to generate and evolve a file system from prompts, effectively addressing the need for robust evolution. Despite the widespread success of LLMs in code generation, attempts to create a functional file system have thus far been unsuccessful, mainly due to the ambiguity of natural language prompts. This paper introduces SYSSPEC, a framework for developing generative file systems. Its key insight is to replace ambiguous natural language with principles adapted from formal methods. Instead of imprecise prompts, SYSSPEC employs a multi-part specification that accurately describes a file system's functionality, modularity, and concurrency. The specification acts as an unambiguous blueprint, guiding LLMs to generate expected code flexibly. To manage evolution, we develop a DAG-structured patch that operates on the specification itself, enabling new features to be added without violating existing invariants. Moreover, the SYSSPEC toolchain features a set of LLM-based agents with mechanisms to mitigate hallucination during construction and evolution. We demonstrate our approach by generating SPECFS, a concurrent file system. SPECFS passes hundreds of regression tests, matching a manually-coded baseline. We further confirm its evolvability by seamlessly integrating 10 real-world features from Ext4. Our work shows that a specification-guided approach makes generating and evolving complex systems not only feasible but also highly effective.

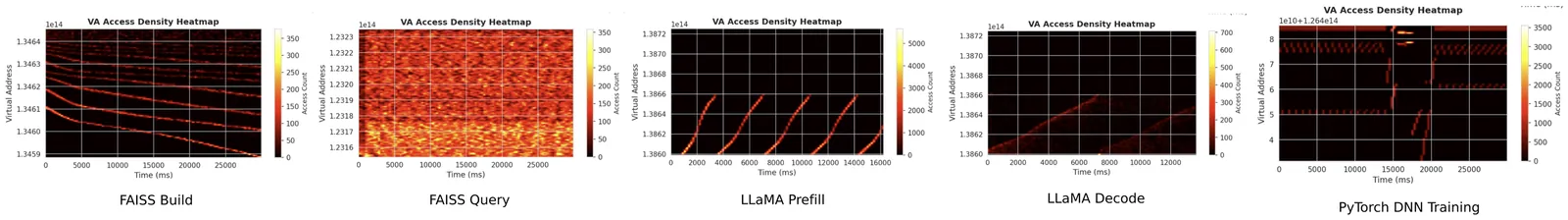

Performance in modern GPU-centric systems increasingly depends on resource management policies, including memory placement, scheduling, and observability. However, uniform policies typically yield suboptimal performance across diverse workloads. Existing approaches present a tradeoff: user-space runtimes provide programmability and flexibility but lack cross-tenant visibility and fine-grained control of hardware resources; meanwhile, modifications to the OS kernel introduce significant complexity and safety risks. To address this, we argue that the GPU driver and device layer should provide an extensible OS interface for policy enforcement. While the emerging eBPF technology shows potential, directly applying existing host-side eBPF is insufficient because it lacks visibility into and control over critical device-side events, and directly embedding policy code into GPU kernels could compromise safety and efficiency. We propose gpu_ext, an eBPF-based runtime that treats the GPU driver and device as a programmable OS subsystem. gpu_ext extends GPU drivers by exposing safe programmable hooks and introduces a device-side eBPF runtime capable of executing verified policy logic within GPU kernels, enabling coherent and transparent policies. Evaluation across realistic workloads including inference, training, and vector search demonstrates that gpu_ext improves throughput by up to 4.8x and reduces tail latency by up to 2x, incurring low overhead, without modifying or restarting applications.

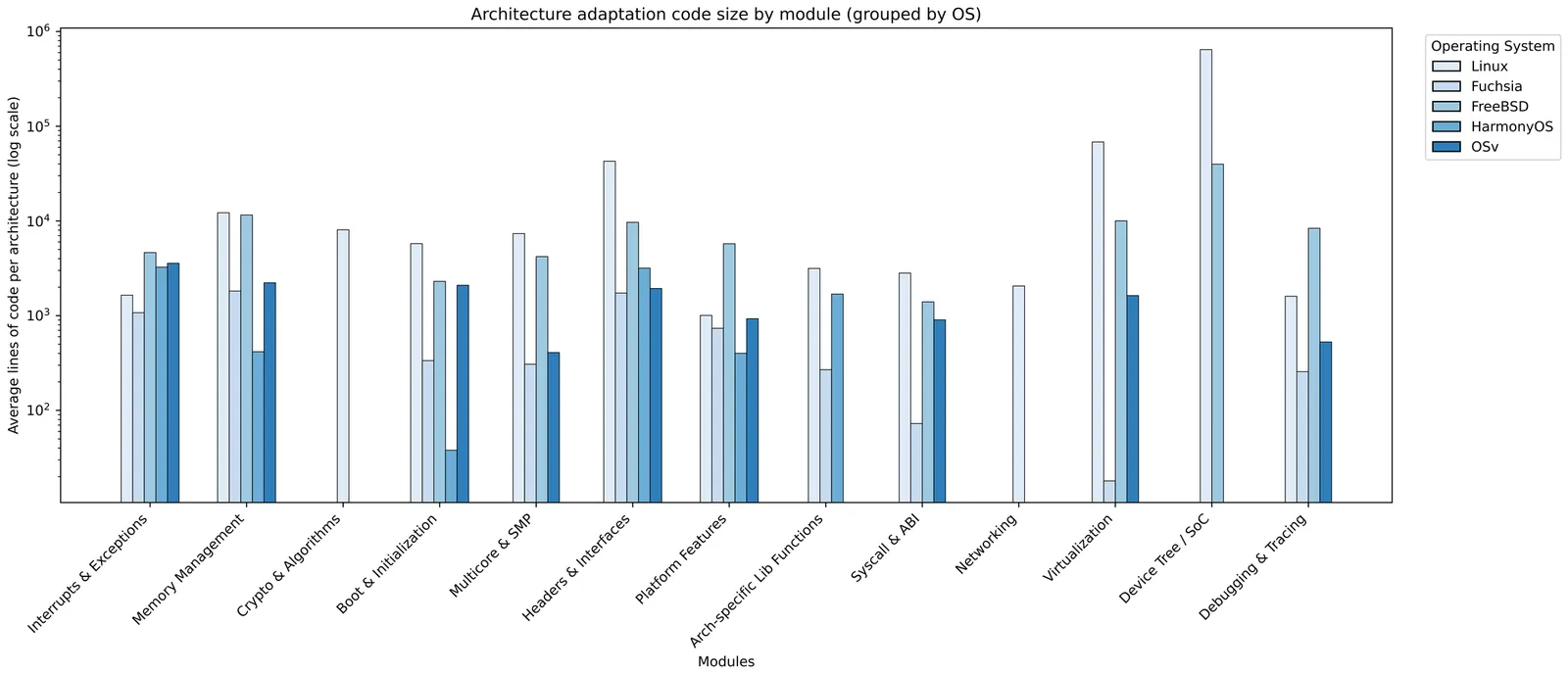

The Linux kernel source code contains numerous constant values that critically influence system performance. Many of these constants, which we term perf-consts, are magic numbers that encode brittle assumptions about hardware and workloads. As systems and workloads evolve, such constants often become suboptimal. Unfortunately, deployed kernels lack support for safe and efficient in-situ tuning of perf-consts without a long and disruptive process of rebuilding and redeploying the kernel image. This paper advocates principled OS performance tunability. We present KernelX, a system that provides a safe, efficient, and programmable interface for in-situ tuning of arbitrary perf-consts on a running kernel. KernelX transforms any perf-const into a tunable knob on demand using a novel mechanism called Scoped Indirect Execution (SIE). SIE precisely identifies the binary boundaries where a perf-const influences system state and redirects execution to synthesized instructions that update the state as if new values were used. KernelX goes beyond version atomicity to guarantee side-effect safety, a property not provided by existing kernel update mechanisms. KernelX also provides a programmable interface that allows policies to incorporate application hints, hardware heuristics, and fine-grained isolation, without modifying kernel source code or disrupting deployed OS kernels. Case studies across multiple kernel subsystems demonstrate that KernelX enables significant performance improvements by making previously untunable perf-consts safely tunable at runtime, while supporting millisecond-scale policy updates.

zkVMs promise general-purpose verifiable computation through ISA-level compatibility with modern programs and toolchains. However, compatibility extends further than just the ISA; modern programs often cannot run or even compile without an operating system and libc. zkVMs attempt to address this by maintaining forks of language-specific runtimes and statically linking them into applications to create self-contained unikernels, but this ad-hoc approach leads to version hell and burdens verifiable applications (vApps) with an unnecessarily large trusted computing base. We solve this problem with ZeroOS, a modular library operating system (libOS) for vApp unikernels; vApp developers can use off-the-shelf toolchains to compile and link only the exact subset of the Linux ABI their vApp needs. Any zkVM team can easily leverage the ZeroOS ecosystem by writing a ZeroOS bootloader for their platform, resulting in a reduced maintenance burden and unifying the entire zkVM ecosystem with consolidated development and audit resources. ZeroOS is free and open-sourced at https://github.com/LayerZero-Labs/ZeroOS.

Nested virtualization is now widely supported by major cloud vendors, allowing users to leverage virtualization-based technologies in the cloud. However, supporting nested virtualization significantly increases host hypervisor complexity and introduces a new attack surface in cloud platforms. While many prior studies have explored hypervisor fuzzing, none has explicitly addressed nested virtualization due to the challenge of generating effective virtual machine (VM) instances with a vast state space as fuzzing inputs. We present NecoFuzz, the first fuzzing framework that systematically targets nested virtualization-specific logic in hypervisors. NecoFuzz synthesizes executable fuzz-harness VMs with internal states near the boundary between valid and invalid, guided by an approximate model of hardware-assisted virtualization specifications. Since vulnerabilities in nested virtualization often stem from incorrect handling of unexpected VM states, this specification-guided, boundary-oriented generation significantly improves coverage of security-critical code across different hypervisors. We implemented NecoFuzz on Intel VT-x and AMD-V by extending AFL++ to support fuzz-harness VMs. NecoFuzz achieved 84.7% and 74.2% code coverage for nested virtualization-specific code on Intel VT-x and AMD-V, respectively, and uncovered six previously unknown vulnerabilities across three hypervisors, including two assigned CVEs.

The design of real-time systems is based on assumptions about environmental conditions in which they will operate. We call this their safe operational envelope. Violation of these assumptions, i.e., out-of-envelope environments, can jeopardize timeliness and safety of real-time systems, e.g., by overwhelming them with interrupt storms. A long-lasting debate has been going on over which design paradigm, the time- or event-triggered, is more robust against such behavior. In this work, we investigate the claim that time-triggered systems are immune against out-of-envelope behavior and how event-triggered systems can be constructed to defend against being overwhelmed by interrupt showers. We introduce importance (independently of priority and criticality) as a means to express which tasks should still be scheduled in case environmental design assumptions cease to hold, draw parallels to mixed-criticality scheduling, and demonstrate how event-triggered systems can defend against out-of-envelope behavior.

We present Mirror-Optimized Storage Tiering (MOST), a novel tiering-based approach optimized for modern storage hierarchies. The key idea of MOST is to combine the load balancing advantages of mirroring with the space-efficiency advantages of tiering. Specifically, MOST dynamically mirrors a small amount of hot data across storage tiers to efficiently balance load, avoiding costly migrations. As a result, MOST is as space-efficient as classic tiering while achieving better bandwidth utilization under I/O-intensive workloads. We implement MOST in Cerberus, a user-level storage management layer based on CacheLib. We show the efficacy of Cerberus through a comprehensive empirical study: across a range of static and dynamic workloads, Cerberus achieves better throughput than competing approaches on modern storage hierarchies especially under I/O-intensive and dynamic workloads.

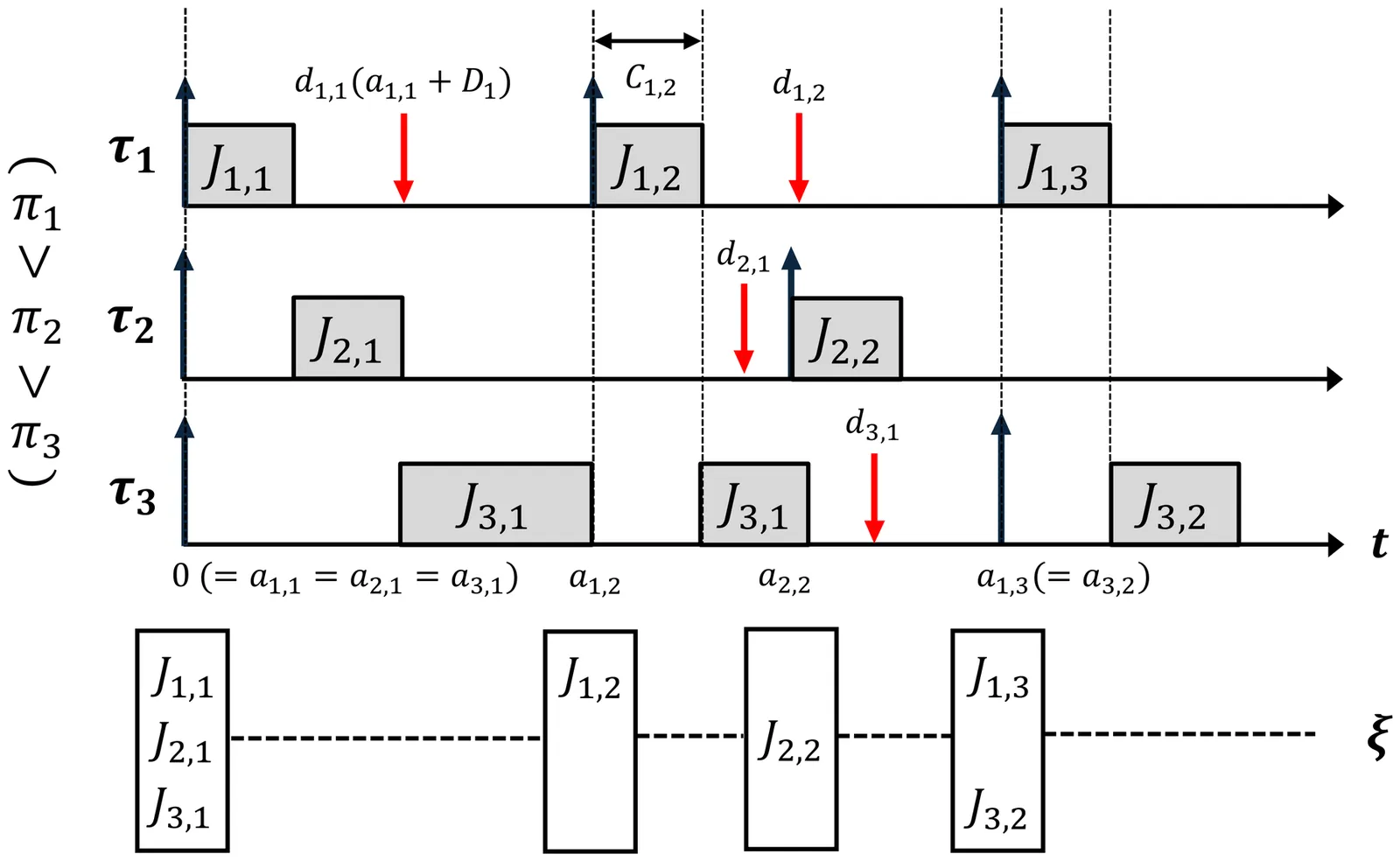

Accurate estimation of the Worst-Case Deadline Failure Probability (WCDFP) has attracted growing attention as a means to provide safety assurances in complex systems such as robotic platforms and autonomous vehicles. WCDFP quantifies the likelihood of deadline misses under the most pessimistic operating conditions, and safe estimation is essential for dependable real-time applications. However, achieving high accuracy in WCDFP estimation often incurs significant computational cost. Recent studies have revealed that the classical assumption of the critical instant, the activation pattern traditionally considered to trigger the worst-case behavior, can lead to underestimation of WCDFP in probabilistic settings. This observation motivates the use of a revised critical instant formulation that more faithfully captures the true worst-case scenario. This paper investigates convolution-based methods for WCDFP estimation under this revised setting and proposes an optimization technique that accelerates convolution by improving the merge order. Extensive experiments with diverse execution-time distributions demonstrate that the proposed optimized Aggregate Convolution reduces computation time by up to an order of magnitude compared to Sequential Convolution, while retaining accurate and safe-sided WCDFP estimates. These results highlight the potential of the approach to provide both efficiency and reliability in probabilistic timing analysis for safety-critical real-time applications.
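To make the role of merge order concrete, here is a minimal sketch of convolution over discrete execution-time distributions. It is not the paper's Aggregate Convolution: the smallest-first merge rule, the function names, and the simplification that a deadline miss means "total execution demand exceeds the deadline" are all assumptions made for illustration. Each distribution is a list where index `t` holds the probability of an execution time of `t` time units.

```python
import heapq
from functools import reduce


def convolve(p, q):
    """Distribution of the sum of two independent discrete random variables."""
    out = [0.0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        if a == 0.0:
            continue
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def sequential_convolution(pmfs):
    """Fold the distributions left to right, one after another."""
    return reduce(convolve, pmfs)


def smallest_first_convolution(pmfs):
    """Always merge the two shortest distributions first (assumed merge rule)."""
    heap = [(len(p), idx, p) for idx, p in enumerate(pmfs)]
    heapq.heapify(heap)
    next_id = len(pmfs)  # unique tie-breaker so lists are never compared
    while len(heap) > 1:
        _, _, a = heapq.heappop(heap)
        _, _, b = heapq.heappop(heap)
        merged = convolve(a, b)
        heapq.heappush(heap, (len(merged), next_id, merged))
        next_id += 1
    return heap[0][2]


def deadline_failure_probability(pmf, deadline):
    """Probability that the summed execution time exceeds the deadline."""
    return sum(pmf[deadline + 1:])
```

Convolution is associative and commutative, so both orders yield the same distribution (up to floating-point rounding); the order only changes how large the intermediate operands are, which is where the computational savings come from.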

The growing complexity of embedded systems creates tension between rich functionality and strict resource and real-time constraints. Traditional monolithic operating system and hypervisor designs suffer from resource bloat and unpredictable scheduling, making them unsuitable for time-critical workloads where low latency and low jitter are essential. We propose TenonOS, a demand-driven, self-generating, lightweight operating system framework for time-critical embedded systems that rethinks both hypervisor and operating system architectures. TenonOS introduces a LibOS-on-LibOS model that decomposes hypervisor and operating system functionality into fine-grained, reusable micro-libraries. A generative orchestration engine dynamically composes these libraries to synthesize a customized runtime tailored to each application's criticality, timing requirements, and resource profile. TenonOS consists of two core components: Mortise, a minimalist micro-hypervisor, and Tenon, a real-time library operating system. Mortise provides lightweight isolation and removes the usual double-scheduler overhead in virtualized setups, while Tenon provides precise and deterministic task management. By generating only the necessary software stack per workload, TenonOS removes redundant layers, minimizes the trusted computing base, and maximizes responsiveness. Experiments show a 40.28 percent reduction in scheduling latency, an ultra-compact 361 KiB memory footprint, and strong adaptability.

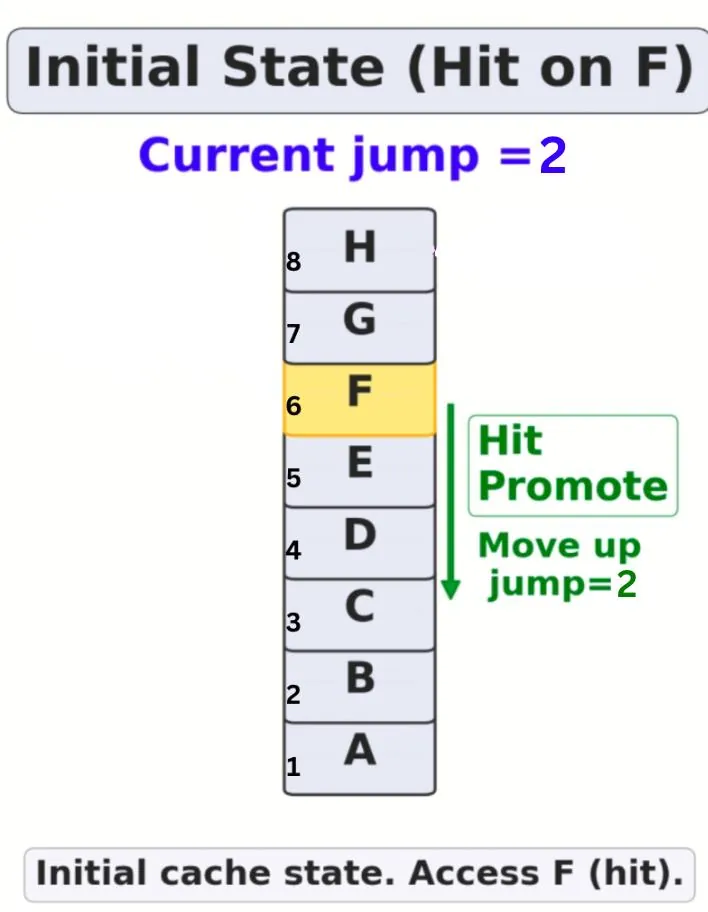

Efficient cache management is critical for optimizing system performance, and numerous caching mechanisms have been proposed, each exploring various insertion and eviction strategies. In this paper, we present AdaptiveClimb and its extension, DynamicAdaptiveClimb, two novel cache replacement policies that leverage lightweight cache adaptation to outperform traditional approaches. Unlike classic Least Recently Used (LRU) and Incremental Rank Progress (CLIMB) policies, AdaptiveClimb dynamically adjusts the promotion distance (jump) of the cached objects based on recent hit and miss patterns, requiring only a single tunable parameter and no per-item statistics. This enables rapid adaptation to changing access distributions while maintaining low overhead. Building on this foundation, DynamicAdaptiveClimb further enhances adaptability by automatically tuning the cache size in response to workload demands. Our comprehensive evaluation across a diverse set of real-world traces, including 1067 traces from 6 different datasets, demonstrates that DynamicAdaptiveClimb consistently achieves substantial speedups and higher hit ratios compared to other state-of-the-art algorithms. In particular, our approach achieves up to a 29% improvement in hit ratio and a substantial reduction in miss penalties compared to the FIFO baseline. Furthermore, it outperforms the next-best contenders, AdaptiveClimb and SIEVE [43], by approximately 10% to 15%, especially in environments characterized by fluctuating working set sizes. These results highlight the effectiveness of our approach in delivering efficient performance, making it well-suited for modern, dynamic caching environments.
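The idea of an adaptive promotion distance can be sketched as follows. This is one plausible reading of the abstract, not the authors' algorithm: the rule "shrink the jump on a hit, grow it on a miss", the bounds on `jump`, and inserting new objects at the bottom of the list are all assumptions. With `jump == 1` a hit moves the object up one slot (CLIMB-like); with `jump == capacity` a hit moves it to the top (LRU-like).

```python
class AdaptiveClimbSketch:
    """Toy list-based cache with a promotion distance that adapts to hits and misses."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = []  # index 0 = most protected, last index = next eviction victim
        self.jump = 1    # current promotion distance, kept within [1, capacity]

    def access(self, key):
        """Process one request; return True on a hit, False on a miss."""
        if key in self.items:
            i = self.items.index(key)  # O(n) lookup, acceptable for a sketch
            self.items.insert(max(0, i - self.jump), self.items.pop(i))
            self.jump = max(1, self.jump - 1)  # stable workload: promote more cautiously
            return True
        if len(self.items) >= self.capacity:
            self.items.pop()  # evict the object at the bottom
        self.items.append(key)  # assumed: new objects enter at the bottom
        self.jump = min(self.capacity, self.jump + 1)  # shifting workload: promote faster
        return False
```

Note that the only adaptive state is the single integer `jump`; no per-object counters or timestamps are kept, which matches the abstract's claim of needing no per-item statistics.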

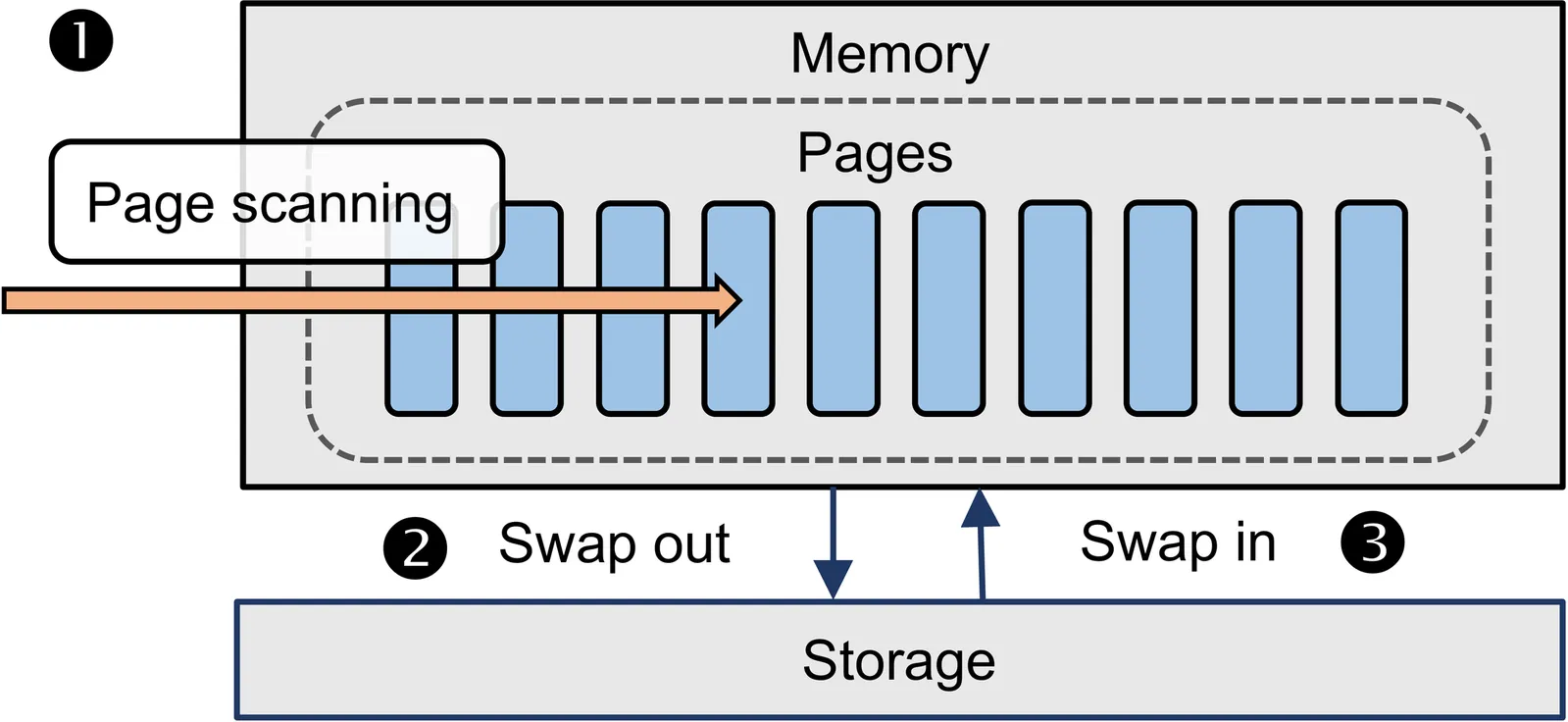

The memory capacity in edge devices is often limited due to constraints on cost, size, and power. Consequently, memory competition leads to inevitable page swapping in memory-constrained mixed-criticality edge devices, causing slow storage I/O and thus performance degradation. In such scenarios, inefficient memory allocation disrupts the balance between application performance, causing soft real-time (soft RT) tasks to miss deadlines or preventing non-real-time (non-RT) applications from optimizing throughput. Meanwhile, we observe unpredictable, long system-level stalls (called long stalls) under high memory and I/O pressure, which further degrade performance. In this work, we propose a Stall-Aware Real-Time Memory Allocator (SARA), which discovers opportunities for performance balance by allocating just enough memory to soft RT tasks to meet deadlines and, at the same time, optimizing the remaining memory for non-RT applications. To minimize the memory usage of soft RT tasks while meeting real-time requirements, SARA leverages our insight into how latency, caused by memory insufficiency and measured by our proposed PSI-based metric, affects the execution time of each soft RT job, where a job runs per period and a soft RT task consists of multiple periods. Moreover, SARA detects long stalls using our definition and proactively drops affected jobs, minimizing stalls in task execution. Experiments show that SARA achieves an average of 97.13% deadline hit ratio for soft RT tasks and improves non-RT application throughput by up to 22.32x over existing approaches, even with memory capacity limited to 60% of peak demand.

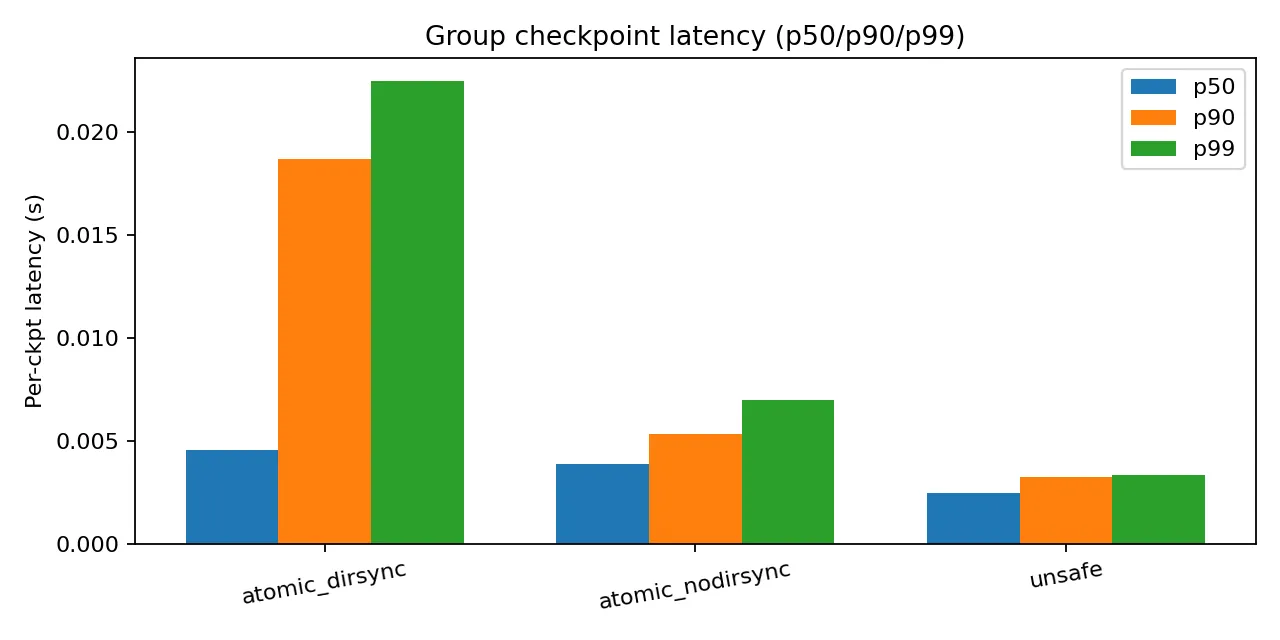

Deep learning training relies on periodic checkpoints to recover from failures, but unsafe checkpoint installation can leave corrupted files on disk. This paper presents an experimental study of checkpoint installation protocols and integrity validation for AI training on macOS/APFS. We implement three write modes with increasing durability guarantees: unsafe (baseline, no fsync), atomic_nodirsync (file-level durability via fsync()), and atomic_dirsync (file + directory durability). We design a format-agnostic integrity guard using SHA-256 checksums with automatic rollback. Through controlled experiments including crash injection (430 unsafe-mode trials) and corruption injection (1,600 atomic-mode trials), we demonstrate that the integrity guard detects 99.8-100% of corruptions with zero false positives. Performance overhead is 56.5-108.4% for atomic_nodirsync and 84.2-570.6% for atomic_dirsync relative to the unsafe baseline. Our findings quantify the reliability-performance trade-offs and provide deployment guidance for production AI infrastructure.
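The write modes and the integrity guard described above can be sketched with standard-library calls. This is a minimal illustration rather than the study's implementation: the function names and the temp-file naming are hypothetical, and the directory `fsync` step assumes a POSIX system. With `dirsync=False` the function corresponds roughly to the file-level mode (fsync the file, then rename); with `dirsync=True` it also syncs the containing directory so the rename itself is durable.

```python
import hashlib
import os
import tempfile


def sha256_of(path):
    """Return the hex SHA-256 digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def install_checkpoint(data, final_path, dirsync=True):
    """Write to a temp file, fsync it, atomically rename it into place,
    and optionally fsync the directory. Returns the expected digest."""
    directory = os.path.dirname(os.path.abspath(final_path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".ckpt-")
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(data)
            f.flush()
            os.fsync(f.fileno())  # file contents reach stable storage
        os.replace(tmp_path, final_path)  # atomic rename on POSIX
    except BaseException:
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)
        raise
    if dirsync:
        dir_fd = os.open(directory, os.O_RDONLY)
        try:
            os.fsync(dir_fd)  # make the directory entry durable as well
        finally:
            os.close(dir_fd)
    return hashlib.sha256(data).hexdigest()


def load_with_rollback(candidates):
    """Given (path, expected_digest) pairs ordered newest first, return the
    first path whose contents still match its digest, or None."""
    for path, expected in candidates:
        if os.path.exists(path) and sha256_of(path) == expected:
            return path
    return None
```

The guard is format-agnostic because it hashes raw bytes: a corrupted newest checkpoint fails the digest comparison and the loader falls back to the previous one.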

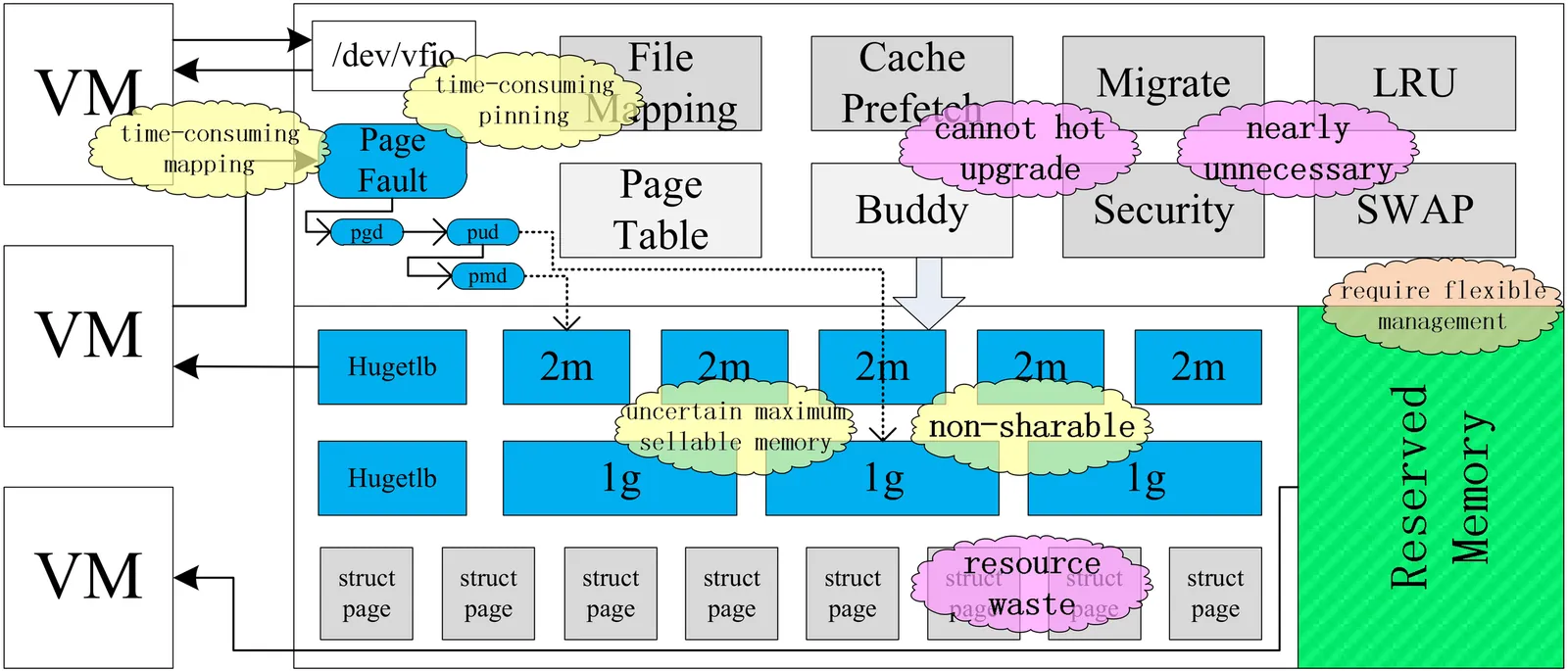

Traditional memory management suffers from metadata overhead, architectural complexity, and stability degradation, problems intensified in cloud environments. Existing software/hardware optimizations are insufficient for cloud computing's dual demands of flexibility and low overhead. This paper presents Vmem, a memory management architecture for in-production cloud environments that enables flexible, efficient cloud server memory utilization through lightweight reserved memory management. Vmem is the first such architecture to support online upgrades, meeting cloud requirements for high stability and rapid iterative evolution. Experiments show Vmem increases sellable memory rate by about 2%, delivers extreme elasticity and performance, achieves over 3x faster boot time for VFIO-based virtual machines (VMs), and improves network performance by about 10% for DPU-accelerated VMs. Vmem has been deployed at large scale for seven years, demonstrating efficiency and stability on over 300,000 cloud servers supporting hundreds of millions of VMs.

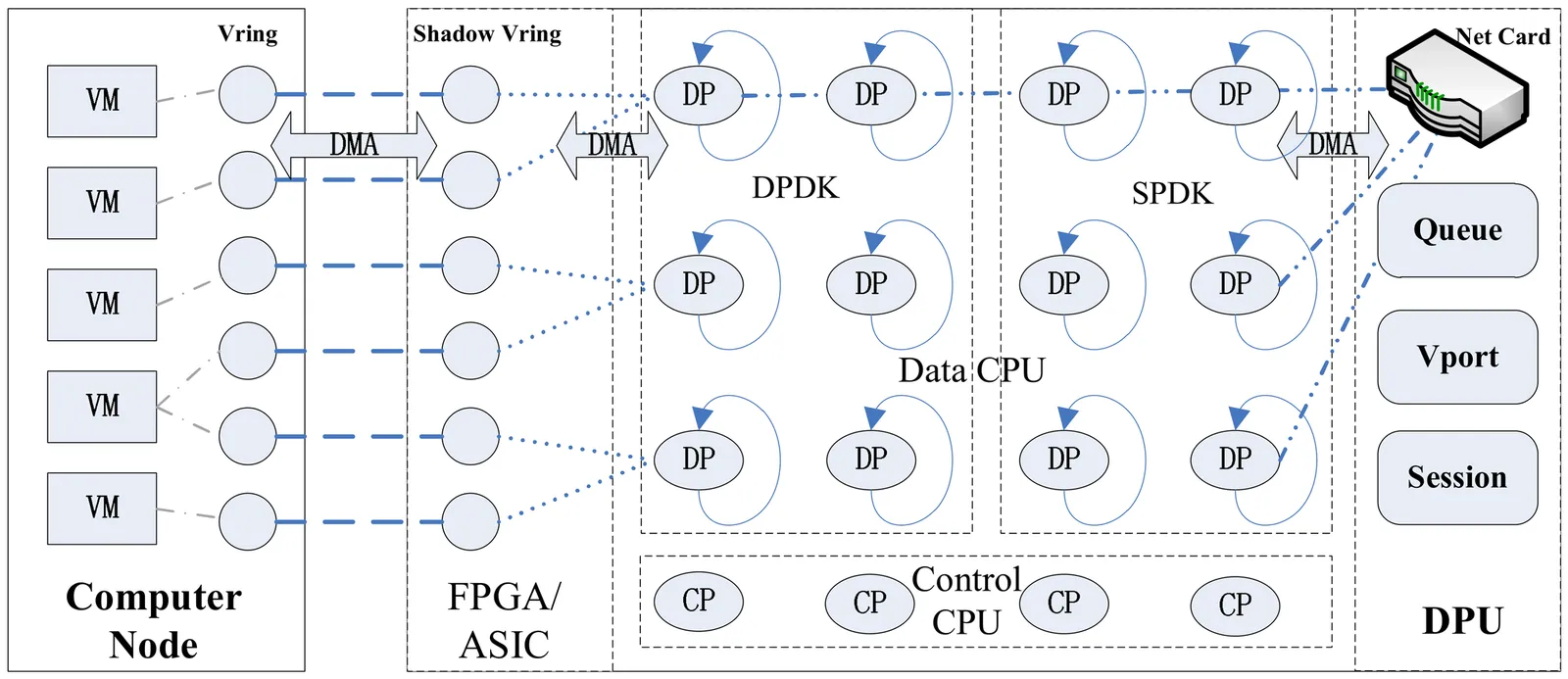

The growth of cloud computing drives data centers toward higher density and efficiency. Data processing units (DPUs) enhance server network and storage performance but face challenges such as long hardware upgrade cycles and limited resources. To address these, we propose Taiji, a resource-elasticity architecture for DPUs. Combining hybrid virtualization with parallel memory swapping, Taiji switches the DPU's operating system (OS) into a guest OS and inserts a lightweight virtualization layer, making nearly all DPU memory swappable. It achieves memory overcommitment for the switched guest OS via high-performance memory elasticity, fully transparent to upper-layer applications, and supports hot-switch and hot-upgrade to meet in-production cloud requirements. Experiments show that Taiji expands DPU memory resources by over 50%, maintains virtualization overhead around 5%, and ensures 90% of swap-ins complete within 10 microseconds. Taiji delivers an efficient, reliable, low-overhead elasticity solution for DPUs and is deployed in large-scale production systems across more than 30,000 servers.

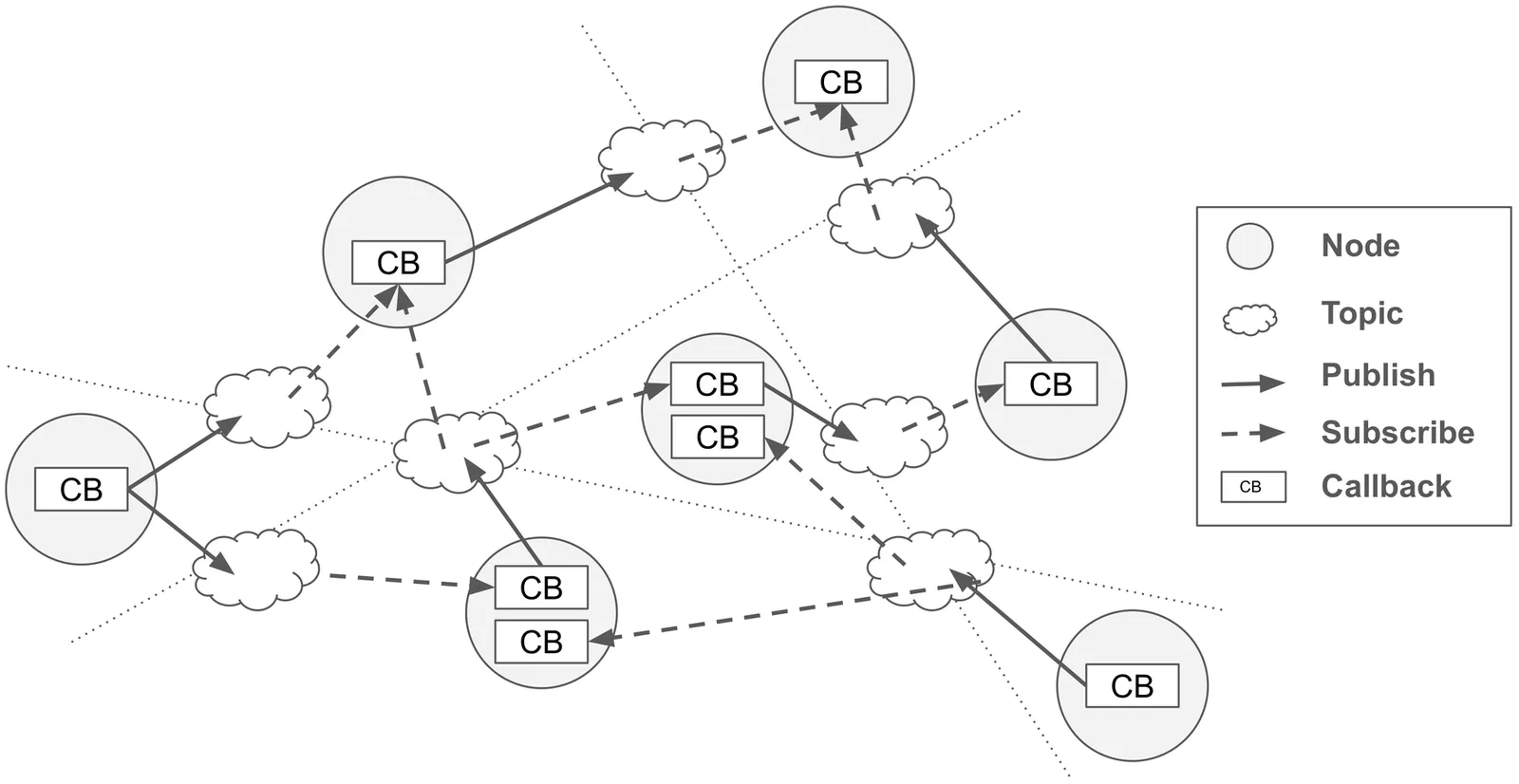

The Directed Acyclic Graph (DAG) task model for real-time scheduling finds its primary practical target in Robot Operating System 2 (ROS 2). However, ROS 2's publish/subscribe API leaves DAG precedence constraints unenforced: a callback may publish mid-execution, and multi-input callbacks let developers choose topic-matching policies. Thus, preserving DAG semantics relies on conventions; once they are violated, the model collapses. We propose the Function-as-Subtask (FasS) API, which expresses each subtask as a function whose arguments/return values are the subtask's incoming/outgoing edges. By minimizing description freedom, DAG semantics is guaranteed at the API rather than by programmer discipline. We implement a DAG-native scheduler using FasS on a Rust-based experimental kernel and evaluate its semantic fidelity, and we outline design guidelines for applying FasS to Linux sched_ext.
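The function-as-subtask idea can be illustrated in a few lines. The paper's implementation targets a Rust-based kernel; the Python sketch below is only an analogy, and the `Dag` class, the decorator, and the convention that a parameter name identifies the producing subtask are all hypothetical. The point is that edges are derived from function signatures, so a subtask cannot publish mid-execution or read an input that is not part of its declared edges.

```python
import inspect
from graphlib import TopologicalSorter


class Dag:
    """Registry in which each subtask is a function; its parameter names are
    its incoming edges and its return value is its single outgoing value."""

    def __init__(self):
        self.subtasks = {}

    def subtask(self, fn):
        self.subtasks[fn.__name__] = fn
        return fn

    def run_once(self):
        """Execute every subtask once in an order that respects precedence."""
        deps = {
            name: list(inspect.signature(fn).parameters)
            for name, fn in self.subtasks.items()
        }
        for name, params in deps.items():
            for p in params:
                if p not in self.subtasks:
                    raise ValueError(f"{name} depends on unknown subtask {p}")
        results, order = {}, []
        # static_order() raises graphlib.CycleError if the graph is not acyclic
        for name in TopologicalSorter(deps).static_order():
            results[name] = self.subtasks[name](*(results[p] for p in deps[name]))
            order.append(name)
        return order, results


dag = Dag()


@dag.subtask
def camera():
    return 2


@dag.subtask
def lidar():
    return 3


@dag.subtask
def fuse(camera, lidar):
    return camera + lidar


@dag.subtask
def plan(fuse):
    return fuse * 10
```

Calling `dag.run_once()` runs `camera` and `lidar` before `fuse`, and `fuse` before `plan`; a signature that names a nonexistent subtask or forms a cycle is rejected before anything executes.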

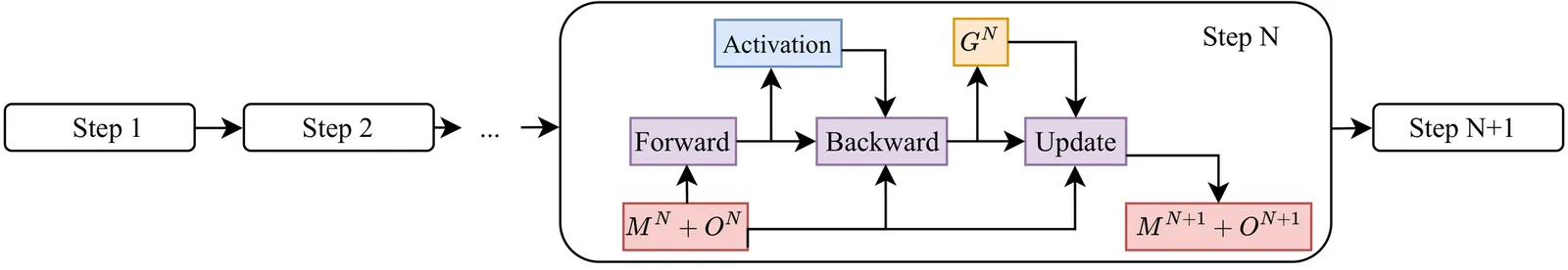

The accuracy of large language models (LLMs) improves with increasing model size, but increasing model complexity also poses significant challenges to training stability. Periodic checkpointing is a key mechanism for fault recovery and is widely used in LLM training. However, traditional checkpointing strategies often pause or delay GPU computation during checkpoint saving to perform the GPU-CPU checkpoint transfer, resulting in significant training interruptions and reduced training throughput. To address this issue, we propose GoCkpt, a method to overlap checkpoint saving with multiple training steps and restore the final checkpoint on the CPU. We transfer the checkpoint across multiple steps: each step transfers part of the checkpoint state, and we also transfer some of the gradient data used for parameter updates. After the transfer is complete, each partial checkpoint state is updated to a consistent version on the CPU, thus avoiding the checkpoint state inconsistency problem caused by transferring checkpoints across multiple steps. Furthermore, we introduce a transfer optimization strategy to maximize GPU-CPU bandwidth utilization and SSD persistence throughput. This dual optimization, overlapping saves across steps and maximizing I/O efficiency, significantly reduces invalid training time. Experimental results show that GoCkpt can increase training throughput by up to 38.4% compared to traditional asynchronous checkpoint solutions in the industry. We also find that GoCkpt can reduce training interruption time by 86.7% compared to the state-of-the-art checkpoint transfer methods, which results in a 4.8% throughput improvement.
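The consistency problem and its fix can be shown with a toy example. This sketch assumes plain SGD on a flat list of weights and a fixed schedule of one shard per step; the abstract does not specify GoCkpt's optimizer handling or transfer schedule, so the function names and the replay rule are illustrative assumptions. Each shard is copied to the "CPU" at a different step, and the gradients for already-copied shards are forwarded so the CPU can replay the missed updates.

```python
def shard_slices(n, num_shards):
    """Split indices 0..n-1 into contiguous shards of near-equal size."""
    size = -(-n // num_shards)  # ceiling division
    return [slice(i * size, min(n, (i + 1) * size)) for i in range(num_shards)]


def sgd_step(params, grads, lr):
    """Plain SGD update (assumed optimizer for this sketch)."""
    return [p - lr * g for p, g in zip(params, grads)]


def spread_checkpoint(gpu_weights, grads_per_step, lr, num_shards):
    """Copy one shard per training step and replay later updates on the CPU.
    Returns (final GPU weights, CPU checkpoint)."""
    if len(grads_per_step) < num_shards:
        raise ValueError("need at least one training step per shard")
    slices = shard_slices(len(gpu_weights), num_shards)
    cpu = [None] * len(gpu_weights)
    for step in range(num_shards):
        grads = grads_per_step[step]
        # Transfer this step's shard at its current (pre-update) version.
        cpu[slices[step]] = gpu_weights[slices[step]]
        # Forward gradients for every shard already on the CPU and replay the update.
        for s in range(step + 1):
            sl = slices[s]
            cpu[sl] = sgd_step(cpu[sl], grads[sl], lr)
        # Training continues on the GPU without waiting for a full copy.
        gpu_weights = sgd_step(gpu_weights, grads, lr)
    return gpu_weights, cpu
```

Without the replay loop, shard 0 would hold step-0 weights while the last shard held newer ones; with it, every shard on the CPU ends at the same version as the GPU weights after the final step of the window.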

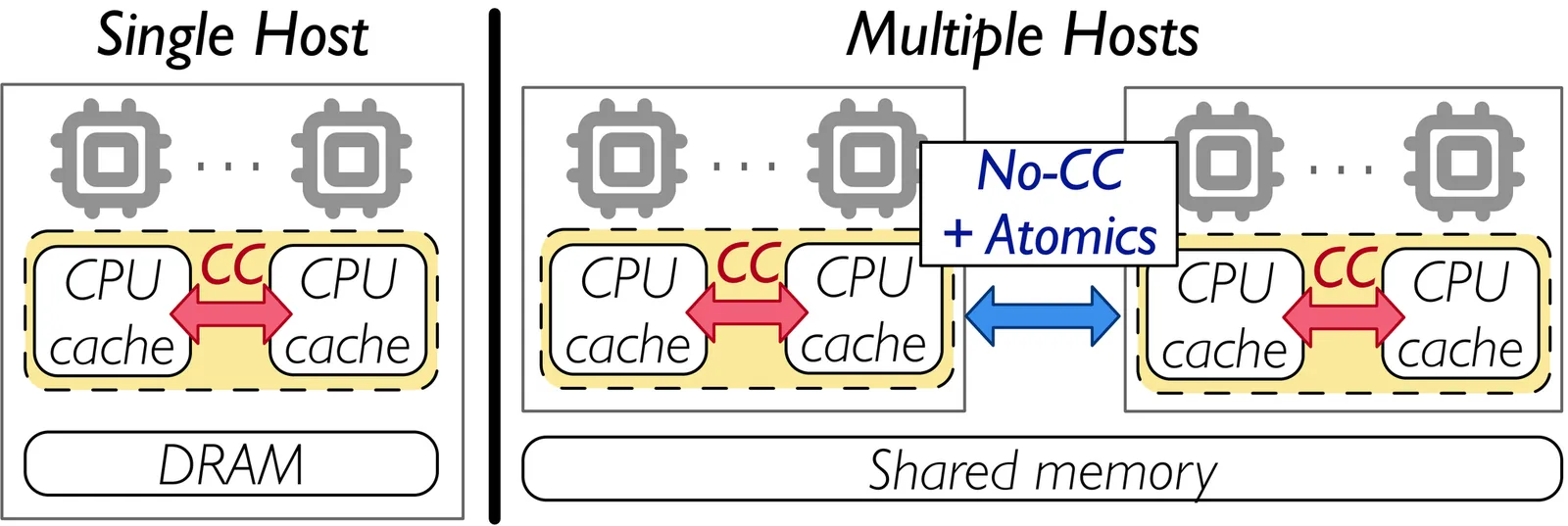

The *Partial Cache-Coherence (PCC)* model maintains hardware cache coherence only within subsets of cores, enabling large-scale memory sharing with emerging memory interconnect technologies like Compute Express Link (CXL). However, PCC's relaxation of global cache coherence compromises the correctness of existing single-machine software. This paper focuses on building consistent and efficient indexes on PCC platforms. We show that existing indexes designed for cache-coherent platforms can be made consistent on PCC platforms by following the SP guidelines: we identify *sync-data* and *protected-data* according to the index's concurrency control mechanisms and synchronize them accordingly. However, conversion with the SP guidelines introduces performance overhead. To mitigate this overhead, we identify several unique performance bottlenecks on PCC platforms and propose the P³ guidelines (Out-of-**P**lace update, Re**P**licated shared variable, and S**P**eculative Reading) to improve the efficiency of converted indexes on PCC platforms. With the SP and P³ guidelines, we convert and optimize two indexes (CLevelHash and BwTree) for PCC platforms. Evaluation shows that the converted indexes' throughput improves by up to 16x when following the P³ guidelines, and the optimized indexes outperform their message-passing-based and disaggregated-memory-based counterparts by up to 16x and 19x, respectively.