Statistical Finance

Statistical, econometric and econophysics analysis of financial markets.

Synthetic financial data offers a practical way to address the privacy and accessibility challenges that limit research in quantitative finance. This paper examines the use of generative models, in particular TimeGAN and Variational Autoencoders (VAEs), for creating synthetic return series that support portfolio construction, trading analysis, and risk modeling. Using historical daily returns from the S&P 500 as a benchmark, we generate synthetic datasets under comparable market conditions and evaluate them using statistical similarity metrics, temporal structure tests, and downstream financial tasks. The study shows that TimeGAN produces synthetic data with distributional shapes, volatility patterns, and autocorrelation behaviour that are close to those observed in real returns. When applied to mean-variance portfolio optimization, the resulting synthetic datasets lead to portfolio weights, Sharpe ratios, and risk levels that remain close to those obtained from real data. The VAE provides more stable training but tends to smooth extreme market movements, which affects risk estimation. Overall, the analysis supports the use of synthetic datasets as substitutes for real financial data in portfolio analysis and risk simulation, particularly when models are able to capture temporal dynamics. Synthetic data therefore provides a privacy-preserving, cost-effective, and reproducible tool for financial experimentation and model development.
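As a rough illustration of the downstream mean-variance comparison described above, the sketch below computes maximum-Sharpe (tangency) weights for two assets from mean excess returns and a 2x2 covariance matrix. The function name, the two-asset restriction, and the input numbers are illustrative assumptions, not the paper's implementation; running it once on moments estimated from real returns and once on moments from synthetic returns would give the kind of weight and Sharpe-ratio comparison the abstract mentions.

```python
import math


def tangency_portfolio(mu, cov):
    """Max-Sharpe weights for two assets (hypothetical helper).

    mu  : mean excess returns [mu_0, mu_1]
    cov : 2x2 covariance matrix [[a, b], [c, d]]
    Assumes the unnormalised weights sum to a positive number.
    """
    (a, b), (c, d) = cov
    det = a * d - b * c
    if det <= 0:
        raise ValueError("covariance matrix must be positive definite")
    # Unnormalised weights are inverse(cov) @ mu, written out for the 2x2 case.
    raw = [(d * mu[0] - b * mu[1]) / det, (a * mu[1] - c * mu[0]) / det]
    total = sum(raw)
    weights = [w / total for w in raw]
    port_mean = weights[0] * mu[0] + weights[1] * mu[1]
    port_var = (weights[0] ** 2 * a + weights[1] ** 2 * d
                + 2 * weights[0] * weights[1] * b)
    return weights, port_mean / math.sqrt(port_var)


# Illustrative inputs only: uncorrelated assets with 20% and 10% volatility.
weights, sharpe = tangency_portfolio([0.08, 0.03], [[0.04, 0.0], [0.0, 0.01]])
```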

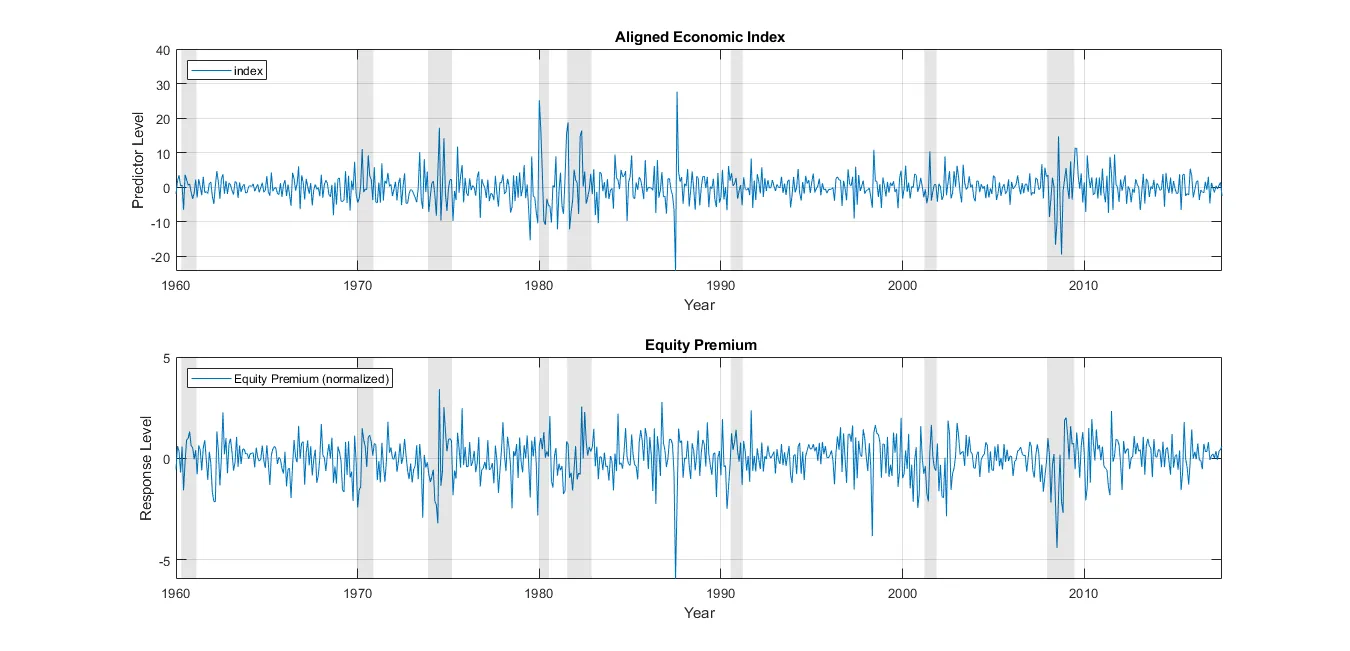

A growing empirical literature suggests that equity-premium predictability is state dependent, with much of the forecasting power concentrated around recessionary periods (Henkel et al., 2011; Dangl and Halling, 2012; Devpura et al., 2018). I study U.S. stock return predictability across economic regimes and document strong evidence of time-varying expected returns across both expansionary and contractionary states. I contribute in two ways. First, I introduce a state-switching predictive regression in which the market state is defined in real time using the slope of the yield curve. Relative to the standard one-state predictive regression, the state-switching specification increases both in-sample and out-of-sample performance for the set of popular predictors considered by Welch and Goyal (2008), improving the out-of-sample performance of most predictors in economically meaningful ways. Second, I propose a new aggregate predictor, the Aligned Economic Index, constructed via partial least squares (PLS). Under the state-switching model, the Aligned Economic Index exhibits statistically and economically significant predictive power in sample and out of sample, and it outperforms widely used benchmark predictors and alternative predictor-combination methods.
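A minimal sketch of a state-switching predictive regression may help fix ideas: the next-period return is regressed on a predictor separately within each state, giving state-specific intercepts and slopes. The rule used here to define the state (contraction when the yield-curve slope is negative) is an assumption for illustration; the abstract does not specify the exact rule, and the function names are hypothetical.

```python
def ols(x, y):
    """Intercept and slope of a simple least-squares regression of y on x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    beta = sxy / sxx
    return mean_y - beta * mean_x, beta


def state_switching_fit(predictor, next_return, yield_slope):
    """Fit r_{t+1} = alpha_s + beta_s * x_t separately in each state s.

    Assumed state rule (not from the source): slope < 0 means contraction.
    """
    groups = {"expansion": ([], []), "contraction": ([], [])}
    for x, r, slope in zip(predictor, next_return, yield_slope):
        state = "contraction" if slope < 0 else "expansion"
        groups[state][0].append(x)
        groups[state][1].append(r)
    return {state: ols(xs, ys) for state, (xs, ys) in groups.items()}
```

Because the state is determined from information available at time t, the same split can be applied out of sample without look-ahead.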

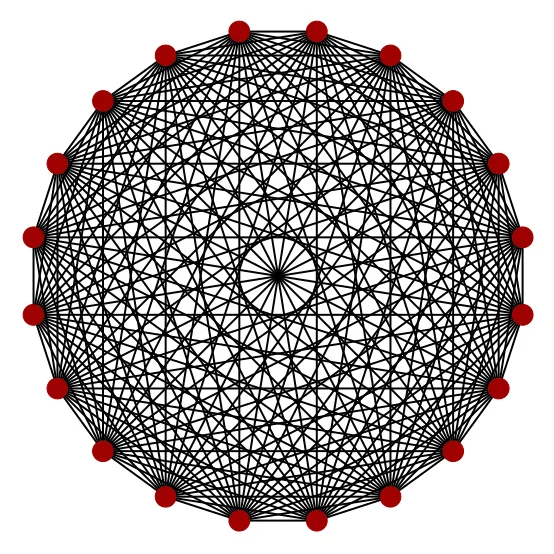

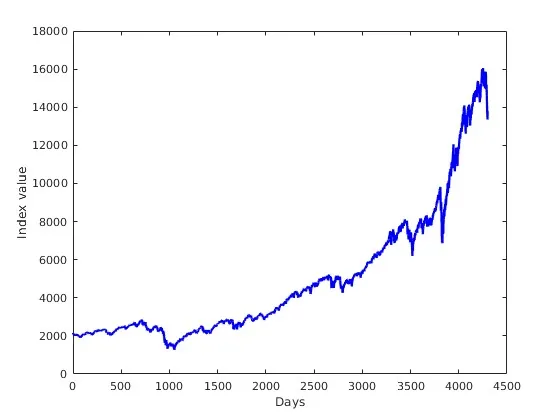

We use a $φ^{4}$ quantum field theory with inhomogeneous couplings and explicit symmetry-breaking to model an ensemble of financial time series from the S&P 500 index. The continuum nature of the $φ^{4}$ theory avoids the inaccuracies that occur in Ising-based models, which require a discretization of the time series. We demonstrate this using the example of the 2008 global financial crisis. The $φ^{4}$ quantum field theory is expressive enough to reproduce higher-order statistics such as the market kurtosis, which can serve as an indicator of possible market shocks. Accurate reproduction of high kurtosis is absent in binarized models. Therefore Ising models, despite being widely employed in econophysics, are incapable of fully representing empirical financial data, a limitation not present in the generalization of the $φ^{4}$ scalar field theory. We then investigate the scaling properties of the $φ^{4}$ machine learning algorithm and extract exponents which govern the behavior of the learned couplings (or weights and biases in ML language) in relation to the number of stocks in the model. Finally, we use our model to forecast the price changes of the AAPL, MSFT, and NVDA stocks. We conclude by discussing how the $φ^{4}$ scalar field theory could be used to build investment strategies and the possible intuitions that the QFT operations of dimensional compactification and renormalization can provide for financial modelling.

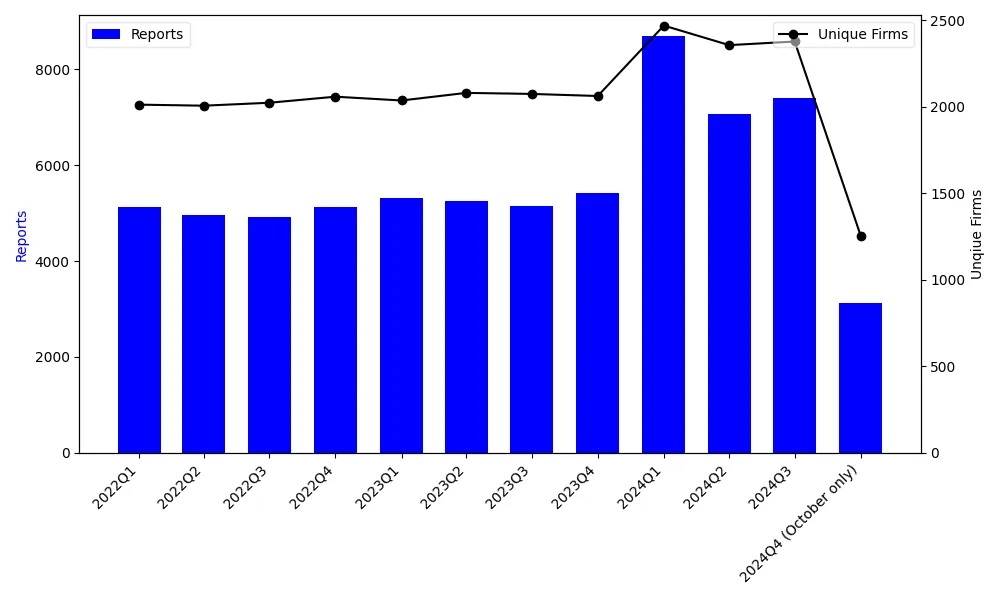

We study how generative artificial intelligence (AI) transforms the work of financial analysts. Using the 2023 launch of FactSet's AI platform as a natural experiment, we find that adoption produces markedly richer and more comprehensive reports -- featuring 40% more distinct information sources, 34% broader topical coverage, and 25% greater use of advanced analytical methods -- while also improving timeliness. However, forecast errors rise by 59% as AI-assisted reports convey a more balanced mix of positive and negative information that is harder to synthesize, particularly for analysts facing heavier cognitive demands. Placebo tests using other data vendors confirm that these effects are unique to FactSet's AI integration. Overall, our findings reveal both the productivity gains and cognitive limits of generative AI in financial information production.

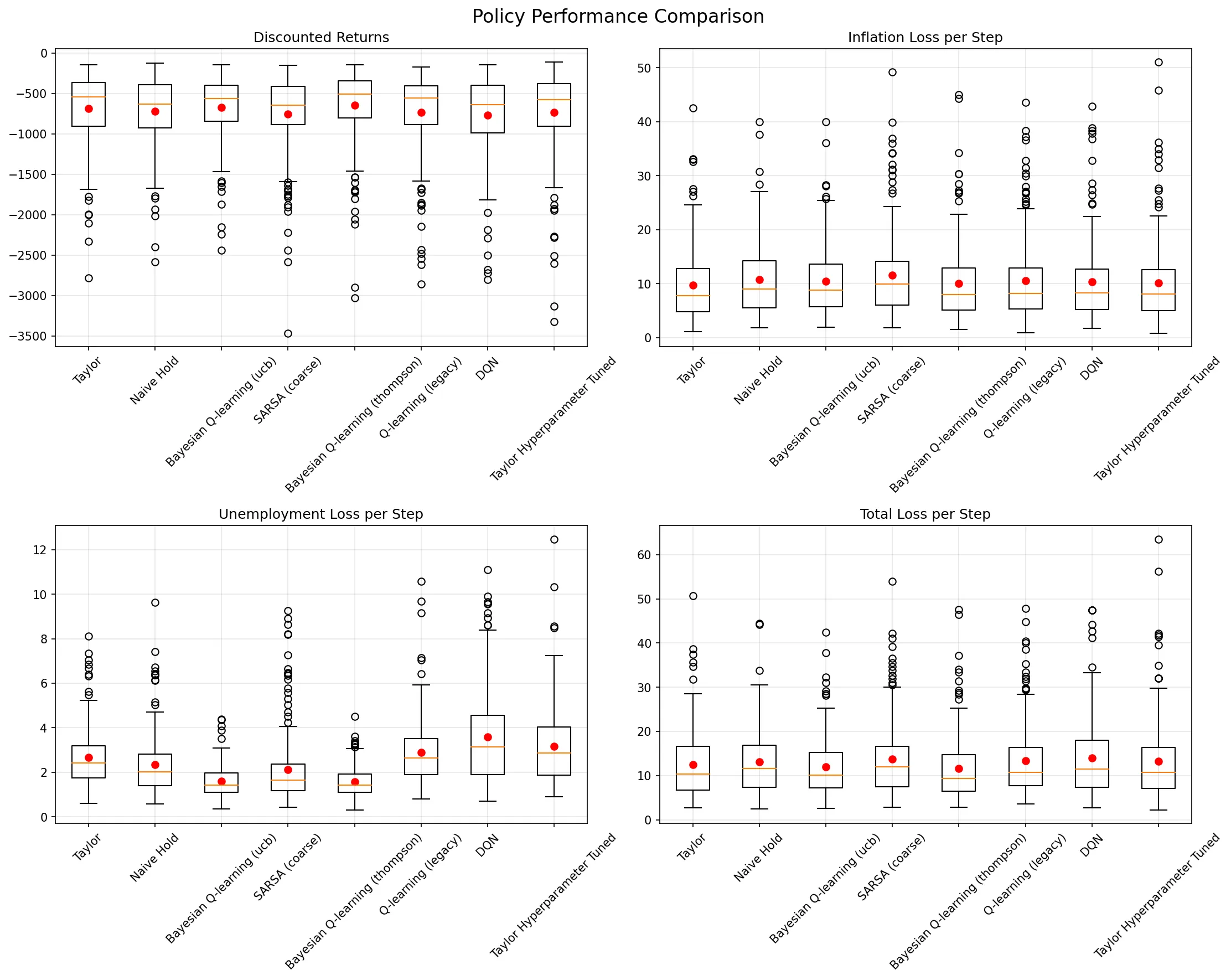

We study how a central bank should dynamically set short-term nominal interest rates to stabilize inflation and unemployment when macroeconomic relationships are uncertain and time-varying. We model monetary policy as a sequential decision-making problem where the central bank observes macroeconomic conditions quarterly and chooses interest rate adjustments. Using publicly accessible historical Federal Reserve Economic Data (FRED), we construct a linear-Gaussian transition model and implement a discrete-action Markov Decision Process with a quadratic loss reward function. We compare nine different reinforcement-learning-style approaches against Taylor Rule and naive baselines, including tabular Q-learning variants, SARSA, Actor-Critic, Deep Q-Networks, Bayesian Q-learning with uncertainty quantification, and POMDP formulations with partial observability. Surprisingly, standard tabular Q-learning achieved the best performance (-615.13 ± 309.58 mean return), outperforming both enhanced RL methods and traditional policy rules. Our results suggest that while sophisticated RL techniques show promise for monetary policy applications, simpler approaches may be more robust in this domain, highlighting important challenges in applying modern RL to macroeconomic policy.
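The two ingredients named above, a quadratic loss reward and a tabular Q-learning update, can be sketched in a few lines. The target values, loss weight, learning rate, discount factor, and state labels below are illustrative assumptions; the abstract does not report the ones actually used.

```python
def quadratic_loss_reward(inflation, unemployment,
                          pi_target=2.0, u_target=4.0, weight=1.0):
    """Negative quadratic loss around assumed inflation/unemployment targets."""
    return -((inflation - pi_target) ** 2
             + weight * (unemployment - u_target) ** 2)


def q_update(q, state, action, reward, next_state, alpha=0.1, gamma=0.95):
    """One standard tabular Q-learning step; q maps state -> list of action values."""
    best_next = max(q[next_state])
    q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
    return q[state][action]


# Hypothetical discretised states; actions index rate moves such as cut/hold/hike.
q_table = {"high_inflation": [0.0, 0.0, 0.0], "on_target": [0.0, 1.0, 0.0]}
reward = quadratic_loss_reward(inflation=3.0, unemployment=5.0)
q_update(q_table, "high_inflation", 2, reward, "on_target", alpha=0.5, gamma=0.9)
```

Since the reward is a negative loss, returns are always non-positive, which is consistent with the negative mean return reported for the best agent.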

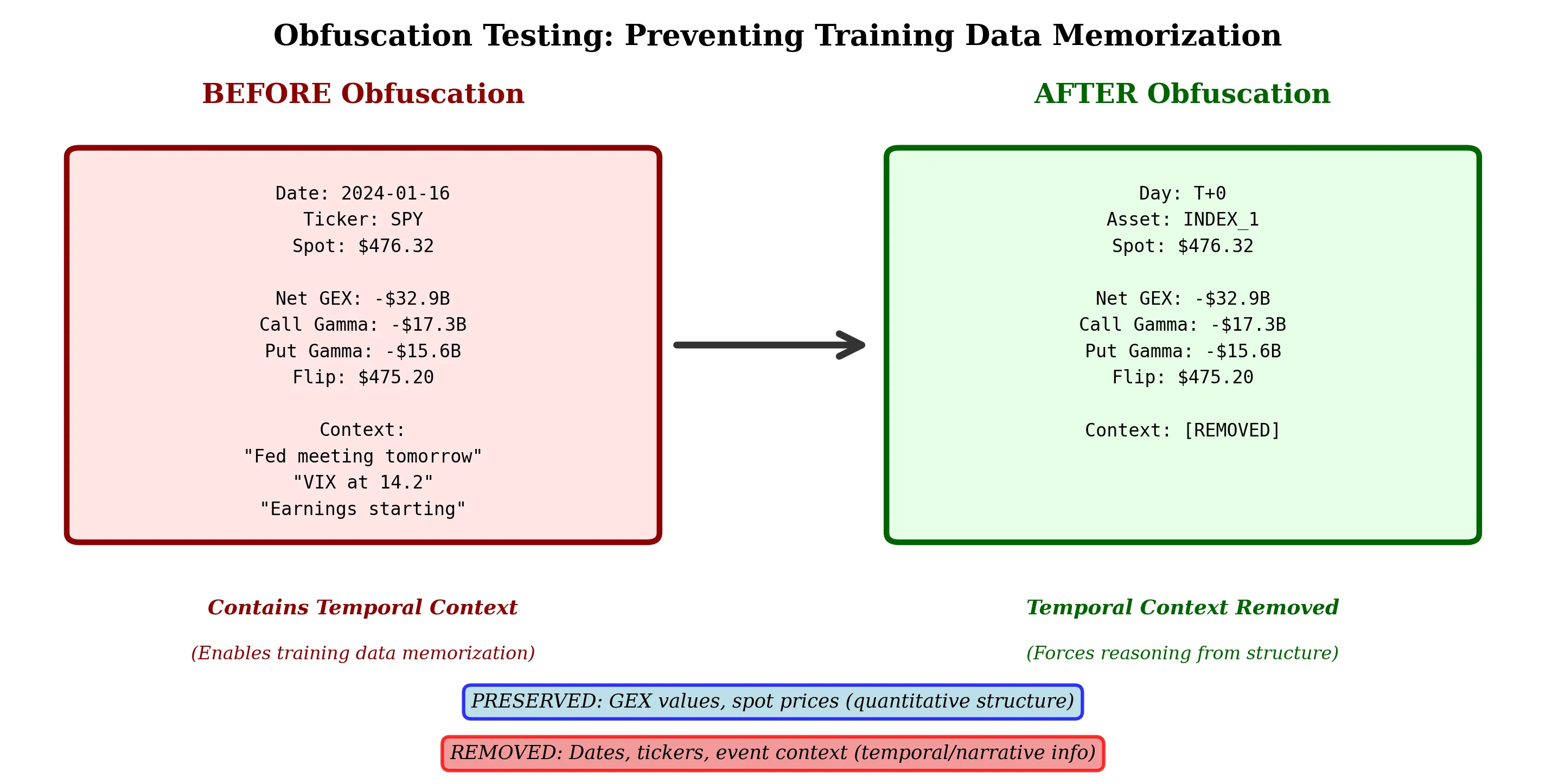

We introduce obfuscation testing, a novel methodology for validating whether large language models detect structural market patterns through causal reasoning rather than temporal association. Testing three dealer hedging constraint patterns (gamma positioning, stock pinning, 0DTE hedging) on 242 trading days (95.6% coverage) of S&P 500 options data, we find LLMs achieve 71.5% detection rate using unbiased prompts that provide only raw gamma exposure values without regime labels or temporal context. The WHO-WHOM-WHAT causal framework forces models to identify the economic actors (dealers), affected parties (directional traders), and structural mechanisms (forced hedging) underlying observed market dynamics. Critically, detection accuracy (91.2%) remains stable even as economic profitability varies quarterly, demonstrating that models identify structural constraints rather than profitable patterns. When prompted with regime labels, detection increases to 100%, but the 71.5% unbiased rate validates genuine pattern recognition. Our findings suggest LLMs possess emergent capabilities for detecting complex financial mechanisms through pure structural reasoning, with implications for systematic strategy development, risk management, and our understanding of how transformer architectures process financial market dynamics.

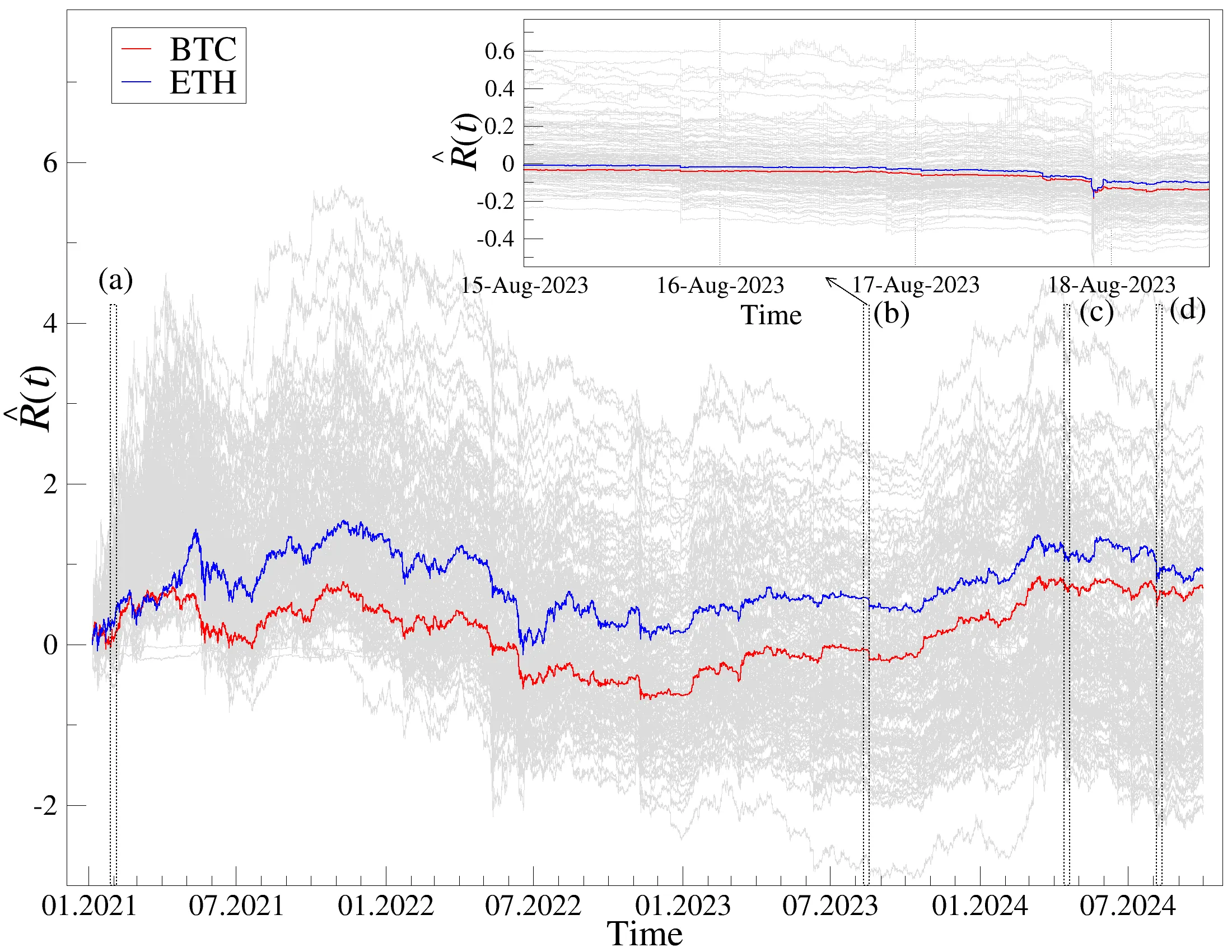

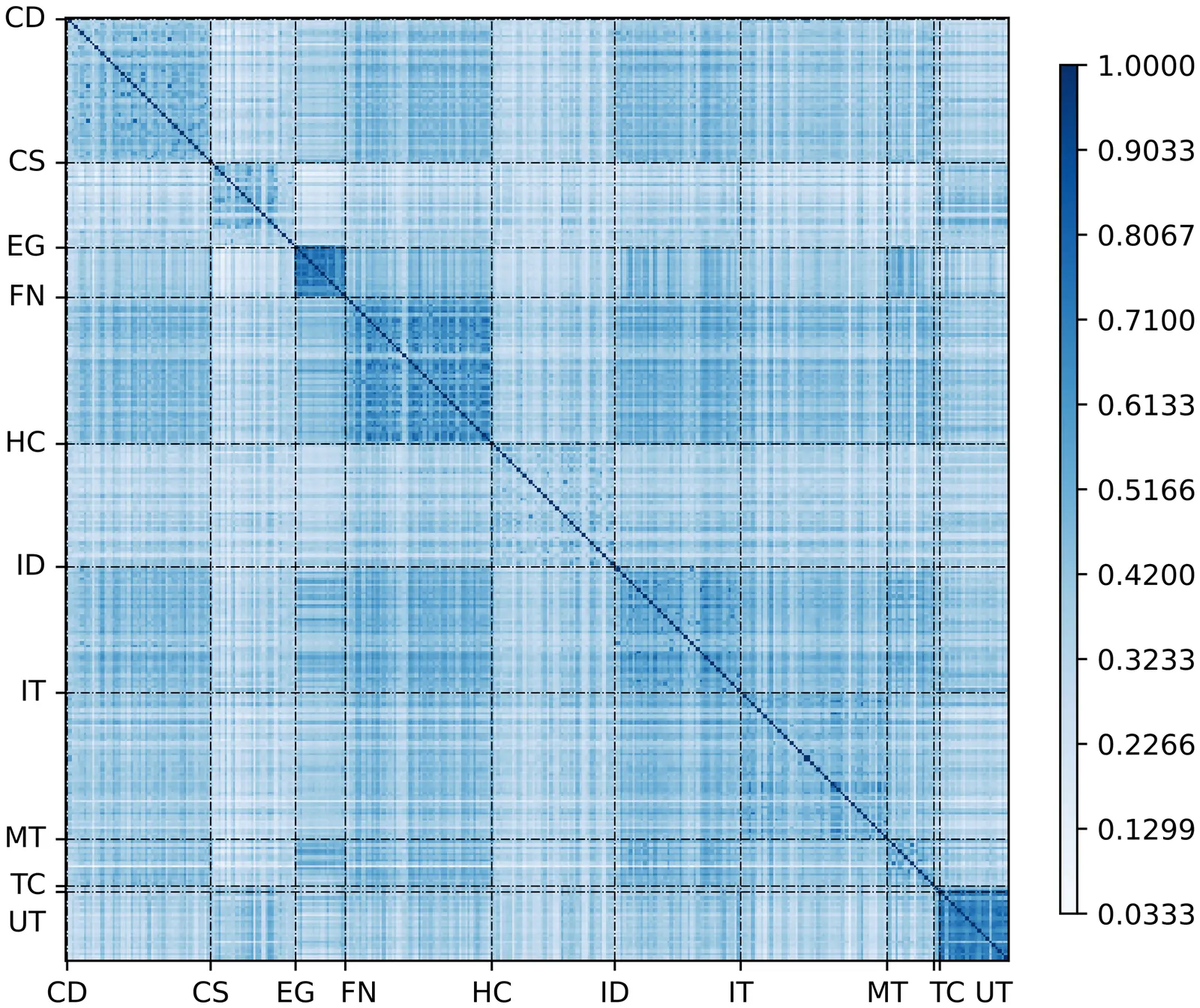

Correlations in complex systems are often obscured by nonstationarity, long-range memory, and heavy-tailed fluctuations, which limit the usefulness of traditional covariance-based analyses. To address these challenges, we construct scale- and fluctuation-dependent correlation matrices using the multifractal detrended cross-correlation coefficient $ρ_r$, which selectively emphasizes fluctuations of different amplitudes. We examine the spectral properties of these detrended correlation matrices and compare them to the spectral properties of matrices calculated in the same way from synthetic Gaussian and $q$-Gaussian signals. Our results show that detrending, heavy tails, and the fluctuation-order parameter $r$ jointly produce spectra that depart substantially from the random case even in the absence of cross-correlations in the time series. Applying this framework to one-minute returns of 140 major cryptocurrencies from 2021 to 2024 reveals robust collective modes, including a dominant market factor and several sectoral components whose strength depends on the analyzed scale and fluctuation order. After filtering out the market mode, the empirical eigenvalue bulk aligns closely with the limit of random detrended cross-correlations, enabling clear identification of structurally significant outliers. Overall, the study provides a refined spectral baseline for detrended cross-correlations and offers a promising tool for distinguishing genuine interdependencies from noise in complex, nonstationary, heavy-tailed systems.

This paper investigates an optimal integration of deep learning with financial models for robust asset price forecasting. Specifically, we developed a hybrid framework combining a Long Short-Term Memory (LSTM) network with the Merton-Lévy jump-diffusion model. To optimise this framework, we employed the Grey Wolf Optimizer (GWO) for the LSTM hyperparameter tuning, and we explored three calibration methods for the Merton-Lévy model parameters: Artificial Neural Networks (ANNs), the Marine Predators Algorithm (MPA), and the PyTorch-based TorchSDE library. To evaluate the predictive performance of our hybrid model, we compared it against several benchmark models, including a standard LSTM and an LSTM combined with the Fractional Heston model. This evaluation used three real-world financial datasets: Brent oil prices, the STOXX 600 index, and the IT40 index. Performance was assessed using standard metrics, including Mean Squared Error (MSE), Mean Absolute Error (MAE), Mean Squared Percentage Error (MSPE), and the coefficient of determination ($R^2$). Our experimental results demonstrate that the hybrid model, combining a GWO-optimized LSTM network with the Merton-Lévy jump-diffusion model calibrated using an ANN, outperformed the base LSTM model and all other models developed in this study.

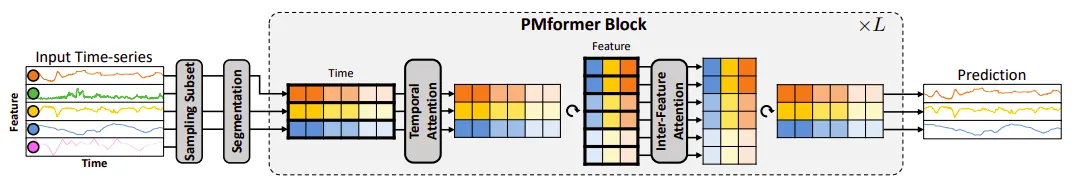

Forecasting cryptocurrency prices is hindered by extreme volatility and a methodological dilemma between information-scarce univariate models and noise-prone full-multivariate models. This paper investigates a partial-multivariate approach to balance this trade-off, hypothesizing that a strategic subset of features offers superior predictive power. We apply the Partial-Multivariate Transformer (PMformer) to forecast daily returns for BTCUSDT and ETHUSDT, benchmarking it against eleven classical and deep learning models. Our empirical results yield two primary contributions. First, we demonstrate that the partial-multivariate strategy achieves significant statistical accuracy, effectively balancing informative signals with noise. Second, we document and discuss a disconnect between this statistical performance and practical trading utility: lower prediction error did not consistently translate to higher financial returns in simulations. This finding challenges the reliance on traditional error metrics and highlights the need to develop evaluation criteria more aligned with real-world financial objectives.

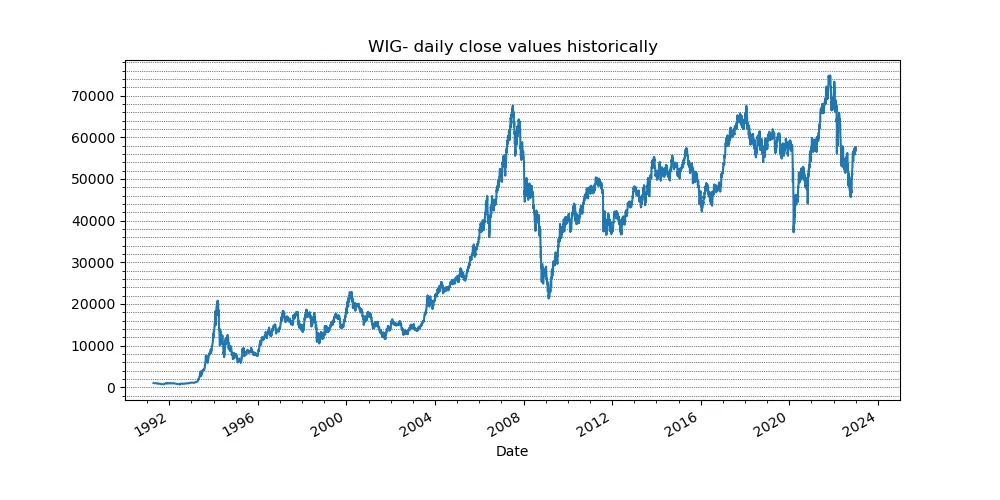

We study a systematic approach to a popular statistical arbitrage technique: pairs trading. Instead of relying on two highly correlated assets, we replace the second asset with a replication of the first using risk factor representations. These factors are obtained through Principal Components Analysis (PCA), exchange-traded funds (ETFs), and, as our main contribution, Long Short-Term Memory networks (LSTMs). Residuals between the main asset and its replication are examined for mean-reversion properties, and trading signals are generated for sufficiently fast mean-reverting portfolios. Beyond introducing a deep-learning-based replication method, we adapt the framework of Avellaneda and Lee (2008) to the Polish market. Accordingly, components of WIG20, mWIG40, and selected sector indices replace the original S&P 500 universe, and market parameters such as the risk-free rate and transaction costs are updated to reflect local conditions. We outline the full strategy pipeline: risk factor construction, residual modeling via the Ornstein-Uhlenbeck process, and signal generation. Each replication technique is described together with its practical implementation. Strategy performance is evaluated over two periods: 2017-2019 and the recession year 2020. All methods yield profits in 2017-2019, with PCA achieving roughly 20 percent cumulative return and an annualized Sharpe ratio of up to 2.63. Despite multiple adaptations, our conclusions remain consistent with those of the original paper. During the COVID-19 recession, only the ETF-based approach remains profitable (about 5 percent annual return), while PCA and LSTM methods underperform. LSTM results, although negative, are promising and indicate potential for future optimization.
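The residual-modeling and signal-generation steps can be illustrated with a small sketch in the spirit of Avellaneda and Lee: the cumulative residual is fitted with an AR(1) regression, the coefficients are mapped to Ornstein-Uhlenbeck parameters, and a standardised s-score measures how far the residual sits from its equilibrium. The function name, the 252-day annualisation, and any entry or exit thresholds applied to the s-score are assumptions here, not values taken from the paper.

```python
import math


def ou_s_score(residual, periods_per_year=252):
    """Estimate OU parameters from X_{t+1} = a + b * X_t + noise and return the s-score."""
    x, y = residual[:-1], residual[1:]
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    b = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / sxx
    a = mean_y - b * mean_x
    if not 0 < b < 1:
        raise ValueError("no mean reversion detected (need 0 < b < 1)")
    noise_var = sum((yi - a - b * xi) ** 2 for xi, yi in zip(x, y)) / (n - 2)
    kappa = -math.log(b) * periods_per_year       # annualised mean-reversion speed
    m = a / (1 - b)                               # equilibrium level
    sigma_eq = math.sqrt(noise_var / (1 - b * b))  # equilibrium standard deviation
    return {"b": b, "kappa": kappa, "m": m, "sigma_eq": sigma_eq,
            "s_score": (residual[-1] - m) / sigma_eq}
```

A large positive s-score suggests the asset is rich relative to its replication and a large negative one suggests it is cheap; the mean-reversion speed `kappa` can be used to discard residuals that revert too slowly to trade.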

We investigate a number of Artificial Neural Network architectures (well-known and more "exotic") applied to long-term financial time-series forecasts of indexes on different global markets. The particular area of interest of this research is to examine the correlation of these indexes' behaviour in terms of cross-training Machine Learning algorithms. Would training an algorithm on an index from one global market produce similar or even better accuracy when such a model is applied to predict another index from a different market? The demonstrated predominantly positive answer to this question is another argument in favour of the long-debated Efficient Market Hypothesis of Eugene Fama.

Starting from the Pearson correlation matrix of stock returns, and aiming to obtain a reduced number of parameters relevant to the dynamics of a financial market, we propose to reduce the idea of a sectorial matrix, which would have a large number of parameters, to the extreme case of a real symmetric $2 \times 2$ matrix that still retains the desirable feature that the average correlation can be one of the parameters. This is achieved by choosing two subsets of stocks for rows and columns and averaging the correlation matrix over each of the resulting blocks. Averaging over these blocks retains the average of the correlation matrix. We use a random selection for two equal block sizes, as well as two specific, hopefully relevant, selections that do not produce equal block sizes. The results show that one of the non-random choices has somewhat different properties, whose meaning will have to be analyzed from an economic point of view.

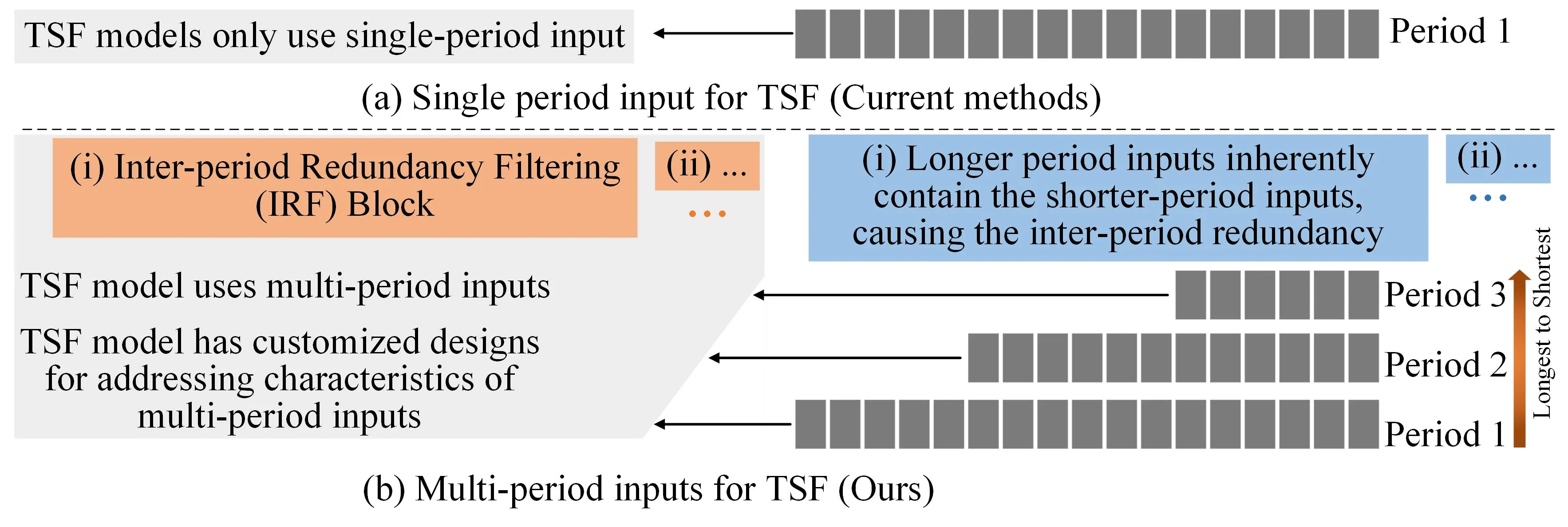

Time series forecasting is important in the finance domain. Financial time series (TS) patterns are influenced by both short-term public opinions and medium-/long-term policy and market trends. Hence, processing multi-period inputs becomes crucial for accurate financial time series forecasting (TSF). However, current TSF models either use only single-period input or lack customized designs for addressing multi-period characteristics. In this paper, we propose a Multi-period Learning Framework (MLF) to enhance financial TSF performance. MLF considers both TSF's accuracy and efficiency requirements. Specifically, we design three new modules to better integrate the multi-period inputs for improving accuracy: (i) Inter-period Redundancy Filtering (IRF), which removes the information redundancy between periods for accurate self-attention modeling, (ii) Learnable Weighted-average Integration (LWI), which effectively integrates multi-period forecasts, and (iii) Multi-period self-Adaptive Patching (MAP), which mitigates the bias towards certain periods by setting the same number of patches across all periods. Furthermore, we propose a Patch Squeeze module to reduce the number of patches in self-attention modeling for maximized efficiency. MLF incorporates multiple inputs with varying lengths (periods) to achieve better accuracy and reduces the costs of selecting input lengths during training. The code and datasets are available at https://github.com/Meteor-Stars/MLF.

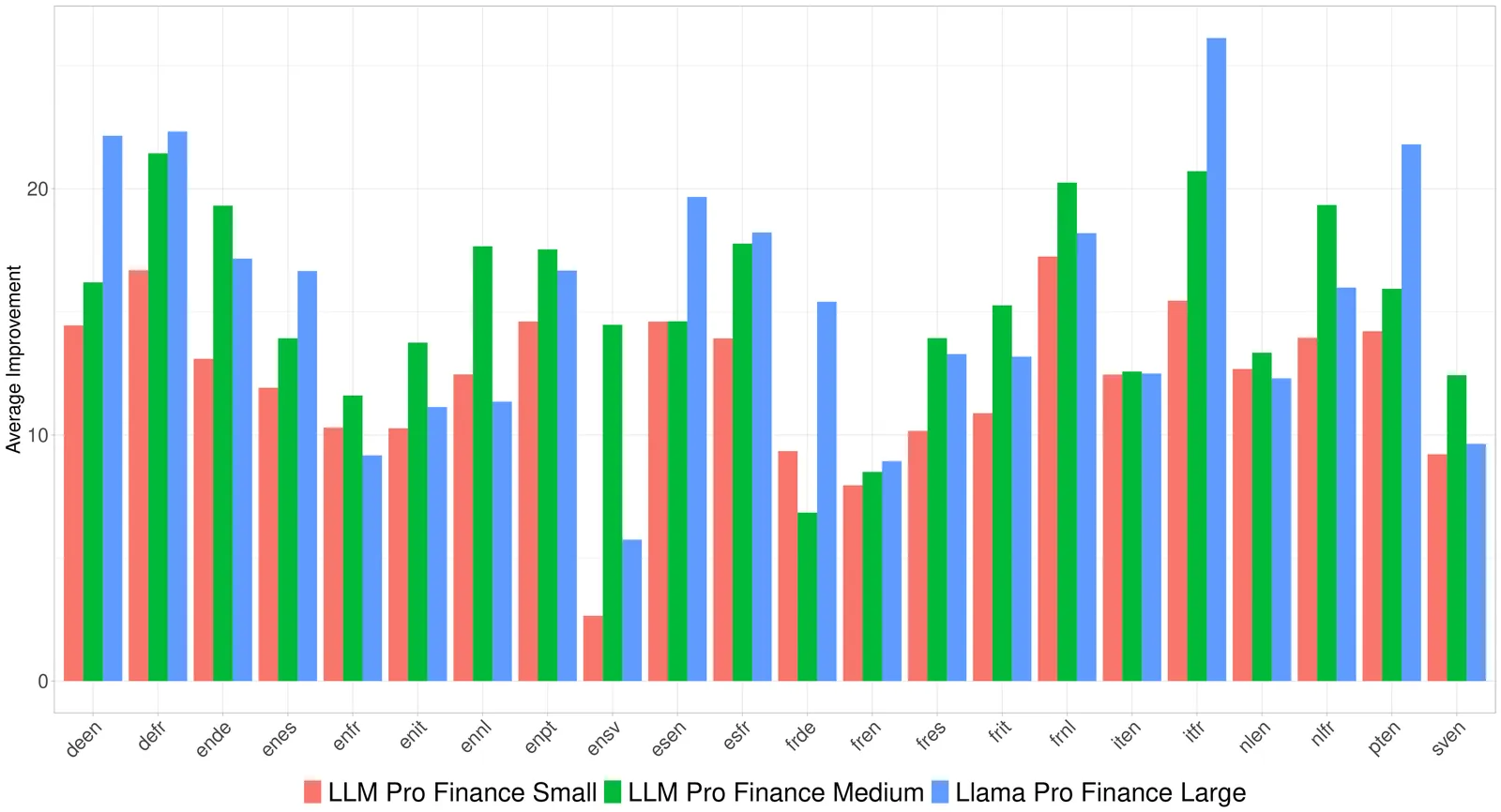

The financial industry's growing demand for advanced natural language processing (NLP) capabilities has highlighted the limitations of generalist large language models (LLMs) in handling domain-specific financial tasks. To address this gap, we introduce the LLM Pro Finance Suite, a collection of five instruction-tuned LLMs (ranging from 8B to 70B parameters) specifically designed for financial applications. Our approach focuses on enhancing generalist instruction-tuned models, leveraging their existing strengths in instruction following, reasoning, and toxicity control, while fine-tuning them on a curated, high-quality financial corpus comprising over 50% finance-related data in English, French, and German. We evaluate the LLM Pro Finance Suite on a comprehensive financial benchmark suite, demonstrating consistent improvement over state-of-the-art baselines in finance-oriented tasks and financial translation. Notably, our models maintain the strong general-domain capabilities of their base models, ensuring reliable performance across non-specialized tasks. This dual proficiency, enhanced financial expertise without compromise on general abilities, makes the LLM Pro Finance Suite an ideal drop-in replacement for existing LLMs in financial workflows, offering improved domain-specific performance while preserving overall versatility. We publicly release two 8B-parameter models to foster future research and development in financial NLP applications: https://huggingface.co/collections/DragonLLM/llm-open-finance.

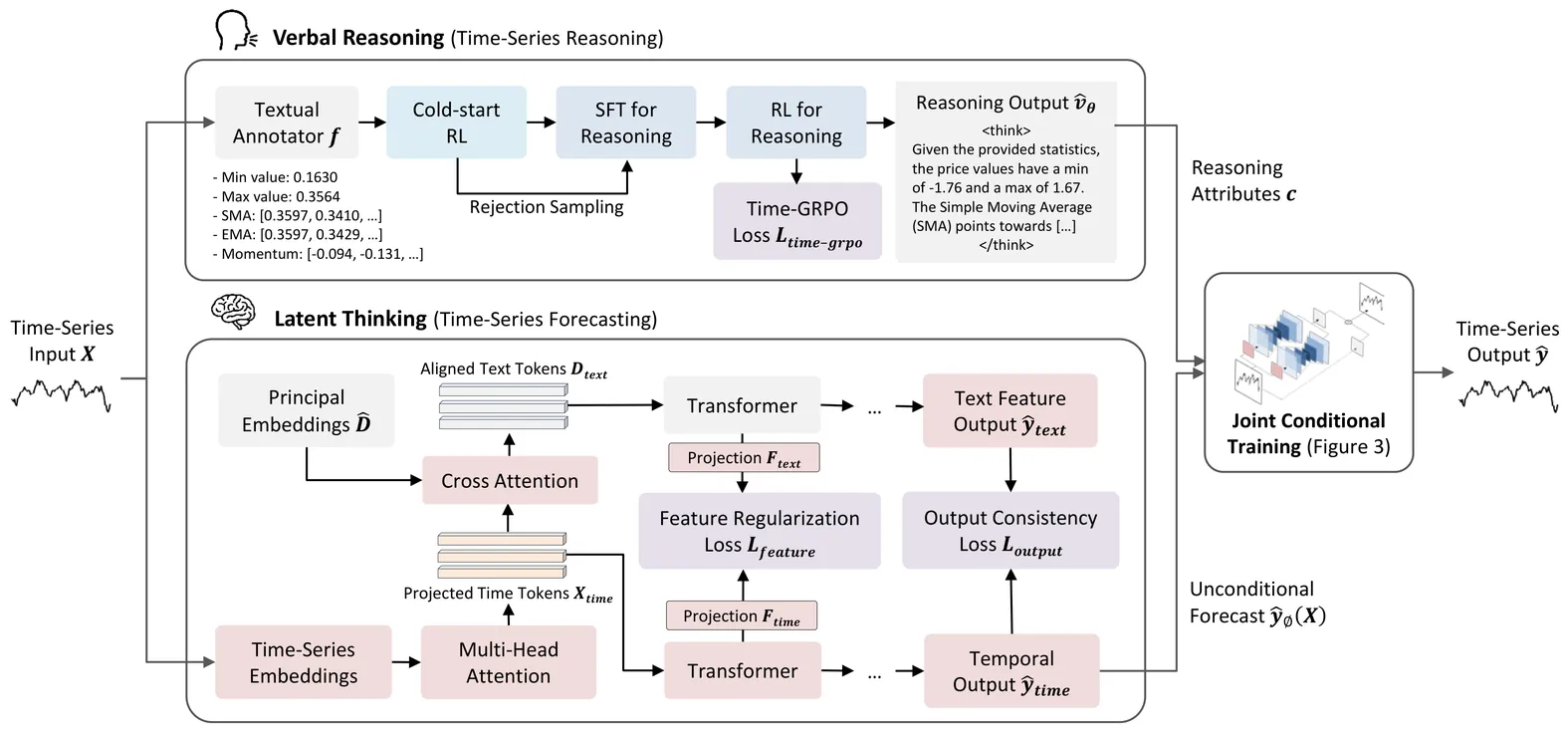

While Large Language Models have been used to produce interpretable stock forecasts, they mainly focus on analyzing textual reports rather than historical price data, the domain of Technical Analysis. This task is challenging as it switches between domains: the stock price inputs and outputs lie in the time-series domain, while the reasoning step should be in natural language. In this work, we introduce Verbal Technical Analysis (VTA), a novel framework that combines verbal and latent reasoning to produce stock time-series forecasts that are both accurate and interpretable. To reason over time-series, we convert stock price data into textual annotations and optimize the reasoning trace using an inverse Mean Squared Error (MSE) reward objective. To produce time-series outputs from textual reasoning, we condition the outputs of a time-series backbone model on the reasoning-based attributes. Experiments on stock datasets across U.S., Chinese, and European markets show that VTA achieves state-of-the-art forecasting accuracy, while the reasoning traces also perform well on evaluation by industry experts.

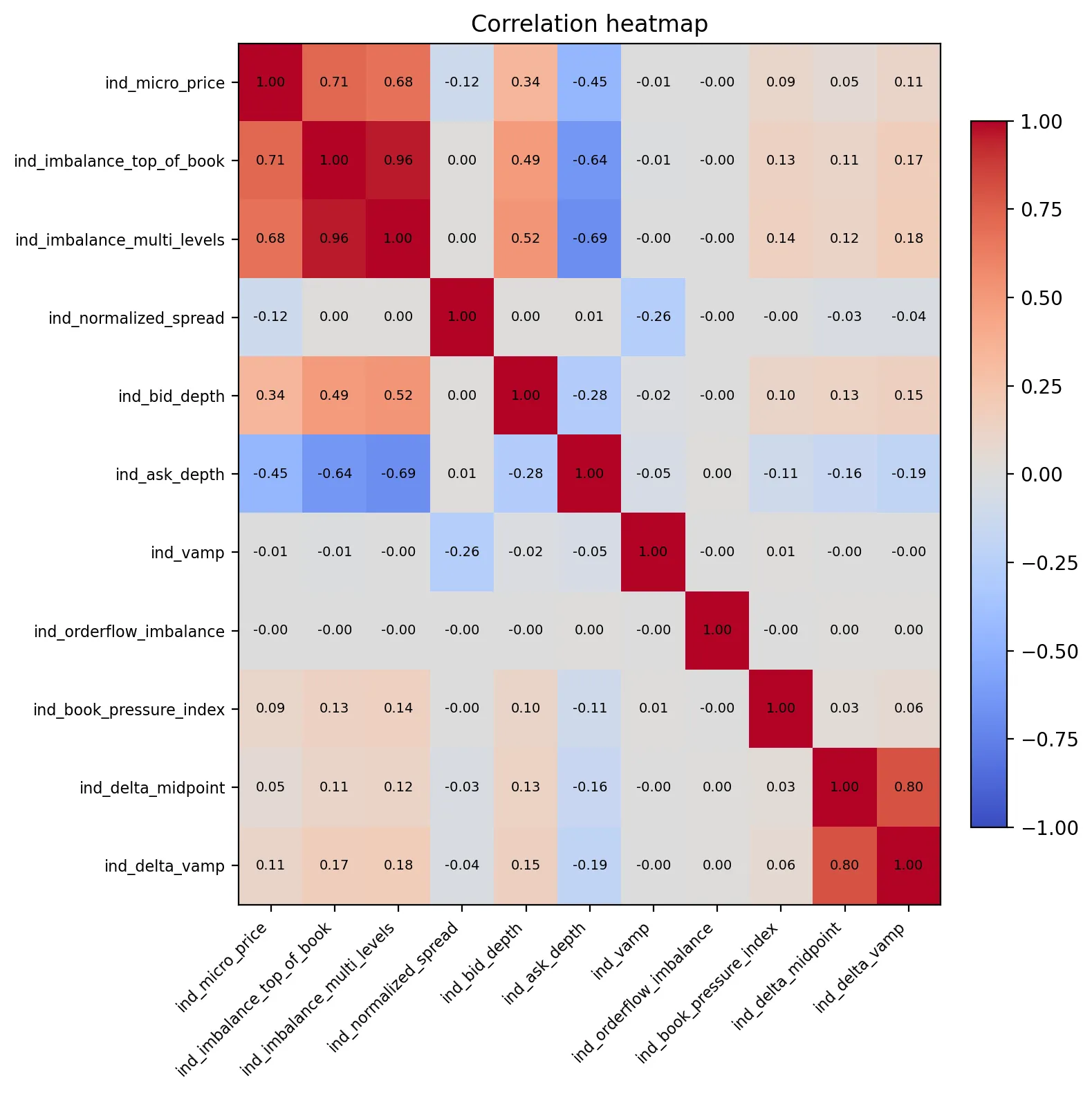

We study opportunistic optimal liquidation over fixed deadlines on BTC-USD limit-order books (LOB). We present RL-Exec, a PPO agent trained on historical replays augmented with endogenous transient impact (resilience), partial fills, maker/taker fees, and latency. The policy observes depth-20 LOB features plus microstructure indicators and acts under a sell-only inventory constraint to reach a residual target. Evaluation follows a strict time split (train: Jan-2020; test: Feb-2020) and a per-day protocol: for each test day we run ten independent start times and aggregate to a single daily score, avoiding pseudo-replication. We compare the agent to (i) TWAP and (ii) a VWAP-like baseline allocating using opposite-side order-book liquidity (top-20 levels), both executed on identical timestamps and costs. Statistical inference uses one-sided Wilcoxon signed-rank tests on daily RL-baseline differences with Benjamini-Hochberg FDR correction and bootstrap confidence intervals. On the Feb-2020 test set, RL-Exec significantly outperforms both baselines and the gap increases with the execution horizon (+2-3 bps at 30 min, +7-8 bps at 60 min, +23 bps at 120 min). Code: github.com/Giafferri/RL-Exec
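The multiple-testing step mentioned above can be sketched as follows. This is the standard Benjamini-Hochberg step-up procedure applied to a list of p-values (for example, one Wilcoxon p-value per horizon and baseline); the function name and the FDR level of 0.05 are illustrative assumptions, not details from the paper or its repository.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return a list of booleans: True where the hypothesis is rejected at FDR level q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff_rank = 0
    # Step-up rule: find the largest rank k with p_(k) <= k * q / m.
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank * q / m:
            cutoff_rank = rank
    rejected = [False] * m
    # Reject every hypothesis ranked at or below the cutoff.
    for idx in order[:cutoff_rank]:
        rejected[idx] = True
    return rejected
```

Note that the rule rejects all hypotheses up to the largest passing rank, even if some smaller-ranked p-values individually miss their own thresholds.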

Electricity price forecasting has become a critical tool for decision-making in energy markets, particularly as the increasing penetration of renewable energy introduces greater volatility and uncertainty. Historically, research in this field has been dominated by point forecasting methods, which provide single-value predictions but fail to quantify uncertainty. However, as power markets evolve due to renewable integration, smart grids, and regulatory changes, the need for probabilistic forecasting has become more pronounced, offering a more comprehensive approach to risk assessment and market participation. This paper presents a review of probabilistic forecasting methods, tracing their evolution from Bayesian and distribution based approaches, through quantile regression techniques, to recent developments in conformal prediction. Particular emphasis is placed on advancements in probabilistic forecasting, including validity-focused methods which address key limitations in uncertainty estimation. Additionally, this review extends beyond the Day-Ahead Market to include the Intra-Day and Balancing Markets, where forecasting challenges are intensified by higher temporal granularity and real-time operational constraints. We examine state of the art methodologies, key evaluation metrics, and ongoing challenges, such as forecast validity, model selection, and the absence of standardised benchmarks, providing researchers and practitioners with a comprehensive and timely resource for navigating the complexities of modern electricity markets.
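To make the validity-focused end of that spectrum concrete, here is a minimal sketch of split conformal prediction wrapped around an arbitrary point forecaster. It uses absolute residuals on a held-out calibration set as conformity scores; the function name and the example numbers are illustrative assumptions and are not drawn from the review.

```python
import math


def split_conformal_interval(cal_pred, cal_actual, new_pred, alpha=0.1):
    """Prediction interval with nominal coverage 1 - alpha (assuming exchangeability)."""
    scores = sorted(abs(a - p) for p, a in zip(cal_pred, cal_actual))
    n = len(scores)
    # Finite-sample corrected rank: the ceil((n + 1) * (1 - alpha))-th smallest score.
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        # Too few calibration points for this alpha: only the trivial interval is valid.
        return (-math.inf, math.inf)
    half_width = scores[k - 1]
    return (new_pred - half_width, new_pred + half_width)


# Illustrative calibration data: nine past forecasts and realised prices.
interval = split_conformal_interval([50.0] * 9,
                                    [51.0, 52.0, 53.0, 54.0, 55.0,
                                     56.0, 57.0, 58.0, 59.0],
                                    new_pred=40.0, alpha=0.2)
```

The coverage guarantee relies on calibration and test points being exchangeable, which is one reason validity under time-varying market conditions is listed among the open challenges.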

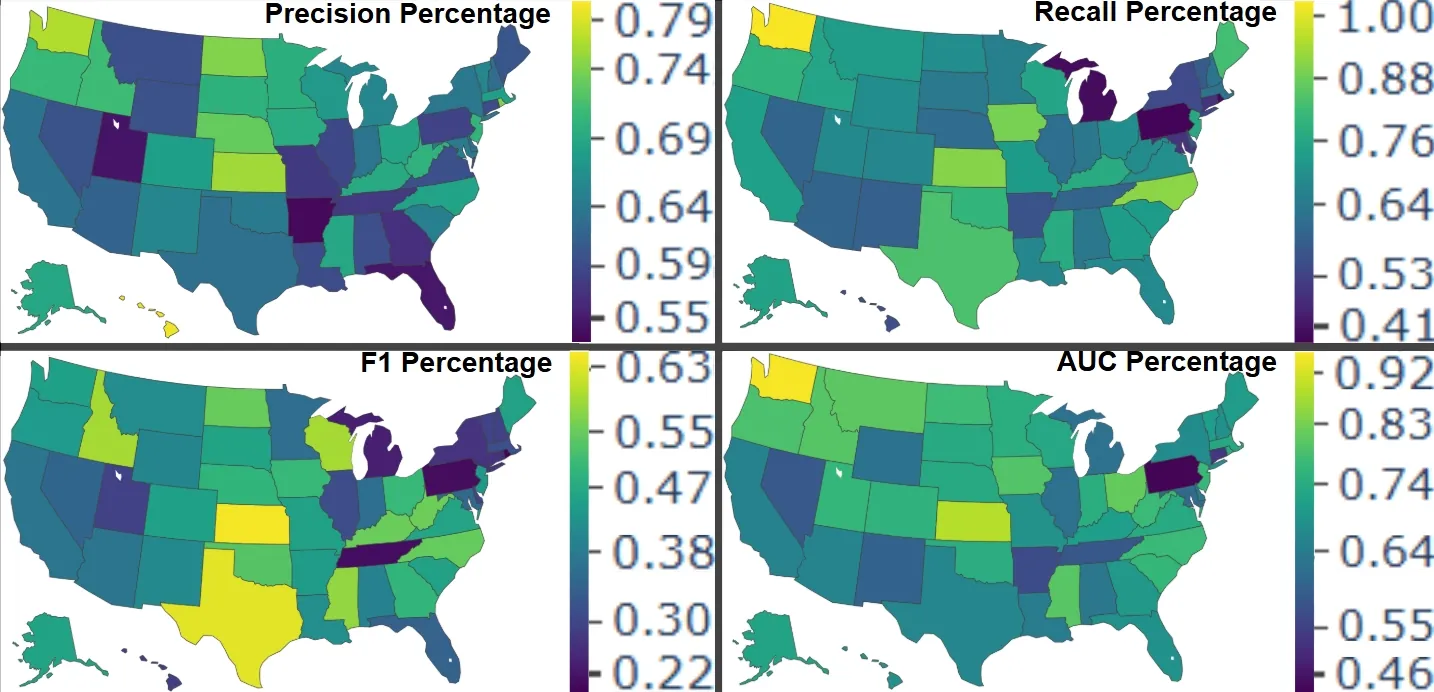

We present the first application of federated learning (FL) to the U.S. National Financial Capability Study, introducing an interpretable framework for predicting consumer financial distress across all 50 states and the District of Columbia without centralizing sensitive data. Our cross-silo FL setup treats each state as a distinct data silo, simulating real-world governance in nationwide financial systems. Unlike prior work, our approach integrates two complementary explainable AI techniques to identify both global (nationwide) and local (state-specific) predictors of financial hardship, such as contact from debt collection agencies. We develop a machine learning model specifically suited for highly categorical, imbalanced survey data. This work delivers a scalable, regulation-compliant blueprint for early warning systems in finance, demonstrating how FL can power socially responsible AI applications in consumer credit risk and financial inclusion.

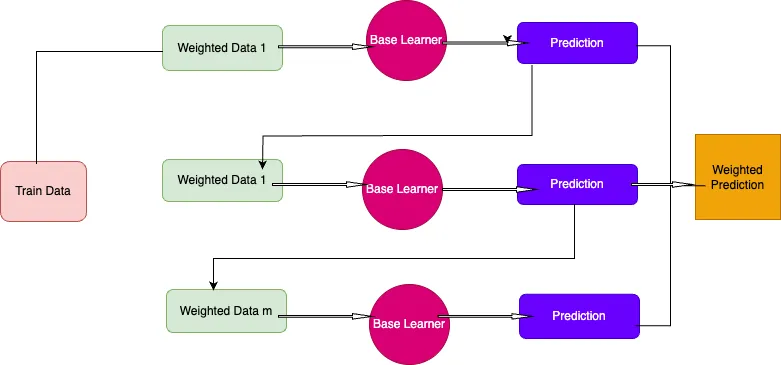

This study presents a three-step machine learning framework to predict bubbles in the S&P 500 stock market by combining financial news sentiment with macroeconomic indicators. Building on traditional econometric approaches, the proposed approach predicts bubble formation by integrating textual and quantitative data sources. In the first step, bubble periods in the S&P 500 index are identified using a right-tailed unit root test, a widely recognized real-time bubble detection method. The second step extracts sentiment features from large-scale financial news articles using natural language processing (NLP) techniques, which capture investors' expectations and behavioral patterns. In the final step, ensemble learning methods are applied to predict bubble occurrences based on high sentiment-based and macroeconomic predictors. Model performance is evaluated through k-fold cross-validation and compared against benchmark machine learning algorithms. Empirical results indicate that the proposed three-step ensemble approach significantly improves predictive accuracy and robustness, providing valuable early warning insights for investors, regulators, and policymakers in mitigating systemic financial risks.

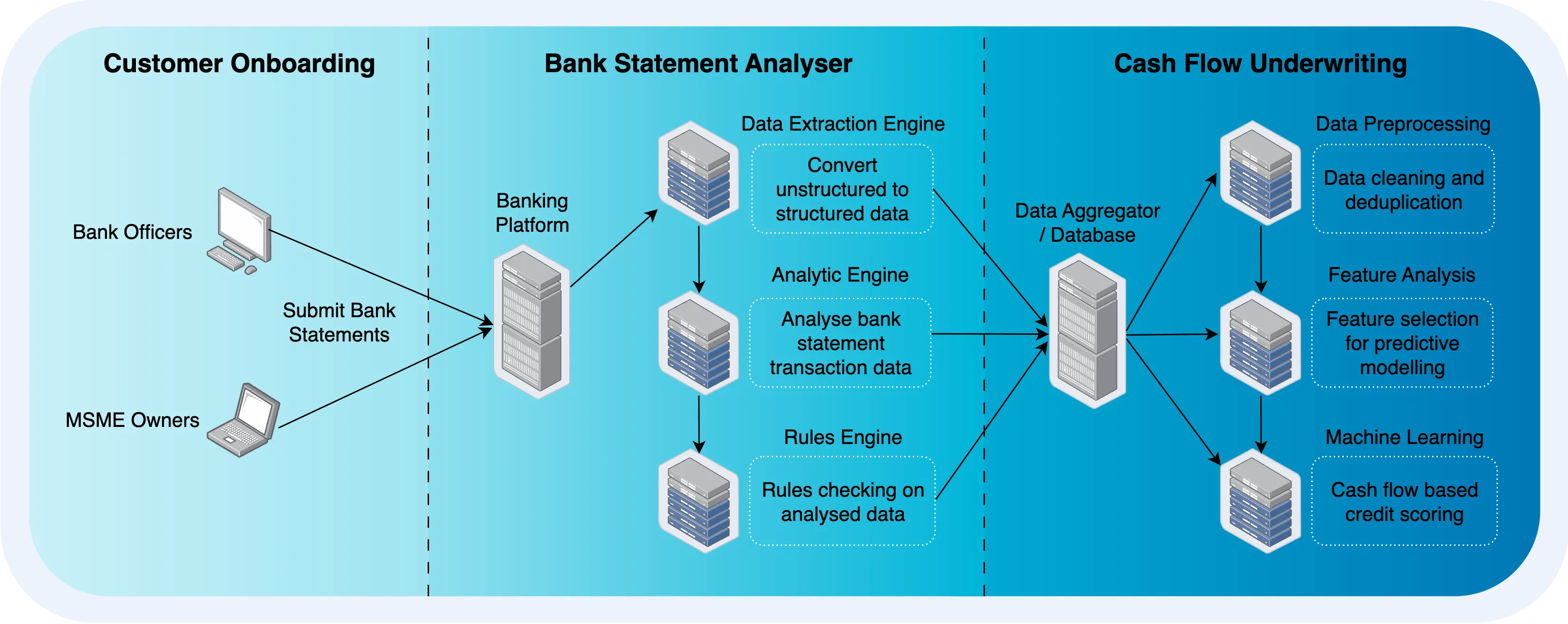

Although Micro, Small, and Medium Enterprises (MSMEs) account for 96.1% of all businesses in Malaysia, access to financing remains one of their most persistent challenges. Newly established businesses are often excluded from formal credit markets as traditional underwriting approaches rely heavily on credit bureau data. This study investigates the potential of bank statement data as an alternative data source for credit assessment to promote financial inclusion in emerging markets. First, we propose a cash flow-based underwriting pipeline where we utilise bank statement data for end-to-end data extraction and machine learning credit scoring. Second, we introduce a novel dataset of 611 loan applicants from a Malaysian lending institution. Third, we develop and evaluate credit scoring models based on application information and bank transaction-derived features. Empirical results show that the use of such data boosts the performance of all models on our dataset, which can improve credit scoring for new-to-lending MSMEs. Finally, we will release the anonymised bank transaction dataset to facilitate further research on MSME financial inclusion within Malaysia's emerging economy.