Medical Physics

Medical physics, radiation therapy physics, medical imaging.


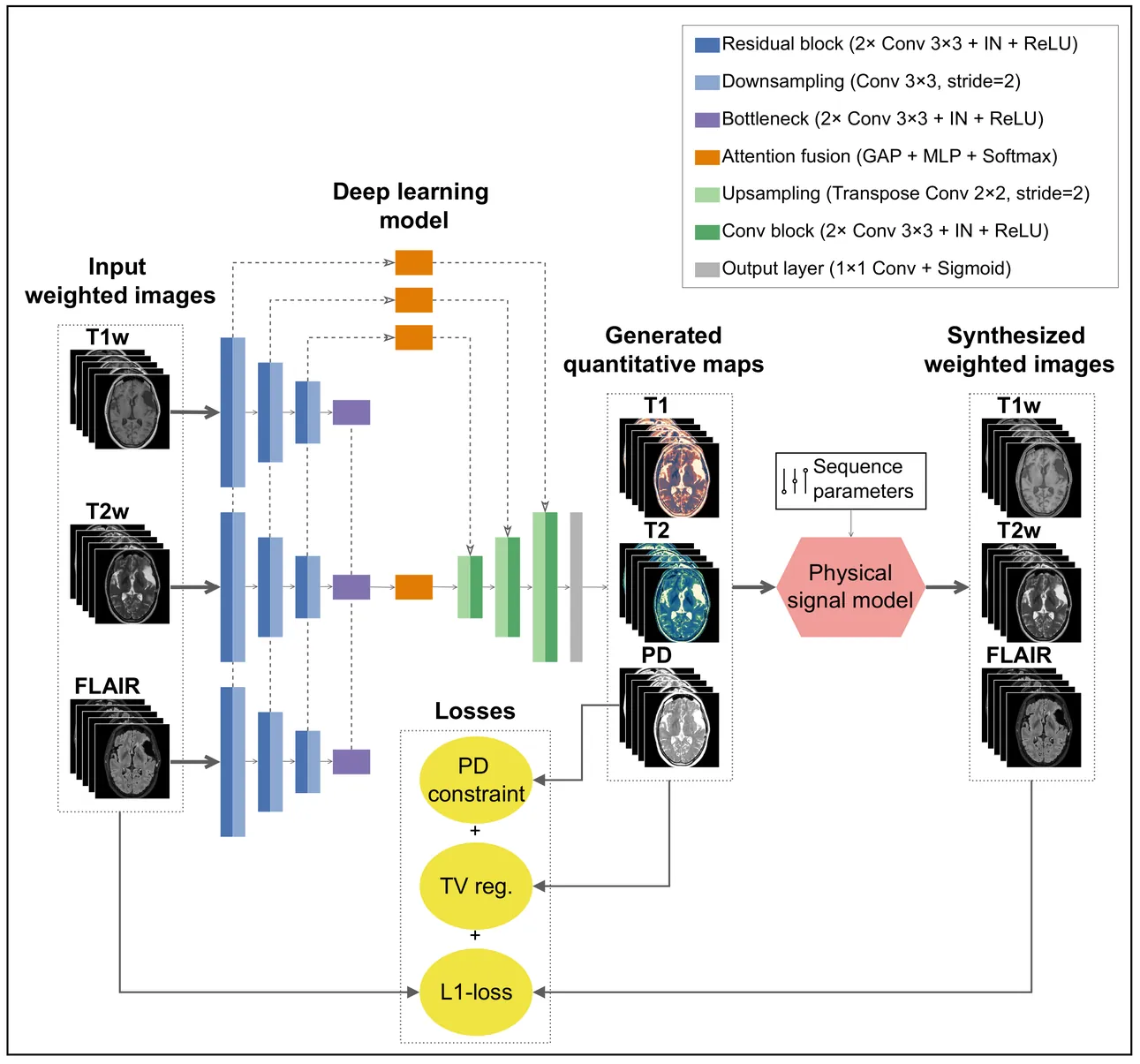

Magnetic resonance imaging (MRI) is a cornerstone of clinical neuroimaging, yet conventional MRIs provide qualitative information heavily dependent on scanner hardware and acquisition settings. While quantitative MRI (qMRI) offers intrinsic tissue parameters, the requirement for specialized acquisition protocols and reconstruction algorithms restricts its availability and impedes large-scale biomarker research. This study presents a self-supervised physics-guided deep learning framework to infer quantitative T1, T2, and proton-density (PD) maps directly from widely available clinical conventional T1-weighted, T2-weighted, and FLAIR MRIs. The framework was trained and evaluated on a large-scale, clinically heterogeneous dataset comprising 4,121 scan sessions acquired at our institution over six years on four different 3 T MRI scanner systems, capturing real-world clinical variability. The framework integrates Bloch-based signal models directly into the training objective. Across more than 600 test sessions, the generated maps exhibited white matter and gray matter values consistent with literature ranges. Additionally, the generated maps showed invariance to scanner hardware and acquisition protocol groups, with inter-group coefficients of variation $\leq$ 1.1%. Subject-specific analyses demonstrated excellent voxel-wise reproducibility across scanner systems and sequence parameters, with Pearson $r$ and concordance correlation coefficients exceeding 0.82 for T1 and T2. Mean relative voxel-wise differences were low across all quantitative parameters, especially for T2 ($<$ 6%). These results indicate that the proposed framework can robustly transform diverse clinical conventional MRI data into quantitative maps, potentially paving the way for large-scale quantitative biomarker research.
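
Building "Bloch-based signal models" into the training objective can be illustrated with the textbook steady-state approximations for spin-echo and inversion-recovery (FLAIR) contrasts. The sketch below is an illustration under strong assumptions, not the authors' model. It ignores slice profiles, refocusing imperfections, and the unknown per-image intensity scaling of clinical scans. The names `physics_consistency_loss` and its `protocols` structure are hypothetical.

```python
import numpy as np


def spin_echo_signal(pd, t1, t2, tr, te):
    """Idealized spin-echo signal PD * (1 - exp(-TR/T1)) * exp(-TE/T2).

    Assumes TE << TR and perfect 90/180-degree pulses; all times in ms.
    """
    return pd * (1.0 - np.exp(-tr / t1)) * np.exp(-te / t2)


def flair_signal(pd, t1, t2, tr, te, ti):
    """Idealized inversion-recovery (FLAIR) magnitude signal.

    |1 - 2 exp(-TI/T1) + exp(-TR/T1)| * exp(-TE/T2), perfect inversion assumed.
    """
    longitudinal = 1.0 - 2.0 * np.exp(-ti / t1) + np.exp(-tr / t1)
    return pd * np.abs(longitudinal) * np.exp(-te / t2)


def physics_consistency_loss(pd, t1, t2, observed, protocols):
    """Hypothetical self-supervised loss: re-synthesize each contrast from the
    predicted maps and compare it with the acquired image (plain MSE)."""
    losses = []
    for name, (signal_fn, params) in protocols.items():
        predicted = signal_fn(pd, t1, t2, **params)
        losses.append(np.mean((predicted - observed[name]) ** 2))
    return float(np.mean(losses))
```

Because the weighted images are re-synthesized from the predicted T1, T2, and PD maps and compared with the acquired images, a loss of this kind needs no ground-truth quantitative maps, which is what makes it self-supervised.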

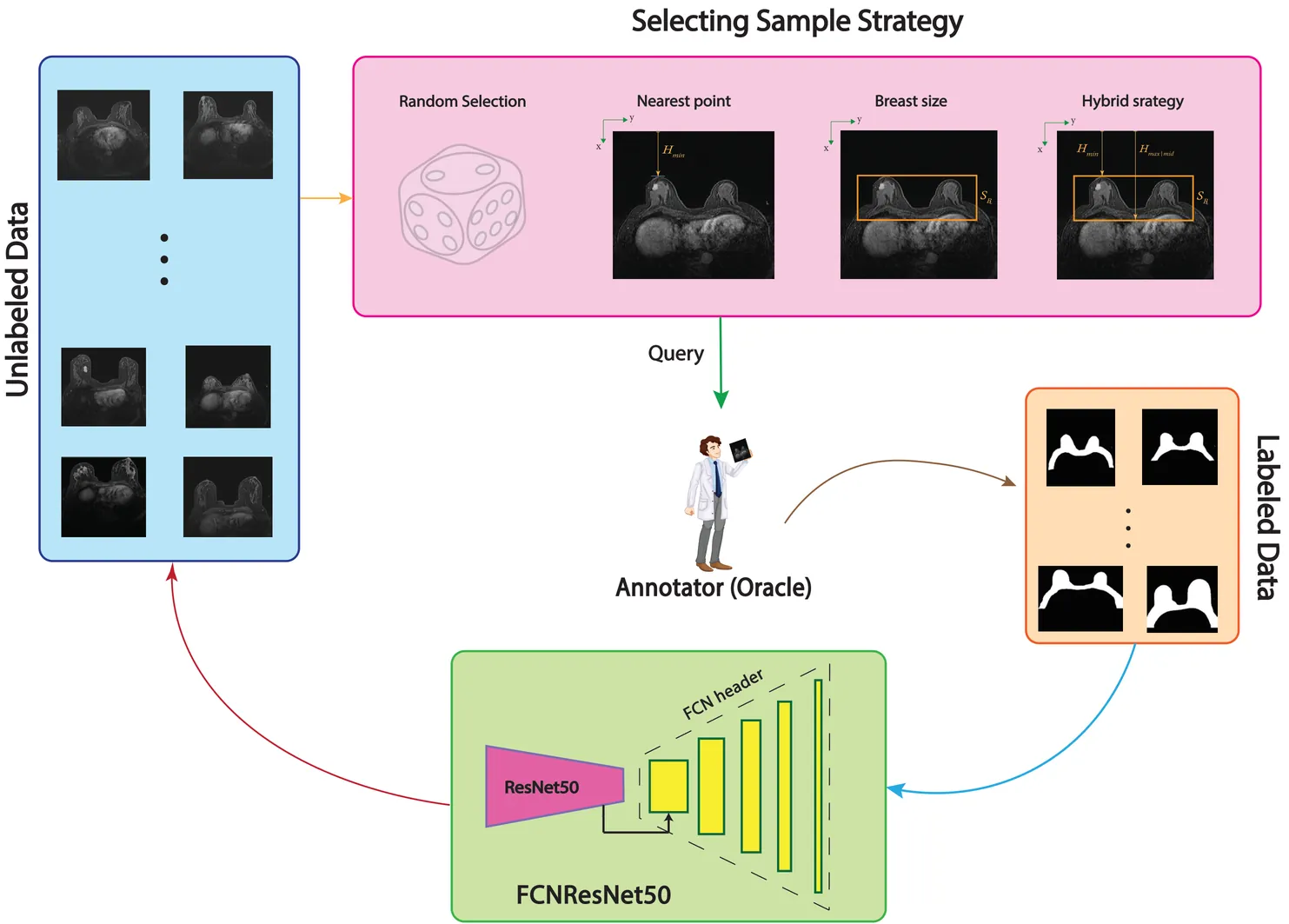

Purpose: Annotation of medical breast images is an essential step toward better diagnosis, but it is a time-consuming task. This research investigates different sample selection strategies within deep active learning for Breast Region Segmentation (BRS) to reduce the computational cost of training and to use resources effectively. Methods: The Stavanger breast MRI dataset containing 59 patients was used in this study, with FCN-ResNet50 adopted as a sustainable deep learning (DL) model. A novel sample selection approach based on Breast Anatomy Geometry (BAG) analysis was introduced to group data with similar informative features for DL. Patient positioning and breast size were considered the key selection criteria in this process. Four selection strategies, namely Random Selection, Nearest Point, Breast Size, and a hybrid of all three, were evaluated using an active learning framework. Four training data proportions of 10%, 20%, 30%, and 40% were used for model training, with the remaining data reserved for testing. Model performance was assessed using Dice score, Intersection over Union, precision, and recall, along with 5-fold cross-validation to enhance generalizability. Results: Increasing the training data proportion from 10% to 40% improved segmentation performance for all strategies except Random Selection. The Nearest Point strategy consistently achieved the lowest carbon footprint at 30% and 40% data proportions. Overall, combining the Nearest Point strategy with 30% of the training data provided the best balance between segmentation performance, efficiency, and environmental sustainability. Keywords: Deep Active Learning, Breast Region Segmentation, Human-center analysis
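
The four evaluation metrics named above have standard definitions in terms of true positives (TP), false positives (FP), and false negatives (FN). Below is a minimal NumPy sketch for binary masks. The small `eps` that guards against empty masks is an implementation choice, not something the study specifies.

```python
import numpy as np


def segmentation_metrics(pred, truth, eps=1e-8):
    """Dice, IoU, precision, and recall for binary segmentation masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.logical_and(pred, truth).sum()
    fp = np.logical_and(pred, ~truth).sum()
    fn = np.logical_and(~pred, truth).sum()
    return {
        "dice": 2 * tp / (2 * tp + fp + fn + eps),  # 2|A∩B| / (|A| + |B|)
        "iou": tp / (tp + fp + fn + eps),           # |A∩B| / |A∪B|
        "precision": tp / (tp + fp + eps),
        "recall": tp / (tp + fn + eps),
    }
```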

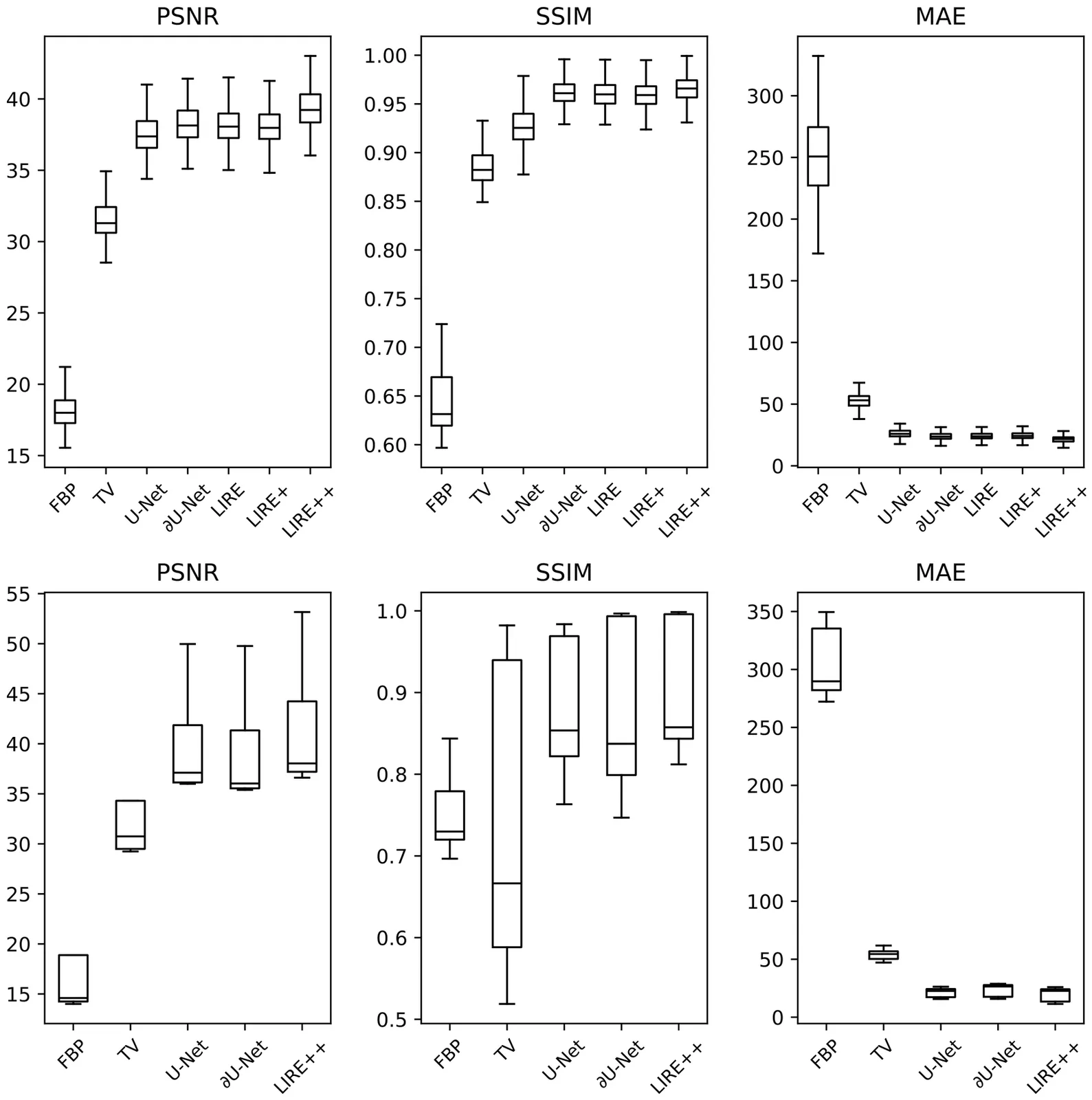

Cone-beam CT (CBCT) is an important imaging modality, but its lower image quality compared with conventional computed tomography (CT) remains a limiting factor in CBCT applications. Deep learning reconstruction methods are a promising alternative to classical analytical and iterative reconstruction methods, but applying them to CBCT is often difficult due to the lack of ground-truth data, memory limitations, and the need for fast inference at clinically relevant resolutions. In this work, we propose LIRE++, an end-to-end rotationally equivariant, multiscale, learned invertible primal-dual scheme for fast and memory-efficient CBCT reconstruction. Memory optimizations and multiscale reconstruction allow for fast training and inference, while rotational equivariance improves parameter efficiency. LIRE++ was trained on simulated projection data from a fast quasi-Monte Carlo CBCT projection simulator, which we also developed. Evaluated on synthetic data, LIRE++ gave an average improvement of 1 dB in peak signal-to-noise ratio (PSNR) over alternative deep learning baselines. On real clinical data, LIRE++ reduced the average mean absolute error (MAE) between the reconstruction and the corresponding planning CT by 10 Hounsfield units (HU) relative to the current proprietary state-of-the-art hybrid deep-learning/iterative method.
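
Invertible networks save memory because each layer's input can be recomputed exactly from its output during backpropagation, so intermediate activations need not be stored. The additive coupling block below is a generic illustration of that property. The class name and the `tanh` inner function are assumptions. It is not the LIRE++ architecture, which additionally uses primal-dual updates, multiscale processing, and rotational equivariance.

```python
import numpy as np


class AdditiveCoupling:
    """Generic invertible additive coupling block: y1 = x1, y2 = x2 + f(x1)."""

    def __init__(self, width, seed=0):
        rng = np.random.default_rng(seed)
        # Weights of the inner function f; f itself need not be invertible.
        self.weights = rng.normal(scale=0.1, size=(width, width))

    def f(self, x1):
        return np.tanh(x1 @ self.weights)

    def forward(self, x1, x2):
        return x1, x2 + self.f(x1)

    def inverse(self, y1, y2):
        # Exact reconstruction of the inputs from the outputs, so activations
        # can be recomputed during backpropagation instead of being stored.
        return y1, y2 - self.f(y1)
```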

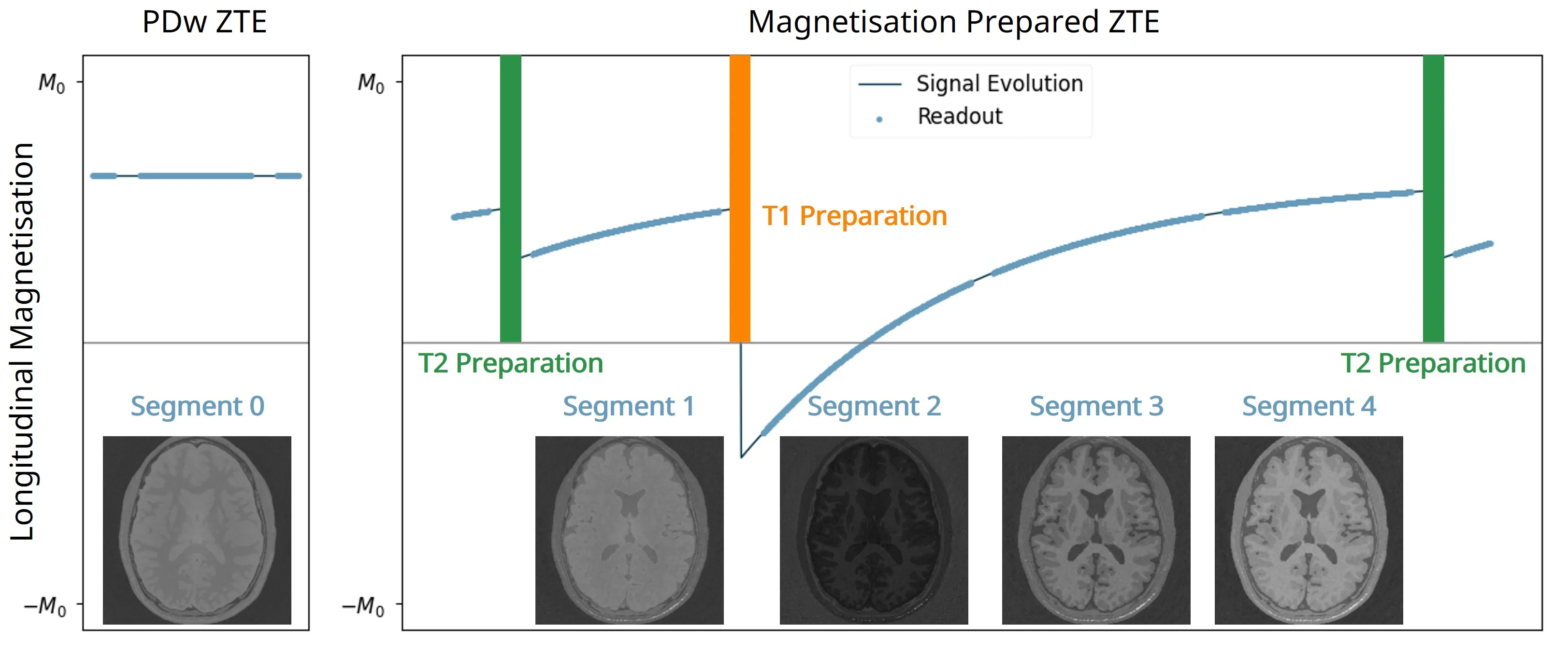

The 3D fast silent multi-parametric mapping sequence with zero echo time (MuPa-ZTE) is a novel quantitative MRI (qMRI) acquisition that enables nearly silent scanning by using a 3D phyllotaxis sampling scheme. MuPa-ZTE improves patient comfort and motion robustness, and generates quantitative maps of T1, T2, and proton density from the acquired weighted image series. In this work, we propose a diffusion model-based qMRI mapping method that leverages both a deep generative model and physics-based data consistency to further improve mapping performance. Furthermore, our method enables additional acquisition acceleration, allowing high-quality qMRI mapping from a fourfold-accelerated MuPa-ZTE scan (approximately 1 minute). Specifically, we trained a denoising diffusion probabilistic model (DDPM) to map MuPa-ZTE image series to qMRI maps, and we incorporated the MuPa-ZTE forward signal model as an explicit data consistency (DC) constraint during inference. We compared our mapping method against a baseline dictionary matching approach and a purely data-driven diffusion model. The diffusion models were trained entirely on synthetic data generated from digital brain phantoms, eliminating the need for large real-scan datasets. We evaluated the method on synthetic data, a NIST/ISMRM phantom, healthy volunteers, and a patient with brain metastases. The results demonstrated that our method produces 3D qMRI maps with high accuracy, reduced noise, and better preservation of structural details. Notably, it generalised well to real scans despite being trained on synthetic data alone. The combination of the MuPa-ZTE acquisition and our physics-informed diffusion model is termed q3-MuPa, a quick, quiet, and quantitative multi-parametric mapping framework, and our findings highlight its strong clinical potential.
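
Dictionary matching baselines like the one mentioned above are commonly implemented in three steps. First, simulate signal evolutions on a (T1, T2) grid and normalize them. Second, pick the entry with the largest inner product for each voxel. Third, obtain PD as the least-squares scale factor. In this sketch, `toy_signal` is a hypothetical spin-echo stand-in, not the MuPa-ZTE forward model.

```python
import numpy as np


def toy_signal(t1, t2, tr, te):
    # Hypothetical stand-in forward model (NOT the MuPa-ZTE signal model).
    return (1.0 - np.exp(-tr / t1)) * np.exp(-te / t2)


def build_dictionary(t1_grid, t2_grid, tr, te):
    params = np.array([(a, b) for a in t1_grid for b in t2_grid])
    atoms = np.stack([toy_signal(a, b, tr, te) for a, b in params])
    return params, atoms


def dictionary_match(signals, params, atoms):
    """Return matched T1, T2, and PD for each row of `signals`."""
    norms = np.linalg.norm(atoms, axis=1)
    normalized = atoms / norms[:, None]
    scores = signals @ normalized.T            # (n_voxels, n_atoms)
    best = np.argmax(scores, axis=1)
    # If signal = PD * atom, then score = PD * |atom|, so PD = score / |atom|.
    pd = scores[np.arange(len(best)), best] / norms[best]
    return params[best, 0], params[best, 1], pd
```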

Subject-specific cardiovascular models rely on parameter estimation using measurements such as 4D Flow MRI data. However, acquiring high-resolution, high-fidelity functional flow data is costly and taxing for the patient. As a result, there is growing interest in using highly undersampled MRI data to reduce acquisition time, and thus cost, while maximizing the information gained from the data. Recent examples include inverse problems that estimate boundary conditions of aortic blood flow from highly undersampled k-space data. The undersampled data are selected according to a predefined sampling mask, which can significantly influence the performance of the inverse problem and the quality of its solution. While many established sampling patterns exist for collecting undersampled data, it remains unclear how to select the best sampling pattern for a given set of inference parameters. In this paper, we propose an Optimal Experimental Design (OED) framework for MRI measurements in k-space, aiming to find optimal masks for estimating specific parameters directly from k-space. Since OED is typically applied to sensor placement problems in physical space, this is, to our knowledge, the first time the technique has been used in this context. We demonstrate that the masks optimized with OED consistently outperform conventional sampling patterns in terms of parameter estimation accuracy and variance, enabling a tenfold reduction in acquisition time while maintaining accuracy.
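
One common way to formalize mask selection is D-optimal design. For a linearized measurement model with Gaussian noise, it chooses the k-space samples that maximize the log-determinant of the Fisher information with respect to the inference parameters. The greedy sketch below assumes a precomputed Jacobian whose rows are the sensitivities of individual k-space samples to the parameters. The criterion, the greedy strategy, and the function name are assumptions and may differ from the paper's OED formulation.

```python
import numpy as np


def greedy_d_optimal_mask(jacobian, budget, ridge=1e-6):
    """Greedily pick `budget` rows of `jacobian` maximizing log det(Fisher info)."""
    n_samples, n_params = jacobian.shape
    if budget > n_samples:
        raise ValueError("budget exceeds the number of candidate samples")
    selected = []
    info = ridge * np.eye(n_params)  # small ridge keeps early determinants finite
    for _ in range(budget):
        best_logdet, best_idx = -np.inf, None
        for i in range(n_samples):
            if i in selected:
                continue
            row = jacobian[i:i + 1]
            _, logdet = np.linalg.slogdet(info + row.conj().T @ row)
            if logdet > best_logdet:
                best_logdet, best_idx = logdet, i
        selected.append(best_idx)
        row = jacobian[best_idx:best_idx + 1]
        info = info + row.conj().T @ row
    mask = np.zeros(n_samples, dtype=bool)
    mask[selected] = True
    return mask, np.linalg.slogdet(info)[1]
```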

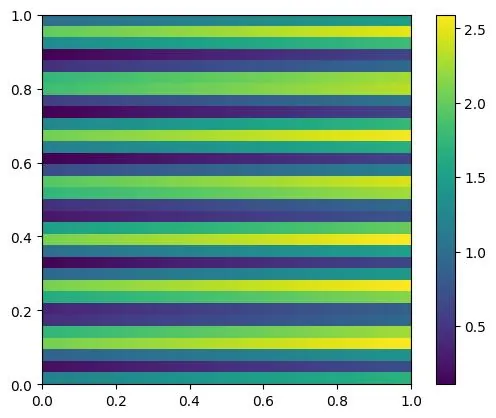

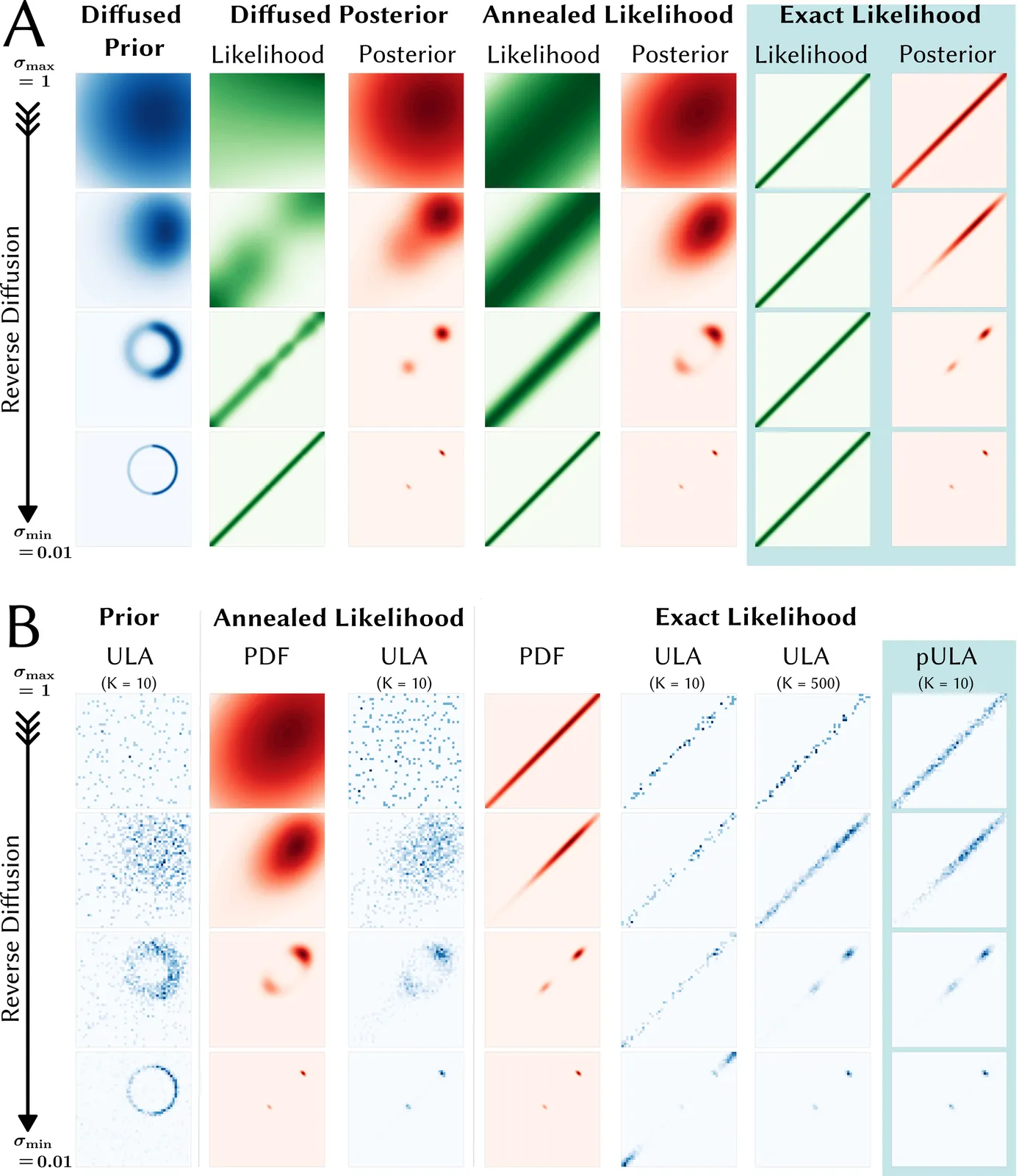

Purpose: The Unadjusted Langevin Algorithm (ULA) in combination with diffusion models can generate high quality MRI reconstructions with uncertainty estimation from highly undersampled k-space data. However, sampling methods such as diffusion posterior sampling or likelihood annealing suffer from long reconstruction times and the need for parameter tuning. The purpose of this work is to develop a robust sampling algorithm with fast convergence. Theory and Methods: In the reverse diffusion process used for sampling the posterior, the exact likelihood is multiplied with the diffused prior at all noise scales. To overcome the issue of slow convergence, preconditioning is used. The method is trained on fastMRI data and tested on retrospectively undersampled brain data of a healthy volunteer. Results: For posterior sampling in Cartesian and non-Cartesian accelerated MRI the new approach outperforms annealed sampling in terms of reconstruction speed and sample quality. Conclusion: The proposed exact likelihood with preconditioning enables rapid and reliable posterior sampling across various MRI reconstruction tasks without the need for parameter tuning.
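
Preconditioning rescales the Langevin drift and noise so that stiff and flat directions of the posterior mix at comparable rates. The sketch below runs preconditioned ULA on a toy Gaussian target whose score is known analytically. In the described method, the score would instead combine the exact likelihood with a learned diffusion prior. Using the target covariance as the preconditioner is an idealized assumption.

```python
import numpy as np


def preconditioned_ula(score, x0, precond, step, n_steps, rng):
    """x <- x + step * P @ score(x) + sqrt(2 * step) * P^(1/2) @ noise."""
    chol = np.linalg.cholesky(precond)  # P^(1/2) via Cholesky factor
    x = np.array(x0, dtype=float)
    samples = np.empty((n_steps, x.size))
    for k in range(n_steps):
        noise = chol @ rng.standard_normal(x.size)
        x = x + step * precond @ score(x) + np.sqrt(2.0 * step) * noise
        samples[k] = x
    return samples
```

For a Gaussian target with covariance `diag(100, 0.01)` and `step = 0.1`, unpreconditioned ULA would diverge. Along the stiff axis, its drift coefficient becomes 1 - 0.1/0.01 = -9. With the covariance as preconditioner, both axes contract at the same rate.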

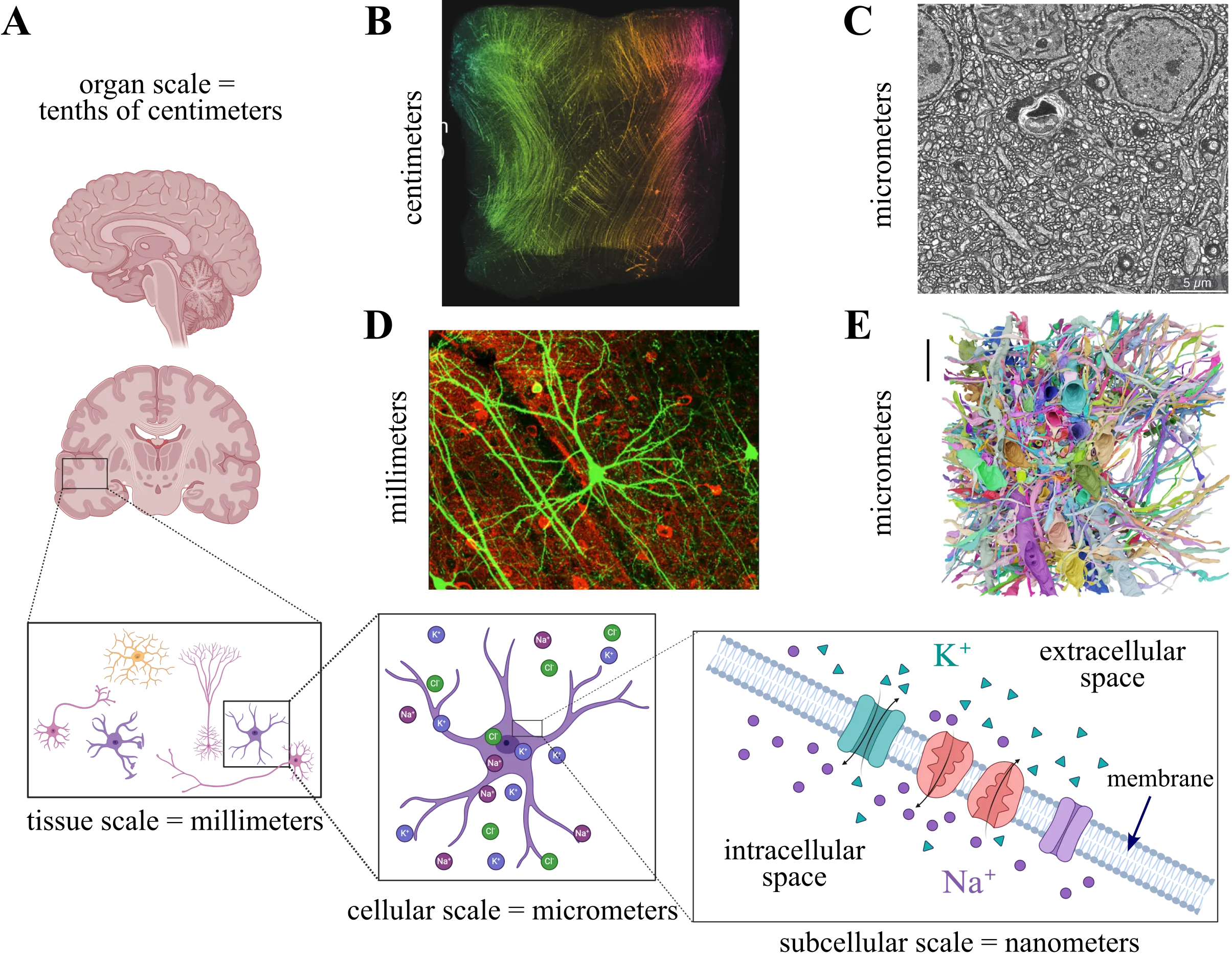

Excitable tissue is fundamental to brain function, yet its study is complicated by extreme morphological complexity and the physiological processes governing its dynamics. Consequently, detailed computational modeling of this tissue represents a formidable task, requiring both efficient numerical methods and robust implementations. Meanwhile, efficient and robust methods for image segmentation and meshing are needed to provide realistic geometries for which numerical solutions are tractable. Here, we present a computational framework that models electrodiffusion in excitable cerebral tissue, together with realistic geometries generated from electron microscopy data. To demonstrate a possible application of the framework, we simulate electrodiffusive dynamics in cerebral tissue during neuronal activity. Our results and findings highlight the numerical and computational challenges associated with modeling and simulation of electrodiffusion and other multiphysics in dense reconstructions of cerebral tissue.
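
At the core of electrodiffusion models is the Nernst-Planck flux. It adds an electric drift term to Fickian diffusion: $J = -D\,(\partial_x c + \tfrac{zF}{RT}\, c\, \partial_x \phi)$. The 1D finite-difference sketch below only illustrates this flux law. The framework described above solves coupled electrodiffusion equations on 3D geometries. The function name, the grid, and the body temperature of 310 K are assumptions.

```python
import numpy as np

FARADAY = 96485.33212        # C/mol
GAS_CONSTANT = 8.314462618   # J/(mol K)


def nernst_planck_flux(c, phi, dx, diffusivity, valence, temperature=310.0):
    """1D Nernst-Planck flux on cell faces: J = -D (dc/dx + z F/(R T) c dphi/dx).

    c in mol/m^3, phi in V, dx in m, diffusivity in m^2/s -> J in mol/(m^2 s).
    """
    psi = FARADAY / (GAS_CONSTANT * temperature)
    dc = np.diff(c) / dx
    dphi = np.diff(phi) / dx
    c_face = 0.5 * (c[1:] + c[:-1])  # concentration averaged onto faces
    return -diffusivity * (dc + valence * psi * c_face * dphi)
```

At Boltzmann equilibrium, $c \propto e^{-zF\phi/(RT)}$, the diffusive and drift terms cancel and the flux vanishes up to discretization error. This makes the equilibrium a quick sanity check for any implementation.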

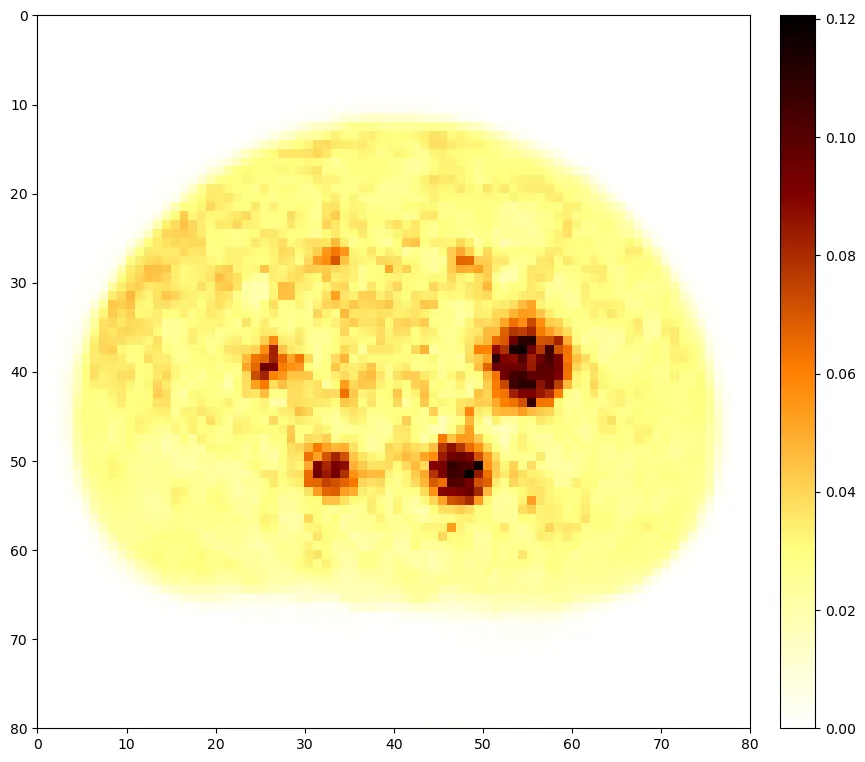

Introduction: We describe the foundation of PETRIC, an image reconstruction challenge aimed at minimising the computational runtime of Positron Emission Tomography (PET) image reconstruction algorithms. Purpose: Although several similar challenges are well established in the field of medical imaging, there have been no prior challenges for PET image reconstruction. Methods: Participants are provided with open-source software for implementing their reconstruction algorithm(s). We define the objective function, reconstruct "gold standard" reference images, and provide metrics for quantifying algorithmic performance. We also received and curated phantom datasets (acquired with different scanners, radionuclides, and phantom types), which we further split into training and evaluation datasets. The automated computational framework of the challenge is released as open-source software. Results: Four teams with nine algorithms in total participated in the challenge. Their contributions made use of various tools, including preconditioning and stochastic gradients from optimisation theory, as well as artificial intelligence. While most of the submitted approaches appear very similar in nature, their specific implementations led to a range of algorithmic performance. Conclusion: As the first challenge for PET image reconstruction, PETRIC provides solid foundations that allow researchers to reuse its framework for evaluating new and existing image reconstruction methods on new or existing datasets. Variants of the challenge have been, and will continue to be, launched in the future.
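
For orientation, the classical maximum-likelihood expectation-maximization (MLEM) update below maximizes the unregularized Poisson log-likelihood that underlies PET reconstruction. It is only a baseline sketch on a dense toy system matrix. The challenge defines its own objective function, which is not reproduced here, and the submitted algorithms used faster tools such as preconditioning and stochastic gradients.

```python
import numpy as np


def mlem(system_matrix, counts, n_iter=50, background=None):
    """Classical MLEM: x <- x / (A^T 1) * A^T (y / (A x + b))."""
    a = np.asarray(system_matrix, dtype=float)
    y = np.asarray(counts, dtype=float)
    b = np.zeros_like(y) if background is None else np.asarray(background, dtype=float)
    sensitivity = a.sum(axis=0)  # A^T 1
    x = np.ones(a.shape[1])
    for _ in range(n_iter):
        expected = a @ x + b
        ratio = np.divide(y, expected, out=np.zeros_like(y), where=expected > 0)
        x = x / sensitivity * (a.T @ ratio)
    return x


def poisson_log_likelihood(a, y, x, b=0.0):
    """Poisson log-likelihood up to a constant: sum(y log(ybar) - ybar)."""
    ybar = a @ x + b
    return float(np.sum(y * np.log(ybar) - ybar))
```

Each MLEM iteration is guaranteed not to decrease the log-likelihood, but convergence is slow. That slowness is why runtime-focused methods turn to the tools named above.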

In this paper, our goal is to provide quantitative feedback on muscle fatigue during exercise to optimize exercise effectiveness while minimizing injury risk. We seek to capture fatigue by monitoring the surface vibrations that muscle exertion induces. Muscle vibrations are unique in that they arise from the asynchronous firing of motor units, producing surface micro-displacements that are broadband, nonlinear, and seemingly stochastic. Accurately sensing these noise-like signals requires new algorithmic strategies that can uncover their underlying structure. We present GigaFlex, the first contactless system that measures muscle vibrations using mmWave radar to infer muscle force and detect fatigue. GigaFlex draws on algorithmic foundations from chaos theory to model the deterministic patterns of muscle vibrations and extends them to the radar domain. Specifically, we design a radar processing architecture that systematically infuses principles from chaos theory and nonlinear dynamics throughout the sensing pipeline, spanning localization, segmentation, and learning, to estimate muscle forces during static and dynamic weight-bearing exercises. In a 23-participant study, GigaFlex estimates maximum voluntary isometric contraction (MVIC) with a root mean square error (RMSE) of 5.9%, and detects one to three Repetitions in Reserve (RIR), a key quantitative muscle fatigue metric, with an AUC of 0.83 to 0.86, performing comparably to a contact-based IMU baseline. Our system can enable timely feedback that may help prevent fatigue-induced injury, and it opens new opportunities for physiological sensing of complex, non-periodic biosignals.
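
A standard entry point to chaos-theory analysis of a scalar time series is time-delay (Takens) embedding. It reconstructs a trajectory in phase space from lagged copies of the signal. The abstract does not state whether GigaFlex uses this exact construction. The sketch, including the function name and the `dim` and `lag` parameters, is an illustrative assumption that could be applied to, for example, a radar-derived displacement series.

```python
import numpy as np


def delay_embed(signal, dim, lag):
    """Stack lagged copies of a 1D signal into phase-space vectors.

    Row j is [s[j], s[j + lag], ..., s[j + (dim - 1) * lag]].
    """
    x = np.asarray(signal, dtype=float)
    n_vectors = x.size - (dim - 1) * lag
    if n_vectors <= 0:
        raise ValueError("signal too short for the requested dim and lag")
    return np.stack([x[i * lag: i * lag + n_vectors] for i in range(dim)], axis=1)
```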

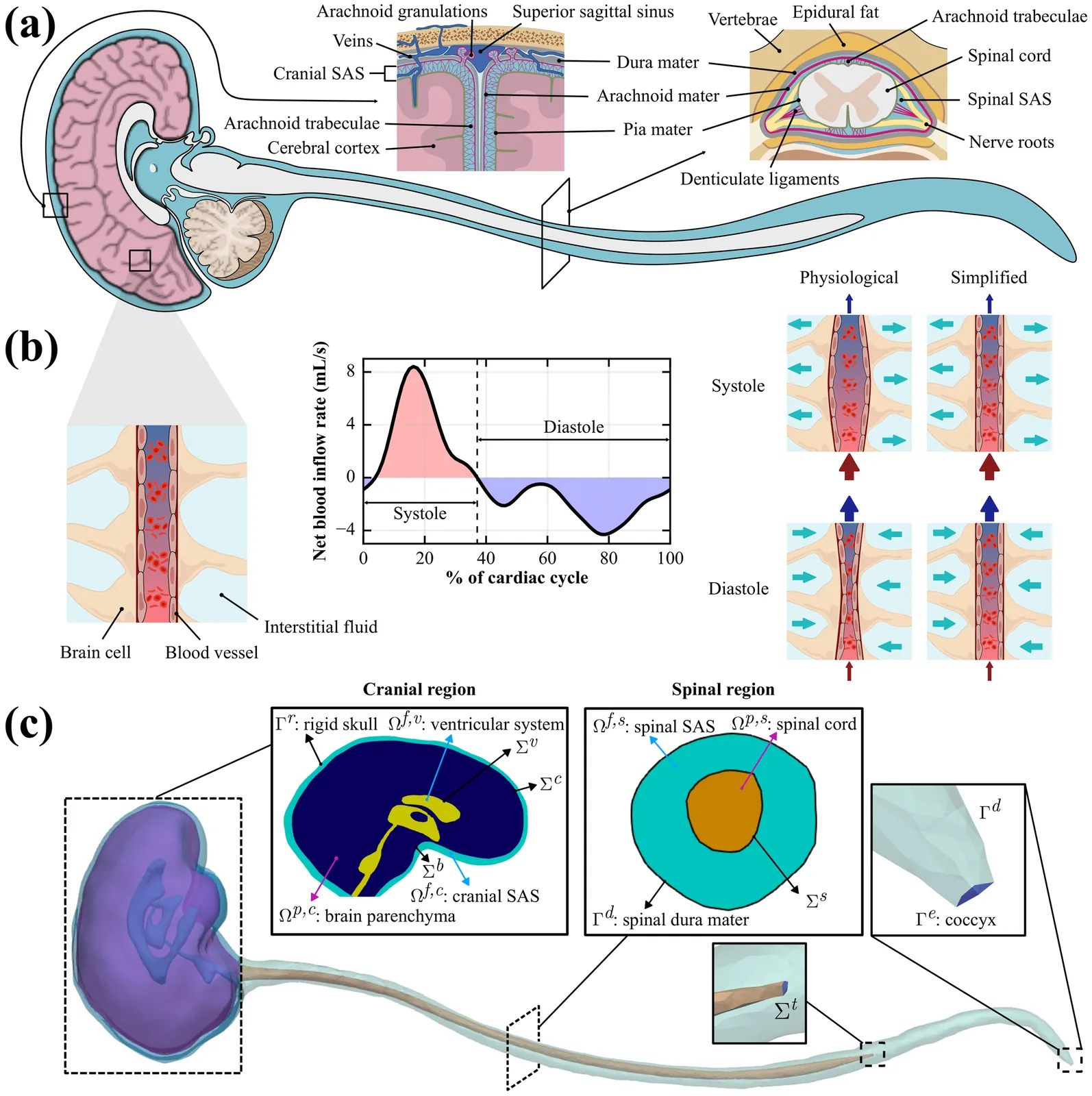

Intrathecal (IT) injection is an effective way to deliver drugs to the brain, bypassing the blood-brain barrier. To evaluate and optimize IT drug delivery, it is necessary to understand cerebrospinal fluid (CSF) dynamics in the central nervous system (CNS). Together with experimental measurements, computational modeling plays an important role in reconstructing CSF flow in the CNS. Existing models have provided valuable insights into CSF dynamics; however, most neglect the effects of tissue mechanics, focus on partial geometries, or rely on CSF flow rates measured under specific conditions, leaving full-CNS CSF flow field predictions across different physiological states underexplored. Here, we propose a comprehensive multiphysics computational model of the CNS with three key features: (1) it is implemented on a fully closed geometry of the CNS; (2) it includes the interaction between CSF and poroelastic tissue, as well as the compliant spinal dura mater; (3) it has potential for predictive simulations because it requires only data on cardiac blood pulsation into the brain. Our simulations under physiological conditions demonstrate that the model accurately reconstructs CSF pulsation and captures both the craniocaudal attenuation and the phase shift of CSF flow along the spinal subarachnoid space (SAS). When applied to the simulation of IT drug delivery, the model captures the intracranial pressure (ICP) elevation during injection and the subsequent recovery. The proposed multiphysics model provides a unified and extensible framework that allows parametric studies of CSF flow dynamics and optimization of IT injections, serving as a strong foundation for integrating additional physiological mechanisms.

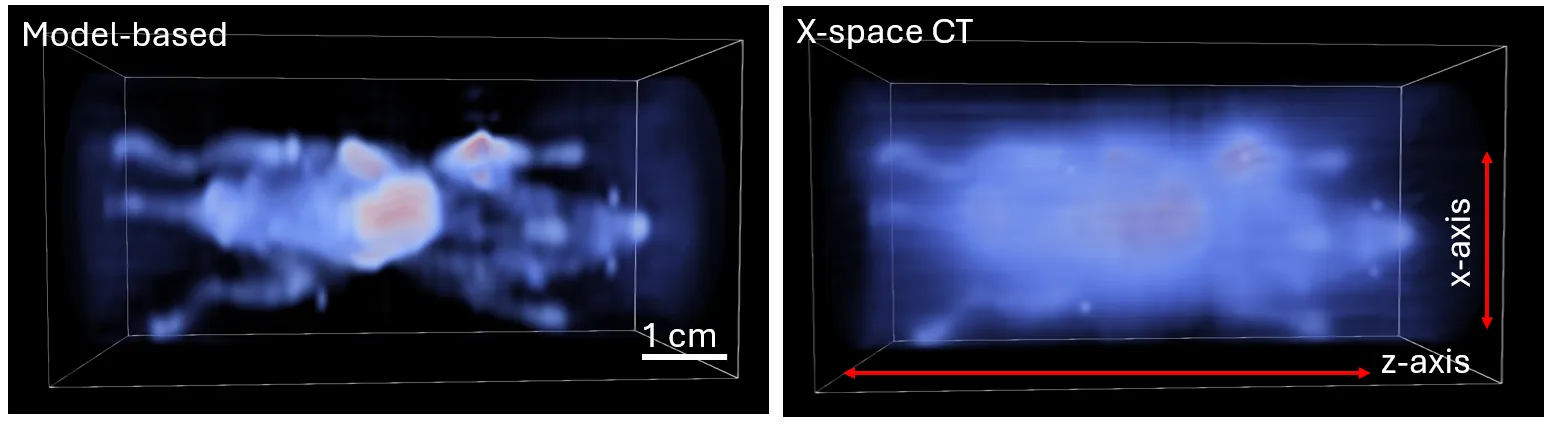

Magnetic particle imaging (MPI) is a tracer-based imaging modality that detects superparamagnetic iron oxide nanoparticles in vivo, with applications in cancer cell tracking, lymph node mapping, and cell therapy monitoring. We introduce a new 3D image reconstruction framework for MPI data acquired using multi-angle field-free line (FFL) scans, demonstrating improvements in spatial resolution, quantitative accuracy, and high dynamic range performance over conventional sequential reconstruction pipelines. The framework is built by combining a physics-based FFL signal model with tomographic projection operators to form an efficient 3D forward operator, enabling the full dataset to be reconstructed jointly rather than as a series of independent 2D projections. A harmonic-domain compression step is incorporated naturally within this operator formulation, reducing memory overhead by over two orders of magnitude while preserving the structure and fidelity of the model, enabling volumetric reconstructions on standard desktop GPU hardware in only minutes. Phantom and in vivo results demonstrate substantially reduced background haze and improved visualization of low-intensity regions adjacent to bright structures, with an estimated $\sim$11$\times$ improvement in iron detection sensitivity relative to the conventional X-space CT approach. These advances enhance MPI image quality and quantitative reliability, supporting broader use of MPI in preclinical and future clinical imaging.
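
Under a sinusoidal drive field, the nonlinear nanoparticle response concentrates the MPI signal at harmonics of the drive frequency. A time-domain signal can therefore be replaced by a few selected harmonic coefficients. The sketch below applies that compression to raw signals, assuming an integer number of drive periods per record. The function name and parameters are hypothetical. The framework above builds the compression into its forward operator rather than applying it as a separate step.

```python
import numpy as np


def harmonic_compress(signals, sample_rate, drive_freq, n_harmonics):
    """Keep only the first `n_harmonics` drive harmonics of each time signal."""
    spectrum = np.fft.rfft(signals, axis=-1)
    freqs = np.fft.rfftfreq(signals.shape[-1], d=1.0 / sample_rate)
    targets = drive_freq * np.arange(1, n_harmonics + 1)
    # Nearest FFT bin to each harmonic (exact when the record spans whole periods).
    bins = np.array([np.argmin(np.abs(freqs - f)) for f in targets])
    return spectrum[..., bins], bins
```

For a record of N samples, each channel shrinks from N real values to `n_harmonics` complex coefficients. In the illustrative usage (1000 samples, 3 harmonics), that is roughly a hundredfold reduction.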

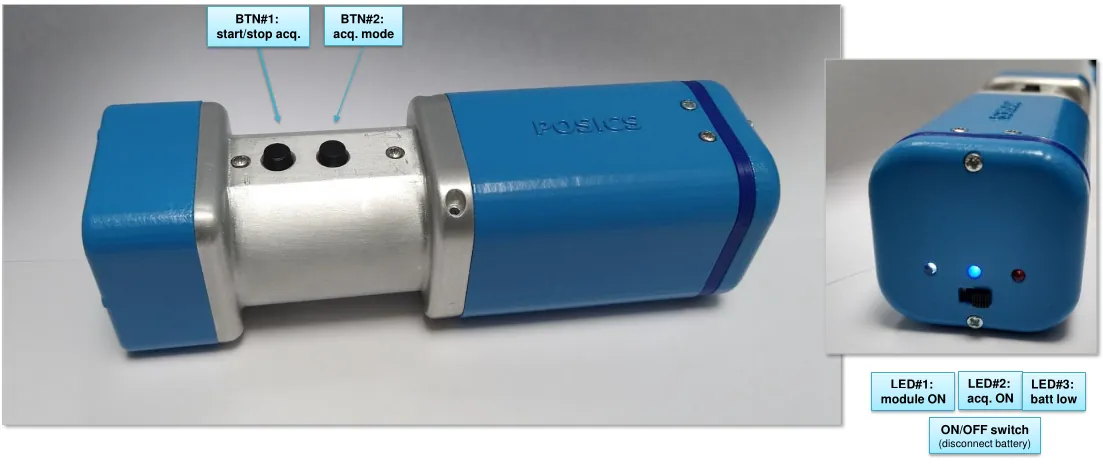

The POSiCS camera is a handheld, small field-of-view gamma camera developed for multipurpose use in radio-guided surgery (RGS), with sentinel lymph node biopsy (SLNB) as its benchmark application. This compact and lightweight detector (approximately 350 g) can map tissues labeled with Tc-99m nanocolloids and guide surgeons to the location of target lesions. By enabling intraoperative visualization close to the surgical field, it primarily aims to minimize the invasiveness of surgical interventions and operative time, thereby enhancing localization accuracy and reducing the incidence of post-operative complications. The design and components of the POSiCS camera emphasize ergonomic handling and compactness while providing rapid image formation and a spatial resolution of a few millimeters. These features are compatible with routine operating-room workflow, including wireless communication with the computer and a real-time display to support surgeon decision-making. The spatial resolution measured at a source-detector distance of 0 cm was 1.9 ± 0.1 mm in high-sensitivity mode and 1.4 ± 0.1 mm in high-resolution mode. The system sensitivity at 2 cm was 481 ± 14 cps/MBq (high sensitivity) and 134 ± 8 cps/MBq (high resolution). For both working modes, we report an energy resolution of approximately 20%, even though the high-resolution collimator exhibits an increased scattered component due to its larger amount of tungsten.

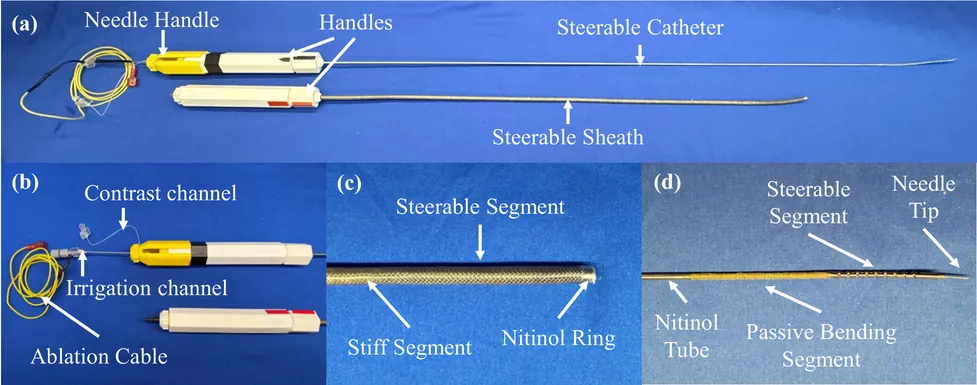

Radiofrequency ablation is widely used to prevent ventricular tachycardia (VT) by creating lesions that inhibit arrhythmias; however, current surface ablation catheters have limited ability to create lesions deep within the left ventricular (LV) wall. Intramyocardial needle ablation (INA) addresses this limitation by penetrating the myocardium and delivering energy from within. Yet existing INA catheters lack adequate dexterity to navigate the highly asymmetric, trabeculated LV chamber and to steer around papillary structures, limiting precise targeting. This work presents a novel dexterous INA (d-INA) toolset designed to enable effective manipulation and the creation of deep ablation lesions. The system consists of an outer sheath and an inner catheter, both bidirectionally steerable, along with an integrated ablation needle assembly. Benchtop tests demonstrated that the sheath and catheter reached maximum bending curvatures of 0.088 mm$^{-1}$ and 0.114 mm$^{-1}$, respectively, and achieved stable C-, S-, and non-planar S-shaped configurations. Ex vivo studies validated the system's stiffness modulation and lesion-creation capabilities. In vivo experiments in two swine demonstrated the device's ability to reach previously challenging regions such as the LV summit, and achieved a 219% increase in ablation depth compared with a standard ablation catheter. These results establish the proposed d-INA as a promising platform for achieving deep ablation with enhanced dexterity, advancing VT treatment.
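
The reported maximum curvatures correspond to minimum bending radii of $1/\kappa$: about 11.4 mm for the sheath and 8.8 mm for the catheter. Steerable segments are often modeled with a constant-curvature assumption, under which the planar tip position follows directly from curvature and segment length. The sketch below assumes that model. Any segment length used with it is hypothetical, since the lengths are not given above.

```python
import numpy as np


def constant_curvature_tip(kappa, length):
    """Planar tip (x, z) and tangent angle of an arc with curvature kappa (1/mm).

    The base is at the origin, initially pointing along +z; length is in mm.
    """
    if abs(kappa) < 1e-12:
        return 0.0, length, 0.0          # straight segment
    theta = kappa * length               # total bending angle (rad)
    x = (1.0 - np.cos(theta)) / kappa
    z = np.sin(theta) / kappa
    return x, z, theta
```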

Objective: To validate a newly proposed stochastic differential equation (SDE)-based model for proton beam energy deposition by comparing its predictions with those of Geant4 in simplified phantom scenarios. Approach: Building on Crossley et al. (2025), in which energy deposition from a proton beam was modelled using an SDE framework, we implemented the model with standard approximations to interaction cross sections and mean excitation energies, which makes simulations easily adaptable to new materials and configurations. The model was benchmarked against Geant4 in homogeneous and heterogeneous phantoms. Main results: The SDE-based dose distributions agreed well with Geant4, with range differences within 0.4 mm and 3D gamma pass rates exceeding 98% under 3%/2 mm criteria with a 1% dose threshold. The model achieved a computational speed-up of approximately fivefold relative to Geant4, consistent across different Geant4 physics lists. Significance: These results demonstrate that the SDE approach can achieve accuracy comparable to high-fidelity Monte Carlo simulation for proton therapy at a fraction of the computational cost, highlighting its potential for accelerating dose calculations and treatment planning.
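
The 3%/2 mm gamma criterion combines a dose-difference tolerance and a distance-to-agreement (DTA) into a single index, and a point passes when $\gamma \le 1$. The brute-force 1D sketch below uses global normalization to the maximum reference dose and a low-dose threshold, mirroring the criteria quoted above. The study's evaluation was 3D, and details such as interpolation are not reproduced here.

```python
import numpy as np


def gamma_pass_rate(ref_dose, eval_dose, positions_mm,
                    dose_tol=0.03, dta_mm=2.0, threshold=0.01):
    """Global 1D gamma analysis on a shared grid; returns (pass rate, gamma)."""
    ref = np.asarray(ref_dose, dtype=float)
    ev = np.asarray(eval_dose, dtype=float)
    x = np.asarray(positions_mm, dtype=float)
    dose_norm = dose_tol * ref.max()              # global dose criterion
    mask = ref >= threshold * ref.max()           # ignore low-dose points
    dist2 = ((x[:, None] - x[None, :]) / dta_mm) ** 2
    dose2 = ((ev[None, :] - ref[:, None]) / dose_norm) ** 2
    gamma = np.sqrt(np.min(dist2 + dose2, axis=1))  # search over eval points
    return float(np.mean(gamma[mask] <= 1.0)), gamma
```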

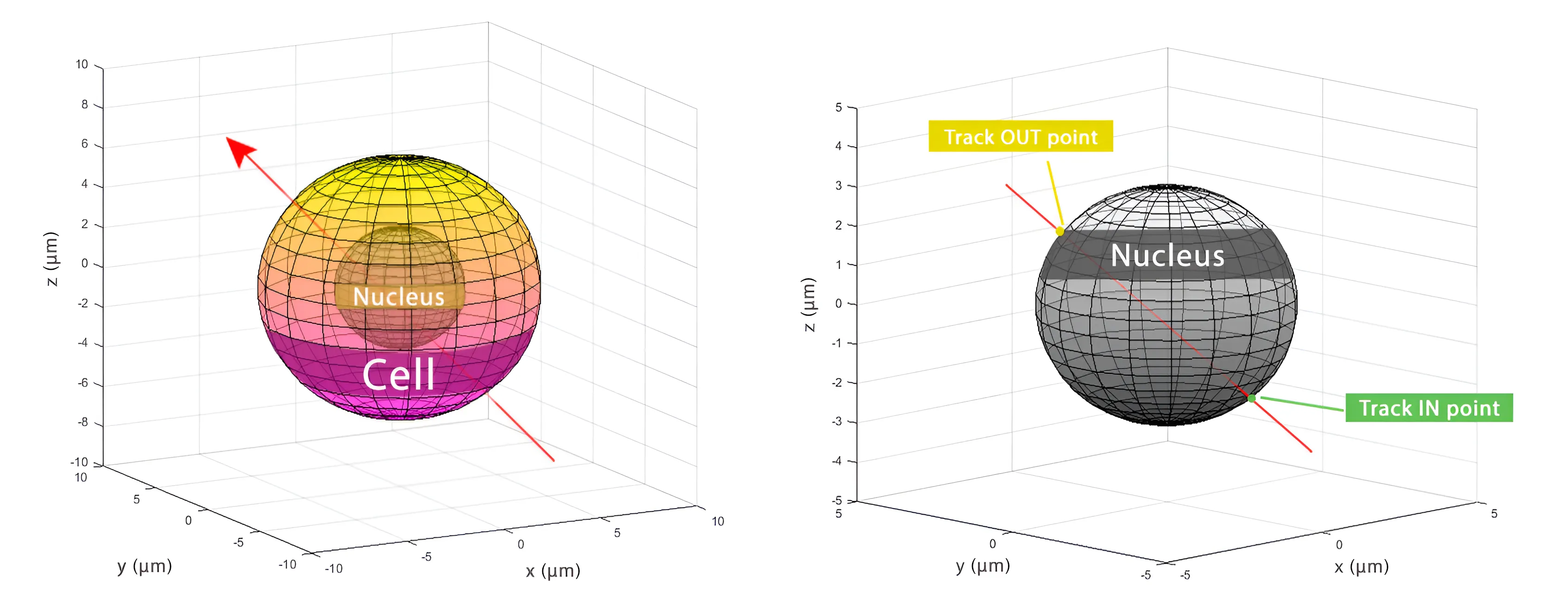

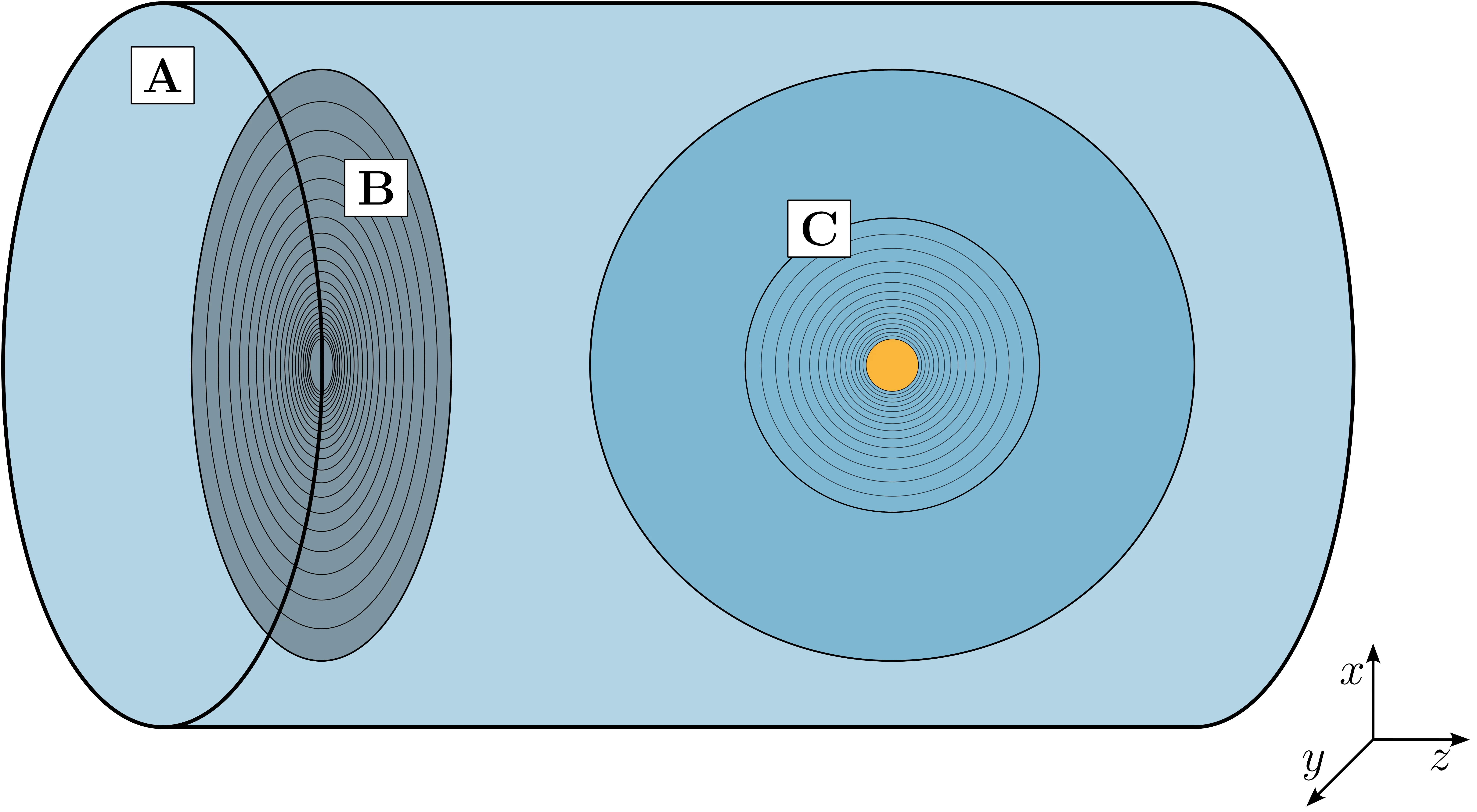

Diffusing alpha-emitters Radiation Therapy ("Alpha DaRT") is a new treatment modality that uses alpha particles against solid tumors. The introduction of Alpha DaRT into clinical settings calls for detailed tumor dosimetry that addresses biological responses such as cell survival and tumor control probability at the microscopic scale. In this study, we present a microdosimetric model that links the macroscopic alpha dose, cell survival, and tumor control probability while explicitly accounting for broad distributions of spherical nucleus radii. The model combines analytic expressions for nucleus-hit statistics by alpha particles with Monte Carlo-based specific-energy deposition to compute survival for cells whose nucleus radii are sampled from artificial and empirically derived distributions. The results indicate that introducing finite-width nucleus size distributions causes survival curves to depart from the exponential trend observed for uniform cell populations. We show that the width of the nucleus size distribution strongly influences the survival gap between radiosensitive and radioresistant populations, diminishing the influence of intrinsic radiosensitivity on cell survival. Tumor control probability is highly sensitive to the minimal nucleus size included in the size distribution, indicating that realistic lower thresholds are essential for credible predictions. Our findings highlight the importance of careful characterization of clonogenic nucleus sizes, with particular attention to the smallest nuclei represented in the data. Without addressing these small-nucleus contributions, tumor control probability may be substantially underestimated. Incorporating realistic nucleus size variability into microdosimetric calculations is a key step toward more accurate tumor control predictions for Alpha DaRT and other alpha-based treatment modalities.
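
A deliberately simplified model shows how a nucleus-size distribution bends the survival curve. Suppose alpha hits on a nucleus of radius $r$ are Poisson-distributed with mean proportional to dose and cross-sectional area $\pi r^2$, and each hit inactivates the cell with a fixed probability. Single-cell survival is then exponential in dose, but the population average over radii is not. The sketch below uses that model with hypothetical parameters. It replaces the study's Monte Carlo specific-energy calculation with this single-hit assumption. The Poisson TCP formula is the standard textbook form.

```python
import numpy as np


def population_survival(dose_gy, radii_um, hits_per_um2_per_gy, kill_prob):
    """Mean surviving fraction over a population of nucleus radii.

    Assumes Poisson hits with mean = fluence * pi r^2 * dose, and that each
    hit independently inactivates the cell with probability `kill_prob`.
    """
    dose = np.atleast_1d(np.asarray(dose_gy, dtype=float))
    area = np.pi * np.asarray(radii_um, dtype=float) ** 2
    mean_lethal_hits = kill_prob * hits_per_um2_per_gy * np.outer(dose, area)
    return np.exp(-mean_lethal_hits).mean(axis=1)   # average over the radii


def poisson_tcp(surviving_fraction, n_clonogens):
    """Standard Poisson tumor control probability exp(-N * S)."""
    return np.exp(-n_clonogens * surviving_fraction)
```

The population average is a mixture of exponentials, so its logarithm is convex in dose. At high dose, the surviving fraction is dominated by the smallest nuclei, which is why a TCP computed this way depends strongly on the lower end of the radius distribution.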

Objective: This work presents a data-driven, importance-sampling-based variance reduction (VR) scheme inspired by active learning. The method is applied to estimating an optimal impact-parameter distribution for the calculation of ionization clusters around a gold nanoparticle (NP), a case in which the optimal importance distribution cannot be inferred from first principles. Approach: An iterative optimization procedure uses a Gaussian Process Sampler to propose optimal sampling distributions based on a loss function constructed from appropriate heuristics. The optimization code obtains estimates of the number of ionization clusters in shells around the NP by interfacing with a Geant4 simulation via a dedicated Transmission Control Protocol (TCP) interface. Main results: The derived impact-parameter distribution clearly outperforms the actual, uniform irradiation case. The results resemble those obtained with other VR schemes but still slightly overestimate background contributions. Significance: Although the method presented is a proof of principle, it provides a novel way to estimate importance distributions in ill-posed scenarios. The TCP interface described here is a simple and efficient way to expose compiled Geant4 code to other scripts written, for example, in Python.
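
Importance-sampling variance reduction can be shown with an impact-parameter toy problem. Draw impact parameters from a proposal $q$ concentrated near the nanoparticle, then reweight each sample by $p/q$, where $p$ is the uniform-irradiation density over a disc. In this sketch, the proposal is a hand-picked two-component mixture (a hypothetical choice) rather than one proposed by a Gaussian Process sampler. The scored quantity is simply whether a track passes within the NP radius, and all radii are illustrative.

```python
import numpy as np


def sample_proposal(n, outer_radius, inner_radius, inner_weight, rng):
    """Mixture proposal: uniform over the full disc or over an inner disc."""
    use_inner = rng.random(n) < inner_weight
    radius = np.where(use_inner, inner_radius, outer_radius)
    return radius * np.sqrt(rng.random(n))  # uniform-in-area sampling


def likelihood_ratio(b, outer_radius, inner_radius, inner_weight):
    """p(b) / q(b); the common factor 2b cancels, avoiding 0/0 at b = 0."""
    p = 1.0 / outer_radius**2
    q = ((1.0 - inner_weight) / outer_radius**2
         + inner_weight * (b < inner_radius) / inner_radius**2)
    return p / q


def importance_estimate(f, n, outer_radius, inner_radius, inner_weight, rng):
    """Unbiased estimate of E_p[f(b)] and its standard error."""
    b = sample_proposal(n, outer_radius, inner_radius, inner_weight, rng)
    values = f(b) * likelihood_ratio(b, outer_radius, inner_radius, inner_weight)
    return values.mean(), values.std(ddof=1) / np.sqrt(n)
```

Because the proposal sends more tracks through the region of interest and the weights undo that bias, the estimate stays unbiased while its standard error drops below that of plain uniform sampling.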

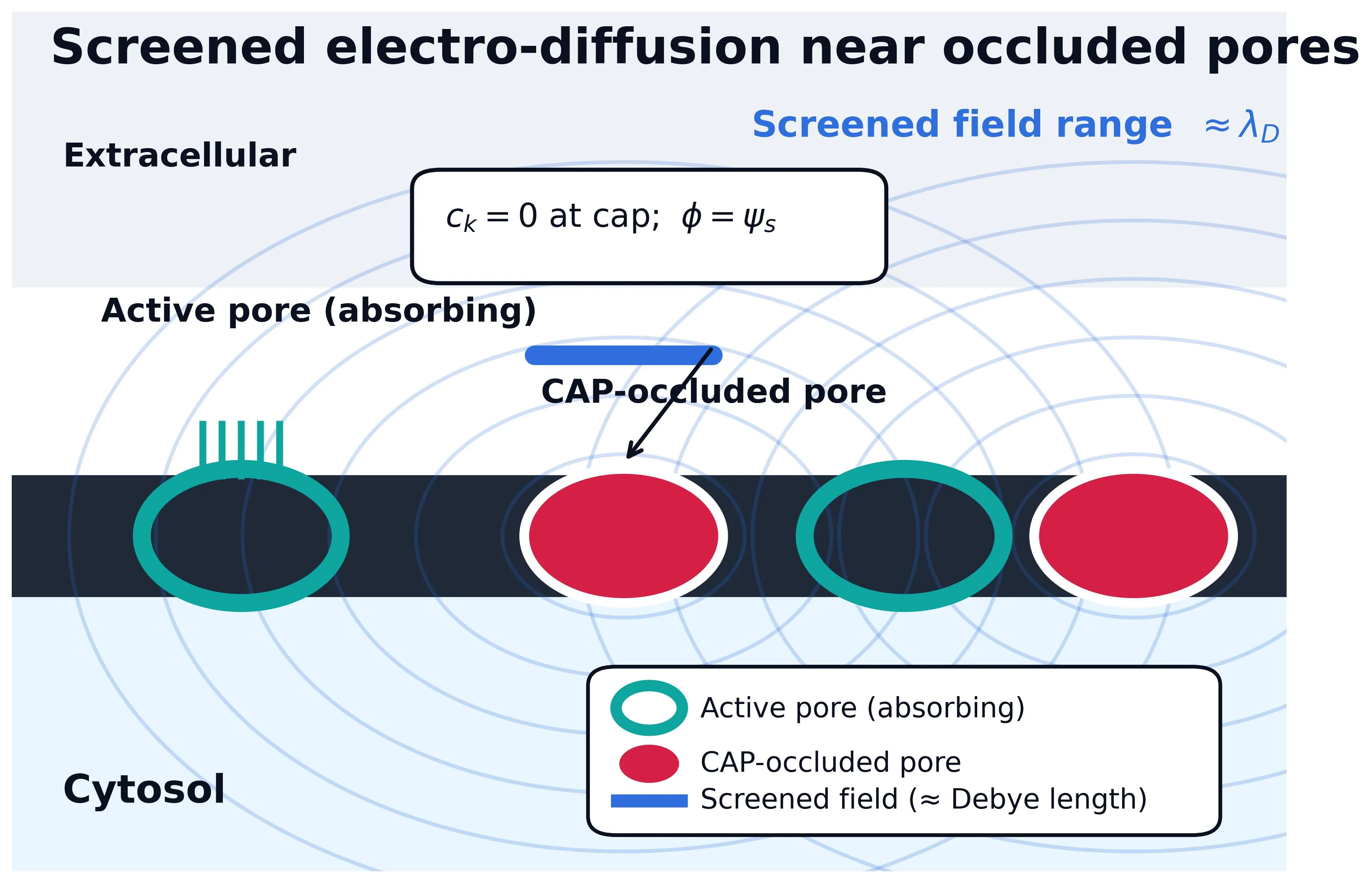

Malignant membranes cluster nutrient transporters within glycan-rich domains, sustaining metabolism through redundant intake routes. A theoretical framework links interfacial chemistry to transport suppression and energetic or redox collapse. The model unites a screened Poisson-Nernst-Planck electrodiffusion problem, an interfacial potential of mean force, and a reduced energetic-redox module connecting flux to ATP/NADPH balance. From this structure, capacitary-spectral bounds relate total flux to the inverse principal eigenvalue ($J_{\mathrm{tot}} \leq C\, e^{-\beta \chi_{\mathrm{eff}}}\, P(\theta)$). Two near-orthogonal levers, geometry and field strength, govern a linear suppression regime below a percolation-type knee, beyond which conductance collapses. A composite intake index $\Xi = w_G J_{\mathrm{GLUT}} + w_A J_{\mathrm{LAT/ASCT}} + w_L J_{\mathrm{MCT}}$ dictates energetic trajectories: once below a maintenance threshold, ATP and NADPH fall jointly and redox imbalance drives irreversible commitment. Normal membranes, with fewer transport mouths and weaker fields, remain above this threshold, defining a natural selectivity window. The framework demonstrates existence, regularity, and spectral monotonicity for the self-adjoint PNP operator, establishing a geometric-spectral transition that links molecular parameters such as branching and sulfonation to measurable macroscopic outcomes with predictive precision.

2510.26928

Radiation chemistry of model systems irradiated at ultra-high dose rates (UHDR) is key to obtaining a mechanistic understanding of healthy-tissue sparing, known as the FLASH effect, which is envisioned to enable efficient cancer treatment through FLASH radiotherapy. However, even the simplest model system, water irradiated at varying dose rates (DR), appears to pose a challenge. This became evident when discrepancies between measured and predicted hydrogen peroxide (H2O2) yields (g-values) were reported for liquid samples exposed to conventional DR and UHDR. Many of the recently reported values contradict older experiments and current Monte Carlo simulations (MCS). In the present work, we aim to identify possible reasons for these discrepancies and propose ways to overcome this issue. To this end, we briefly review recent and classical literature on experimental and simulation studies. The studies cover different radiation sources, ranging from gamma rays and high-energy electrons to heavy particles (protons and ions) with low and high linear energy transfer (LET), and samples of hypoxic and oxygenated water with cosolutes such as bovine serum albumin (BSA). Results are also examined with respect to additional experimental parameters, such as the solvent, the sample container, and the analysis methods used to determine the respective g-values of H2O2. Similarly, the parameters of MCS using the step-by-step approach or the independent reaction time (IRT) method are discussed. Here, UHDR-induced modifications of radical-radical interactions and dynamics that are not governed by diffusion processes may cause problems. Approaches for testing these different models are highlighted to enable progress, moving from a purely descriptive discussion of the observed effects toward testable models that should clarify how and why such disagreement arose in the first place.
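
Radiation-chemical yields are quoted either in molecules/100 eV or in mol/J, and comparing studies requires converting between the two: 1 molecule/100 eV ≈ 1.036 × 10$^{-7}$ mol/J. The helper below performs that conversion and turns a yield and an absorbed dose into a molar concentration, assuming a water density of 1 kg/L. The function names are illustrative.

```python
ELEMENTARY_CHARGE_J_PER_EV = 1.602176634e-19  # exact SI value
AVOGADRO_PER_MOL = 6.02214076e23              # exact SI value


def g_value_to_mol_per_joule(g_per_100ev):
    """Convert a radiation-chemical yield from molecules/100 eV to mol/J."""
    joules_per_100ev = 100.0 * ELEMENTARY_CHARGE_J_PER_EV
    return g_per_100ev / (joules_per_100ev * AVOGADRO_PER_MOL)


def yield_concentration(g_per_100ev, dose_gy, density_kg_per_l=1.0):
    """Molar concentration (mol/L) produced by an absorbed dose in Gy (J/kg)."""
    return g_value_to_mol_per_joule(g_per_100ev) * dose_gy * density_kg_per_l
```

For an illustrative G-value of 0.7 molecules/100 eV and a dose of 10 Gy, `yield_concentration(0.7, 10.0)` gives about 7.3e-7 mol/L (0.73 µmol/L).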

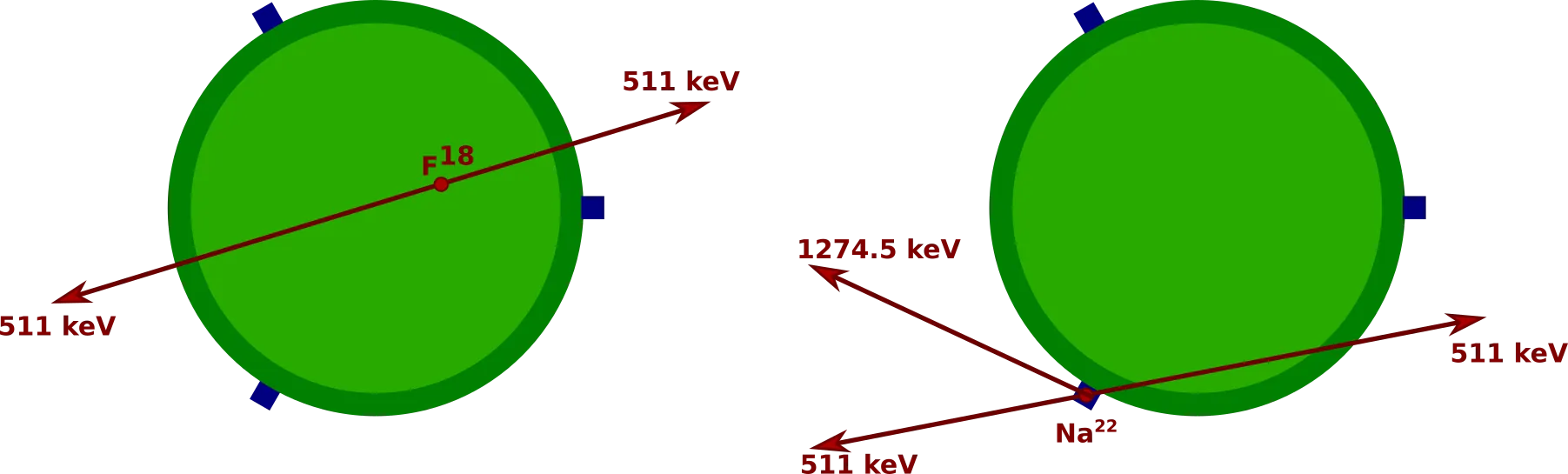

When acquiring PET images, body motion is unavoidable, given that acquisitions can last 10-20 minutes or more. Such motion can seriously degrade the quality of the final image through its effects on image reconstruction and attenuation correction. Movements can have rhythmic patterns, related to respiratory or cardiac motion, or they can be abrupt reflexive actions caused by patient discomfort. Many software and hardware approaches have been developed to mitigate this problem, each with its own advantages and disadvantages. In this work, we present a simulation study of a head monitoring device, named CrowN@22, intended for use in conjunction with a dedicated brain PET scanner. The CrowN@22 device consists of six point sources of non-pure positron-emitting isotopes, such as 22Na or 44Sc, mounted in crown-like rings around the patient's head. The relative positions of the point sources are predefined, and their actual positions, once mounted, can be reconstructed by tagging the additional 1274 keV photon (in the case of 22Na). These two factors yield a superb signal-to-noise ratio, allowing the signal from the 22Na monitor point sources to be distinguished from the background signal of FDG in the brain. Hence, even with activities as low as 10 kBq per monitor point source, in the presence of 75 MBq of 18F in the brain, brain movements can be detected with a precision better than 0.3 degrees, or 0.5 mm, which is of the order of the PET spatial resolution, at a sampling rate of 1 Hz.
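
Once the six monitor sources have been localized in a time frame, head pose can be recovered as the rigid transform that best maps their reference positions onto the measured ones. The Kabsch (SVD) least-squares solution below is a standard way to do this. It is shown as an assumption, not necessarily the authors' method.

```python
import numpy as np


def rigid_transform(reference, measured):
    """Kabsch fit: rotation R and translation t with measured ≈ reference @ R.T + t."""
    ref_c = reference.mean(axis=0)
    mea_c = measured.mean(axis=0)
    h = (reference - ref_c).T @ (measured - mea_c)   # 3x3 cross-covariance
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))           # guard against reflections
    rotation = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    translation = mea_c - rotation @ ref_c
    return rotation, translation


def rotation_angle_deg(rotation):
    """Total rotation angle encoded by a 3x3 rotation matrix, in degrees."""
    cos_angle = np.clip((np.trace(rotation) - 1.0) / 2.0, -1.0, 1.0)
    return float(np.degrees(np.arccos(cos_angle)))
```

Comparing `rotation_angle_deg` and the norm of the translation frame by frame with the 0.3 degree and 0.5 mm figures above is one way to express the motion estimates. A hypothetical layout of two rings of three sources, 40 mm apart, is non-coplanar, so the fit is well-posed.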

Particle therapy relies on up-to-date knowledge of the stopping power of patient tissues to deliver the prescribed dose distribution. The stopping power describes the average particle motion, which is encoded in the distribution of prompt-gamma photon emissions in time and space. We reconstruct the spatiotemporal emission distribution from multi-detector Prompt Gamma Timing (PGT) data. Solving this inverse problem relies on an accurate model of prompt-gamma transport and detection that explicitly includes the dependencies on the times of emission and detection. Our previous work relied on Monte Carlo (MC)-based system models. The tradeoff between computational resources and statistical noise in such system models prohibits studies of new detector arrangements and beam scanning scenarios. Therefore, we propose an analytical system model that speeds up recalculations for new beam positions and avoids statistical noise in the model. We evaluated the model for the MERLINO multi-detector PGT prototype. Comparisons between the analytical model and an MC-based reference showed excellent agreement for single-detector setups. When several detectors were placed close together and partially obstructed each other, intercrystal scatter led to differences of up to 10% between the analytical and MC-based models. Nevertheless, when evaluating performance in reconstructing the spatiotemporal distribution and estimating the stopping power, no significant difference between the models was observed. Hence, the procedure proved robust against the small inaccuracies of the model in the tested scenarios. The model calculation time was reduced by a factor of 1500, now enabling many new studies of PGT-based systems.
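
The geometric core of an analytical prompt-gamma system model is simple. A photon emitted at time $t_e$ reaches a detector after the flight time $d/c$, and a small detector face subtends a fraction $A\cos\theta/(4\pi d^2)$ of all directions. The sketch below encodes only these two relations plus an optional exponential attenuation term. It is a far-field, point-detector simplification with hypothetical function and parameter names, and it omits detector response and the intercrystal scatter discussed above.

```python
import numpy as np

SPEED_OF_LIGHT_MM_PER_NS = 299.792458


def detection_kernel(emit_pos_mm, emit_time_ns, det_center_mm, det_normal,
                     det_area_mm2, mu_per_mm=0.0):
    """Arrival time (ns) and detection probability for one emission point.

    Far-field approximation: probability = A cos(theta) / (4 pi d^2) * exp(-mu d).
    """
    offset = np.asarray(det_center_mm, float) - np.asarray(emit_pos_mm, float)
    distance = np.linalg.norm(offset)
    normal = np.asarray(det_normal, float) / np.linalg.norm(det_normal)
    cos_incidence = abs(offset @ normal) / distance
    solid_angle_fraction = det_area_mm2 * cos_incidence / (4 * np.pi * distance**2)
    arrival_ns = emit_time_ns + distance / SPEED_OF_LIGHT_MM_PER_NS
    return arrival_ns, solid_angle_fraction * np.exp(-mu_per_mm * distance)
```

Because the kernel is a closed-form expression, it can be re-evaluated for every new beam position without the statistical noise of a Monte Carlo system model.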