Signal Processing

Theory, algorithms, performance analysis and applications of signal and data analysis, including detection, estimation, filtering, and statistical signal processing.

We consider energy-efficient multi-user hybrid downlink beamforming (BF) and power allocation under imperfect channel state information (CSI) and probabilistic outage constraints. In this domain, classical optimization methods resort to computationally costly conic optimization problems. Meanwhile, generic deep network (DN) architectures lack interpretability and require large training data sets to generalize well. In this paper, we therefore propose a lightweight model-aided deep learning architecture based on a greedy selection algorithm for analog beam codewords. The architecture relies on an instance-adaptive augmentation of the signal model to estimate the impact of the CSI error. To learn the DN parameters, we derive a novel and efficient implicit representation of the nested constrained BF problem and prove sufficient conditions for the existence of the corresponding gradient. In the loss function, we use an annealing-based approximation of the outage instead of conventional quantile-based loss terms. This approximation adaptively anneals towards the exact probabilistic constraint depending on the current level of quality of service (QoS) violation. Simulations validate that the proposed DN can achieve the nominal outage level under CSI error due to channel estimation and channel compression, while allocating less power than benchmarks. Moreover, a single trained model generalizes to different numbers of users, QoS requirements and levels of CSI quality. We further show that the adaptive annealing-based loss function can accelerate the training and yield a better power-outage trade-off.
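
The idea of annealing a smooth loss towards the exact outage constraint can be illustrated with a minimal sketch. It assumes a logistic surrogate for the outage indicator and a fixed list of temperatures. The abstract's adaptive rule, which ties the annealing to the current QoS violation, is not specified in this listing and is not reproduced here. The function names and SINR samples are hypothetical.

```python
import math

def exact_outage(sinrs, threshold):
    """Fraction of samples whose SINR falls below the QoS threshold."""
    return sum(1 for s in sinrs if s < threshold) / len(sinrs)

def smooth_outage(sinrs, threshold, temperature):
    """Logistic surrogate of the outage indicator (assumed form).

    As temperature -> 0 the surrogate approaches the exact indicator,
    while larger temperatures give smoother, more informative gradients.
    """
    total = 0.0
    for s in sinrs:
        z = (threshold - s) / temperature
        # Numerically stable logistic function for either sign of z.
        if z >= 0:
            total += 1.0 / (1.0 + math.exp(-z))
        else:
            e = math.exp(z)
            total += e / (1.0 + e)
    return total / len(sinrs)

# Hypothetical per-sample SINR values (linear scale) and QoS threshold.
sinrs = [0.5, 1.2, 2.8, 3.1, 4.0, 5.5, 6.3, 7.7]
threshold = 3.0

for temperature in (2.0, 0.5, 0.01):
    print(temperature, smooth_outage(sinrs, threshold, temperature))
print("exact", exact_outage(sinrs, threshold))
```

In a training loop the temperature would be lowered over time, so that early updates see a smooth objective and later updates see one close to the true outage probability.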

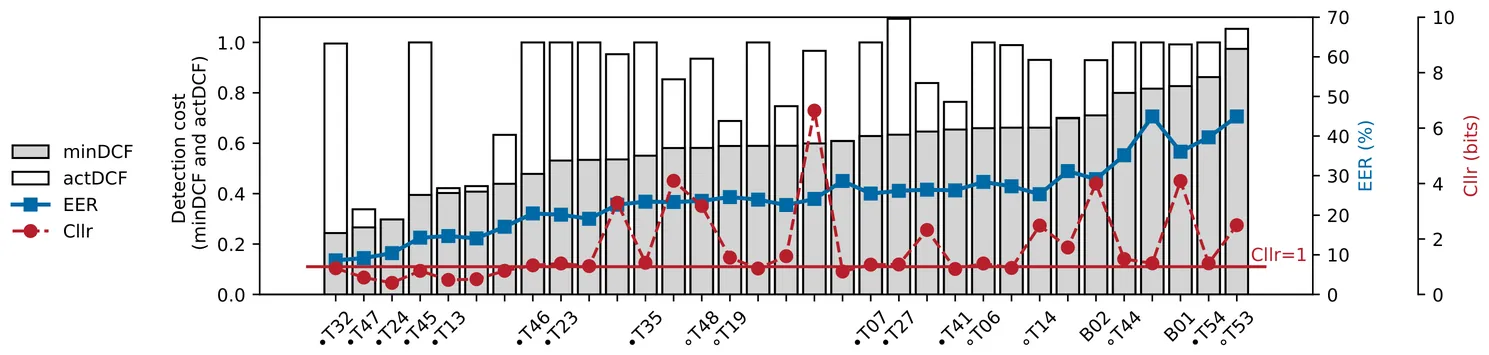

ASVspoof 5 is the fifth edition in a series of challenges which promote the study of speech spoofing and deepfake detection solutions. A significant change from previous challenge editions is a new crowdsourced database collected from a substantially greater number of speakers under diverse recording conditions, and a mix of cutting-edge and legacy generative speech technology. With the new database described elsewhere, we provide in this paper an overview of the ASVspoof 5 challenge results for the submissions of 53 participating teams. While many solutions perform well, performance degrades under adversarial attacks and the application of neural encoding/compression schemes. Together with a review of post-challenge results, we also report a study of calibration in addition to other principal challenges and outline a road-map for the future of ASVspoof.

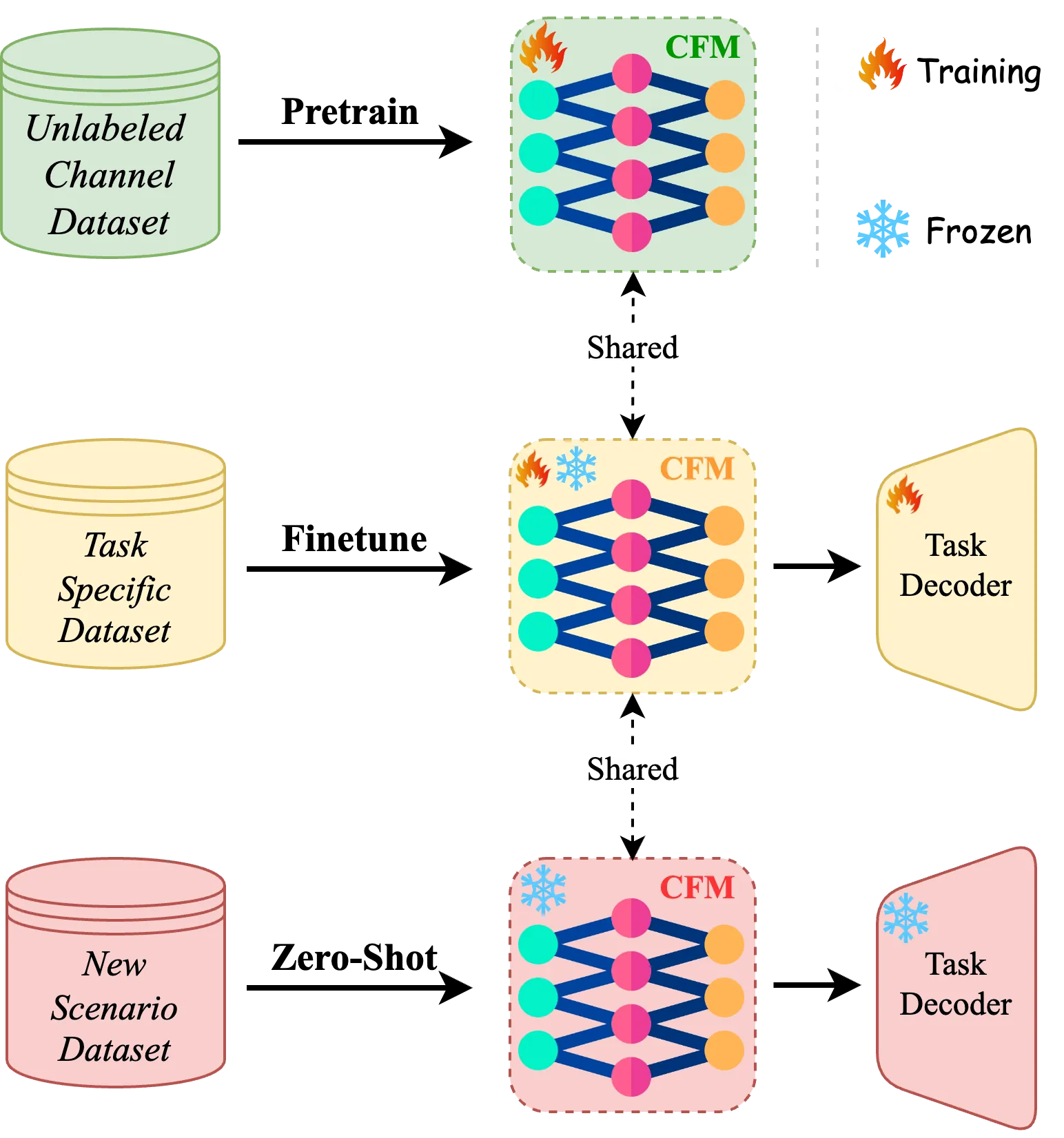

Self-Supervised Learning (SSL) has emerged as a key technique in machine learning, tackling challenges such as limited labeled data, high annotation costs, and variable wireless channel conditions. It is essential for developing Channel Foundation Models (CFMs), which extract latent features from channel state information (CSI) and adapt to different wireless settings. Yet, existing CFMs have notable drawbacks: their heavy reliance on scenario-specific data hinders generalization, they focus on single or dual tasks, and they lack zero-shot learning ability. In this paper, we propose CSI-MAE, a generalized CFM that leverages a masked autoencoder for cross-scenario generalization. Trained on 3GPP channel model datasets, it integrates sensing and communication via CSI perception and generation, and is shown to be effective across diverse tasks. A lightweight decoder finetuning strategy cuts training costs while maintaining competitive performance. Under this approach, CSI-MAE matches or surpasses supervised models. With full-parameter finetuning, it achieves state-of-the-art performance. Its exceptional zero-shot transferability also rivals supervised techniques in cross-scenario applications, driving wireless communication innovation.

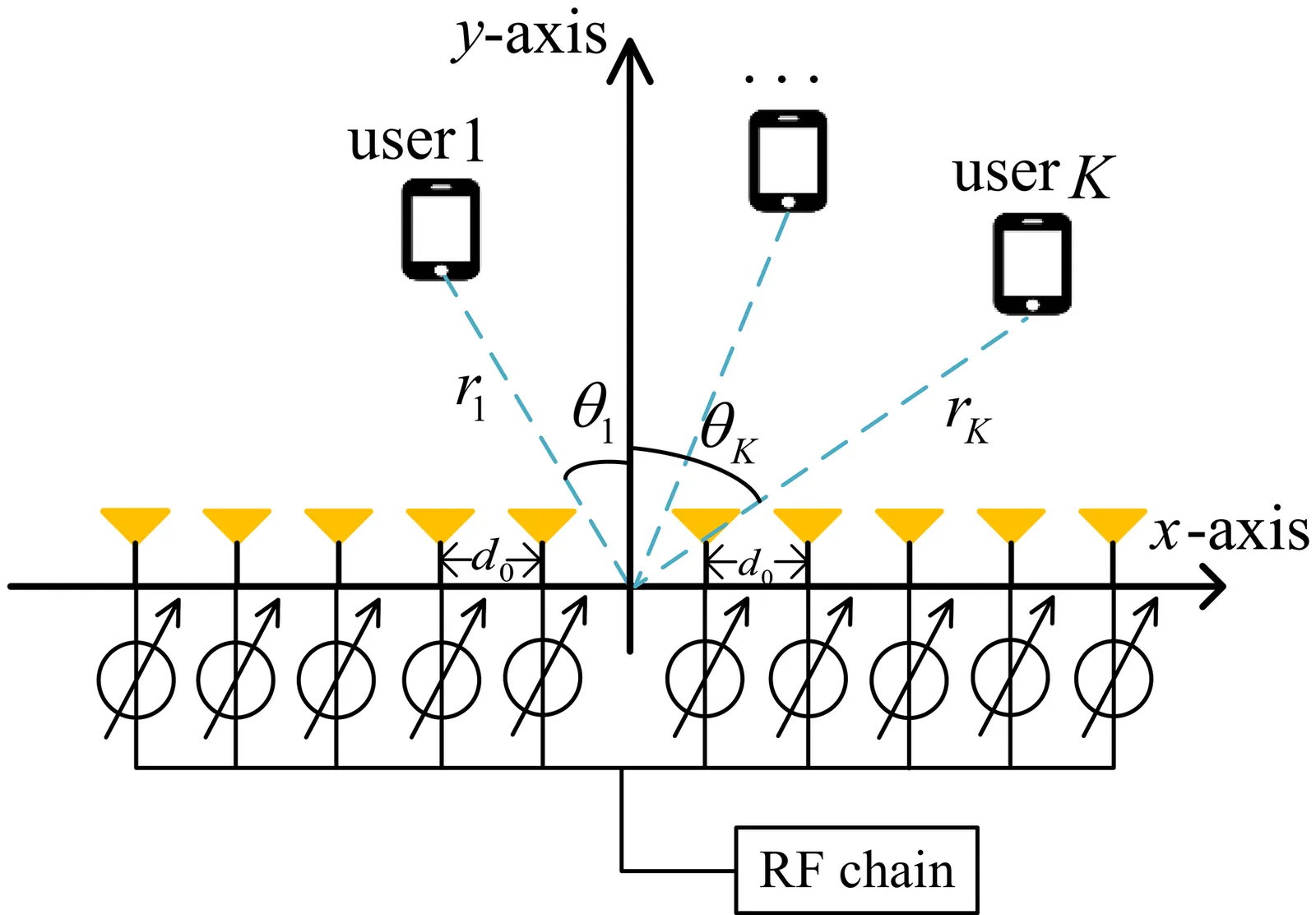

In this letter, we study an efficient multi-beam training method for multiuser millimeter-wave communication systems. Unlike the conventional single-beam training method that relies on exhaustive search, multi-beam training design faces a key challenge in balancing the trade-off between beam training overhead and beam-identification success rate, exacerbated by severe inter-beam interference. To tackle this challenge, we propose a new two-stage multi-beam training method with two distinct multi-beam patterns to enable fast and accurate user angle identification. Specifically, in the first stage, the antenna array is divided into sparse subarrays to generate multiple beams (with high array gains) for identifying candidate user angles. In the second stage, the array is redivided into dense subarrays to generate flexibly steered wide beams, for which a cross-validation method is employed to effectively resolve the angular ambiguity remaining from the first stage. Finally, numerical results demonstrate that the proposed method significantly improves the beam-identification success rate compared to existing multi-beam training methods, while retaining or even reducing the required beam training overhead.

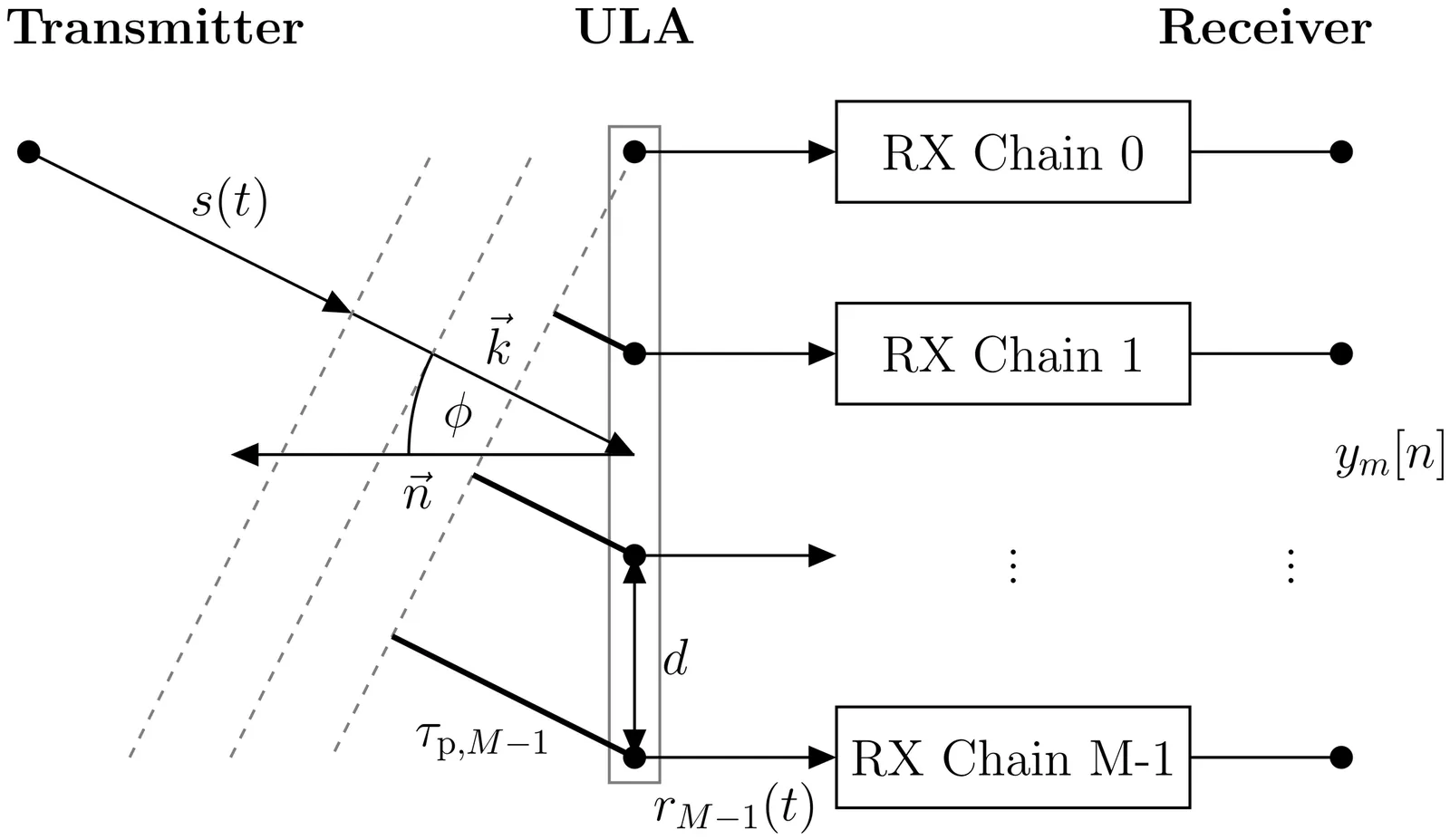

In the context of joint communication and sensing (JC&S), obtaining accurate parameter estimates is of interest. Parameter estimates such as the angle of arrival (AoA) can be used for solving the initial access problem, interference mitigation, localization of users, monitoring of the environment, and synchronization of MIMO systems. Recently, true-time-delay (TTD) systems have gained attention for fast beam training during initial access and mitigation of beam squinting. This work derives the Cramér-Rao bound (CRB) for angle estimates in typical TTD systems. Properties of the CRB and the Fisher information are investigated and numerically evaluated. Finally, methods for angle estimation such as maximum likelihood (ML) and established estimators are used to solve the angle estimation problem using a uniform linear array.
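
As a rough illustration of ML angle estimation on a uniform linear array, the sketch below runs a grid search for a single narrowband source. For one source in white noise, maximizing the likelihood reduces to maximizing the correlation with the steering vector. This is the textbook narrowband case, not the TTD signal model of the abstract; the half-wavelength spacing, grid resolution, and function names are assumptions.

```python
import cmath
import math

def steering_vector(theta_rad, num_antennas, spacing_wavelengths=0.5):
    """Narrowband ULA response for a plane wave arriving from theta."""
    return [cmath.exp(2j * math.pi * spacing_wavelengths * m * math.sin(theta_rad))
            for m in range(num_antennas)]

def ml_angle_estimate(snapshot, grid_deg, spacing_wavelengths=0.5):
    """Grid-search ML estimate for one source: maximize |a(theta)^H x|^2."""
    best_angle, best_power = None, -1.0
    for deg in grid_deg:
        a = steering_vector(math.radians(deg), len(snapshot), spacing_wavelengths)
        corr = sum(x * ai.conjugate() for x, ai in zip(snapshot, a))
        power = abs(corr) ** 2
        if power > best_power:
            best_angle, best_power = deg, power
    return best_angle

# Noise-free toy snapshot from a hypothetical source at 20 degrees.
true_deg = 20.0
num_antennas = 8
snapshot = [0.8 * v for v in steering_vector(math.radians(true_deg), num_antennas)]

grid = [d * 0.5 for d in range(-180, 181)]  # -90 to 90 degrees in 0.5 degree steps
estimate = ml_angle_estimate(snapshot, grid)
print(estimate)
```

With noise added to the snapshot, the spread of such estimates over many trials is what a CRB would lower-bound for unbiased estimators.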

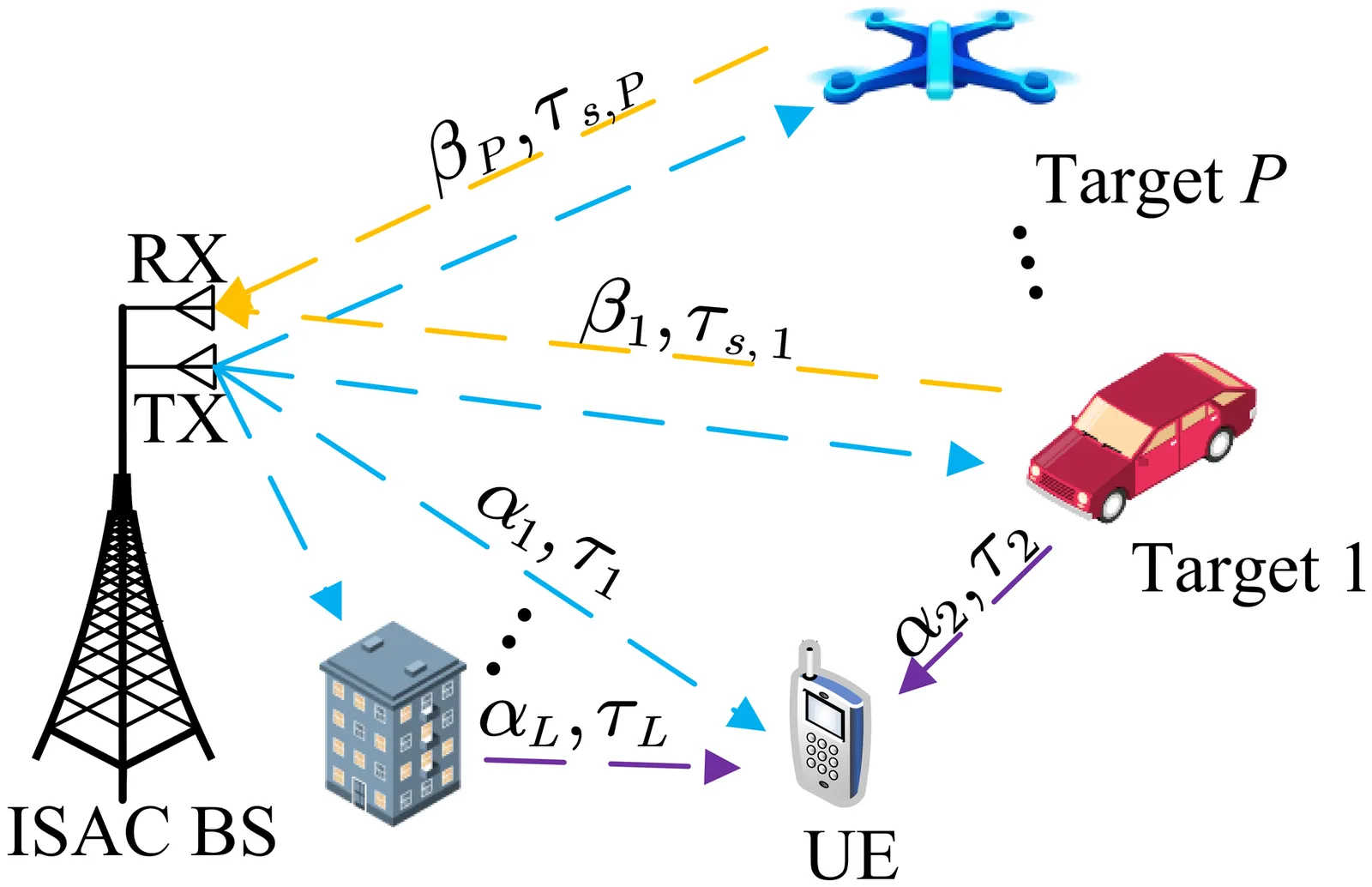

Integrated sensing and communication (ISAC) through Zak-transform-based orthogonal time frequency space (Zak-OTFS) modulation is a promising solution for high-mobility scenarios. Realizing accurate bistatic sensing and robust communication necessitates precise channel estimation; however, this remains a formidable challenge in doubly dispersive environments, where fractional delay-Doppler shifts induce severe channel spreading. This paper proposes a semi-blind atomic norm denoising scheme for Zak-OTFS ISAC with bistatic sensing. We first derive the discrete-time input-output (I/O) relationship of Zak-OTFS under fractional delay-Doppler shifts and rectangular windowing. Based on this I/O relation, we formulate the joint channel parameter estimation and data detection task as an atomic norm denoising problem, utilizing the negative square penalty method to handle the non-convex discrete constellation constraints. To solve this problem efficiently, we develop an accelerated iterative algorithm that integrates majorization-minimization, accelerated projected gradient, and inexact accelerated proximal gradient methods. We provide a rigorous convergence proof for the proposed algorithm. Simulation results demonstrate that the proposed scheme achieves super-resolution sensing accuracy and communication performance approaching the perfect channel state information lower bound.

In 6G mobile communications, acquiring accurate and timely channel state information (CSI) becomes increasingly challenging due to the growing antenna array size and bandwidth. To alleviate the CSI feedback burden, the channel knowledge map (CKM) has emerged as a promising approach by leveraging environment-aware techniques to predict CSI based solely on user locations. However, how to effectively construct a CKM remains an open issue. In this paper, we propose F$^4$-CKM, a novel CKM construction framework characterized by four distinctive features: radiance Field rendering, spatial-Frequency-awareness, location-Free usage, and Fast learning. Central to our design is the adaptation of radiance field rendering techniques from computer vision to the radio frequency (RF) domain, enabled by a novel Wireless Radiator Representation (WiRARE) network that captures the spatial-frequency characteristics of wireless channels. Additionally, a novel shaping filter module and an angular sampling strategy are introduced to facilitate CKM construction. Extensive experiments demonstrate that F$^4$-CKM significantly outperforms existing baselines in terms of wireless channel prediction accuracy and efficiency.

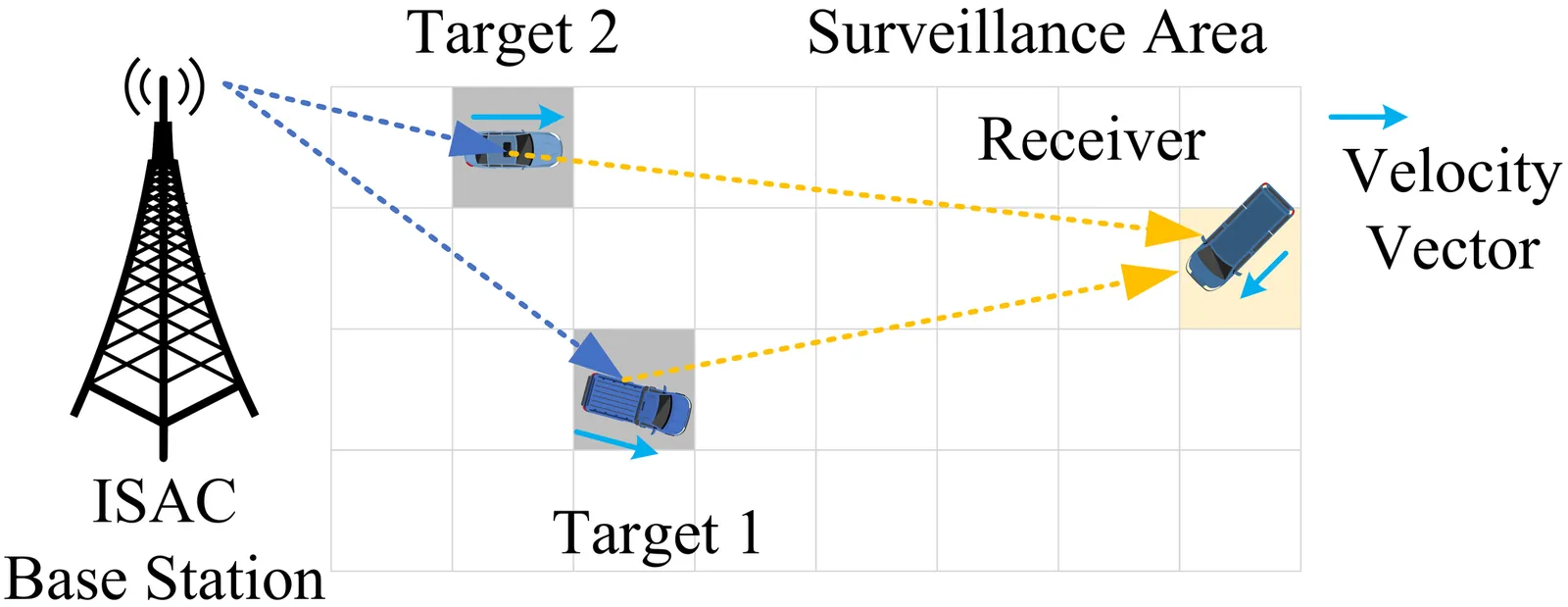

Integrated sensing and communication (ISAC) is envisioned to be one of the key usage scenarios for the sixth generation (6G) mobile communication networks. While significant progress has been made in theoretical studies, the further advancement of ISAC is hampered by the lack of accessible, open-source, and real-time experimental platforms. To address this gap, we introduce OpenISAC, a versatile and high-performance open-source platform for real-time ISAC experimentation. OpenISAC uses an orthogonal frequency division multiplexing (OFDM) waveform and implements crucial sensing functionalities, including both monostatic and bistatic delay-Doppler sensing. A key feature of our platform is a novel over-the-air (OTA) synchronization mechanism that enables robust bistatic operations without requiring a wired connection between nodes. The platform is built entirely on open-source software, leveraging the universal software radio peripheral (USRP) hardware driver (UHD) library, thus eliminating the need for any commercial licenses. It supports a wide range of software-defined radios, from the cost-effective USRP B200 series to the high-performance X400 series. The physical layer modulator and demodulator are implemented in C++ for high-speed processing, while the sensing data is streamed to a Python environment, providing a user-friendly interface for rapid prototyping and validation of sensing signal processing algorithms. With flexible parameter selection and real-time communication and sensing operation, OpenISAC serves as a powerful and accessible tool for the academic and research communities to explore and innovate within the field of OFDM-ISAC.

Cross-Phase Modulation (XPM) constitutes a critical nonlinear impairment in high-capacity Wavelength Division Multiplexing (WDM) systems, significantly driven by intensity fluctuations (IFs) that evolve due to chromatic dispersion. This paper presents an enhanced XPM model that explicitly incorporates frequency-domain IF growth along the fiber, improving upon prior models that focused primarily on temporal pulse deformation. A direct correlation between this frequency-domain growth and XPM-induced phase distortions is established and analyzed. Results demonstrate that IF evolution, particularly at lower frequencies, profoundly affects XPM phase fluctuation spectra and phase variance. Validated through simulations, the model accurately predicts these spectral characteristics across various system parameters. Furthermore, the derived phase variance enables accurate prediction of system performance in terms of Bit Error Ratio (BER). These findings highlight the necessity of modeling frequency-domain IF evolution to accurately characterize XPM impairments, offering guidance for the design of advanced optical networks.

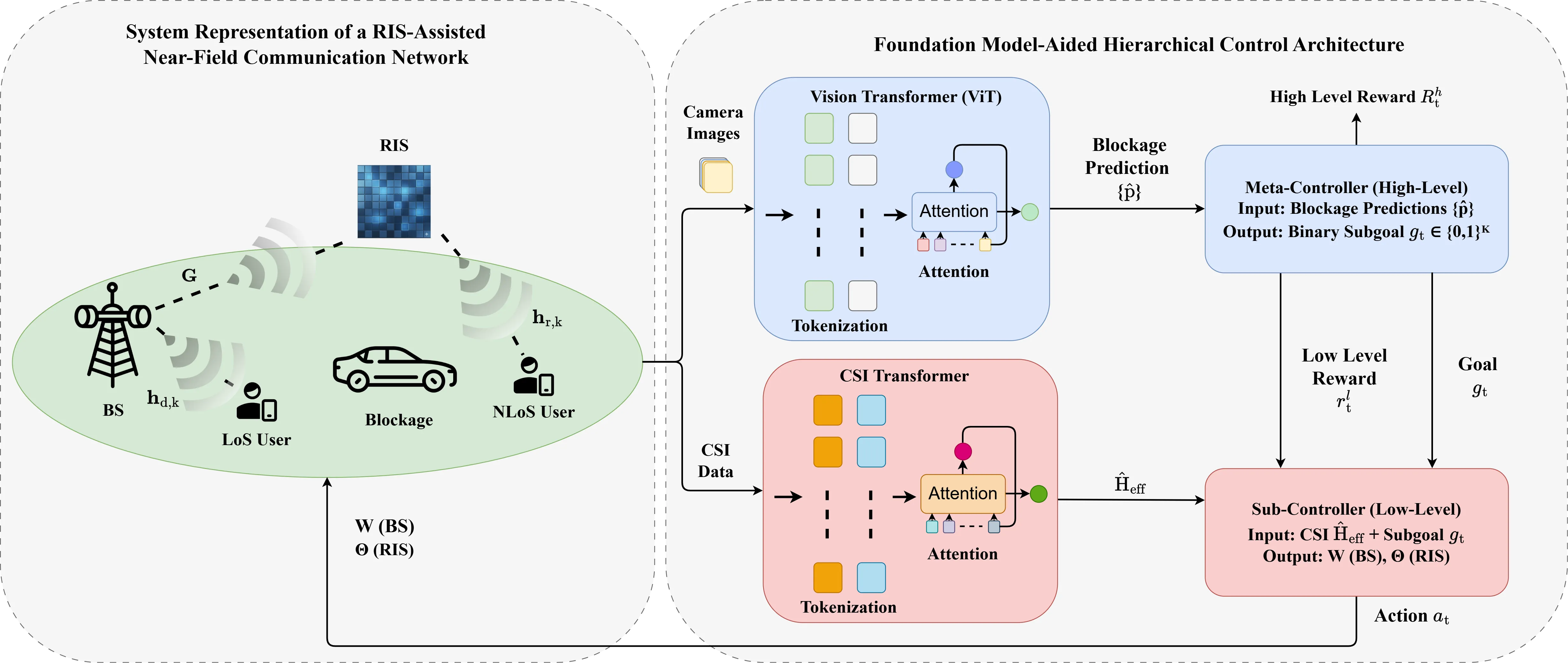

The deployment of extremely large aperture arrays (ELAAs) in sixth-generation (6G) networks could shift communication into the near-field communication (NFC) regime. In this regime, signals exhibit spherical wave propagation, unlike the planar waves in conventional far-field systems. Reconfigurable intelligent surfaces (RISs) can dynamically adjust phase shifts to support NFC beamfocusing, concentrating signal energy at specific spatial coordinates. However, effective RIS utilization depends on both rapid channel state information (CSI) estimation and proactive blockage mitigation, which occur on inherently different timescales. CSI varies at millisecond intervals due to small-scale fading, while blockage events evolve over seconds, posing challenges for conventional single-level control algorithms. To address this issue, we propose a dual-transformer (DT) hierarchical framework that integrates two specialized transformer models within a hierarchical deep reinforcement learning (HDRL) architecture, referred to as the DT-HDRL framework. A fast-timescale transformer processes ray-tracing data for rapid CSI estimation, while a vision transformer (ViT) analyzes visual data to predict impending blockages. In HDRL, the high-level controller selects line-of-sight (LoS) or RIS-assisted non-line-of-sight (NLoS) transmission paths and sets goals, while the low-level controller optimizes base station (BS) beamfocusing and RIS phase shifts using instantaneous CSI. This dual-timescale coordination maximizes spectral efficiency (SE) while ensuring robust performance under dynamic conditions. Simulation results demonstrate that our approach improves SE by approximately 18% compared to single-timescale baselines, while the proposed blockage predictor achieves an F1-score of 0.92, providing a 769 ms advance warning window in dynamic scenarios.

In this paper, the average symbol error probability (SEP) of a phase-quantized single-input multiple-output (SIMO) system with M-ary phase-shift keying (PSK) modulation is analyzed under Rayleigh fading and additive white Gaussian noise. By leveraging a novel method, we derive exact SEP expressions for a quadrature PSK (QPSK)-modulated n-bit phase-quantized SIMO system with maximum ratio combining (SIMO-MRC), along with the corresponding high signal-to-noise ratio (SNR) characterizations in terms of diversity and coding gains. For a QPSK-modulated 2-bit phase-quantized SIMO system with selection combining, the diversity and coding gains are further obtained for an arbitrary number of receive antennas, complementing existing results. Interestingly, the proposed method also reveals a duality between a SIMO-MRC system and a phase-quantized multiple-input single-output (MISO) system with maximum ratio transmission, when the modulation order, phase-quantization resolution, antenna configuration, and the channel state information (CSI) conditions are reciprocal. This duality enables direct inference to obtain the diversity of a general M-PSK-modulated n-bit phase-quantized SIMO-MRC system, and extends the results to its MISO counterpart. All the above results have been obtained assuming perfect CSI at the receiver (CSIR). Finally, the SEP analysis of a QPSK-modulated 2-bit phase-quantized SIMO system is extended to the limited CSIR case, where the CSI at each receive antenna is represented by only 2 bits of channel phase information. In this scenario, the diversity gain is shown to be further halved in general.

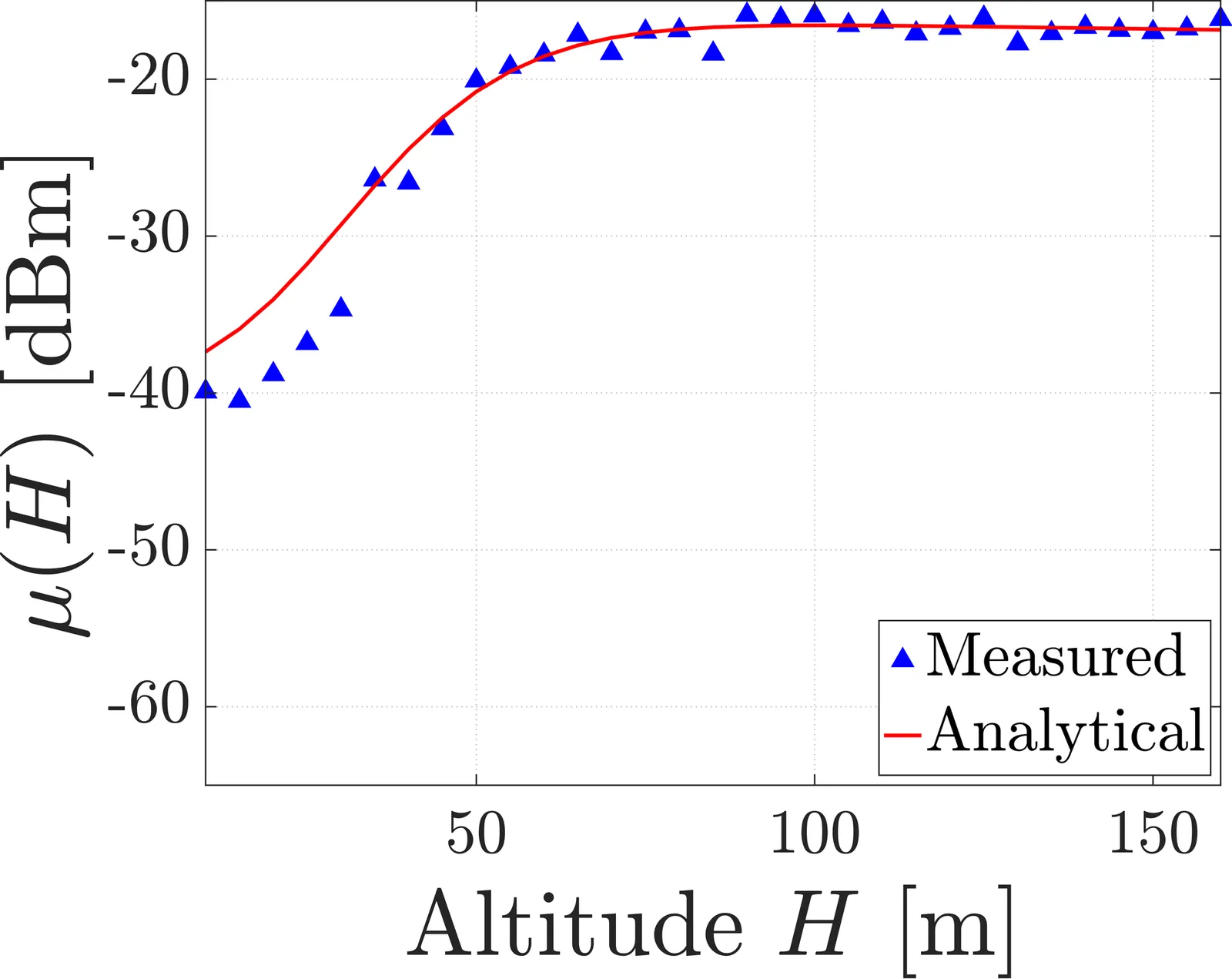

This paper studies the transferability of altitude-dependent spectrum activity models and measurements across years. We introduce a physics-informed, mean-only stochastic-geometry model of aggregate interference to altitude-binned received power, yielding three interpretable parameters for a given band and campaign: 1) line-of-sight transition slope, 2) transition altitude, and 3) effective activity constant. Analysis of aerial spectrum measurements collected from 2023 to 2025 across multiple sub-6 GHz bands reveals that downlink (DL) and shared-access bands preserve a persistent geometry-driven altitude structure that is stable across years. In contrast, uplink (UL) bands exhibit weak altitude dependence with no identifiable transition, indicating that interference is dominated by activity dynamics rather than propagation geometry. To quantify the practical limits of model reuse, we evaluate a minimal-calibration method in which the transition altitude is fixed from a reference year and the remaining parameters are estimated from only two altitude bins in the target year. The results further indicate that the proposed approach provides accurate predictions for DL and CBRS bands, suggesting the feasibility of low-cost model transfer in stable environments, while highlighting the reduced applicability of mean-field models for UL scenarios.

In this paper, we propose a spectral-efficient LoRa (SE-LoRa) modulation scheme with a low-complexity successive interference cancellation (SIC)-based detector. The proposed communication scheme significantly improves the spectral efficiency of LoRa modulation, while achieving an acceptable error performance compared to conventional LoRa modulation, especially in higher spreading factor (SF) settings. We derive the joint maximum likelihood (ML) detection rule for the SE-LoRa transmission scheme, which turns out to be of high computational complexity. To overcome this issue, and by exploiting the frequency-domain characteristics of the dechirped SE-LoRa signal, we propose a low-complexity SIC-based detector with a computational complexity on the order of conventional LoRa detection. By computer simulations, we show that the proposed SE-LoRa with the low-complexity SIC-based detector can improve the spectral efficiency of LoRa modulation by up to $445.45\%$, $1011.11\%$, and $1071.88\%$ for SF values of $7$, $9$, and $11$, respectively, while maintaining the error performance within less than $3$ dB of conventional LoRa at a symbol error rate (SER) of $10^{-3}$ in Rician channel conditions.
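
For context on the "dechirped signal" and "conventional LoRa detection" mentioned above, here is a minimal sketch of the conventional baseline only: a chirp symbol is multiplied by the conjugate base chirp and the strongest DFT bin is taken as the symbol. It assumes the common discrete-time model sampled at the signal bandwidth and a noise-free channel. The SE-LoRa waveform and its SIC detector are not described in this listing and are not reproduced. All names are hypothetical.

```python
import cmath
import math

def lora_symbol(symbol, sf):
    """Discrete-time LoRa chirp carrying `symbol`, with 2**sf samples."""
    n_chips = 2 ** sf
    return [cmath.exp(2j * math.pi * (n * n / (2 * n_chips) + symbol * n / n_chips))
            for n in range(n_chips)]

def detect_symbol(received, sf):
    """Dechirp with the conjugate base chirp, then pick the largest DFT bin."""
    n_chips = 2 ** sf
    base = lora_symbol(0, sf)
    dechirped = [r * b.conjugate() for r, b in zip(received, base)]
    best_bin, best_mag = 0, -1.0
    for k in range(n_chips):
        # Direct DFT for clarity; a practical receiver would use an FFT.
        acc = sum(d * cmath.exp(-2j * math.pi * k * n / n_chips)
                  for n, d in enumerate(dechirped))
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)
    return best_bin

sf = 7
tx = 93                          # hypothetical transmitted symbol, 0 <= tx < 2**sf
rx = lora_symbol(tx, sf)         # noise-free channel for illustration
detected = detect_symbol(rx, sf)

# Conventional LoRa carries sf bits per symbol of 2**sf chips.
spectral_efficiency = sf / 2 ** sf   # bits/s/Hz
print(detected, spectral_efficiency)
```

The low baseline efficiency of `sf / 2**sf` bits/s/Hz is what makes large relative gains, such as those quoted in the abstract, possible.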

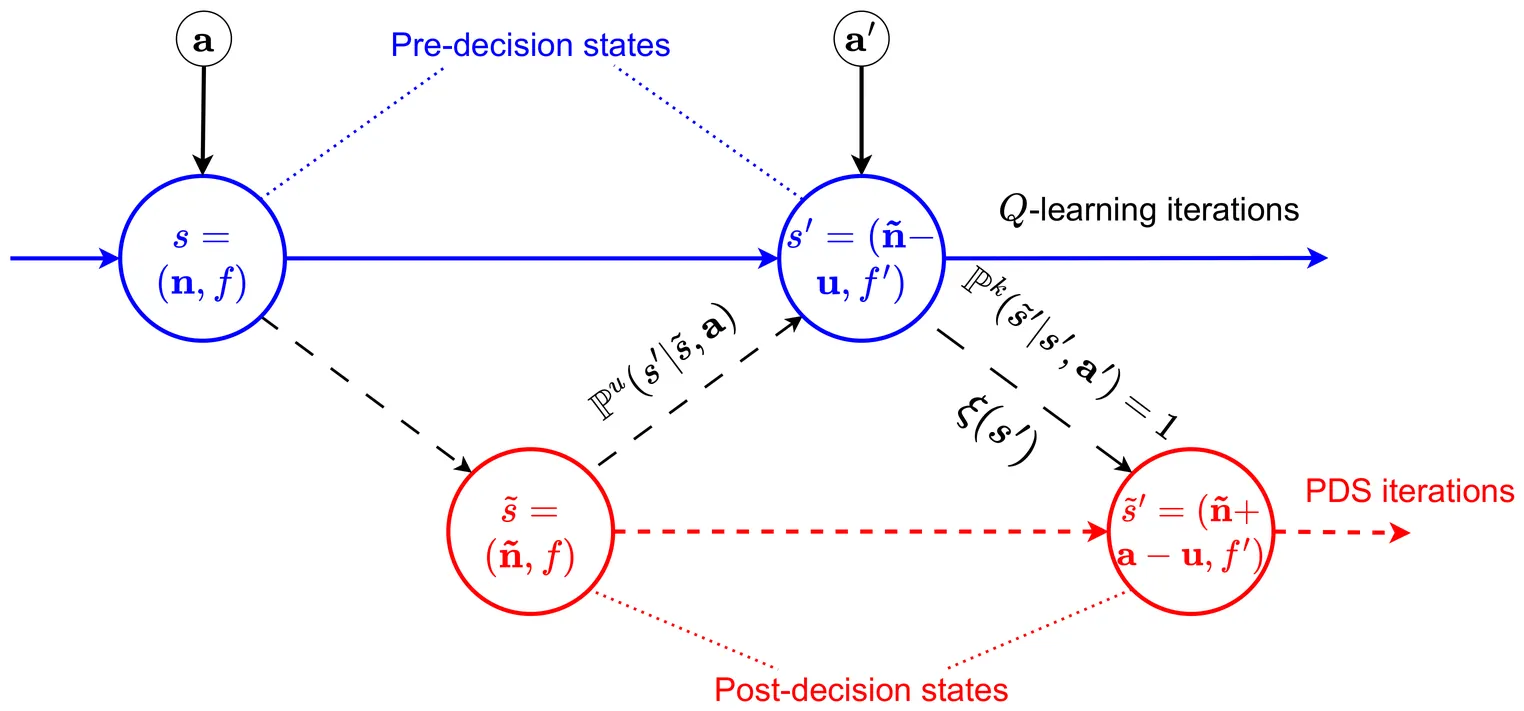

We develop a structure-aware reinforcement learning (RL) approach for delay- and energy-aware flow allocation in 5G User Plane Functions (UPFs). We consider a dynamic system with $K$ heterogeneous UPFs of varying capacities that handle stochastic arrivals of $M$ flow types, each with distinct rate requirements. We model the system as a Markov decision process (MDP) to capture the stochastic nature of flow arrivals and departures (possibly unknown), as well as the impact of flow allocation in the system. To solve this problem, we propose a post-decision state (PDS) based value iteration algorithm that exploits the underlying structure of the MDP. By separating action-controlled dynamics from exogenous factors, PDS enables faster convergence and efficient adaptive flow allocation, even in the absence of statistical knowledge about exogenous variables. Simulation results demonstrate that the proposed method converges faster and achieves lower long-term cost than standard Q-learning, highlighting the effectiveness of PDS-based RL for resource allocation in wireless networks.

This paper proposes a machine learning (ML)-based method for channel prediction in high-mobility orthogonal time frequency space (OTFS) channels. In these scenarios, rapid variations caused by Doppler spread and time-varying multipath propagation lead to fast channel decorrelation, making conventional pilot-based channel estimation methods prone to outdated channel state information (CSI) and excessive overhead. Therefore, reliable channel prediction methods become essential to support robust detection and decoding in OTFS systems. We propose the conditional variational autoencoder for channel prediction (CVAE4CP) method, which learns the conditional distribution of OTFS delay-Doppler channel coefficients given physical system and mobility parameters. By incorporating these parameters as conditioning information, the proposed method enables the prediction of future channel coefficients before their actual realization, while accounting for inherent channel uncertainty through a low-dimensional latent representation. The proposed framework is evaluated through extensive simulations under high-mobility conditions. Numerical results demonstrate that CVAE4CP consistently outperforms a competing learning-based baseline in terms of normalized mean squared error (NMSE), particularly at high Doppler frequencies and extended prediction horizons. These results confirm the effectiveness and robustness of the proposed approach for channel prediction in rapidly time-varying OTFS systems.

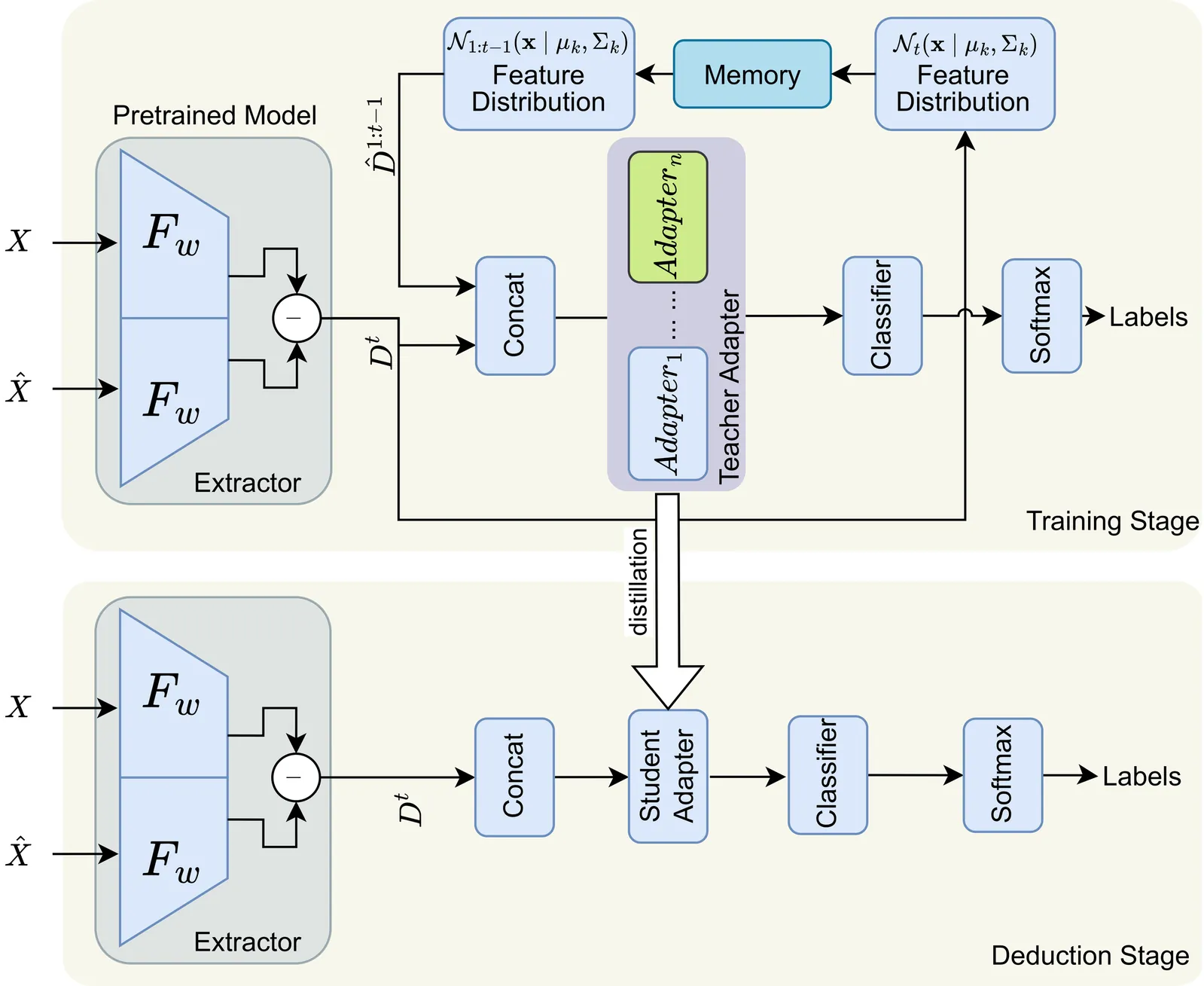

The rapid proliferation of wireless devices makes robust identity authentication essential. Radio Frequency Fingerprinting (RFF) exploits device-specific, hard-to-forge physical-layer impairments for identification, and is promising for IoT and unmanned systems. In practice, however, new devices continuously join deployed systems while per-class training data are limited. Conventional static training and naive replay of stored exemplars are impractical due to growing class cardinality, storage cost, and privacy concerns. We propose an exemplar-free class-incremental learning framework tailored to RFF recognition. Starting from a pretrained feature extractor, we freeze the backbone during incremental stages and train only a classifier together with lightweight Adapter modules that perform small task-specific feature adjustments. For each class we fit a diagonal Gaussian Mixture Model (GMM) to the backbone features and sample pseudo-features from these fitted distributions to rehearse past classes without storing raw signals. To improve robustness under few-shot conditions we introduce a time-domain random-masking augmentation and adopt a multi-teacher distillation scheme to compress stage-wise Adapters into a single inference Adapter, trading off accuracy and runtime efficiency. We evaluate the method on large, self-collected ADS-B datasets: the backbone is pretrained on 2,175 classes and incremental experiments are run on a disjoint set of 669 classes with multiple rounds and step sizes. Against several representative baselines, our approach consistently yields higher average accuracy and lower forgetting, while using substantially less storage and avoiding raw-data retention. The proposed pipeline is reproducible and provides a practical, low-storage solution for RFF deployment in resource- and privacy-constrained environments.

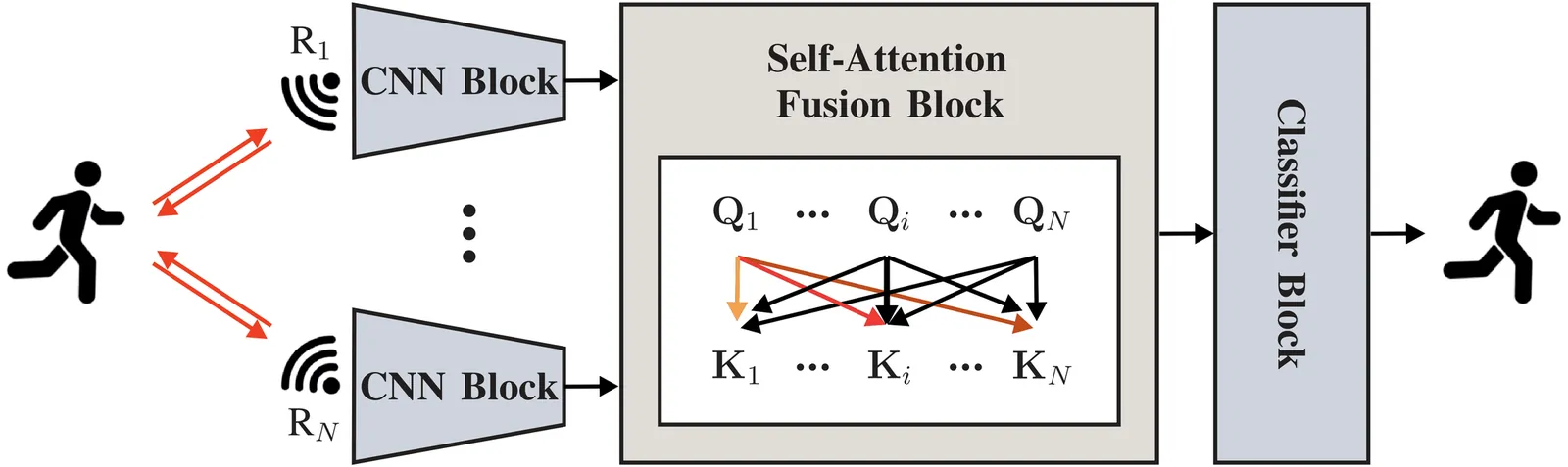

Distributed radar sensors enable robust human activity recognition. However, scaling the number of coordinated nodes introduces challenges in feature extraction from large datasets and in transparent data fusion. We propose an end-to-end framework that operates directly on raw radar data. Each radar node employs a lightweight 2D Convolutional Neural Network (CNN) to extract local features. A self-attention fusion block then models inter-node relationships and performs adaptive information fusion. Local feature extraction reduces the input dimensionality by up to 480x. This significantly lowers communication overhead and latency. The attention mechanism provides inherent interpretability by quantifying the contribution of each radar node. A hybrid supervised contrastive loss further improves feature separability, especially for fine-grained and imbalanced activity classes. Experiments on real-world distributed Ultra Wide Band (UWB) radar data demonstrate that the proposed method reduces model complexity by 70.8%, while achieving higher average accuracy than baseline approaches. Overall, the framework enables transparent, efficient, and low-overhead distributed radar sensing.
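
How a self-attention block can both fuse node features and expose per-node contributions is sketched below. It assumes plain scaled dot-product attention with identity query, key, and value projections (no learned weights). Averaging the attention each node receives is one possible contribution score, not necessarily the one used in the paper. The feature values and function names are hypothetical.

```python
import math

def softmax(values):
    """Numerically stable softmax over a list of scores."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def attention_fusion(node_features):
    """Fuse per-node feature vectors with scaled dot-product self-attention.

    Returns the fused feature vector and a per-node contribution score
    (mean attention each node receives across all queries).
    """
    num_nodes = len(node_features)
    dim = len(node_features[0])
    scale = math.sqrt(dim)
    weights = []
    for query in node_features:
        scores = [sum(q * k for q, k in zip(query, key)) / scale
                  for key in node_features]
        weights.append(softmax(scores))
    # Each node's output is an attention-weighted mix of all node features.
    attended = [[sum(w * feat[j] for w, feat in zip(row, node_features))
                 for j in range(dim)]
                for row in weights]
    fused = [sum(vec[j] for vec in attended) / num_nodes for j in range(dim)]
    contribution = [sum(row[i] for row in weights) / num_nodes
                    for i in range(num_nodes)]
    return fused, contribution

# Hypothetical local features from three radar nodes (e.g. CNN outputs).
features = [
    [0.9, 0.1, 0.4, 0.2],
    [0.8, 0.2, 0.5, 0.1],
    [0.1, 0.7, 0.0, 0.9],
]
fused, contribution = attention_fusion(features)
print(fused)
print(contribution)
```

Because every attention row sums to one, the contribution scores also sum to one and can be read as a relative weighting of the nodes.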

In this article, a framework of AI-native cross-module optimized physical layer with cooperative control agents is proposed, which involves optimization across global AI/ML modules of the physical layer with innovative design of multiple enhancement mechanisms and control strategies. Specifically, it achieves simultaneous optimization across global modules of uplink AI/ML-based joint source-channel coding with modulation, and downlink AI/ML-based modulation with precoding and corresponding data detection, reducing traditional inter-module information barriers to facilitate end-to-end optimization toward global objectives. Moreover, multiple enhancement mechanisms are also proposed, including i) an AI/ML-based cross-layer modulation approach with theoretical analysis for downlink transmission that breaks the isolation of inter-layer features to expand the solution space for determining improved constellation, ii) a utility-oriented precoder construction method that shifts the role of the AI/ML-based CSI feedback decoder from recovering the original CSI to directly generating precoding matrices aiming to improve end-to-end performance, and iii) incorporating modulation into AI/ML-based CSI feedback to bypass bit-level bottlenecks that introduce quantization errors, non-differentiable gradients, and limitations in constellation solution spaces. Furthermore, AI/ML based control agents for optimized transmission schemes are proposed that leverage AI/ML to perform model switching according to channel state, thereby enabling integrated control for global throughput optimization. Finally, simulation results demonstrate the superiority of the proposed solutions in terms of BLER and throughput. These extensive simulations employ more practical assumptions that are aligned with the requirements of the 3GPP, which hopefully provides valuable insights for future standardization discussions.

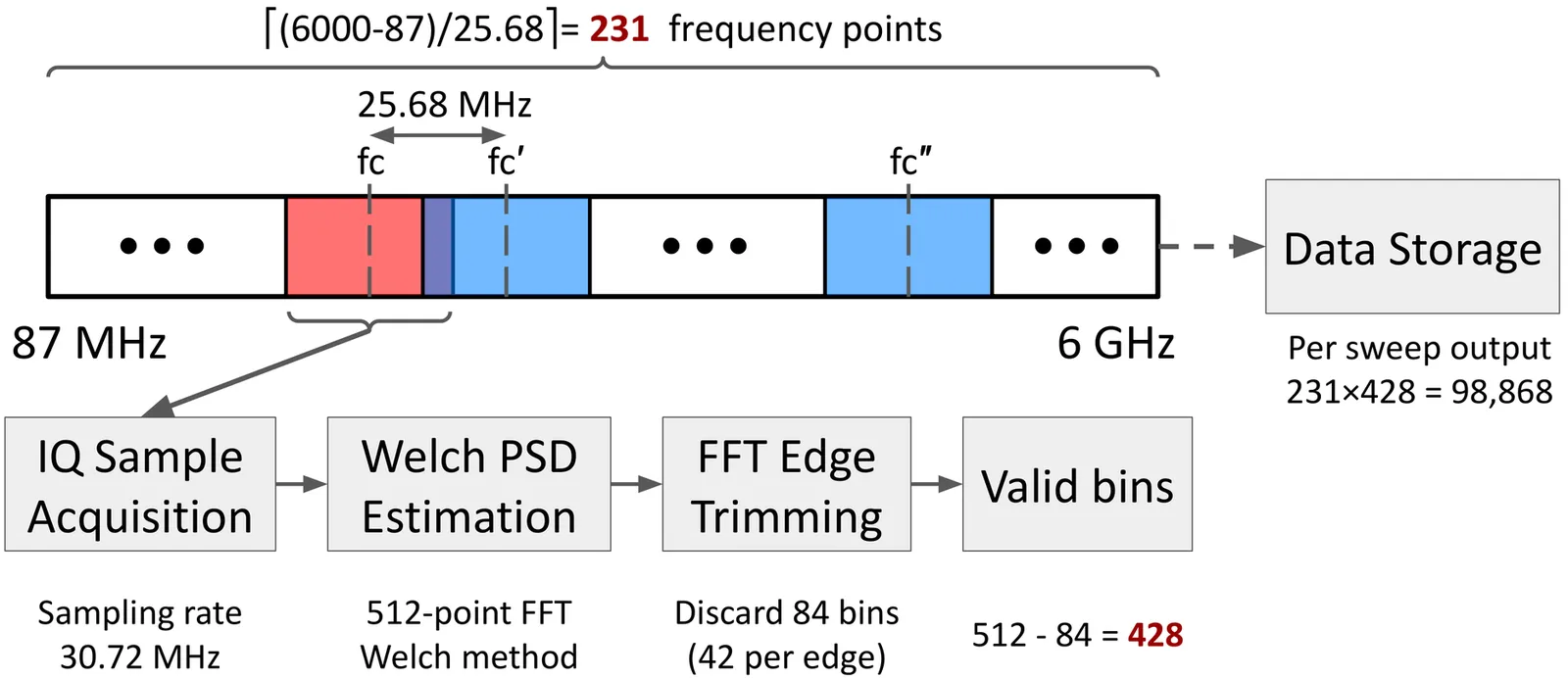

This paper presents a measurement-based framework for characterizing altitude-dependent spectral behavior of signals received by a tethered Helikite unmanned aerial vehicle (UAV). Using a multi-year spectrum measurement campaign in an outdoor urban environment, power spectral density snapshots are collected over the 89 MHz to 6 GHz range. Three altitude-dependent spectral metrics are extracted: band-average power, spectral entropy, and spectral sparsity. We introduce the Altitude-Dependent Spectral Structure Model (ADSSM) to characterize the spectral power and entropy using first-order altitude-domain differential equations, and spectral sparsity using a logistic function, yielding closed-form expressions with physically consistent asymptotic behavior. The model is fitted to altitude-binned measurements from three annual campaigns at the AERPAW testbed across six licensed and unlicensed sub-6 GHz bands. Across all bands and years, the ADSSM achieves low root-mean-square error and high coefficients of determination. Results indicate that power transitions occur over narrow low-altitude regions, while entropy and sparsity evolve over broader, band-dependent altitude ranges, demonstrating that altitude-dependent spectrum behavior is inherently multidimensional. By explicitly modeling altitude-dependent transitions in spectral structure beyond received power, the proposed framework enables spectrum-aware UAV sensing and band selection decisions that are not achievable with conventional power- or threshold-based occupancy models.
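
The abstract does not state the ADSSM equations, so the following is only a generic illustration of the two function families it names. A first-order linear differential equation in altitude $h$ with an assumed scale $h_P$, initial value $P_0$, and asymptote $P_\infty$ has the closed-form solution

$$\frac{dP}{dh} = -\frac{P(h) - P_\infty}{h_P} \quad\Longrightarrow\quad P(h) = P_\infty + (P_0 - P_\infty)\, e^{-h/h_P},$$

which saturates at $P_\infty$ as $h \to \infty$. A logistic curve with assumed steepness $k > 0$ and midpoint altitude $h_c$ takes the form

$$S(h) = S_{\min} + \frac{S_{\max} - S_{\min}}{1 + e^{-k (h - h_c)}},$$

which tends to $S_{\min}$ well below $h_c$ and to $S_{\max}$ well above it. All symbols here are hypothetical placeholders rather than the paper's notation.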

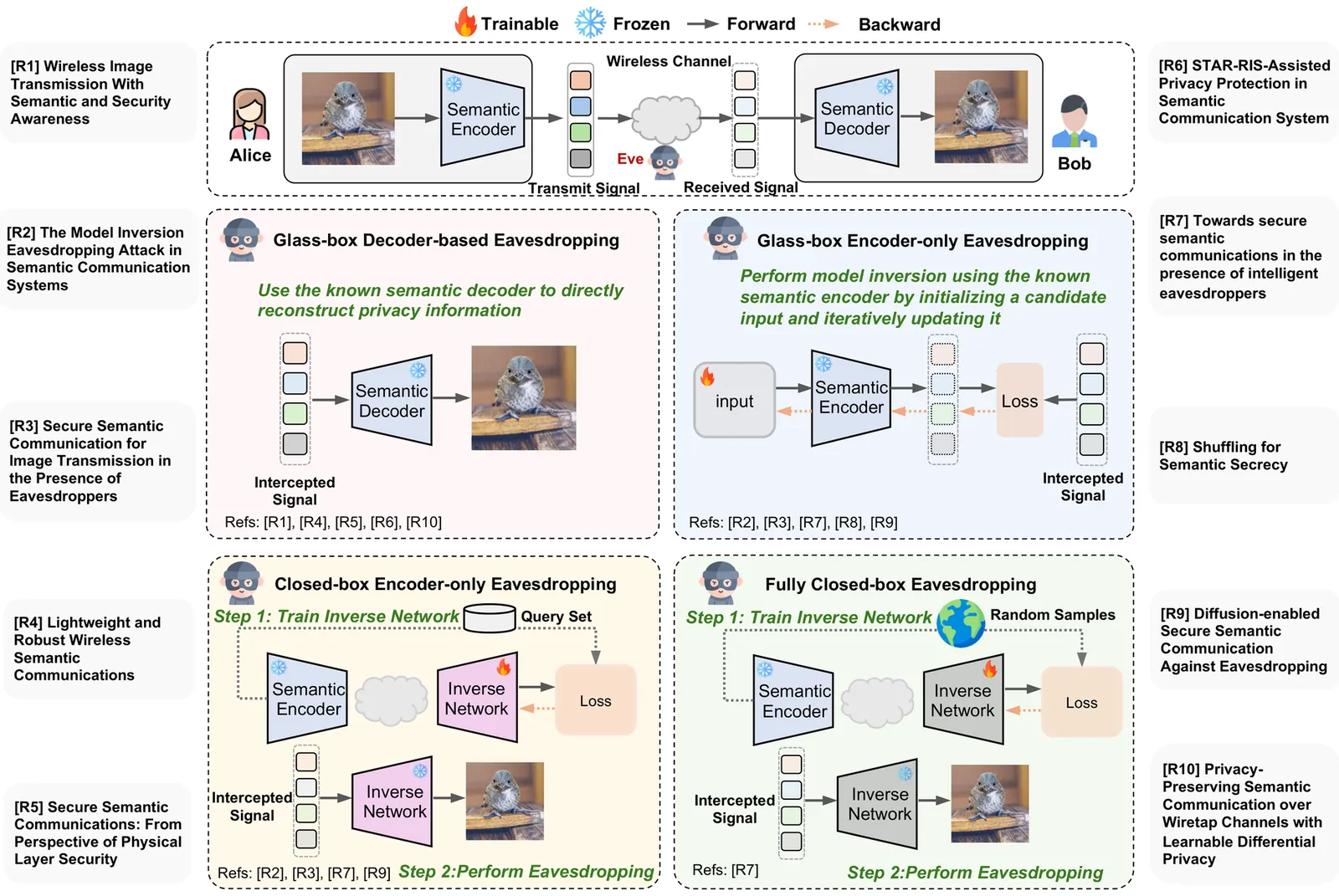

Semantic communication (SemCom) improves communication efficiency by transmitting task-relevant information instead of raw bits and is expected to be a key technology for 6G networks. Recent advances in generative AI (GenAI) further enhance SemCom by enabling robust semantic encoding and decoding under limited channel conditions. However, these efficiency gains also introduce new security and privacy vulnerabilities. Due to the broadcast nature of wireless channels, eavesdroppers can also use powerful GenAI-based semantic decoders to recover private information from intercepted signals. Moreover, rapid advances in agentic AI enable eavesdroppers to perform long-term and adaptive inference through the integration of memory, external knowledge, and reasoning capabilities. This allows eavesdroppers to further infer user private behavior and intent beyond the transmitted content. Motivated by these emerging challenges, this paper comprehensively rethinks the security and privacy of SemCom systems in the age of generative and agentic AI. We first present a systematic taxonomy of eavesdropping threat models in SemCom systems. Then, we provide insights into how GenAI and agentic AI can enhance eavesdropping threats. Meanwhile, we also highlight potential opportunities for leveraging GenAI and agentic AI to design privacy-preserving SemCom systems.