Instrumentation and Methods for Astrophysics

Detector and telescope design, astronomical data analysis techniques and methods.

2601.03966

Dynamic Spectrum Sharing (DSS) is increasingly promoted as a key element of modern spectrum policy, driven by the rising demand from commercial wireless systems and advances in spectrum access technologies. Passive radio sciences, including radio astronomy, Earth remote sensing, and meteorology, operate under fundamentally different constraints. They rely on exceptionally low-interference spectrum and are highly vulnerable to even brief radio frequency interference. We examine whether DSS can benefit passive services or whether it introduces new failure modes and enforcement challenges. We propose just-in-time quiet zones (JITQZ) as a mechanism for protecting high-value observations and assess hybrid frameworks that preserve static protection for core passive bands while allowing constrained dynamic access in adjacent frequencies. We analyze the roles of propagation uncertainty, electromagnetic compatibility constraints, and limited spectrum awareness. Using a game-theoretic framework, we show why non-cooperative sharing fails, identify conditions for sustained cooperation, and examine incentive mechanisms including pseudonymetry-enabled attribution that promote compliance. We conclude that DSS can support passive radio sciences only as a high-reliability, safety-critical system. Static allocations remain essential, and dynamic access is viable only with conservative safeguards and enforceable accountability.

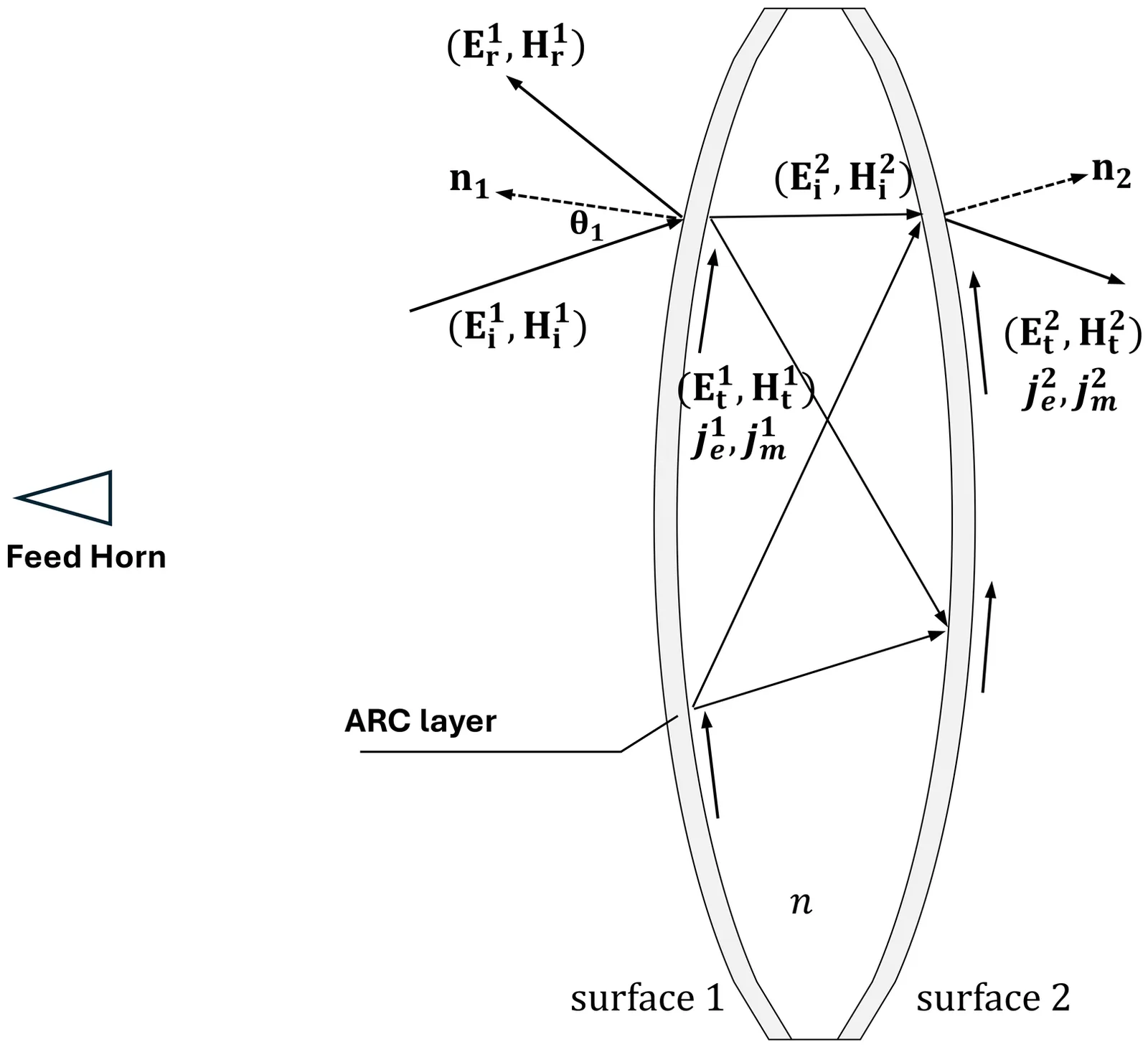

We identify a polarization rotation systematic in the far-field beams of refractive cosmic microwave background (CMB) telescopes caused by differential transmission in anti-reflection (AR) coatings of optical elements. This systematic was identified following the development of a hybrid physical optics method that incorporates full-wave electromagnetic simulations of AR coatings to model the full polarization response of refractive systems. Applying this method to a two-lens CMB telescope with non-ideal AR coating, we show that polarization-dependent transmission can produce a rotation of the far-field polarization angle that varies across the focal plane with a typical amplitude of 0.05-0.5 degrees. If ignored in analysis, this effect can produce temperature-to-polarization leakage and Stokes Q/U mixing.
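
To give a sense of scale for the Q/U mixing mentioned above, the following is a minimal sketch of how a pure polarization-angle rotation mixes the linear Stokes parameters. The function name `rotate_stokes` and the sign convention are assumptions made for illustration; the paper's simulation pipeline is not reproduced here.

```python
import numpy as np

def rotate_stokes(q, u, psi_deg):
    """Apply a polarization-angle rotation psi (in degrees) to Stokes Q and U.

    Stokes Q/U transform with twice the rotation angle. The sign convention
    is an assumption; the opposite convention flips the sign of the sine term.
    """
    two_psi = 2.0 * np.deg2rad(psi_deg)
    c, s = np.cos(two_psi), np.sin(two_psi)
    return c * q + s * u, -s * q + c * u

# A purely Q-polarized input, rotated by the two ends of the quoted range.
q_in, u_in = 1.0, 0.0
for psi in (0.05, 0.5):
    q_out, u_out = rotate_stokes(q_in, u_in, psi)
    print(f"psi = {psi:4.2f} deg -> Q' = {q_out:.6f}, U' = {u_out:+.6f}")
```

For small angles the leaked fraction is approximately $2\psi$ in radians, so the quoted 0.05-0.5 degree range corresponds to roughly 0.2% to 1.7% of Q appearing in U.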

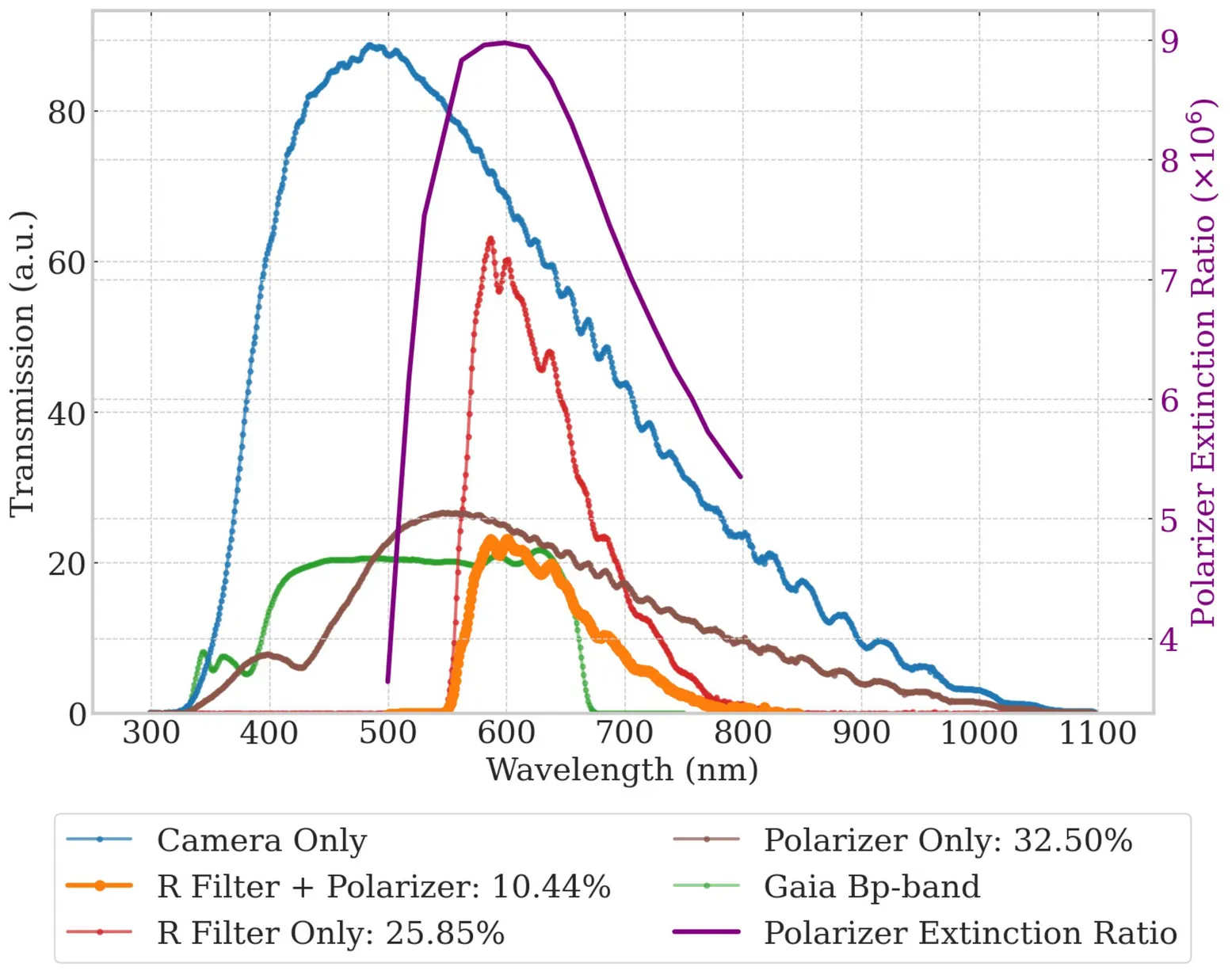

Optical polarimetry provides information on the geometry of the emitting region, the magnetic field configuration and the properties of dust in astrophysical sources. Current state-of-the-art instruments typically have a small field of view (FoV), which poses a challenge for conducting wide surveys. We propose the construction of the Large Array Survey Telescope Polarization Node (LAST-P), a wide-field array of optical polarimeters. LAST-P is designed for high-cadence ($\lesssim 1$ day) polarization monitoring of numerous astrophysical transients, such as the early phases of gamma-ray bursts, supernovae, and novae. Furthermore, LAST-P will facilitate the creation of extensive polarization catalogs for X-ray binaries and white dwarfs, alongside a large FoV study of the interstellar medium. In survey mode, LAST-P will cover a FoV of 88.8 deg$^2$. With a $15 \times 1$-minute exposure, the instrument will be capable of measuring polarization of sources as faint as Gaia Bp-magnitude $\sim$20.9. The precision on the linear polarization degree (PD) will reach 0.7\%, 1.5\%, and 3.5\% for sources with magnitudes 17, 18, and 19, respectively, assuming a seeing of 2.7 arcsec, an air mass of about 1, and observations in dark locations. We propose three distinct non-simultaneous survey strategies, among them an active galactic nuclei (AGN) strategy for long-term monitoring of $\sim$200 AGN with $<$1-day cadence. In this paper, we present the predicted sensitivity of the instrument and outline the various science cases it is designed to explore.

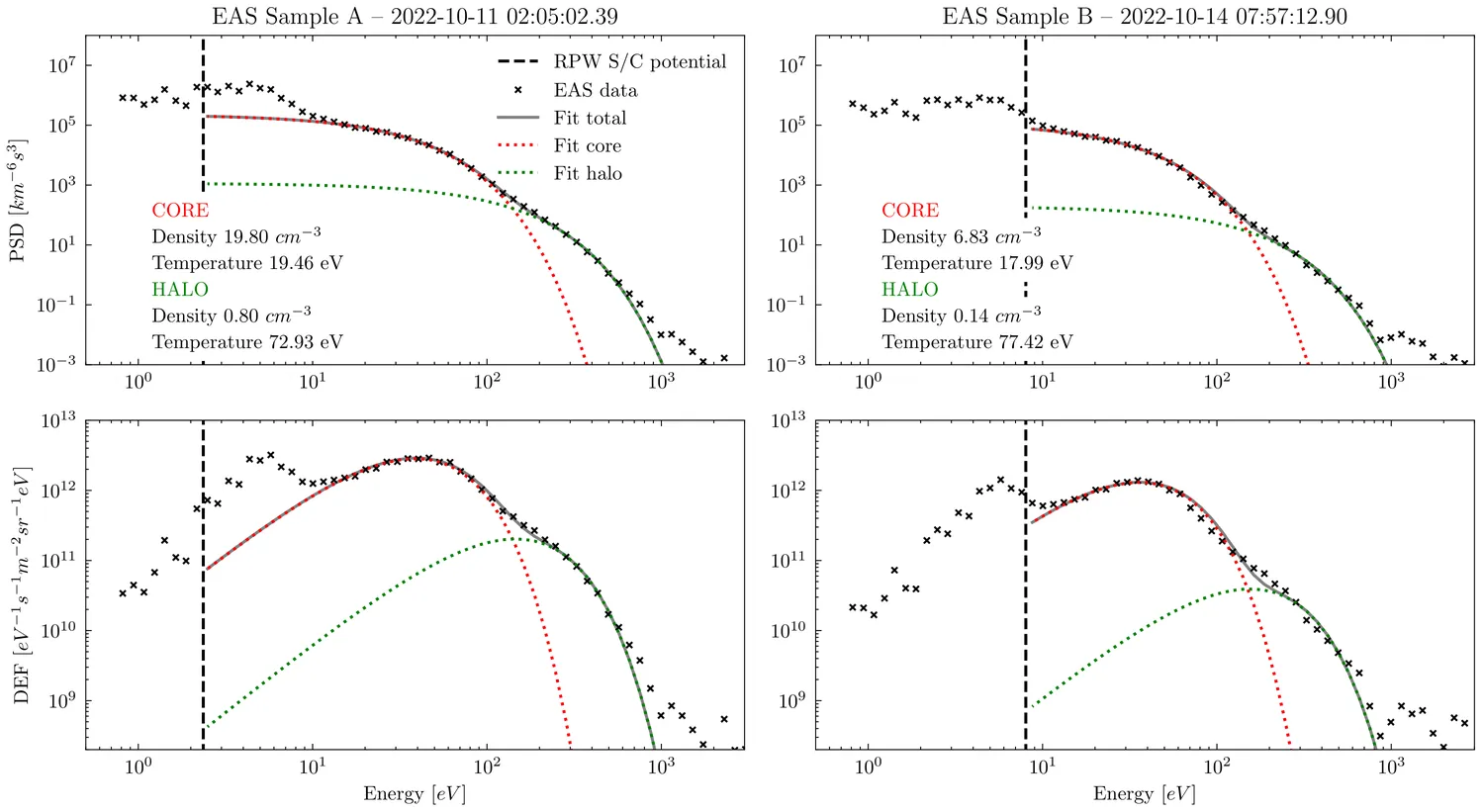

Thermal electron measurements in space plasmas typically suffer at low energies from spacecraft emissions of photo- and secondary electrons and from charging of the spacecraft body. We examine these effects by use of numerical simulations in the context of electron measurements acquired by the Electron Analyser System (SWA-EAS) on board the Solar Orbiter mission. We employed the Spacecraft Plasma Interaction Software to model the interaction of the Solar Orbiter spacecraft with solar wind plasma and we implemented a virtual detector to simulate the measured electron energy spectra as observed in situ by the SWA-EAS experiment. Numerical simulations were set according to the measured plasma conditions at 0.3~AU. We derived the simulated electron energy spectra as detected by the virtual SWA-EAS experiment for different electron populations and compared these with both the initial plasma conditions and the corresponding real SWA-EAS data samples. We found qualitative agreement between the simulated and real data observed in situ by the SWA-EAS detector. Contrary to other space missions, the contamination by cold electrons emitted from the spacecraft is seen well above the spacecraft potential energy threshold. A detailed analysis of the simulated electron energy spectra demonstrates that contamination above the threshold is a result of cold electron fluxes emitted from distant spacecraft surfaces. The relative position of the break in the simulated spectrum with respect to the spacecraft potential slightly deviates from that in the real observations. This may indicate that the real potential of the SWA-EAS detector with respect to ambient plasma differs from the spacecraft potential value measured on board. The overall contamination is shown to be composed of emissions from a number of different sources and their relative contribution varies with the ambient plasma conditions.

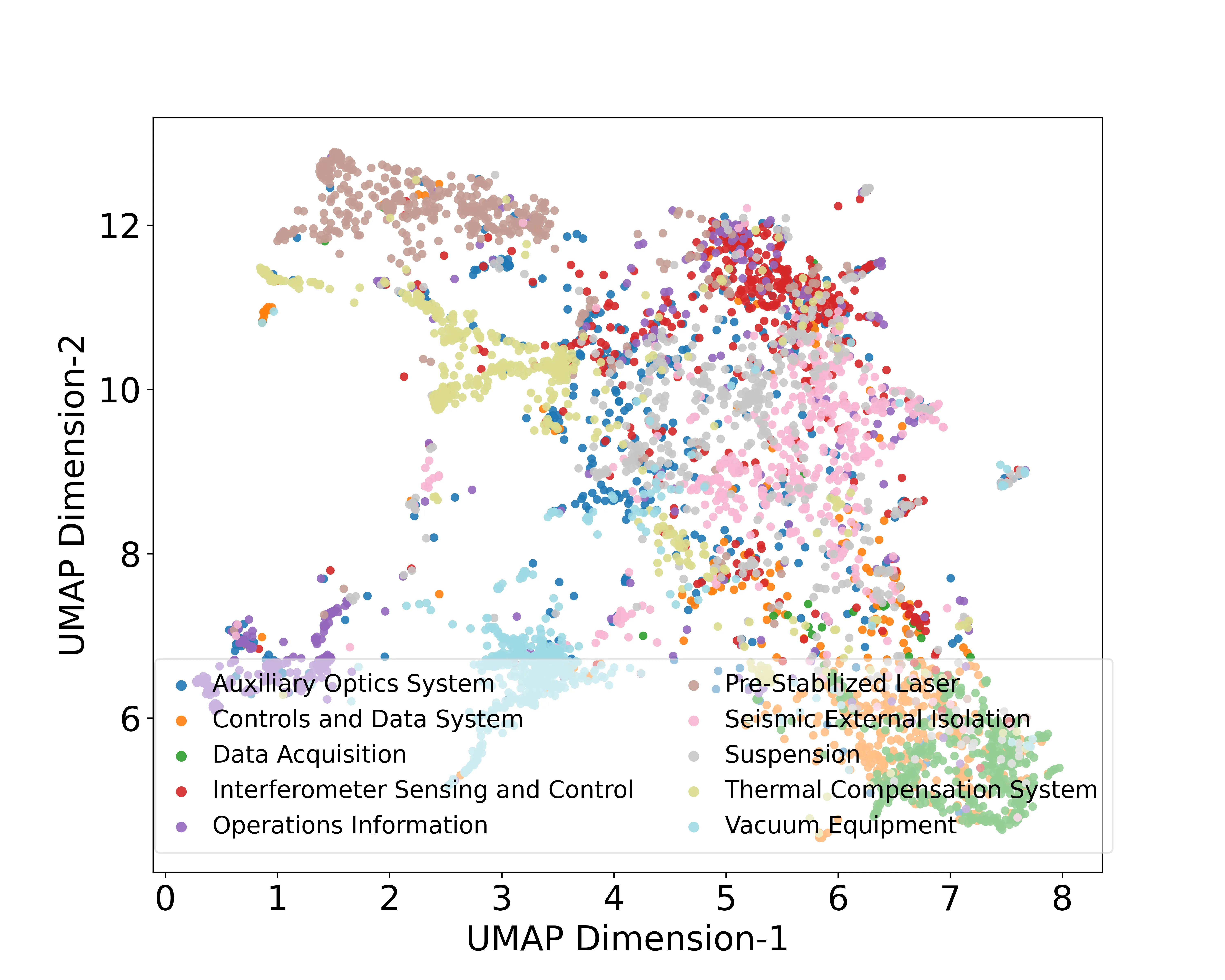

We present MARVEL (https://ligogpt.mit.edu/marvel), a locally deployable, open-source framework for domain-aware question answering and assisted scientific research. It is designed to address the growing demand among scientific groups for a digital assistant that can read highly technical data, cite precisely, and operate within authenticated networks. MARVEL combines a fast path for straightforward queries with a more deliberate DeepSearch mode that integrates retrieval-augmented generation and Monte Carlo Tree Search. It explores complementary subqueries, allocates more compute to promising branches, and maintains a global evidence ledger that preserves sources during drafting. We applied this framework in the context of gravitational-wave research related to the Laser Interferometer Gravitational-wave Observatory. Answers are grounded in a curated semantic index of research literature, doctoral theses, LIGO documents, and long-running detector electronic logbooks, with targeted web searches when appropriate. Because direct benchmarking against commercial LLMs cannot be performed on private data, we evaluated MARVEL on two publicly available surrogate datasets that capture comparable semantic and technical characteristics. On these benchmarks, MARVEL matches a GPT-4o mini baseline on literature-centric queries and substantially outperforms it on detector-operations content, where domain retrieval and guided reasoning are decisive. By making the complete framework and evaluation datasets openly available, we aim to provide a reproducible foundation for developing domain-specific scientific assistants.

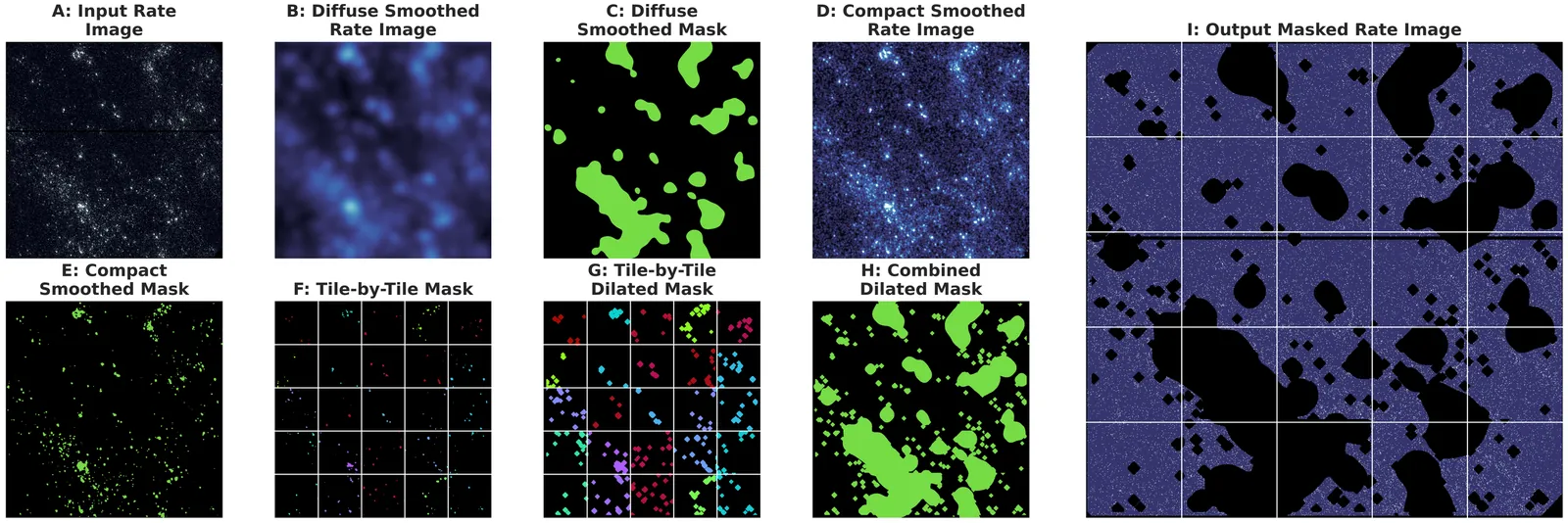

Stage-IV dark energy wide-field surveys, such as the Vera C. Rubin Observatory Legacy Survey of Space and Time (LSST), will observe an unprecedented number density of galaxies. As a result, the majority of imaged galaxies will visually overlap, a phenomenon known as blending. Blending is expected to be a leading source of systematic error in astronomical measurements. To mitigate this systematic, we propose a new probabilistic method for detecting, deblending, and measuring the properties of galaxies, called the Bayesian Light Source Separator (BLISS). Given an astronomical survey image, BLISS uses convolutional neural networks to produce a probabilistic astronomical catalog by approximating the posterior distribution over the number of light sources, their centroids' locations, and their types (galaxy vs. star). BLISS additionally includes a denoising autoencoder to reconstruct unblended galaxy profiles. As a first step towards demonstrating the feasibility of BLISS for cosmological applications, we apply our method to simulated single-band images whose properties are representative of year-10 LSST coadds. First, we study each BLISS component independently and examine its probabilistic output as a function of SNR and degree of blending. Then, by propagating the probabilistic detections from BLISS to its deblender, we produce per-object flux posteriors. Using these posteriors yields a substantial improvement in aperture flux residuals relative to deterministic detections alone, particularly for highly blended and faint objects. These results highlight the potential of BLISS as a scalable, uncertainty-aware tool for mitigating blending-induced systematics in next-generation cosmological surveys.

We present a new fiber assignment algorithm for a robotic fiber positioner system in multi-object spectroscopy. Modern fiber positioner systems typically have overlapping patrol regions, resulting in the number of observable targets being highly dependent on the fiber assignment scheme. To maximize observable targets without fiber collisions, the algorithm proceeds in three steps. First, it assigns the maximum number of targets for a given field of view without considering any collisions between fiber positioners. Then, the fibers in collision are grouped, and the algorithm finds the optimal solution resolving the collision problem within each group. We compare the results from this new algorithm with those from a simple algorithm that assigns targets in descending order of their rank while accounting for collisions. In a field with 150 fibers, the new algorithm increases the overall completeness of target assignments by 10% relative to the simple algorithm. Our new algorithm is designed for the All-sky SPECtroscopic survey of nearby galaxies (A-SPEC) based on the K-SPEC spectrograph system, but can also be applied to similar fiber-based systems with heavily overlapping fiber positioners.
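
The assign-then-resolve idea described above can be illustrated with a toy sketch. Everything here is an assumption made for illustration rather than the authors' implementation: the function names, the circular patrol regions, the collision rule (two assigned targets closer than `d_min`), the augmenting-path matching used for the first step, and the exhaustive search used inside each collision group.

```python
from itertools import product
import math

def reachable(fibers, targets, patrol_radius):
    """For each fiber, list the indices of targets inside its patrol region."""
    return [[j for j, t in enumerate(targets) if math.dist(f, t) <= patrol_radius]
            for f in fibers]

def max_matching(reach, n_targets):
    """Maximum bipartite matching via augmenting paths, collisions ignored."""
    owner = [None] * n_targets          # owner[j] = fiber currently holding target j

    def try_assign(i, seen):
        for j in reach[i]:
            if j in seen:
                continue
            seen.add(j)
            if owner[j] is None or try_assign(owner[j], seen):
                owner[j] = i
                return True
        return False

    for i in range(len(reach)):
        try_assign(i, set())
    assign = [None] * len(reach)
    for j, i in enumerate(owner):
        if i is not None:
            assign[i] = j
    return assign

def collides(targets, a, b, d_min):
    """Toy collision rule (an assumption): assigned targets closer than d_min."""
    return a is not None and b is not None and math.dist(targets[a], targets[b]) < d_min

def collision_groups(assign, targets, d_min):
    """Connected components of the collision graph between assigned fibers."""
    n = len(assign)
    adj = {i: [k for k in range(n)
               if k != i and collides(targets, assign[i], assign[k], d_min)]
           for i in range(n)}
    groups, seen = [], set()
    for i in range(n):
        if i in seen or not adj[i]:
            continue
        stack, comp = [i], []
        seen.add(i)
        while stack:
            v = stack.pop()
            comp.append(v)
            for w in adj[v]:
                if w not in seen:
                    seen.add(w)
                    stack.append(w)
        groups.append(comp)
    return groups

def resolve_group(group, assign, reach, targets, d_min):
    """Exhaustive search for the largest collision-free assignment in one group."""
    fixed = [t for i, t in enumerate(assign) if i not in group and t is not None]
    best, best_count = [None] * len(group), -1
    options = [[None] + [j for j in reach[i] if j not in fixed] for i in group]
    for choice in product(*options):
        used = [c for c in choice if c is not None]
        if len(set(used)) != len(used):
            continue                     # same target given to two fibers
        placed = used + fixed
        if any(collides(targets, a, b, d_min)
               for k, a in enumerate(used) for b in placed[k + 1:]):
            continue                     # collision inside group or with a fixed fiber
        if len(used) > best_count:
            best, best_count = list(choice), len(used)
    for i, c in zip(group, best):
        assign[i] = c

def assign_fibers(fibers, targets, patrol_radius, d_min):
    reach = reachable(fibers, targets, patrol_radius)
    assign = max_matching(reach, len(targets))
    for group in collision_groups(assign, targets, d_min):
        resolve_group(group, assign, reach, targets, d_min)
    return assign

fibers = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
targets = [(0.4, 0.0), (0.6, 0.0), (2.2, 0.0), (1.3, 0.3)]
print(assign_fibers(fibers, targets, patrol_radius=0.8, d_min=0.3))
```

In this toy field the initial matching places two fibers on targets that are only 0.2 apart, and the group-level search then moves one of them to a free target so that all three fibers stay assigned. The exhaustive search scales exponentially with group size, so it is only sensible when collision groups are small.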

We developed a SpaceWire-based data acquisition (DAQ) system for the FOXSI-4 and FOXSI-5 sounding rocket experiments, which aim to observe solar flares with high sensitivity and dynamic range using direct X-ray focusing optics. The FOXSI-4 mission, launched on April 17, 2024, achieved the first direct focusing observation of a GOES M1.6 class solar flare with imaging spectroscopy capabilities in the soft and hard X-ray energy ranges, using a suite of advanced detectors, including two CMOS sensors, four CdTe double-sided strip detectors (CdTe-DSDs), and a Quad-Timepix3 detector. To accommodate the high photon flux from a solar flare and these diverse detector types, a modular DAQ network architecture was implemented based on SpaceWire and the Remote Memory Access Protocol (RMAP). This modular architecture enabled fast, reliable, and scalable communication among various onboard components, including detectors, readout boards, onboard computers, and telemetry systems. In addition, by standardizing the communication interface and modularizing each detector unit and its associated electronics, the architecture also supported distributed development among collaborating institutions, simplifying integration and reducing overall complexity. To realize this architecture, we developed FPGA-based readout boards (SPMU-001 and SPMU-002) that support SpaceWire communication for high-speed data transfer and flexible instrument control. In addition, a real-time ground support system was developed to handle telemetry and command operations during flight, enabling live monitoring and adaptive configuration of onboard instruments in response to the properties of the observed solar flare. The same architecture is being adopted for the upcoming FOXSI-5 mission, scheduled for launch in 2026.

Experiments designed to detect ultra-high energy (UHE) neutrinos using radio techniques are also capable of detecting the radio signals from cosmic-ray (CR) induced air showers. These CR signals are important both as a background and as a tool for calibrating the detector. The Askaryan Radio Array (ARA), a radio detector array, is designed to detect UHE neutrinos. The array currently comprises five independent stations, each instrumented with antennas deployed at depths of up to 200 meters within the ice at the South Pole. In this study, we focus on a candidate event recorded by ARA Station 2 (A2) that shows features consistent with a downward-going CR-induced air shower. This includes distinctive double-pulse signals in multiple channels, interpreted as geomagnetic and Askaryan radio emissions arriving at the antennas in sequence. To investigate this event, we use detailed simulations that combine a modern ice-impacting CR shower simulation framework, FAERIE, with a realistic detector simulation package, AraSim. We will present results for an optimization of the event topology, identified through simulated CR showers, comparing the vertex reconstruction of both the geomagnetic and Askaryan signals of the event, as well as the observed time delays between the two signals in each antenna.

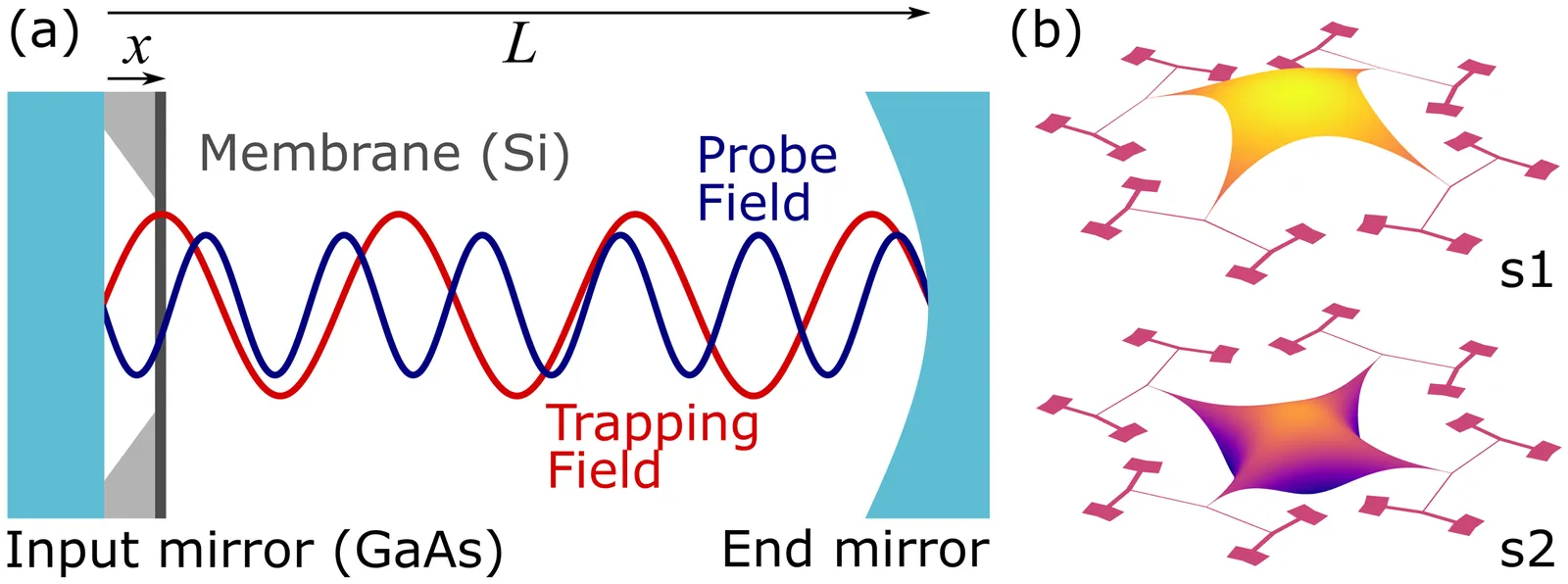

We present a proposal for a nanomechanical membrane resonator integrated into a moderate-finesse ($\mathcal{F}\sim 10$) optical cavity as a versatile platform for detecting high-frequency gravitational waves and vector dark matter. Gravitational-wave sensitivity arises from cavity-length modulation, which resonantly drives membrane motion via the radiation-pressure force. This force also enables in situ tuning of the membrane's resonance frequency by nearly a factor of two, allowing a frequency coverage from 0.5 to 40 kHz using six membranes. The detector achieves a peak strain sensitivity of $2\times 10^{-23}/\sqrt{\text{Hz}}$ at 40 kHz. Using a silicon membrane positioned near a gallium-arsenide input mirror additionally provides sensitivity to vector dark matter via differential acceleration from their differing atomic-to-mass number ratios. The projected reach surpasses the existing limits in the range of $2\times 10^{-12}$ to $2\times 10^{-10}$ $\text{eV}/c^2$ for a one-year measurement. Consequently, the proposed detector offers a unified approach to searching for physics beyond the Standard Model, probing both high-frequency gravitational waves and vector dark matter.

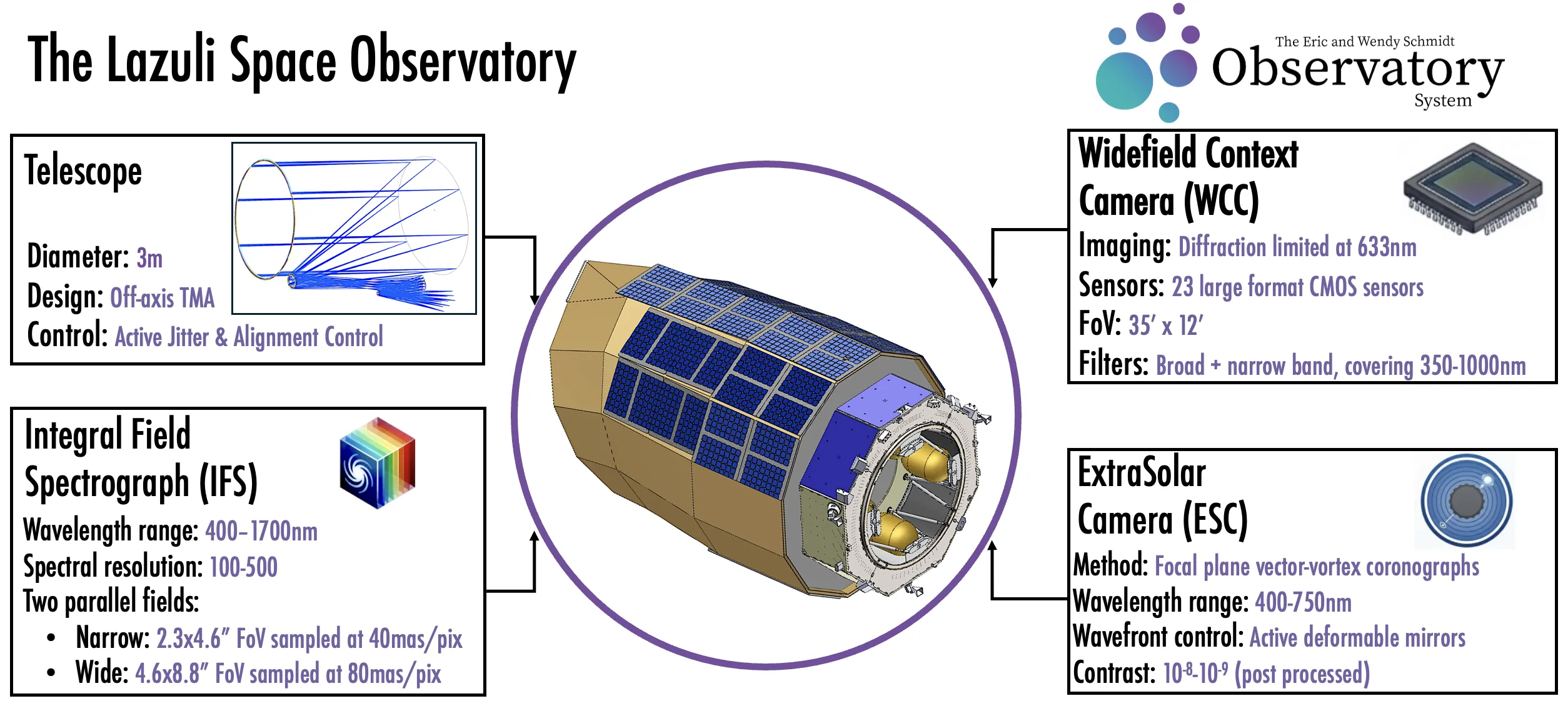

The Lazuli Space Observatory is a 3-meter aperture astronomical facility designed for rapid-response observations and precision astrophysics across visible to near-infrared wavelengths (400-1700 nm bandpass). An off-axis, freeform telescope delivers diffraction-limited image quality (Strehl $>$0.8 at 633 nm) to three instruments across a wide, flat focal plane. The three instruments provide complementary capabilities: a Wide-field Context Camera (WCC) delivers multi-band imaging over a 35' $\times$ 12' footprint with high-cadence photometry; an Integral Field Spectrograph (IFS) provides continuous 400-1700 nm spectroscopy at R $\sim$ 100-500 for stable spectrophotometry; and an ExtraSolar Coronagraph (ESC) enables high-contrast imaging expected to reach raw contrasts of $10^{-8}$ and post-processed contrasts approaching $10^{-9}$. Operating from a 3:1 lunar-resonant orbit, Lazuli will respond to targets of opportunity in under four hours--a programmatic requirement designed to enable routine temporal responsiveness that is unprecedented for a space telescope of this size. Lazuli's technical capabilities are shaped around three broad science areas: (1) time-domain and multi-messenger astronomy, (2) stars and planets, and (3) cosmology. These capabilities enable a potent mix of science spanning gravitational wave counterpart characterization, fast-evolving transients, Type Ia supernova cosmology, high-contrast exoplanet imaging, and spectroscopy of exoplanet atmospheres. While these areas guide the observatory design, Lazuli is conceived as a general-purpose facility capable of supporting a wide range of astrophysical investigations, with open time for the global community. We describe the observatory architecture and capabilities in the preliminary design phase, with science operations anticipated following a rapid development cycle from concept to launch.

We have used 23 years of Hubble Space Telescope ACS/SBC data to study what background levels are encountered in practice and how much they vary. The backgrounds vary considerably, with F115LP, F122M, F125LP, PR110L, and PR130L all showing over an order of magnitude of variation in background between observations, apparently due to changes in airglow. The F150LP and F165LP filters, which are dominated by dark rate, not airglow, exhibit a far smaller variation in backgrounds. For the filters where the background is generally dominated by airglow, the backgrounds measured from the data are significantly lower than what the ETC predicts (as of ETC v33.2). The ETC predictions for `average' airglow are greater than the median of our measured background values by factors of 2.51, 2.64, 105, and 3.64, for F115LP, F122M, F125LP, and F140LP, respectively. A preliminary analysis suggests this could be due to certain OI airglow lines usually being fainter than expected by the ETC. With reduced background levels, the shorter-wavelength SBC filters can conduct background-limited observations much more rapidly than had previously been expected. As of ETC v34.1, a new option will be included for SBC calculations, allowing users to employ empirical background percentiles to estimate required exposure times.

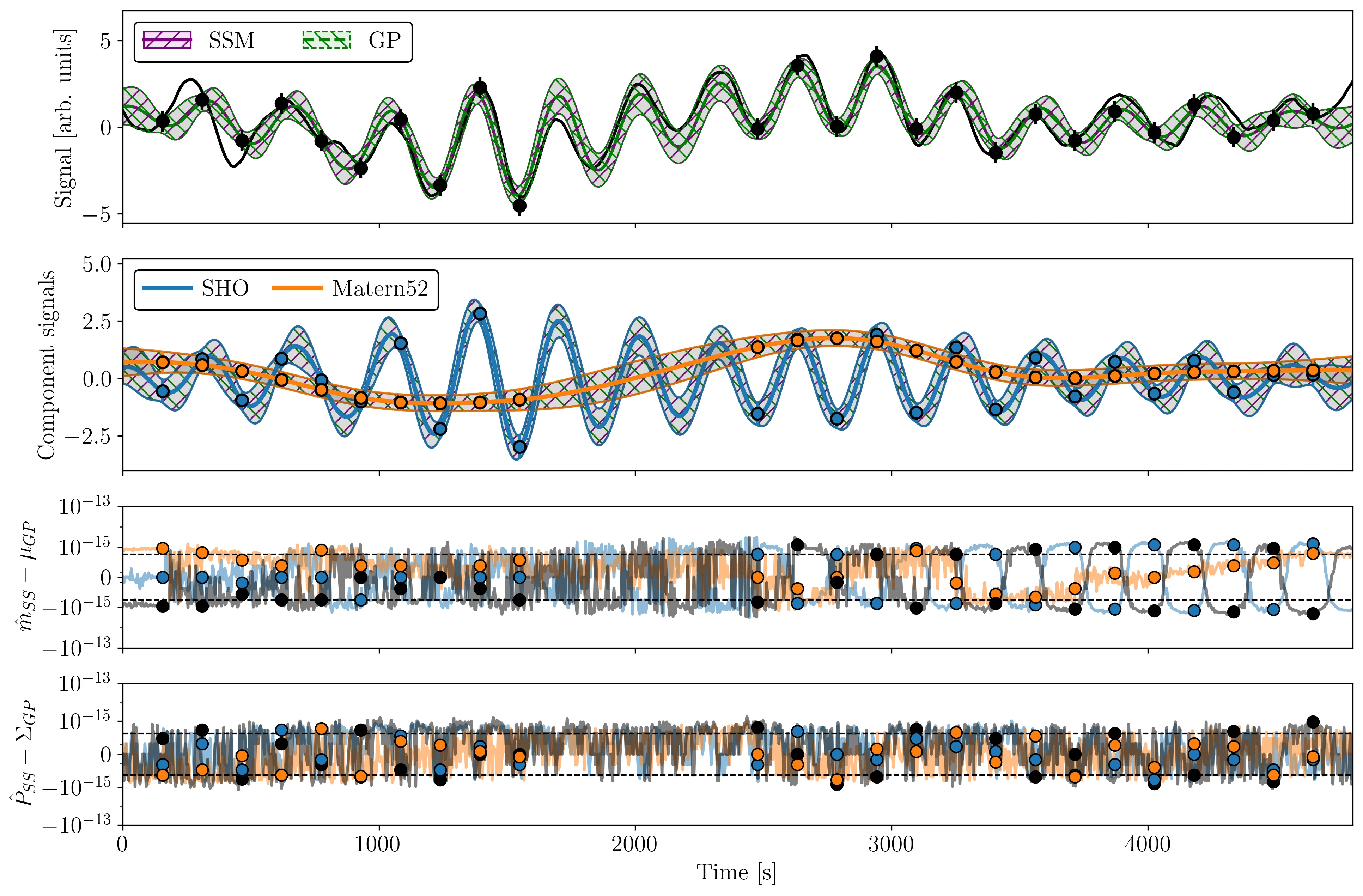

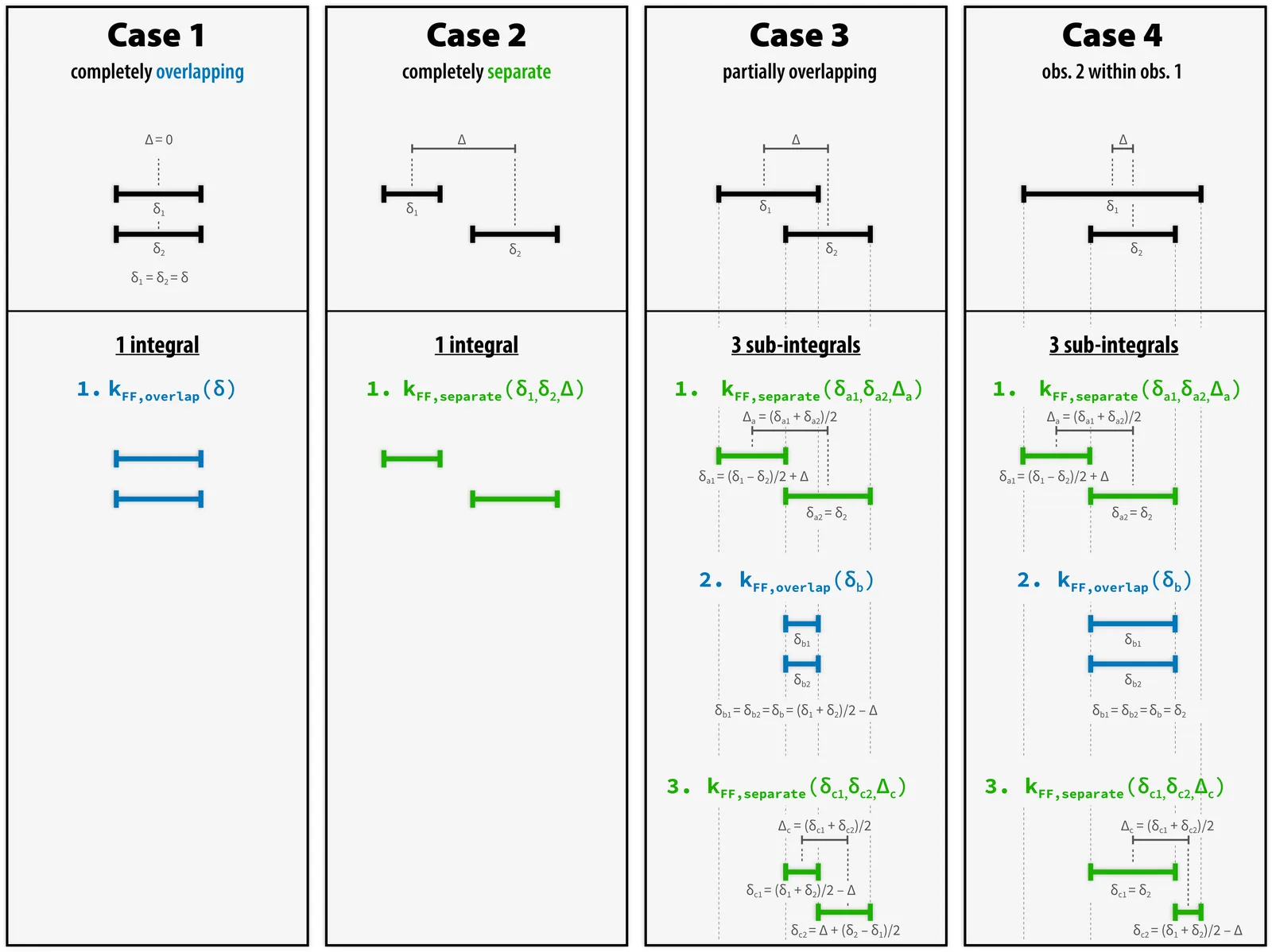

Astronomical measurements are often integrated over finite exposures, which can obscure latent variability on comparable timescales. Correctly accounting for exposure integration with Gaussian Processes (GPs) in such scenarios is essential but computationally challenging: once exposure times vary or overlap across measurements, the covariance matrix forfeits any quasiseparability, forcing O($N^2$) memory and O($N^3$) runtime costs. Linear Gaussian state space models (SSMs) are equivalent to GPs and have well-known O($N$) solutions via the Kalman filter and RTS smoother. In this work, we extend the GP-SSM equivalence to handle integrated measurements while maintaining scalability by augmenting the SSM with an integral state that resets at exposure start times and is observed at exposure end times. This construction yields exactly the same posterior as a fully integrated GP but in O($N$) time on a CPU, and is parallelizable down to O($N/T + \log T$) on a GPU with $T$ parallel workers. We present smolgp (State space Model for O(Linear/log) GPs), an open-source Python/JAX package offering drop-in compatibility with tinygp while supporting both standard and exposure-aware GP modeling. As SSMs provide a framework for representing general GP kernels via their series expansion, smolgp also brings scalable performance to many commonly used covariance kernels in astronomy that lack quasiseparability, such as the quasiperiodic kernel. The substantial performance boosts at large $N$ will enable massive multi-instrument cross-comparisons where exposure overlap is ubiquitous, and unlock the potential for analyses with more complex models and/or higher dimensional datasets.
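
One way to picture the integral-state construction is the following sketch for a single exposure. The notation is assumed for illustration and is not taken from the paper: $x$ is the latent state with drift matrix $F$ and noise input $L\,w(t)$, the latent GP is $f(t) = H x(t)$, $z$ is the added integral state, and the normalization by the exposure length $\Delta_n = t_n^{\rm end} - t_n^{\rm start}$ is one possible choice.

$$
\frac{d}{dt}\begin{pmatrix} x \\ z \end{pmatrix}
= \begin{pmatrix} F & 0 \\ H & 0 \end{pmatrix}
\begin{pmatrix} x \\ z \end{pmatrix}
+ \begin{pmatrix} L \\ 0 \end{pmatrix} w(t),
\qquad
z(t_n^{\rm start}) = 0,
\qquad
y_n = \frac{z(t_n^{\rm end})}{\Delta_n} + \epsilon_n .
$$

Under these assumptions $z(t_n^{\rm end}) = \int_{t_n^{\rm start}}^{t_n^{\rm end}} f(t)\,dt$, so the measurement $y_n$ is the exposure-averaged latent signal plus noise $\epsilon_n$, and the augmented model remains linear and Gaussian. Overlapping exposures would need more bookkeeping than this single-exposure sketch shows.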

Physically motivated Gaussian process (GP) kernels for stellar variability, like the commonly used damped, driven simple harmonic oscillators that model stellar granulation and p-mode oscillations, quantify the instantaneous covariance between any two points. For kernels whose timescales are significantly longer than the typical exposure times, such GP kernels are sufficient. For time series where the exposure time is comparable to the kernel timescale, the observed signal represents an exposure-averaged version of the true underlying signal. This distinction is important in the context of recent data streams from Extreme Precision Radial Velocity (EPRV) spectrographs, such as fast-readout stellar data of asteroseismology targets and solar data used to monitor the Sun's variability during daytime observations. Current solar EPRV facilities have significantly different exposure times per site, owing to the different design choices made. Consequently, each instrument traces different binned versions of the same "latent" signal. Here we present a GP framework that accounts for exposure times by computing integrated forms of the instantaneous kernels typically used. These functions allow one to predict the true latent oscillation signals and the exposure-binned version expected by each instrument. We extend the framework to work for instruments with significant time overlap (i.e., similar longitude) by including relative instrumental drift components that can be predicted and separated from the stellar variability components. We use Sun-as-a-star EPRV datasets as our primary example, but present these approaches in a generalized way for application to any dataset where exposure times are a relevant factor or where instruments with significant overlap are combined.
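
The idea of an integrated kernel can be illustrated numerically. This is a minimal sketch, assuming a simple exponential kernel as a stand-in for the oscillator kernels and a brute-force midpoint quadrature in place of the closed-form integrals a real framework would use; the function names `exp_kernel` and `integrated_kernel` are hypothetical.

```python
import numpy as np

def exp_kernel(tau, sigma=1.0, ell=1.0):
    """Instantaneous covariance; an exponential stand-in for the SHO kernels."""
    return sigma**2 * np.exp(-np.abs(tau) / ell)

def integrated_kernel(t_i, delta_i, t_j, delta_j, kernel=exp_kernel, n=200):
    """Covariance between two exposure-averaged measurements.

    t_i, t_j are exposure midpoints and delta_i, delta_j the exposure lengths.
    The double time average of the kernel over both exposure windows is
    approximated with an n x n midpoint rule.
    """
    offsets = (np.arange(n) + 0.5) / n - 0.5      # midpoints in (-1/2, 1/2)
    s = t_i + delta_i * offsets                   # sample times in exposure i
    u = t_j + delta_j * offsets                   # sample times in exposure j
    return kernel(s[:, None] - u[None, :]).mean()

# Variance seen by an instrument whose exposure equals the kernel timescale,
# compared with the instantaneous variance.
print("instantaneous variance:", exp_kernel(0.0))
print("exposure-averaged variance:", integrated_kernel(0.0, 1.0, 0.0, 1.0))
```

Two instruments with different exposure lengths would call `integrated_kernel` with different `delta` values, which is why they see differently smoothed versions of the same latent signal.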

2601.02448

The majority of stars more massive than the Sun are found in binary or multiple star systems and many of them will interact during their evolution. Specific interactions, where progenitors and post-mass transfer (MT) systems are clearly linked, can provide observational constraints that are still missing. Volume-complete samples of progenitor and post-MT systems are well suited to study those processes. To compile them, we need to determine the parameters of thousands of binary systems with periods spanning several orders of magnitude. The bottleneck is the orbital parameters, because accurate determinations require good coverage of the orbital phases. The next generation of time-resolved spectroscopic surveys should be optimized to follow up and solve whole populations of binary systems in an efficient way. To achieve this, a scheduler predicting the best times of the next observation for any given target in real time should be combined with a flexibly schedulable multi-object spectrograph or ideally a network of independent telescopes.

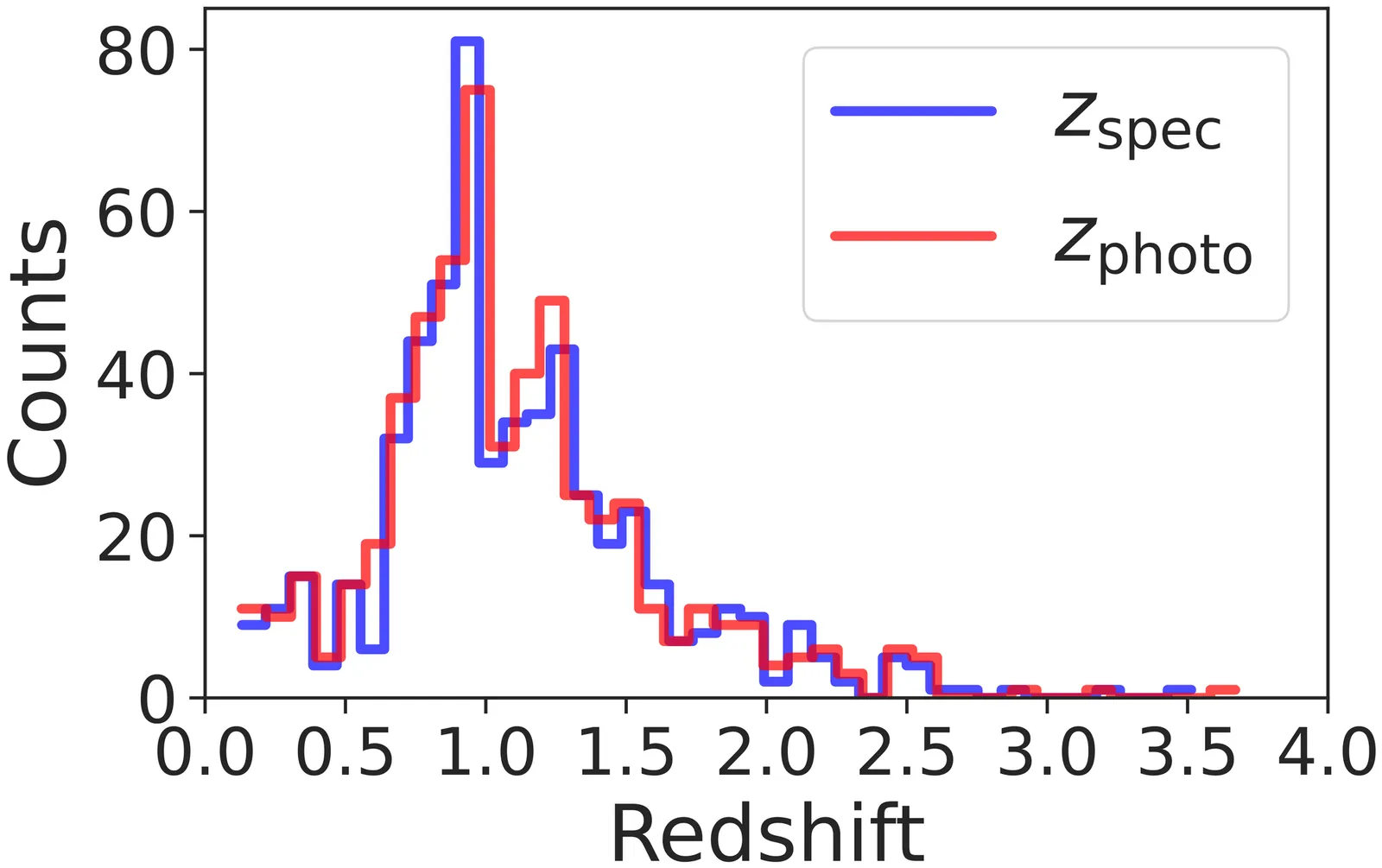

In this work, we demonstrate how Low-Rank Adaptation (LoRA) can be used to combine different galaxy imaging datasets to improve redshift estimation with CNN models for cosmology. LoRA is an established technique for large language models that adds adapter networks to adjust model weights and biases to efficiently fine-tune large base models without retraining. We train a base model using a photometric redshift ground truth dataset, which contains broad galaxy types but is less accurate. We then fine-tune using LoRA on a spectroscopic redshift ground truth dataset. These redshifts are more accurate but limited to bright galaxies and take orders of magnitude more time to obtain, so they are less available for large surveys. Ideally, the combination of the two datasets would yield more accurate models that generalize well. The LoRA model performs better than a traditional transfer learning method, with $\sim$2.5$\times$ less bias and $\sim$2.2$\times$ less scatter. Retraining the model on a combined dataset yields a model that generalizes better than LoRA but at a cost of greater computation time. Our work shows that LoRA is useful for fine-tuning regression models in astrophysics by providing a middle ground between full retraining and no retraining. LoRA shows potential in allowing us to leverage existing pretrained astrophysical models, especially for data-sparse tasks.
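
The low-rank update at the heart of LoRA can be sketched in a few lines. This is an illustration of the standard formulation on a single dense layer, not the CNN setup used in the paper; the class name `LoRALinear` and the values of `rank` and `alpha` are assumptions.

```python
import numpy as np

class LoRALinear:
    """Frozen dense layer plus a trainable low-rank update.

    Effective weight: W_eff = W + (alpha / rank) * B @ A, where only the
    small matrices A and B would be updated during fine-tuning.
    """

    def __init__(self, weight, bias, rank=4, alpha=8.0, seed=0):
        rng = np.random.default_rng(seed)
        d_out, d_in = weight.shape
        self.weight, self.bias = weight, bias                 # frozen base parameters
        self.A = rng.normal(0.0, 0.01, size=(rank, d_in))     # trainable
        self.B = np.zeros((d_out, rank))                      # trainable, zero-initialized
        self.scale = alpha / rank

    def __call__(self, x):
        base = x @ self.weight.T + self.bias
        # Low-rank path: project down to `rank` dimensions, then back up.
        return base + self.scale * (x @ self.A.T) @ self.B.T

    def n_trainable(self):
        return self.A.size + self.B.size

rng = np.random.default_rng(1)
W, b = rng.normal(size=(16, 64)), rng.normal(size=16)
layer = LoRALinear(W, b)
print("base parameters:", W.size + b.size, "trainable LoRA parameters:", layer.n_trainable())
```

Because `B` starts at zero, the adapted layer reproduces the base model exactly before fine-tuning, and the number of trainable parameters grows with the chosen rank rather than with the full weight matrix.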

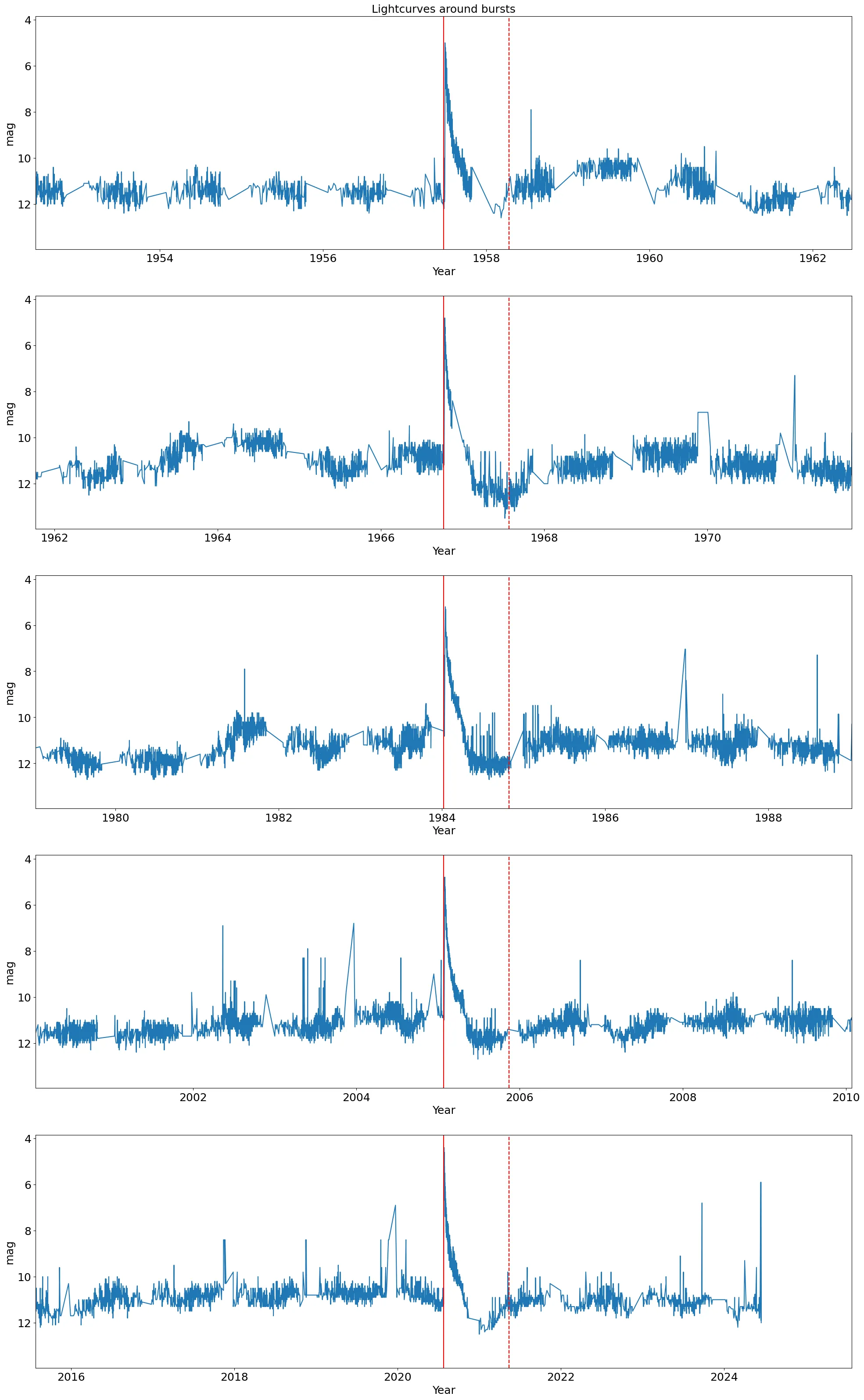

RS Oph is a recurrent nova, a kind of cataclysmic variable that undergoes outbursts recurring on timescales shorter than roughly a century. Persistent homology, a technique from topological data analysis, studies the evolution of topological features of a simplicial complex composed of the data points or an embedding of them, as some distance parameter is varied. For this work, I trained a supervised learning model based on several featurizations (persistence landscapes, Carlsson coordinates, persistence images, and template functions) of the persistence diagrams of sections of the light curve of RS Oph. A tenfold cross-validation of the model based on one of these featurizations, persistence landscapes, consistently shows high recall and accuracy. The method can be used to predict whether RS Oph will undergo an outburst within a year.

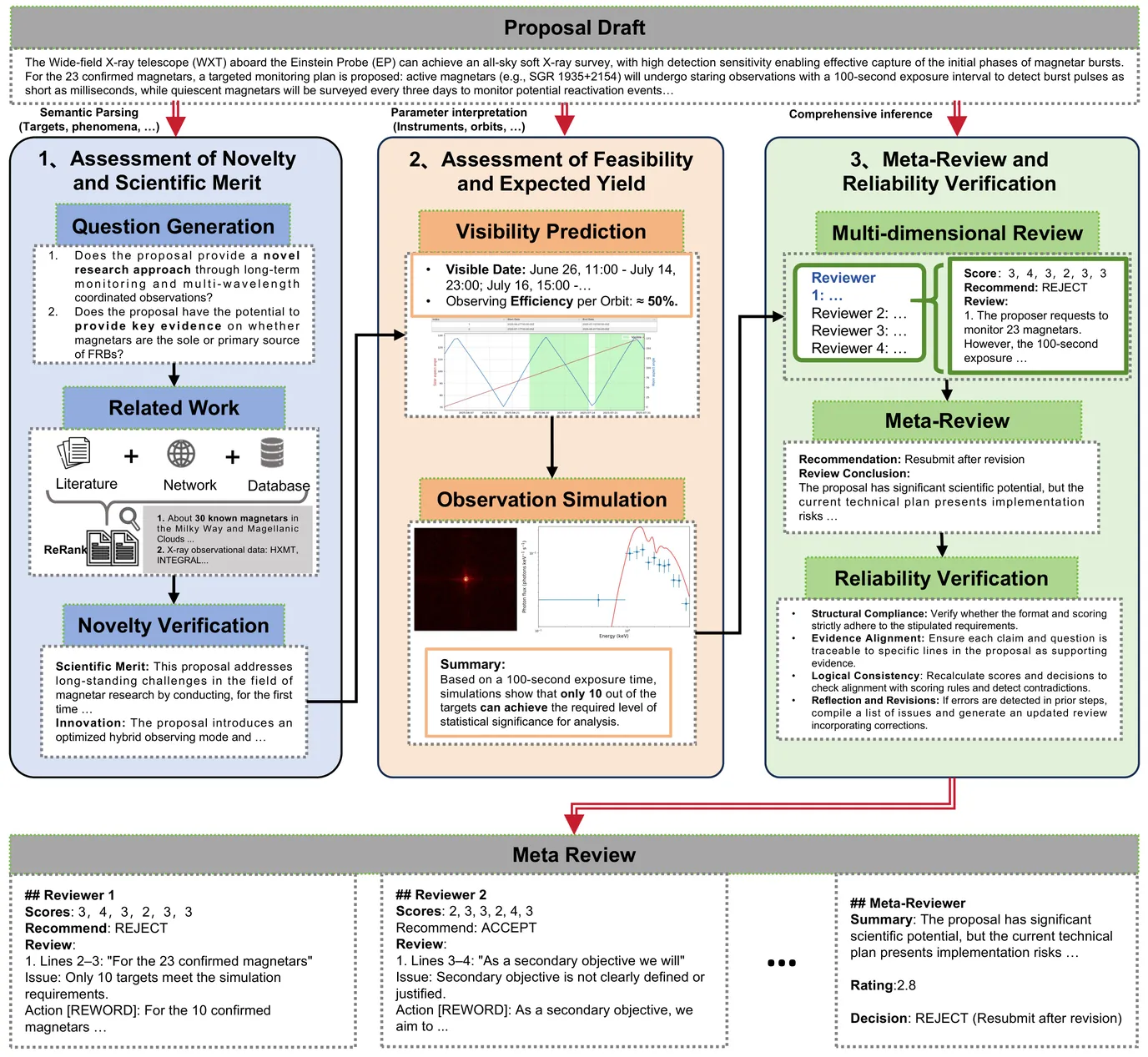

Competitive access to modern observatories has intensified as proposal volumes outpace available telescope time, making timely, consistent, and transparent peer review a critical bottleneck for the advancement of astronomy. Automating parts of this process is therefore both scientifically significant and operationally necessary to ensure fair allocation and reproducible decisions at scale. We present AstroReview, an open-source, agent-based framework that automates proposal review in three stages: (i) novelty and scientific merit, (ii) feasibility and expected yield, and (iii) meta-review and reliability verification. Task isolation and explicit reasoning traces curb hallucinations and improve transparency. Without any domain-specific fine-tuning, AstroReview, used in our experiments only for the last stage, correctly identifies genuinely accepted proposals with an accuracy of 87%. The AstroReview in Action module replicates the review and refinement loop; with its integrated Proposal Authoring Agent, the acceptance rate of revised drafts increases by 66% after two iterations, showing that iterative feedback combined with automated meta-review and reliability verification delivers measurable quality gains. Together, these results point to a practical path toward scalable, auditable, and higher-throughput proposal review for resource-limited facilities.

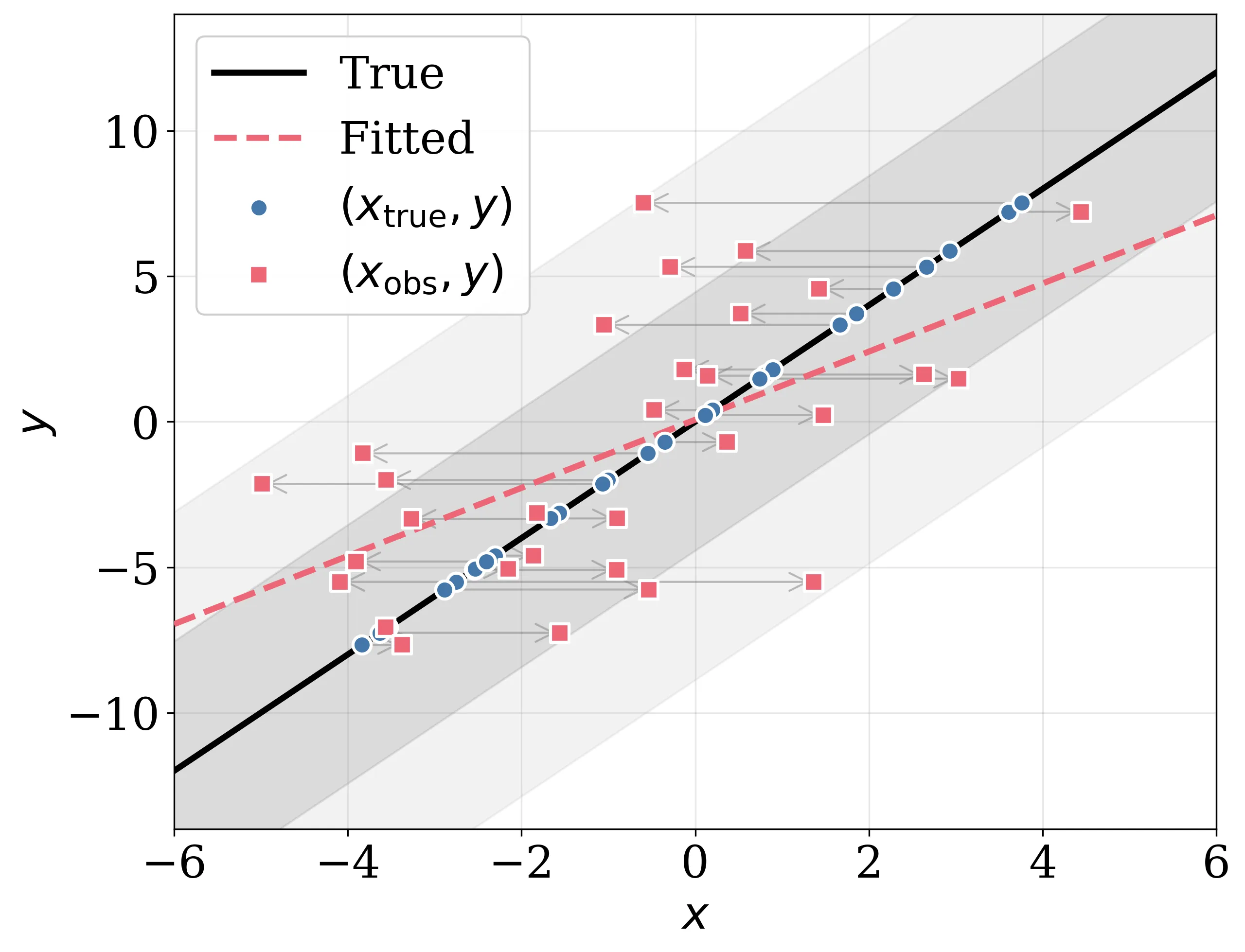

Attenuation bias -- the systematic underestimation of regression coefficients due to measurement errors in input variables -- affects astronomical data-driven models. For linear regression, this problem was solved by treating the true input values as latent variables to be estimated alongside model parameters. In this paper, we show that neural networks suffer from the same attenuation bias and that the latent variable solution generalizes directly to neural networks. We introduce LatentNN, a method that jointly optimizes network parameters and latent input values by maximizing the joint likelihood of observing both inputs and outputs. We demonstrate the correction on one-dimensional regression, multivariate inputs with correlated features, and stellar spectroscopy applications. LatentNN reduces attenuation bias across a range of signal-to-noise ratios where standard neural networks show large bias. This provides a framework for improved neural network inference in the low signal-to-noise regime characteristic of astronomical data. This bias correction is most effective when measurement errors are less than roughly half the intrinsic data range; in the regime of very low signal-to-noise and few informative features, the correction is less effective. Code is available at https://github.com/tingyuansen/LatentNN.
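
The attenuation effect itself is easy to reproduce in the linear case. The sketch below is not LatentNN; it only demonstrates the classical result that ordinary least squares on noisy inputs recovers the true slope multiplied by $\sigma_x^2 / (\sigma_x^2 + \sigma_{\rm err}^2)$. All variable names and numerical values are chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n, true_slope = 100_000, 2.0
sigma_x, sigma_err = 1.0, 1.0          # intrinsic spread and input measurement error

x_true = rng.normal(0.0, sigma_x, n)
y = true_slope * x_true + rng.normal(0.0, 0.1, n)
x_obs = x_true + rng.normal(0.0, sigma_err, n)   # inputs as actually measured

def ols_slope(x, y):
    """Ordinary least-squares slope of y against x."""
    return np.cov(x, y)[0, 1] / np.var(x, ddof=1)

slope_obs = ols_slope(x_obs, y)
attenuation = sigma_x**2 / (sigma_x**2 + sigma_err**2)

print(f"slope fitted on noisy inputs : {slope_obs:.3f}")
print(f"expected attenuated slope    : {true_slope * attenuation:.3f}")
print(f"slope fitted on true inputs  : {ols_slope(x_true, y):.3f}")
```

With equal intrinsic spread and measurement error the fitted slope is about half the true value, however many samples are used, which is why more data alone does not remove the bias.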

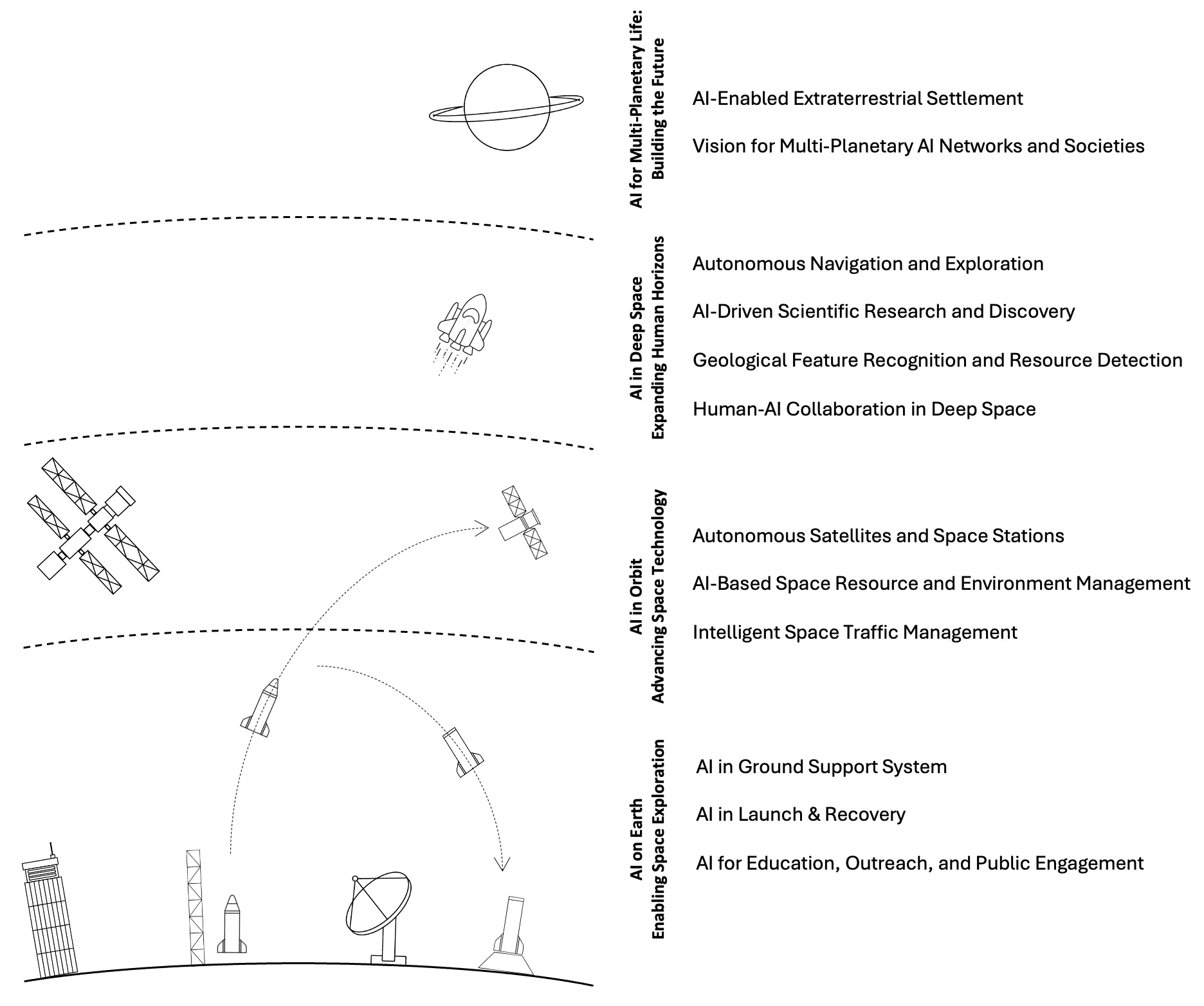

Artificial Intelligence (AI) is transforming domains from healthcare and agriculture to finance and industry. As progress on Earth meets growing constraints, the next frontier is outer space, where AI can enable autonomous, resilient operations under extreme uncertainty and limited human oversight. This paper introduces Space AI as a unified interdisciplinary field at the intersection of artificial intelligence and space science and technology. We consolidate historical developments and contemporary progress, and propose a systematic framework that organises Space AI into four mission contexts: (1) AI on Earth, covering intelligent mission planning, spacecraft design optimisation, simulation, and ground-based data analytics; (2) AI in Orbit, focusing on satellite and station autonomy, space robotics, on-board/near-real-time data processing, communication optimisation, and orbital safety; (3) AI in Deep Space, enabling autonomous navigation, adaptive scientific discovery, resource mapping, and long-duration human-AI collaboration under communication constraints; and (4) AI for Multi-Planetary Life, supporting in-situ resource utilisation, habitat and infrastructure construction, life-support and ecological management, and resilient interplanetary networks. Ultimately, Space AI can accelerate humanity's capability to explore and operate in space, while translating advances in sensing, robotics, optimisation, and trustworthy AI into broad societal impact on Earth.