1,715 papers

Computational Hardness of Private Coreset

We study the problem of differentially private (DP) computation of a coreset for the $k$-means objective. For a given input set of points, a coreset is another set of points such that the $k$-means objective for any candidate solution is preserved up to a multiplicative $(1 \pm α)$ factor (and some additive factor). We prove the first computational lower bounds for this problem. Specifically, assuming the existence of one-way functions, we show that no polynomial-time $(ε, 1/n^{ω(1)})$-DP algorithm can compute a coreset for $k$-means in the $\ell_\infty$-metric for some constant $α > 0$ (and some constant additive factor), even for $k=3$. For $k$-means in the Euclidean metric, we show a similar result but only for $α = Θ\left(1/d^2\right)$, where $d$ is the dimension.

2602.17488 · Feb 2026
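The coreset condition described in the abstract above can be made concrete with a small check. This is a minimal sketch, not the paper's construction: it assumes a weighted coreset and the squared Euclidean distance, and the names `kmeans_cost` and `preserves_cost` are hypothetical. It tests the guarantee for one candidate set of centers, whereas the definition requires it for every candidate solution.

```python
def kmeans_cost(points, weights, centers):
    """Weighted k-means cost: each point pays its weight times the
    squared Euclidean distance to its nearest center (an assumption;
    the abstract also considers the l_infinity metric)."""
    total = 0.0
    for p, w in zip(points, weights):
        nearest = min(sum((a - b) ** 2 for a, b in zip(p, c)) for c in centers)
        total += w * nearest
    return total


def preserves_cost(data, coreset, coreset_weights, centers, alpha, additive):
    """Check the (1 +/- alpha) multiplicative guarantee, with an additive
    slack, for ONE candidate solution `centers`."""
    full = kmeans_cost(data, [1.0] * len(data), centers)
    approx = kmeans_cost(coreset, coreset_weights, centers)
    lower = (1 - alpha) * full - additive
    upper = (1 + alpha) * full + additive
    return lower <= approx <= upper
```

For example, collapsing duplicate points into one weighted point preserves the cost exactly, while dropping a cluster does not.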

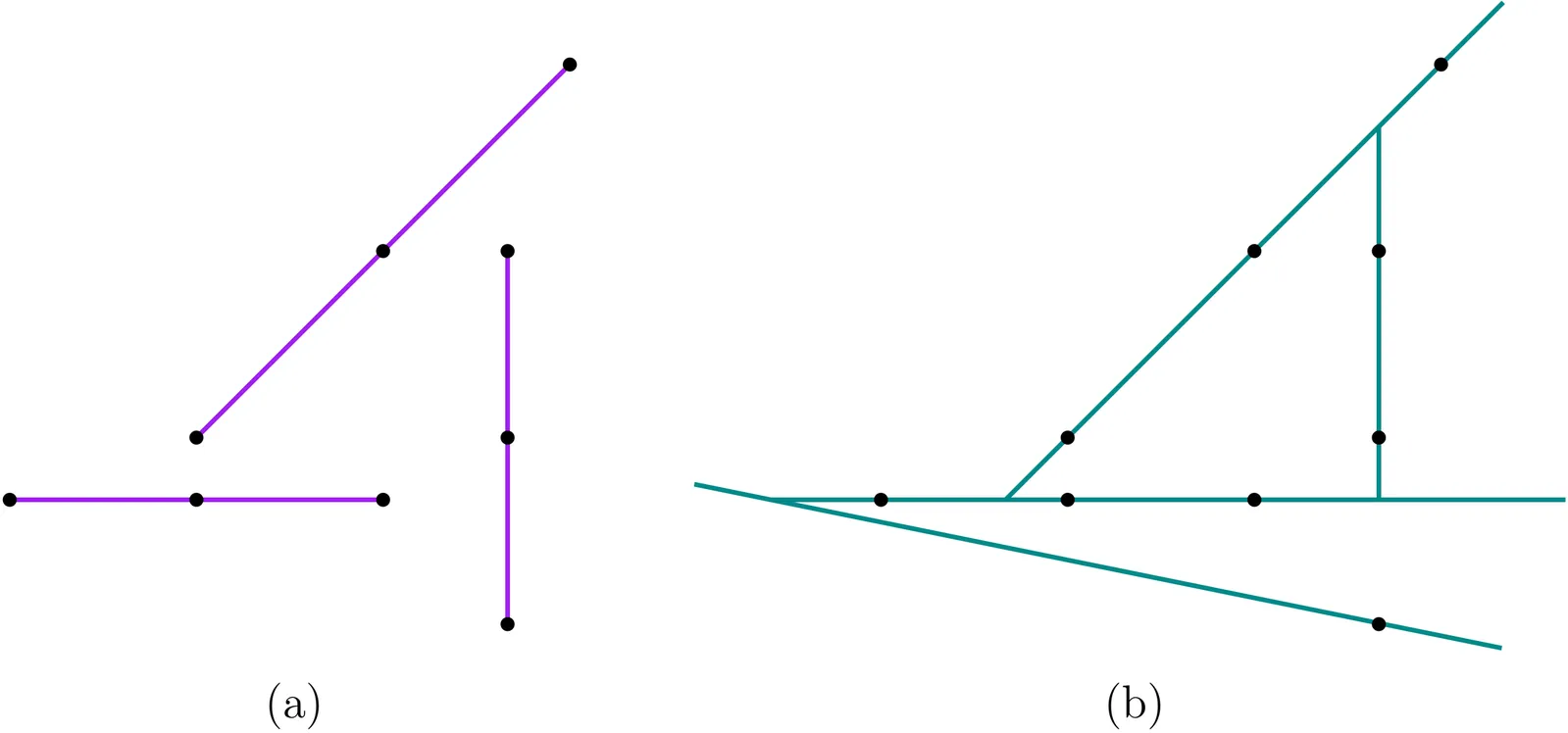

On the complexity of covering points by disjoint segments and by guillotine cuts

We show that two geometric cover problems in the plane, related to covering points with disjoint line segments, are NP-complete. Given $n$ points in the plane and a value $k$, the first problem asks if all points can be covered by $k$ disjoint line segments; the second problem treats the analogous question for $k$ guillotine cuts.

2602.17294 · Feb 2026

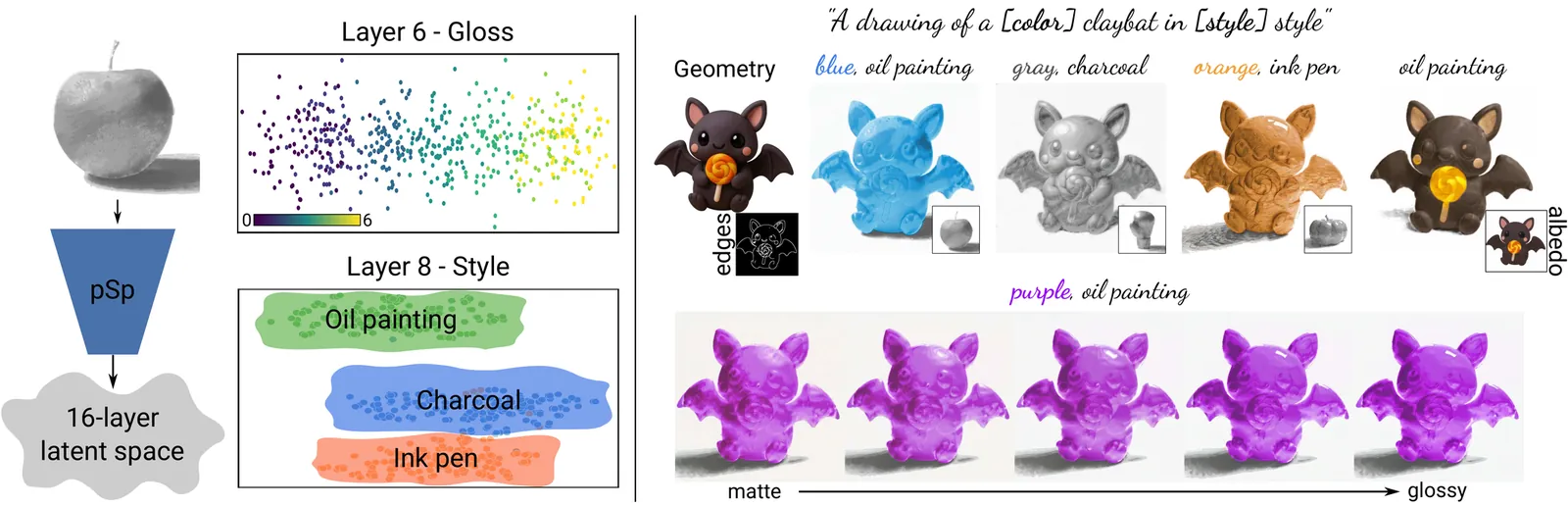

Style-Aware Gloss Control for Generative Non-Photorealistic Rendering

Humans can infer material characteristics of objects from their visual appearance, and this ability extends to artistic depictions, where similar perceptual strategies guide the interpretation of paintings or drawings. Among the factors that define material appearance, gloss, along with color, is widely regarded as one of the most important, and recent studies indicate that humans can perceive gloss independently of the artistic style used to depict an object. To investigate how gloss and artistic style are represented in learned models, we train an unsupervised generative model on a newly curated dataset of painterly objects designed to systematically vary such factors. Our analysis reveals a hierarchical latent space in which gloss is disentangled from other appearance factors, allowing for a detailed study of how gloss is represented and varies across artistic styles. Building on this representation, we introduce a lightweight adapter that connects our style- and gloss-aware latent space to a latent-diffusion model, enabling the synthesis of non-photorealistic images with fine-grained control of these factors. We compare our approach with previous models and observe improved disentanglement and controllability of the learned factors.

2602.16611 · Feb 2026

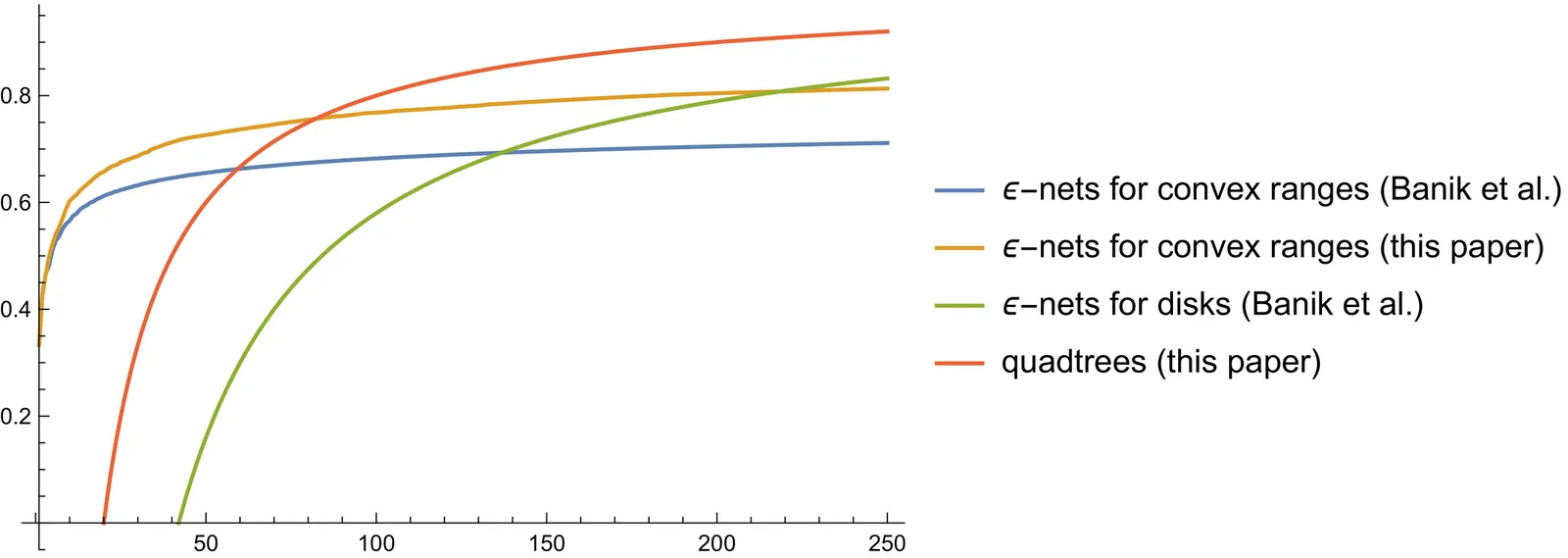

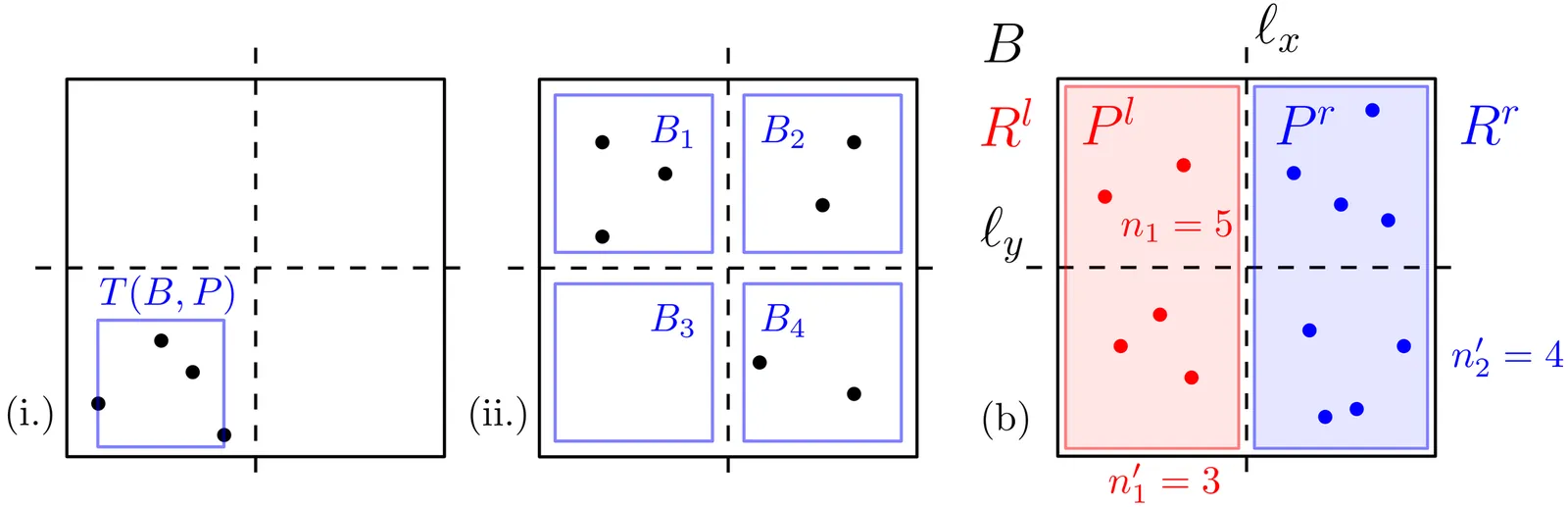

Improved Bounds for Discrete Voronoi Games

In the planar one-round discrete Voronoi game, two players $\mathcal{P}$ and $\mathcal{Q}$ compete over a set $V$ of $n$ voters represented by points in $\mathbb{R}^2$. First, $\mathcal{P}$ places a set $P$ of $k$ points, then $\mathcal{Q}$ places a set $Q$ of $\ell$ points, and then each voter $v\in V$ is won by the player who has placed a point closest to $v$. It is well known that if $k=\ell=1$, then $\mathcal{P}$ can always win $n/3$ voters and that this is worst-case optimal. We study the setting where $k>1$ and $\ell=1$. We present lower bounds on the number of voters that $\mathcal{P}$ can always win, which improve the existing bounds for all $k\geq 4$. As a by-product, we obtain improved bounds on small $\varepsilon$-nets for convex ranges. These results are for the $L_2$ metric. We also obtain lower bounds on the number of voters that $\mathcal{P}$ can always win when distances are measured in the $L_1$ metric.

2602.16518 · Feb 2026
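The scoring rule of the one-round discrete Voronoi game described above can be sketched in a few lines. This is only an illustration under stated assumptions: distances are Euclidean ($L_2$), the function name `voters_won_by_p` is hypothetical, and ties are awarded to $\mathcal{P}$, which the abstract does not specify.

```python
import math


def voters_won_by_p(voters, P, Q):
    """Count the voters whose nearest placed point belongs to player P.

    voters, P, Q are lists of (x, y) tuples. Ties go to P (an assumption).
    """
    won = 0
    for v in voters:
        d_p = min(math.dist(v, p) for p in P)
        d_q = min(math.dist(v, q) for q in Q)
        if d_p <= d_q:
            won += 1
    return won
```

A lower bound of the kind studied in the paper states how large this count can be guaranteed to be, for the best choice of `P`, against every response `Q` of size $\ell$.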

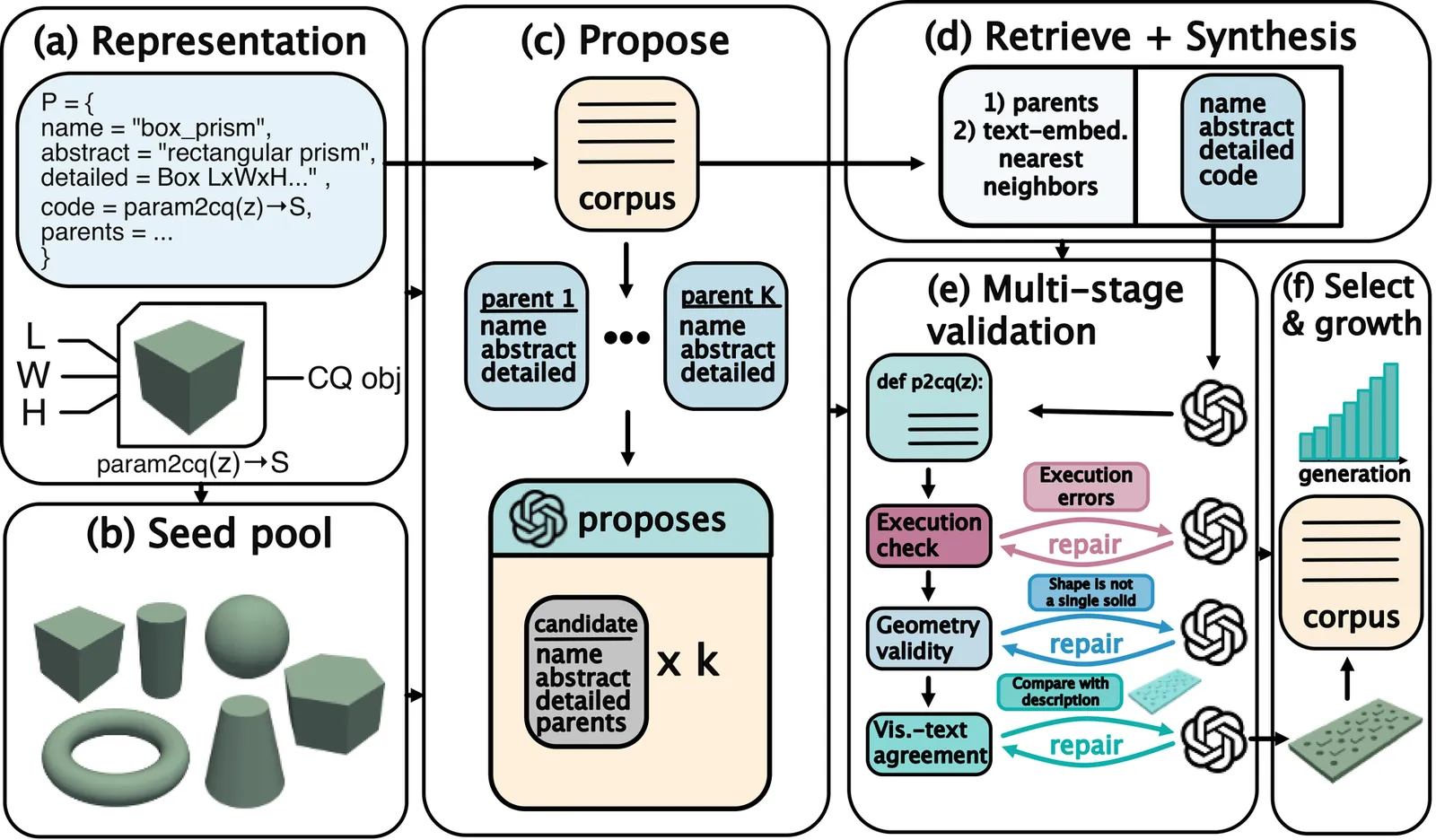

CADEvolve: Creating Realistic CAD via Program Evolution

Computer-Aided Design (CAD) delivers rapid, editable modeling for engineering and manufacturing. Recent AI progress now makes full automation feasible for various CAD tasks. However, progress is bottlenecked by data: public corpora mostly contain sketch-extrude sequences, lack complex operations, multi-operation composition and design intent, and thus hinder effective fine-tuning. Attempts to bypass this with frozen VLMs often yield simple or invalid programs due to limited 3D grounding in current foundation models. We present CADEvolve, an evolution-based pipeline and dataset that starts from simple primitives and, via VLM-guided edits and validations, incrementally grows CAD programs toward industrial-grade complexity. The result is 8k complex parts expressed as executable CadQuery parametric generators. After multi-stage post-processing and augmentation, we obtain a unified dataset of 1.3m scripts paired with rendered geometry and exercising the full CadQuery operation set. A VLM fine-tuned on CADEvolve achieves state-of-the-art results on the Image2CAD task across the DeepCAD, Fusion 360, and MCB benchmarks.

2602.16317 · Feb 2026

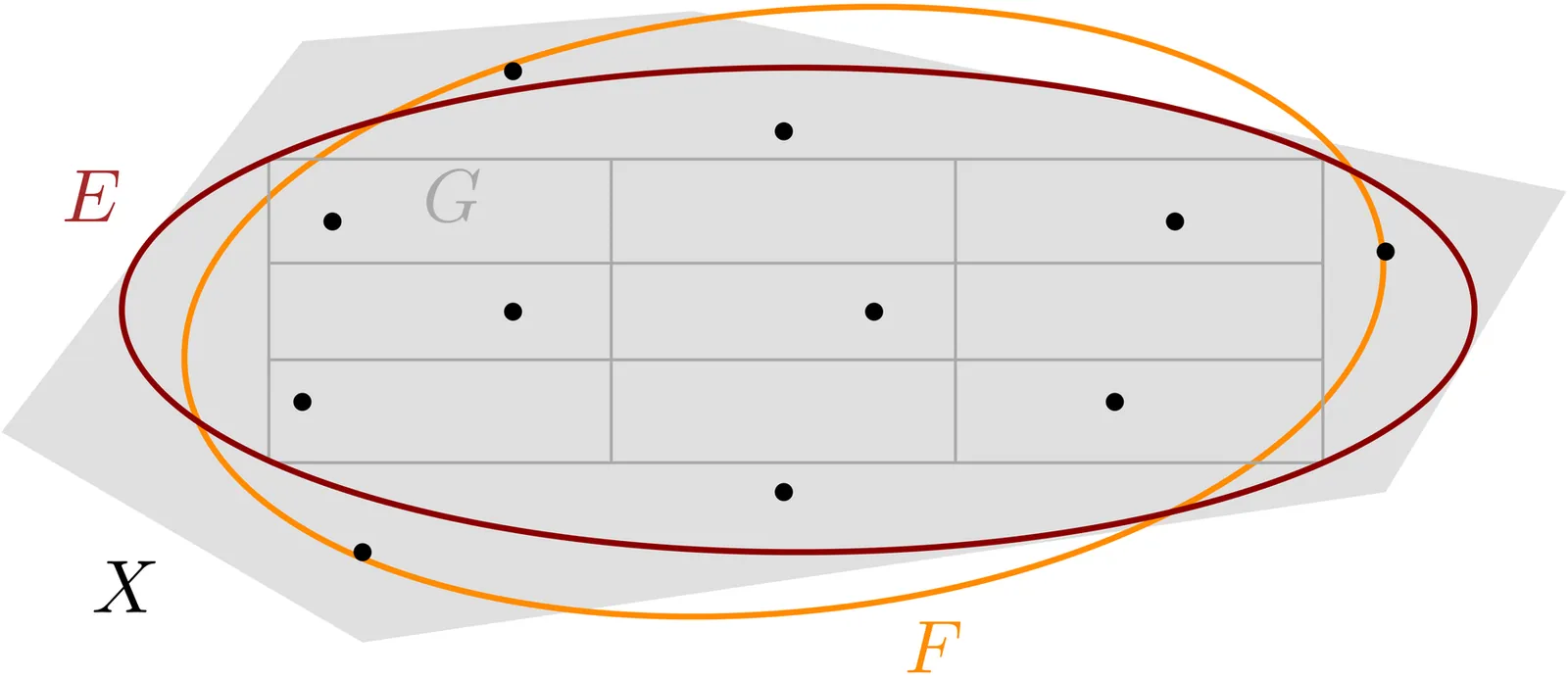

Dynamic and Streaming Algorithms for Union Volume Estimation

The union volume estimation problem asks to $(1\pm\varepsilon)$-approximate the volume of the union of $n$ given objects $X_1,\ldots,X_n \subset \mathbb{R}^d$. In their seminal work in 1989, Karp, Luby, and Madras solved this problem in time $O(n/\varepsilon^2)$ in an oracle model where each object $X_i$ can be accessed via three types of queries: obtain the volume of $X_i$, sample a random point from $X_i$, and test whether $X_i$ contains a given point $x$. This running time was recently shown to be optimal [Bringmann, Larsen, Nusser, Rotenberg, and Wang, SoCG'25]. In another line of work, Meel, Vinodchandran, and Chakraborty [PODS'21] designed algorithms that read the objects in one pass using polylogarithmic time per object and polylogarithmic space; this can be phrased as a dynamic algorithm supporting insertions of objects for union volume estimation in the oracle model. In this paper, we study algorithms for union volume estimation in the oracle model that support both insertions and deletions of objects. We obtain the following results:

- an algorithm supporting insertions and deletions in polylogarithmic update and query time and linear space (this is the first such dynamic algorithm, even for 2D triangles);
- an algorithm supporting insertions and suffix queries (which generalizes the sliding window setting) in polylogarithmic update and query time and space;
- an algorithm supporting insertions and deletions of convex bodies of constant dimension in polylogarithmic update and query time and space.

2602.16306 · Feb 2026
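The oracle model in the abstract above (volume, sample, and containment queries) can be illustrated with a basic static Monte Carlo estimator. This is a simplified sketch in the spirit of the Karp, Luby, and Madras approach, not their $O(n/\varepsilon^2)$ algorithm and not the dynamic algorithms of the paper: it spends $O(n)$ containment queries per sample. The `Box` class and the function name `estimate_union_volume` are hypothetical.

```python
import random


class Box:
    """Axis-aligned box exposing the three oracle queries."""

    def __init__(self, lo, hi):
        self.lo, self.hi = tuple(lo), tuple(hi)

    def volume(self):
        v = 1.0
        for a, b in zip(self.lo, self.hi):
            v *= b - a
        return v

    def sample(self, rng):
        return tuple(rng.uniform(a, b) for a, b in zip(self.lo, self.hi))

    def contains(self, x):
        return all(a <= xi <= b for a, b, xi in zip(self.lo, self.hi, x))


def estimate_union_volume(objects, num_samples, rng):
    """Pick an object with probability proportional to its volume, sample a
    point x from it, and average 1 / (number of objects containing x).
    The average times the total volume is an unbiased estimate of the
    volume of the union."""
    volumes = [obj.volume() for obj in objects]
    total = sum(volumes)
    acc = 0.0
    for _ in range(num_samples):
        i = rng.choices(range(len(objects)), weights=volumes)[0]
        x = objects[i].sample(rng)
        cover = sum(1 for obj in objects if obj.contains(x))  # always >= 1
        acc += 1.0 / cover
    return total * acc / num_samples
```

For two unit squares overlapping in a strip of width 0.5, the union has area 1.5, and `estimate_union_volume([Box((0, 0), (1, 1)), Box((0.5, 0), (1.5, 1))], 20000, random.Random(0))` should land close to that value.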

Real-time Rendering with a Neural Irradiance Volume

Rendering diffuse global illumination in real-time is often approximated by pre-computing and storing irradiance in a 3D grid of probes. As long as most of the scene remains static, probes approximate irradiance for all surfaces immersed in the irradiance volume, including novel dynamic objects. This approach, however, suffers from aliasing artifacts and high memory consumption. We propose Neural Irradiance Volume (NIV), a neural-based technique that allows accurate real-time rendering of diffuse global illumination via a compact pre-computed model, overcoming the limitations of traditional probe-based methods, such as the expensive memory footprint, aliasing artifacts, and scene-specific heuristics. The key insight is that neural compression creates an adaptive and amortized representation of irradiance, circumventing the cubic scaling of grid-based methods. Our superior memory-scaling improves quality by at least 10x at the same memory budget, and enables a straightforward representation of higher-dimensional irradiance fields, allowing rendering of time-varying or dynamic effects without requiring additional computation at runtime. Unlike other neural rendering techniques, our method works within strict real-time constraints, providing fast inference (around 1 ms per frame on consumer GPUs at full HD resolution), reduced memory usage (1-5 MB for medium-sized scenes), and only requires a G-buffer as input, without expensive ray tracing or denoising.

2602.12949 · Feb 2026

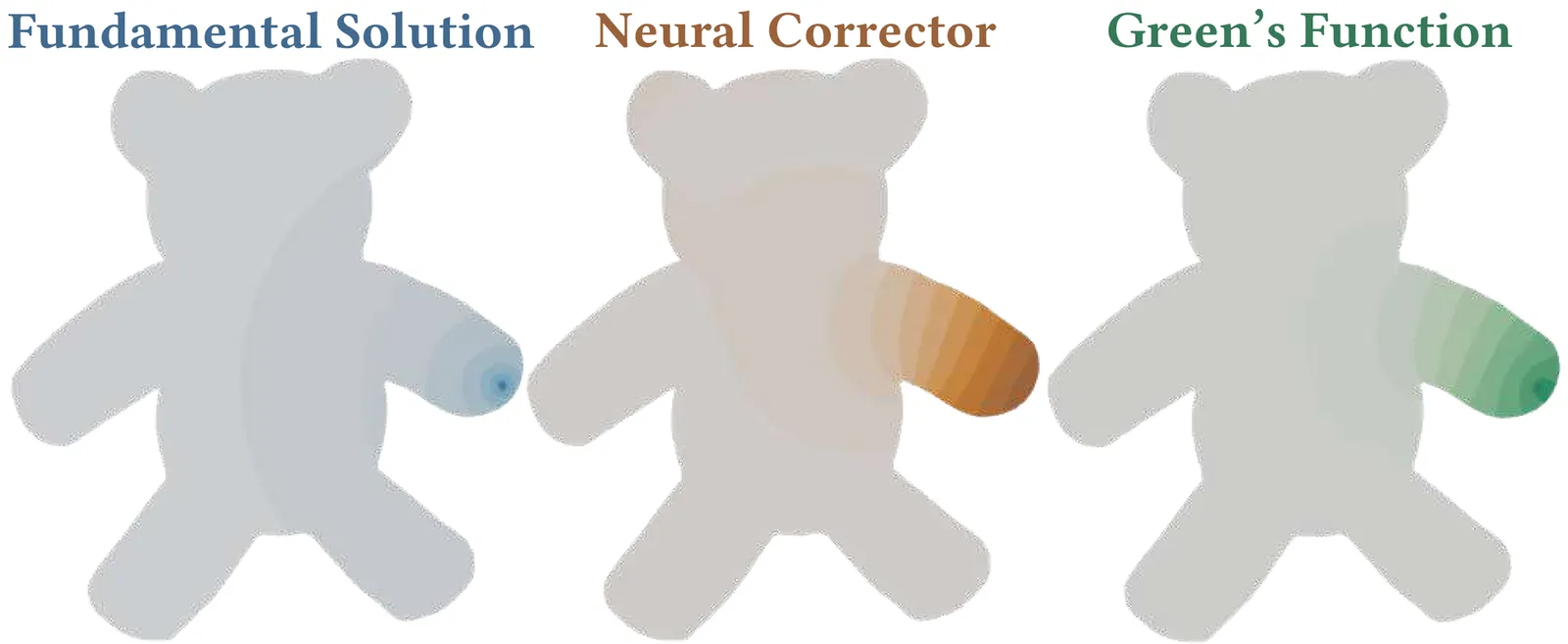

Variational Green's Functions for Volumetric PDEs

Green's functions characterize the fundamental solutions of partial differential equations; they are essential for tasks ranging from shape analysis to physical simulation, yet they remain computationally prohibitive to evaluate on arbitrary geometric discretizations. We present Variational Green's Function (VGF), a method that learns a smooth, differentiable representation of the Green's function for linear self-adjoint PDE operators, including the Poisson, the screened Poisson, and the biharmonic equations. To resolve the sharp singularities characteristic of the Green's functions, our method decomposes the Green's function into an analytic free-space component, and a learned corrector component. Our method leverages a variational foundation to impose Neumann boundary conditions naturally, and imposes Dirichlet boundary conditions via a projective layer on the output of the neural field. The resulting Green's functions are fast to evaluate, differentiable with respect to source application, and can be conditioned on other signals parameterizing our geometry.

2602.12349 · Feb 2026

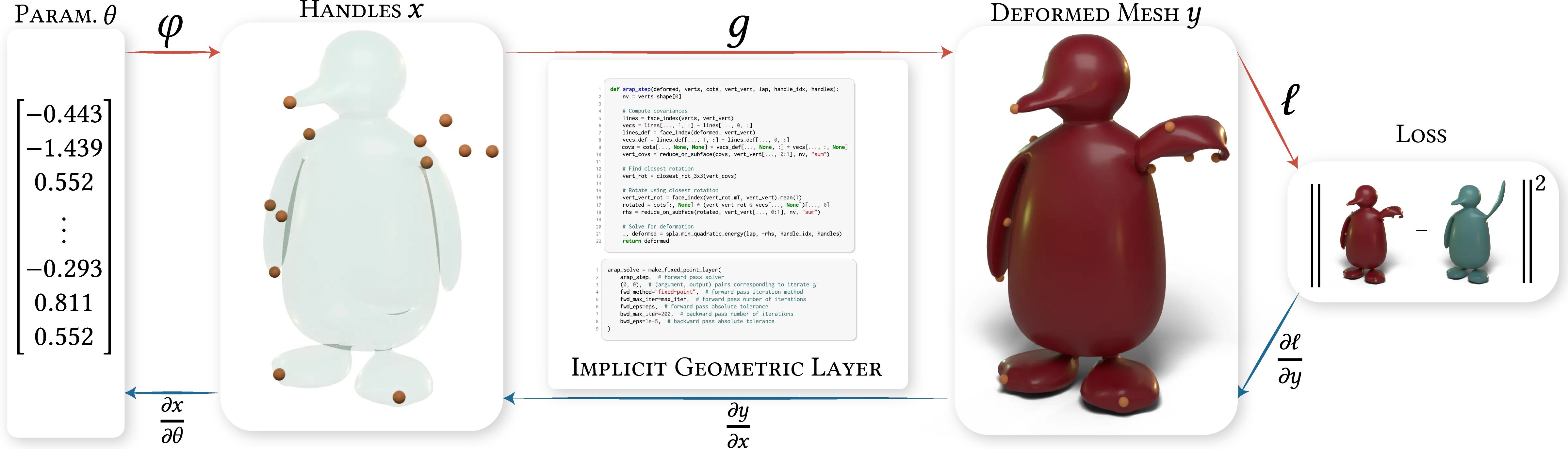

Iskra: A System for Inverse Geometry Processing

We propose a system for differentiating through solutions to geometry processing problems. Our system differentiates a broad class of geometric algorithms, exploiting existing fast problem-specific schemes common to geometry processing, including local-global and ADMM solvers. It is compatible with machine learning frameworks, opening doors to new classes of inverse geometry processing applications. We marry the scatter-gather approach to mesh processing with tensor-based workflows and rely on the adjoint method applied to user-specified imperative code to generate an efficient backward pass behind the scenes. We demonstrate our approach by differentiating through mean curvature flow, spectral conformal parameterization, geodesic distance computation, and as-rigid-as-possible deformation, examining usability and performance on these applications. Our system allows practitioners to differentiate through existing geometry processing algorithms without needing to reformulate them, resulting in low implementation effort, fast runtimes, and lower memory requirements than differentiable optimization tools not tailored to geometry processing.

2602.12105 · Feb 2026

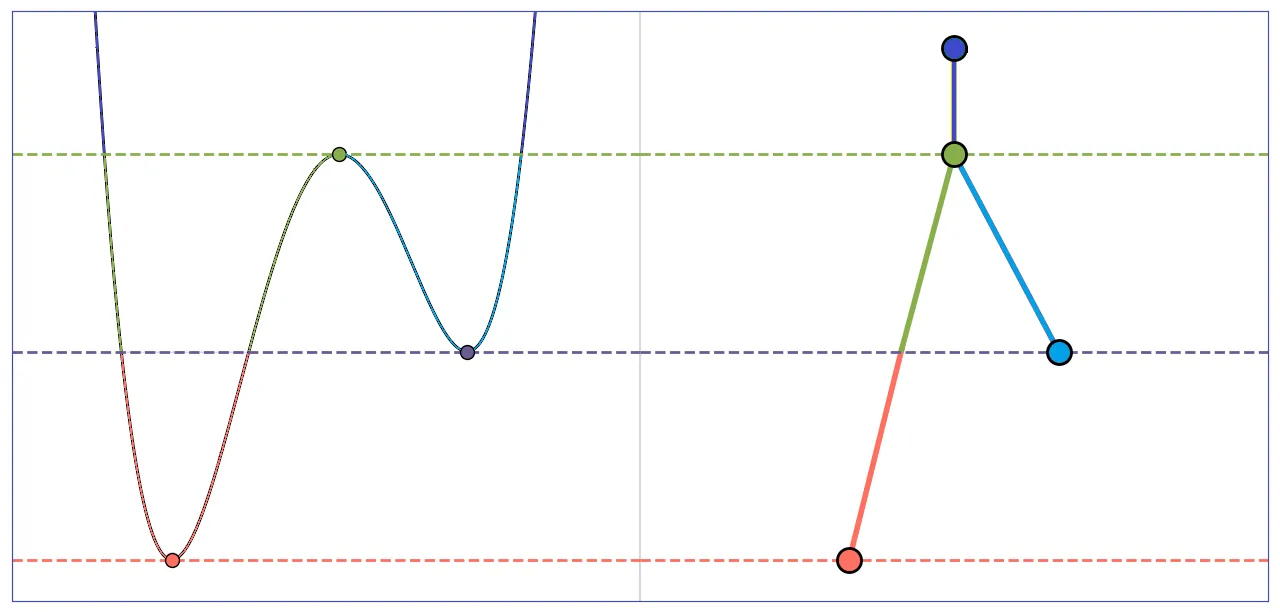

An Improved FPT Algorithm for Computing the Interleaving Distance between Merge Trees via Path-Preserving Maps

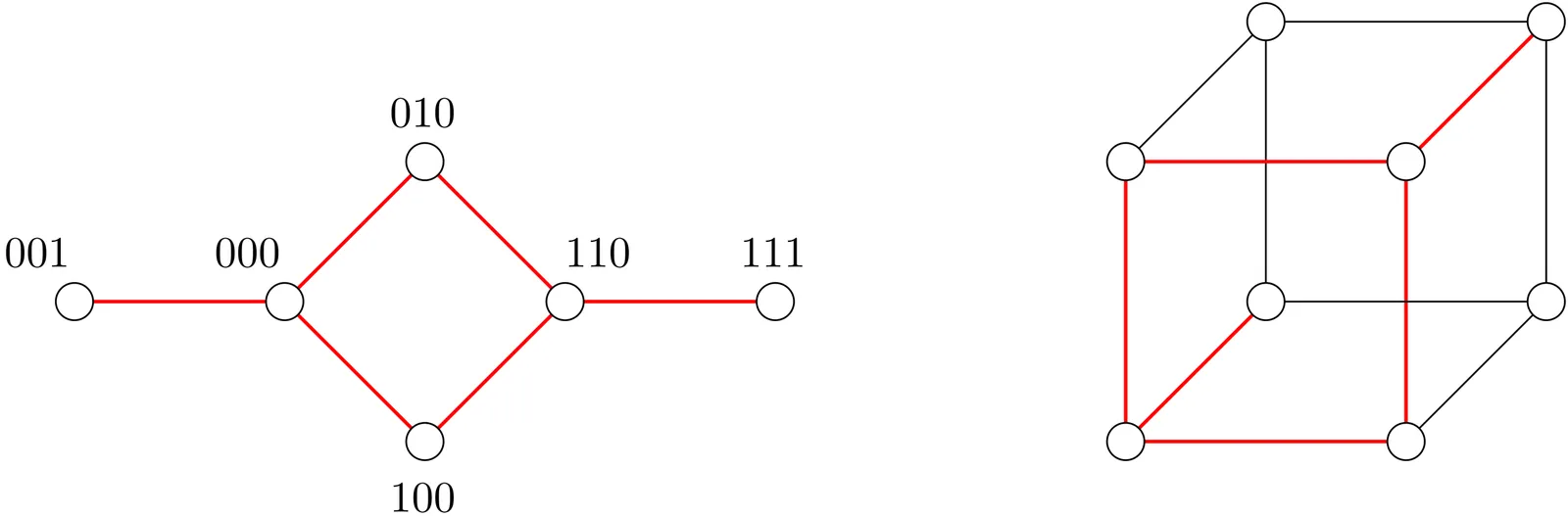

A merge tree is a fundamental topological structure used to capture the sub-level set (and similarly, super-level set) topology in scalar data analysis. The interleaving distance is a theoretically sound, stable metric for comparing merge trees. However, computing this distance exactly is NP-hard. The first fixed-parameter tractable (FPT) algorithm for its exact computation introduces the concept of an $\varepsilon$-good map between two merge trees, where $\varepsilon$ is a candidate value for the interleaving distance. The complexity of that algorithm is $O(2^{2τ}(2τ)^{2τ+2}\cdot n^2\log^3n)$, where $τ$ is the degree-bound parameter and $n$ is the total number of nodes in both merge trees. The algorithm is exponential in $τ$, which grows as $\varepsilon$ increases. In the current paper, we propose an improved FPT algorithm for computing the $\varepsilon$-good map between two merge trees. Our algorithm introduces two new parameters, $η_f$ and $η_g$, corresponding to the numbers of leaf nodes in the merge trees $M_f$ and $M_g$, respectively. This parametrization is motivated by the observation that a merge tree can be decomposed into a collection of unique leaf-to-root paths. The proposed algorithm achieves a complexity of $O\!\left(n^2\log n+η_g^{η_f}(η_f+η_g)\, n \log n \right)$. To obtain this reduced complexity, we assume that the number of possible $\varepsilon$-good maps from $M_f$ to $M_g$ does not exceed that from $M_g$ to $M_f$. Notably, the parameters $η_f$ and $η_g$ are independent of the choice of $\varepsilon$. Compared to the earlier algorithm, our approach substantially reduces the search space for computing an optimal $\varepsilon$-good map. We also provide a formal proof of correctness for the proposed algorithm.

2602.12028 · Feb 2026

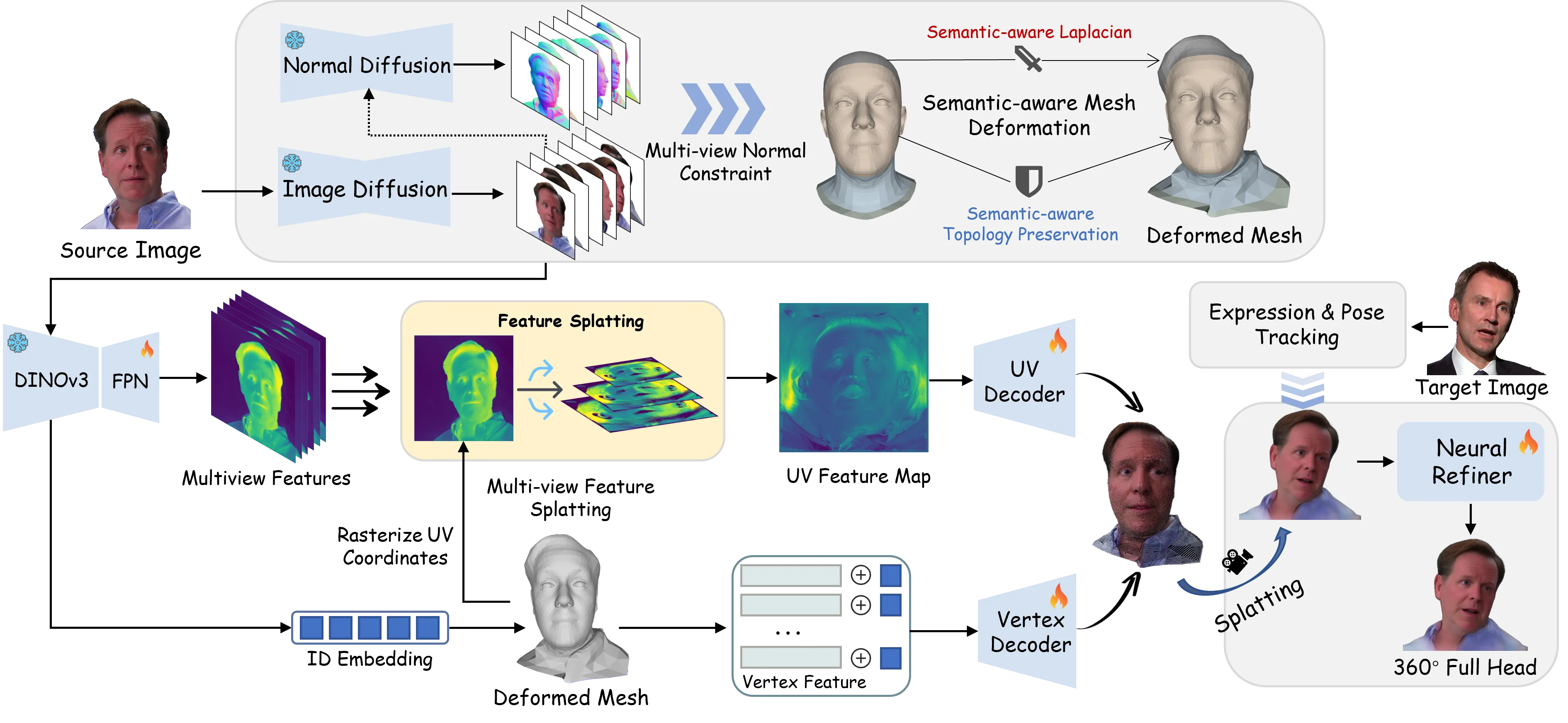

OMEGA-Avatar: One-shot Modeling of 360° Gaussian Avatars

Creating high-fidelity, animatable 3D avatars from a single image remains a formidable challenge. We identify three desirable attributes of avatar generation: the method should 1) be feed-forward, 2) model a full 360° head, and 3) be animation-ready. However, current work addresses only two of these three points at once. To address these limitations, we propose OMEGA-Avatar, the first feed-forward framework that simultaneously generates a generalizable, 360°-complete, and animatable 3D Gaussian head from a single image. Starting from a feed-forward and animatable framework, we address the 360° full-head avatar generation problem with two novel components. First, to overcome poor hair modeling in full-head avatar generation, we introduce a semantic-aware mesh deformation module that integrates multi-view normals to optimize a FLAME head with hair while preserving its topology. Second, to enable effective feed-forward decoding of full-head features, we propose a multi-view feature splatting module that constructs a shared canonical UV representation from features across multiple views through differentiable bilinear splatting, hierarchical UV mapping, and visibility-aware fusion. This approach preserves both global structural coherence and local high-frequency details across all viewpoints, ensuring 360° consistency without per-instance optimization. Extensive experiments demonstrate that OMEGA-Avatar achieves state-of-the-art performance, significantly outperforming existing baselines in 360° full-head completeness while robustly preserving identity across different viewpoints.

2602.11693 · Feb 2026

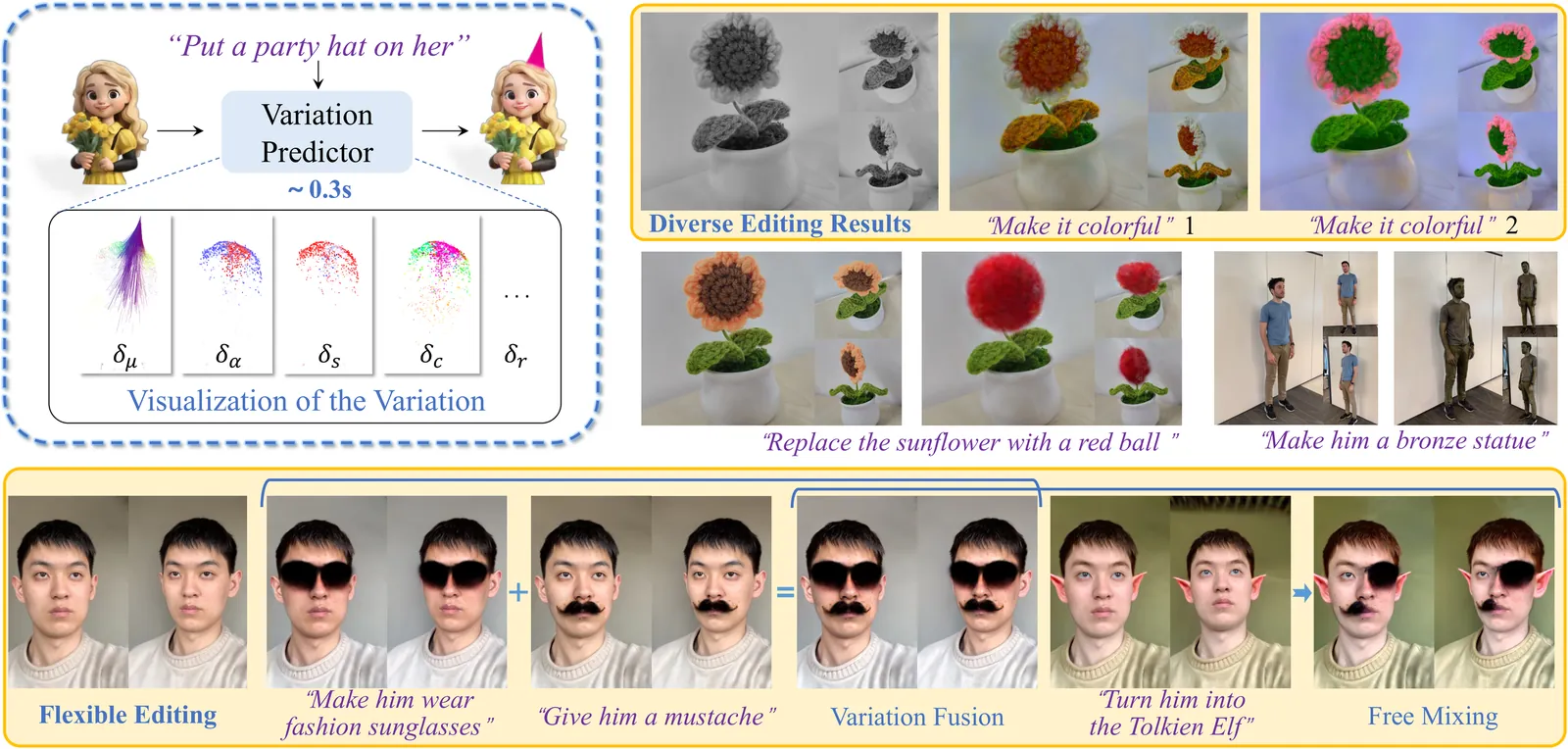

Variation-aware Flexible 3D Gaussian Editing

Indirect editing methods for 3D Gaussian Splatting (3DGS) have recently witnessed significant advancements. These approaches operate by first applying edits in the rendered 2D space and subsequently projecting the modifications back into 3D. However, this paradigm inevitably introduces cross-view inconsistencies and constrains both the flexibility and efficiency of the editing process. To address these challenges, we present VF-Editor, which enables native editing of Gaussian primitives by predicting attribute variations in a feedforward manner. To accurately and efficiently estimate these variations, we design a novel variation predictor distilled from 2D editing knowledge. The predictor encodes the input to generate a variation field and employs two learnable, parallel decoding functions to iteratively infer attribute changes for each 3D Gaussian. Thanks to its unified design, VF-Editor can seamlessly distill editing knowledge from diverse 2D editors and strategies into a single predictor, allowing for flexible and effective knowledge transfer into the 3D domain. Extensive experiments on both public and private datasets reveal the inherent limitations of indirect editing pipelines and validate the effectiveness and flexibility of our approach.

2602.11638 · Feb 2026

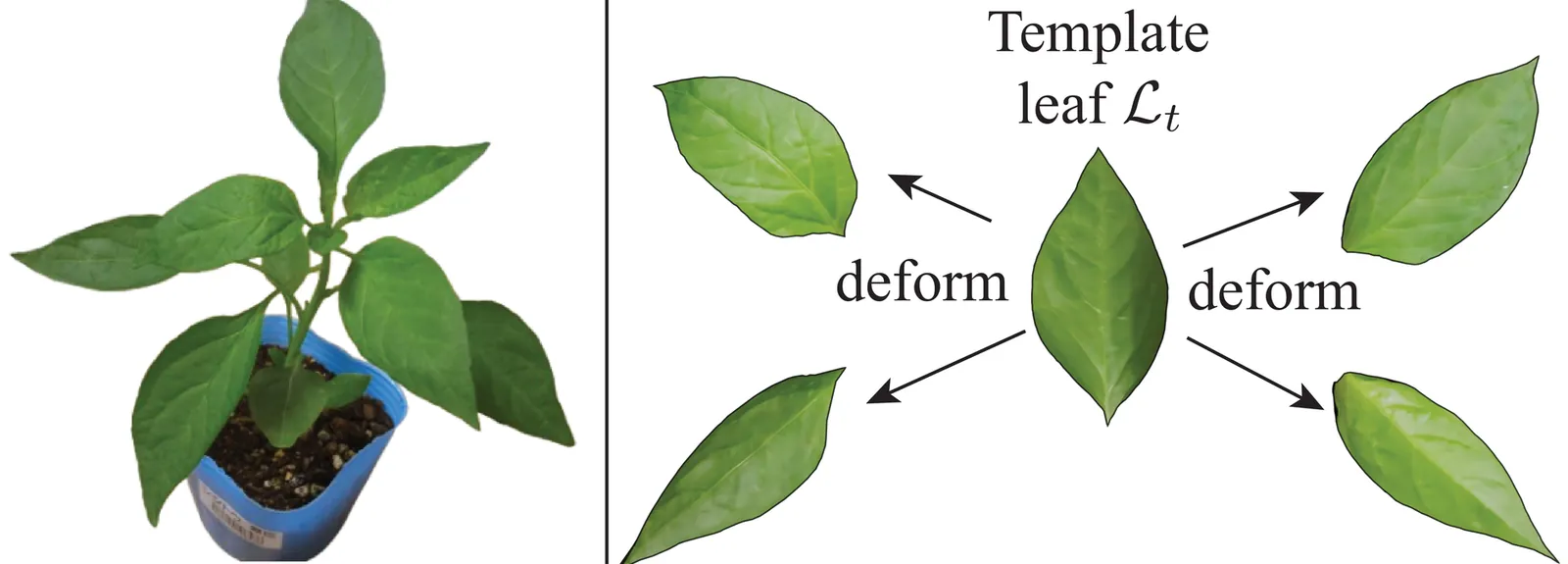

LeafFit: Plant Assets Creation from 3D Gaussian Splatting

We propose LeafFit, a pipeline that converts 3D Gaussian Splatting (3DGS) of individual plants into editable, instanced mesh assets. While 3DGS faithfully captures complex foliage, its high memory footprint and lack of mesh topology make it incompatible with traditional game production workflows. We address this by leveraging the repetition of leaf shapes; our method segments leaves from the unstructured 3DGS, with optional user interaction included as a fallback. A representative leaf group is selected and converted into a thin, sharp mesh to serve as a template; this template is then fitted to all other leaves via differentiable Moving Least Squares (MLS) deformation. At runtime, the deformation is evaluated efficiently on-the-fly using a vertex shader to minimize storage requirements. Experiments demonstrate that LeafFit achieves higher segmentation quality and deformation accuracy than recent baselines while significantly reducing data size and enabling parameter-level editing.

2602.11577 · Feb 2026

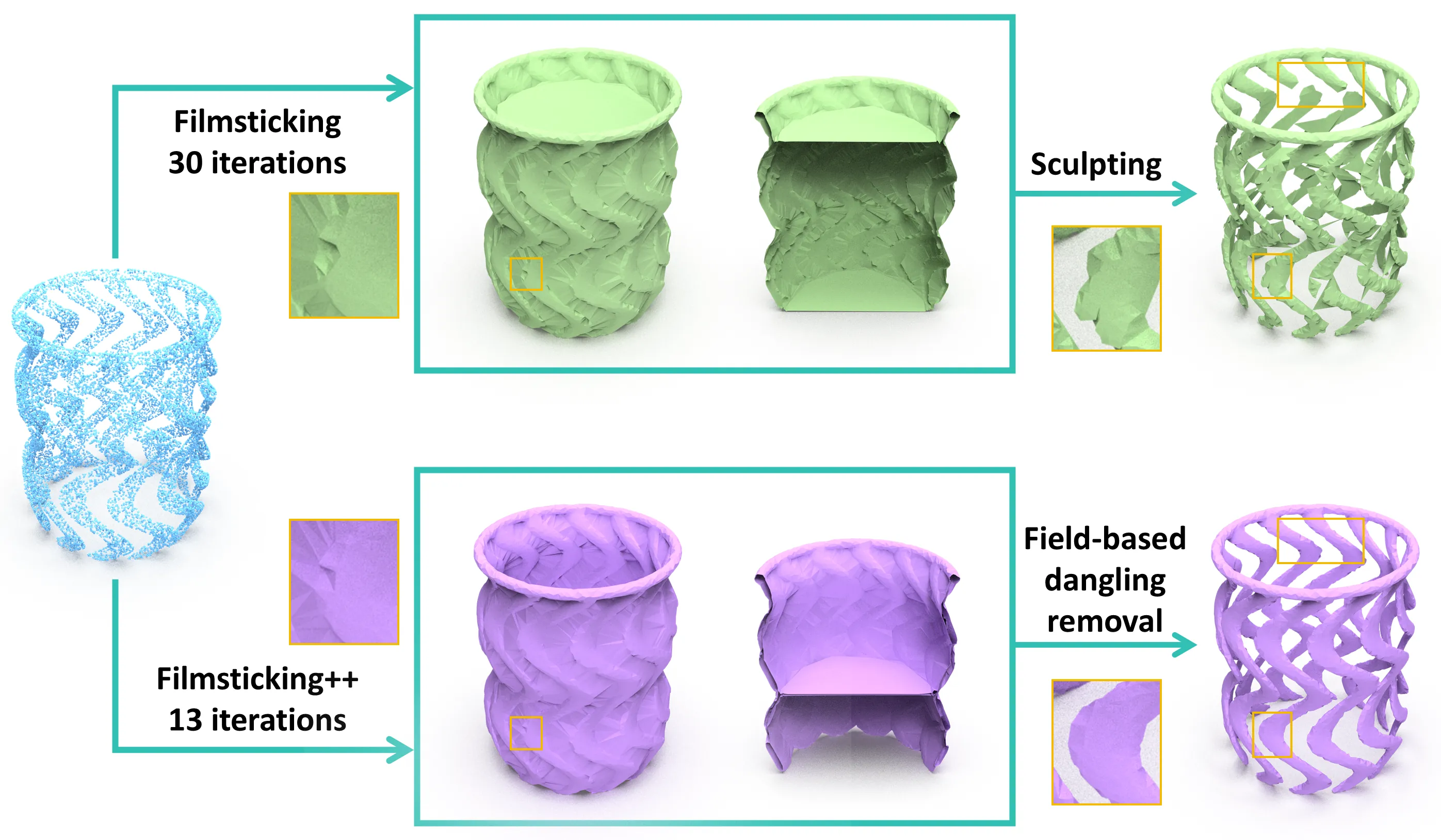

Filmsticking++: Rapid Film Sticking for Explicit Surface Reconstruction

Explicit surface reconstruction aims to generate a surface mesh that exactly interpolates a given point cloud. This requirement is crucial when the point cloud must lie non-negotiably on the final surface to preserve sharp features and fine geometric details. However, the task becomes substantially more challenging with low-quality point clouds, due to inherent reconstruction ambiguities compounded by combinatorial complexity. A previous method addresses these issues with a filmsticking technique that iteratively computes a restricted Voronoi diagram (RVD); it guarantees a watertight manifold and set a new benchmark as the state-of-the-art (SOTA) technique. Unfortunately, RVD-based filmsticking is unable to interpolate all points in the case of deep internal cavities, which is very likely to produce faulty topology. The cause of this issue is that RVD-based filmsticking is inherently limited by its Euclidean distance metric. In this paper, we extend the filmsticking technique and name the result Filmsticking++. Filmsticking++ reconstructs an explicit surface from points without normals. On one hand, Filmsticking++ breaks through the inherent limitations of Euclidean distance by employing a weighted-distance-based Restricted Power Diagram, which guarantees that all points are interpolated. On the other hand, we observe that as the guiding surface increasingly approximates the target shape, the external medial axis is gradually expelled outside the guiding surface. Building on this observation, we propose placing virtual sites inside the guiding surface to accelerate the expulsion of the external medial axis from its interior. To summarize, compared with the SOTA method, Filmsticking++ demonstrates multiple benefits, including decreased computational cost and improved robustness and scalability.

2602.11433 · Feb 2026

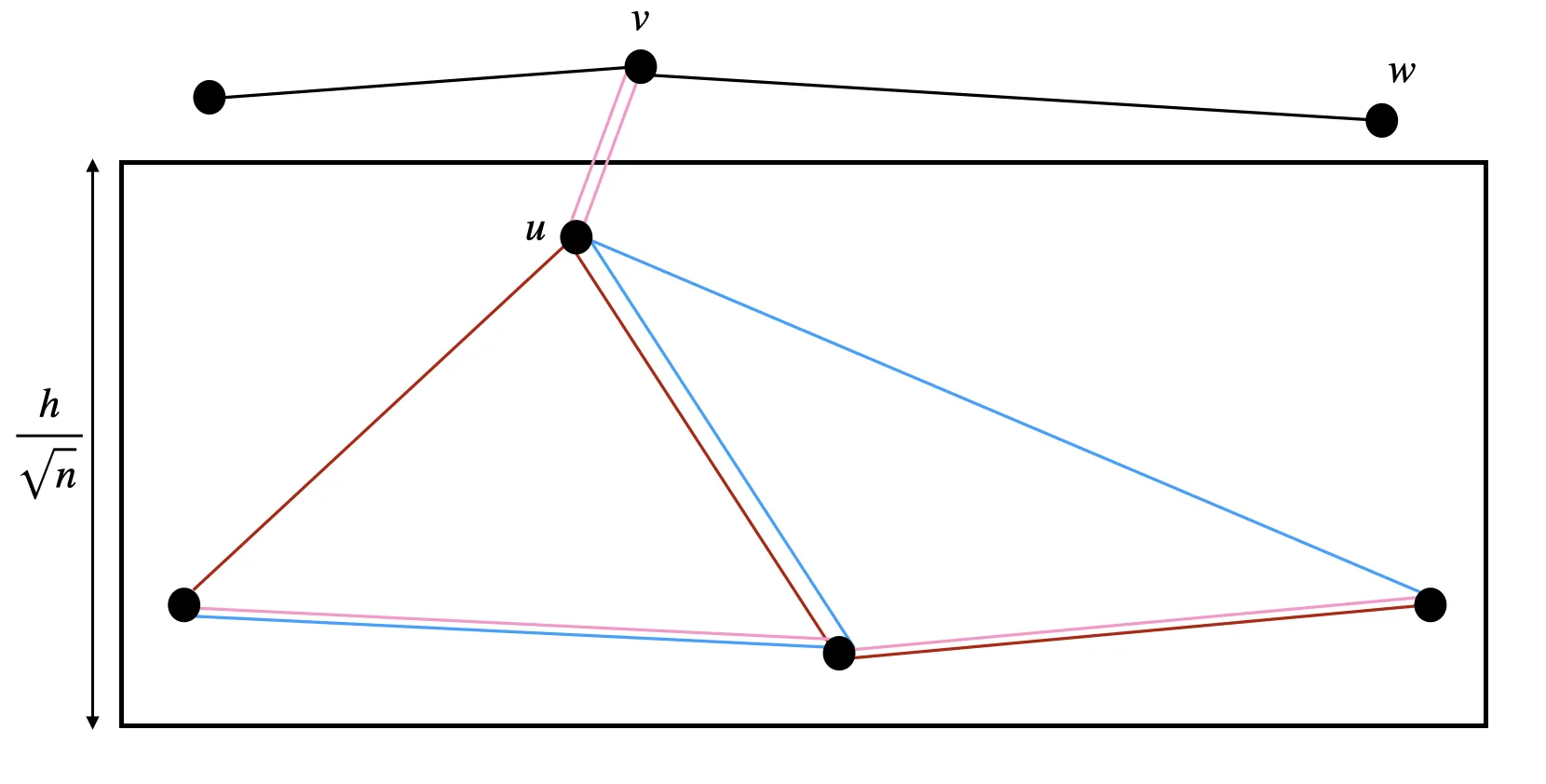

An Improved Upper Bound for the Euclidean TSP Constant Using Band Crossovers

Consider $n$ points generated uniformly at random in the unit square, and let $L_n$ be the length of their optimal traveling salesman tour. Beardwood, Halton, and Hammersley (1959) showed $L_n / \sqrt n \to β$ almost surely as $n\to \infty$ for some constant $β$. The exact value of $β$ is unknown but estimated to be approximately $0.71$ (Applegate, Bixby, Chvátal, Cook 2011). Beardwood et al. further showed that $0.625 \leq β \leq 0.92116$. Currently, the best known bounds are $0.6277 \leq β \leq 0.90380$, due to Gaudio and Jaillet (2019) and Carlsson and Yu (2023), respectively. The upper bound was derived using a computer-aided approach that is amenable to lower bounds with improved computation speed. In this paper, we show via simulation and concentration analysis that future improvement of the $0.90380$ bound is limited to $\sim0.88$. Moreover, we provide an alternative tour-constructing heuristic that, via simulation, could potentially improve the upper bound to $\sim0.85$. Our approach builds on a prior \emph{band-traversal} strategy, initially proposed by Beardwood et al. (1959) and subsequently refined by Carlsson and Yu (2023): divide the unit square into bands of height $Θ(1/\sqrt{n})$, construct paths within each band, and then connect the paths to create a TSP tour. Our approach allows paths to cross bands, and takes advantage of pairs of points in adjacent bands which are close to each other. A rigorous numerical analysis improves the upper bound to $0.90367$.

2602.11250 · Feb 2026
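The basic band-traversal strategy mentioned in the abstract above (bands of height $Θ(1/\sqrt{n})$, a path inside each band, paths joined into a tour) can be sketched as follows. This is a plain serpentine version for illustration only; it does not include the band crossovers that the paper introduces. The function names and the constant `c` in the band height `c / sqrt(n)` are hypothetical, and the default for `c` is chosen for illustration rather than taken from the paper.

```python
import math
import random


def band_tour(points, c=math.sqrt(3)):
    """Serpentine tour over points in the unit square (requires n >= 1).

    Points are grouped into horizontal bands of height c / sqrt(n).
    Bands are visited bottom to top, alternating left-to-right and
    right-to-left, so consecutive band paths join near the same side.
    Returns a list of point indices.
    """
    n = len(points)
    height = c / math.sqrt(n)
    num_bands = max(1, math.ceil(1.0 / height))
    bands = [[] for _ in range(num_bands)]
    for idx, (_, y) in enumerate(points):
        k = min(int(y / height), num_bands - 1)  # clamp y == 1.0 into the top band
        bands[k].append(idx)
    tour = []
    for k, band in enumerate(bands):
        band.sort(key=lambda i: points[i][0], reverse=(k % 2 == 1))
        tour.extend(band)
    return tour


def tour_length(points, tour):
    """Length of the closed tour, including the edge back to the start."""
    m = len(tour)
    return sum(math.dist(points[tour[i]], points[tour[(i + 1) % m]]) for i in range(m))
```

Dividing `tour_length` by $\sqrt{n}$ for a large random instance gives an empirical ratio that can be compared with the constants quoted in the abstract; a single simulation like this is not a bound on $β$.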

Implicit representations via the polynomial method

Semialgebraic graphs are graphs whose vertices are points in $\mathbb{R}^d$, and adjacency between two vertices is determined by the truth value of a semialgebraic predicate of constant complexity. We show how to harness polynomial partitioning methods to construct compact adjacency labeling schemes for families of semialgebraic graphs. That is, we show that for any family of semialgebraic graphs, given a graph on $n$ vertices in this family, we can assign a label consisting of $O(n^{1-2/(d+1) + \varepsilon})$ bits to each vertex (where $\varepsilon > 0$ can be made arbitrarily small and the constant of proportionality depends on $\varepsilon$ and on the complexity of the adjacency-defining predicate), such that adjacency between two vertices can be determined solely from their two labels, without any additional information. We obtain for instance that unit disk graphs and segment intersection graphs have such labelings with labels of $O(n^{1/3 + \varepsilon})$ bits. This is in contrast to their natural implicit representation consisting of the coordinates of the disk centers or segment endpoints, which sometimes require exponentially many bits. It also improves on the best known bound of $O(n^{1-1/d}\log n)$ for $d$-dimensional semialgebraic families due to Alon (Discrete Comput. Geom., 2024), a bound that holds more generally for graphs with shattering functions bounded by a degree-$d$ polynomial. We also give new bounds on the size of adjacency labels for other families of graphs. In particular, we consider semilinear graphs, which are semialgebraic graphs in which the predicate only involves linear polynomials. We show that semilinear graphs have adjacency labels of size $O(\log n)$. We also prove that polygon visibility graphs, which are not semialgebraic in the above sense, have adjacency labels of size $O(\log^3 n)$.

2602.10922 · Feb 2026

Photons x Force: Differentiable Radiation Pressure Modeling

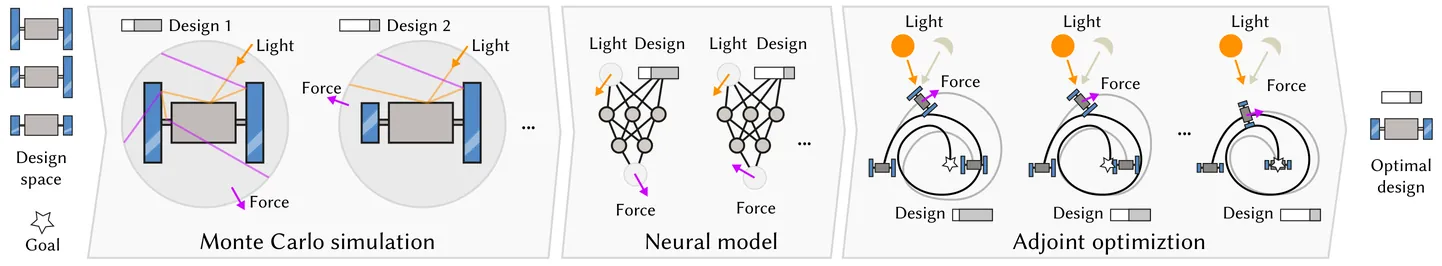

We propose a system to optimize parametric designs subject to radiation pressure, i.e., the effect of light on the motion of objects. This is most relevant in the design of spacecraft, where radiation pressure presents the dominant non-conservative forcing mechanism, which is the case beyond approximately 800 km altitude. Despite its importance, the high computational cost of high-fidelity radiation pressure modeling has limited its use in large-scale spacecraft design, optimization, and space situational awareness applications. We enable this by offering three innovations, in simulation, in representation, and in optimization. First, a practical computer graphics-inspired Monte-Carlo (MC) simulation of radiation pressure. The simulation is highly parallel, uses importance sampling and next-event estimation to reduce variance, and allows simulating an entire family of designs instead of a single spacecraft as in previous work. Second, we introduce neural networks as a representation of forces from design parameters. This neural proxy model, learned from simulations, is inherently differentiable and can query forces orders of magnitude faster than a full MC simulation. Third, and finally, we demonstrate optimizing inverse radiation pressure designs, such as finding geometry, material, or operation parameters that minimize travel time, maximize proximity given a desired end-point, minimize thruster fuel, train mission control policies, or allocate compute budget in extraterrestrial compute.

2602.10712 · Feb 2026

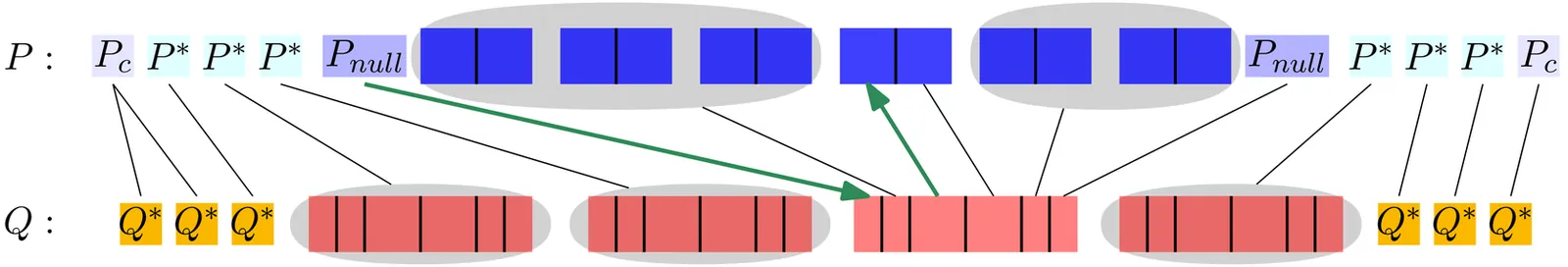

Fréchet Distance in the Imbalanced Case

Given two polygonal curves $P$ and $Q$ defined by $n$ and $m$ vertices with $m\leq n$, we show that the discrete Fréchet distance in 1D cannot be approximated within a factor of $2-\varepsilon$ in $\mathcal{O}((nm)^{1-δ})$ time for any $\varepsilon, δ>0$ unless OVH fails. Using a similar construction, we extend this bound to curves in 2D under the continuous or discrete Fréchet distance and increase the approximation factor to $1+\sqrt{2}-\varepsilon$ (resp. $3-\varepsilon$) if the curves lie in the Euclidean space (resp. in the $L_\infty$-space). This strengthens the lower bound by Buchin, Ophelders, and Speckmann to the case where $m=n^α$ for $α\in(0,1)$ and increases the approximation factor of $1.001$ by Bringmann. For the discrete Fréchet distance in 1D, we provide an approximation algorithm with optimal approximation factor and almost optimal running time. Further, for curves in any dimension embedded in any $L_p$ space, we present a $(3+\varepsilon)$-approximation algorithm for the continuous and discrete Fréchet distance using $\mathcal{O}((n+m^2)\log n)$ time, which almost matches the approximation factor of the lower bound for the $L_\infty$ metric.

2602.09551 · Feb 2026
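For context on the $\mathcal{O}((nm)^{1-δ})$ barrier in the abstract above, the textbook exact computation of the discrete Fréchet distance is a dynamic program that takes time proportional to $nm$. The sketch below is that standard baseline, not the approximation algorithm of the paper; it assumes Euclidean distance between vertices, and the function name `discrete_frechet` is hypothetical.

```python
import math


def discrete_frechet(P, Q):
    """Exact discrete Frechet distance between two vertex sequences.

    P and Q are non-empty lists of coordinate tuples of equal dimension,
    e.g. (x,) in 1D. dp[i][j] is the distance between the prefixes
    P[0..i] and Q[0..j].
    """
    n, m = len(P), len(Q)
    dp = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            d = math.dist(P[i], Q[j])
            if i == 0 and j == 0:
                dp[i][j] = d
            elif i == 0:
                dp[i][j] = max(dp[0][j - 1], d)
            elif j == 0:
                dp[i][j] = max(dp[i - 1][0], d)
            else:
                # Advance on P, on Q, or on both, whichever is cheapest.
                best_prev = min(dp[i - 1][j], dp[i][j - 1], dp[i - 1][j - 1])
                dp[i][j] = max(best_prev, d)
    return dp[n - 1][m - 1]
```

In the imbalanced case $m \ll n$ the table has $nm$ cells, which is the quantity the lower bound in the abstract is stated against.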

The Presort Hierarchy for Geometric Problems

Many fundamental problems in computational geometry admit no algorithm running in $o(n \log n)$ time for $n$ planar input points, via classical reductions from sorting. Prominent examples include the computation of convex hulls, quadtrees, onion layer decompositions, Euclidean minimum spanning trees, KD-trees, Voronoi diagrams, and decremental closest-pair. A classical result shows that, given $n$ points sorted along a single direction, the convex hull can be constructed in linear time. Subsequent works established that for all of the other above problems, this information does not suffice. In 1989, Aggarwal, Guibas, Saxe, and Shor asked: Under which conditions can a Voronoi diagram be computed in $o(n \log n)$ time? Since then, the question of whether sorting along TWO directions enables a $o(n \log n)$-time algorithm for such problems has remained open and has been repeatedly mentioned in the literature. In this paper, we introduce the Presort Hierarchy: A problem is 1-Presortable if, given a sorting along one axis, it permits a (possibly randomised) $o(n \log n)$-time algorithm. It is 2-Presortable if sortings along both axes suffice. It is Presort-Hard otherwise. Our main result is that quadtrees, and by extension Delaunay triangulations, Voronoi diagrams, and Euclidean minimum spanning trees, are 2-Presortable: we present an algorithm with expected running time $O(n \sqrt{\log n})$. This addresses the longstanding open problem posed by Aggarwal, Guibas, Saxe, and Shor (albeit randomised). We complement this result by showing that some of the other above geometric problems are also 2-Presortable or Presort-Hard.

2602.08843 · Feb 2026
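The classical fact cited in the abstract above, that a convex hull can be built in linear time once the points are sorted along one direction, can be illustrated with the well-known monotone chain scan. This is a minimal sketch of that classical technique, not an algorithm from the paper; it assumes distinct points sorted by $x$ with ties broken by $y$, and the function names are hypothetical.

```python
def cross(o, a, b):
    """Twice the signed area of triangle (o, a, b); positive for a left turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def hull_from_sorted(points):
    """Convex hull of distinct points that are ALREADY sorted by (x, y).

    Each point is pushed and popped at most once per chain, so the scan
    is linear. Returns hull vertices in counter-clockwise order.
    """
    if len(points) <= 2:
        return list(points)
    lower = []
    for p in points:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper = []
    for p in reversed(points):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other.
    return lower[:-1] + upper[:-1]
```

The $Θ(n \log n)$ cost of the usual convex hull pipeline sits entirely in the sort, which is why a presorted input makes the problem linear.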

Forget Superresolution, Sample Adaptively (when Path Tracing)

Real-time path tracing increasingly operates under extremely low sampling budgets, often below one sample per pixel, as rendering complexity, resolution, and frame-rate requirements continue to rise. While super-resolution is widely used in production, it uniformly sacrifices spatial detail and cannot exploit variations in noise, reconstruction difficulty, and perceptual importance across the image. Adaptive sampling offers a compelling alternative, but existing end-to-end approaches rely on approximations that break down in sparse regimes. We introduce an end-to-end adaptive sampling and denoising pipeline explicitly designed for the sub-1-spp regime. Our method uses a stochastic formulation of sample placement that enables gradient estimation despite discrete sampling decisions, allowing stable training of a neural sampler at low sampling budgets. To better align optimization with human perception, we propose a tonemapping-aware training pipeline that integrates differentiable filmic operators and a state-of-the-art perceptual loss, preventing oversampling of regions with low visual impact. In addition, we introduce a gather-based pyramidal denoising filter and a learnable generalization of albedo demodulation tailored to sparse sampling. Our results show consistent improvements over uniform sparse sampling, with notably better reconstruction of perceptually critical details such as specular highlights and shadow boundaries, and demonstrate that adaptive sampling remains effective even at minimal budgets.

2602.08642 · Feb 2026