Quantum Physics

Quantum mechanics, quantum information, and quantum computing

We prove that learning an unknown quantum channel with input dimension $d_A$, output dimension $d_B$, and Choi rank $r$ to diamond distance $\varepsilon$ requires $ Ω\!\left( \frac{d_A d_B r}{\varepsilon \log(d_B r / \varepsilon)} \right)$ queries. This improves the best previous $Ω(d_A d_B r)$ bound by introducing explicit $\varepsilon$-dependence, with a scaling in $\varepsilon$ that is near-optimal when $d_A=rd_B$ but not tight in general. The proof constructs an ensemble of channels that are well-separated in diamond norm yet admit Stinespring isometries that are close in operator norm.

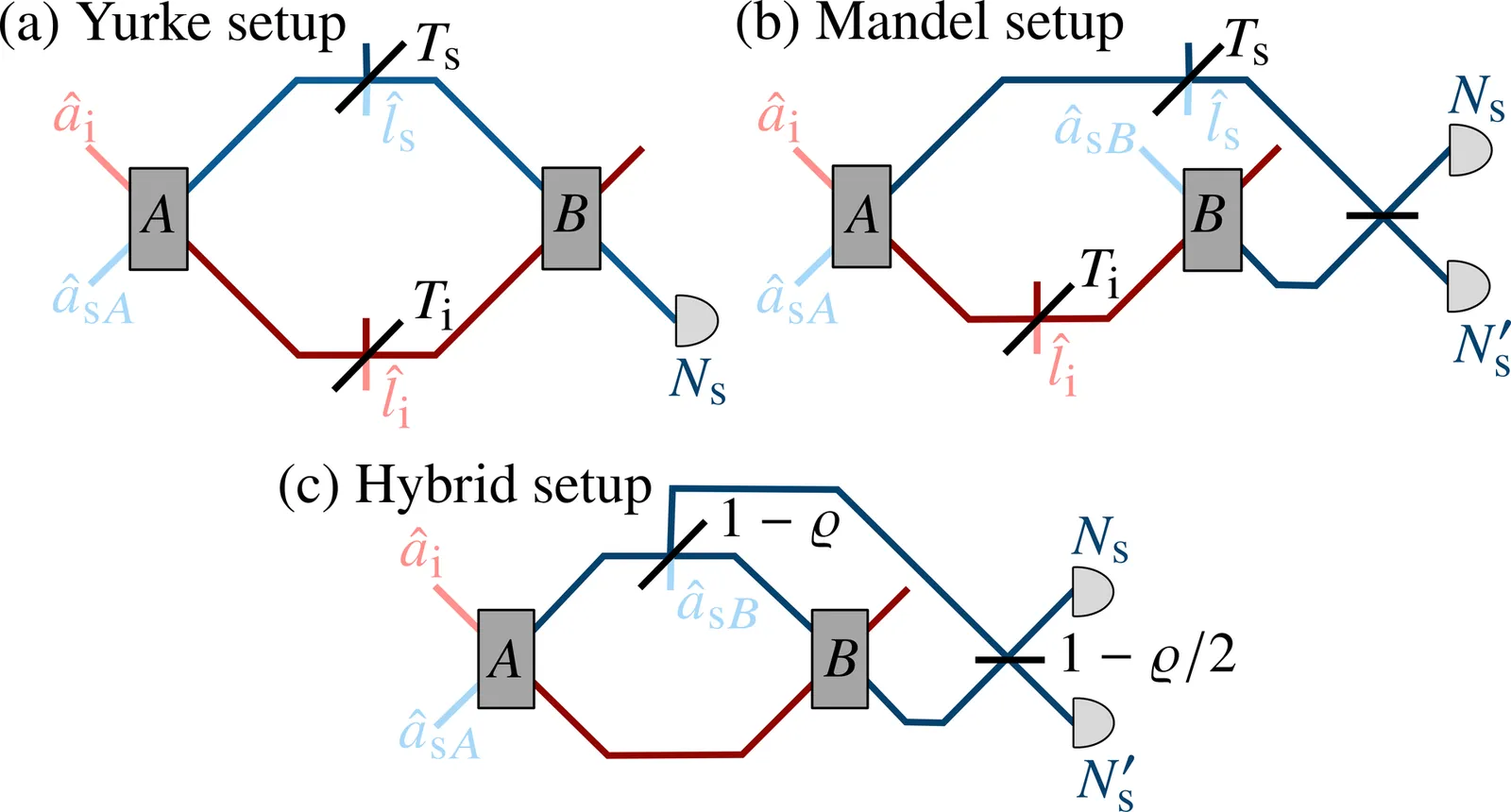

Over the past decade, several schemes for imaging and sensing based on nonlinear interferometers have been proposed and demonstrated experimentally. These interferometers exhibit two main advantages. First, they enable probing a sample at a chosen wavelength while detecting light at a different wavelength with high efficiency (bicolor quantum imaging and sensing with undetected light). Second, they can show quantum-enhanced sensitivities below the shot-noise limit, potentially reaching Heisenberg-limited precision in parameter estimation. Here, we compare three quantum-imaging configurations using only easily accessible intensity-based measurements for phase estimation: a Yurke-type SU(1,1) interferometer, a Mandel-type induced-coherence interferometer, and a hybrid scheme that continuously interpolates between them. While an ideal Yurke interferometer can exhibit Heisenberg scaling, this advantage is known to be fragile under realistic detection constraints and in the presence of loss. We demonstrate that differential intensity detection in the Mandel interferometer provides the highest and most robust phase sensitivity among the considered schemes, reaching but not surpassing the shot-noise limit, even in the presence of loss. Intensity measurements in a Yurke-type configuration can achieve genuine sub-shot-noise sensitivity under balanced losses and moderate gain; however, their performance degrades in realistic high-gain regimes. Consequently, in this regime, the Mandel configuration with differential detection outperforms the Yurke-type setup and constitutes the most robust approach for phase estimation.

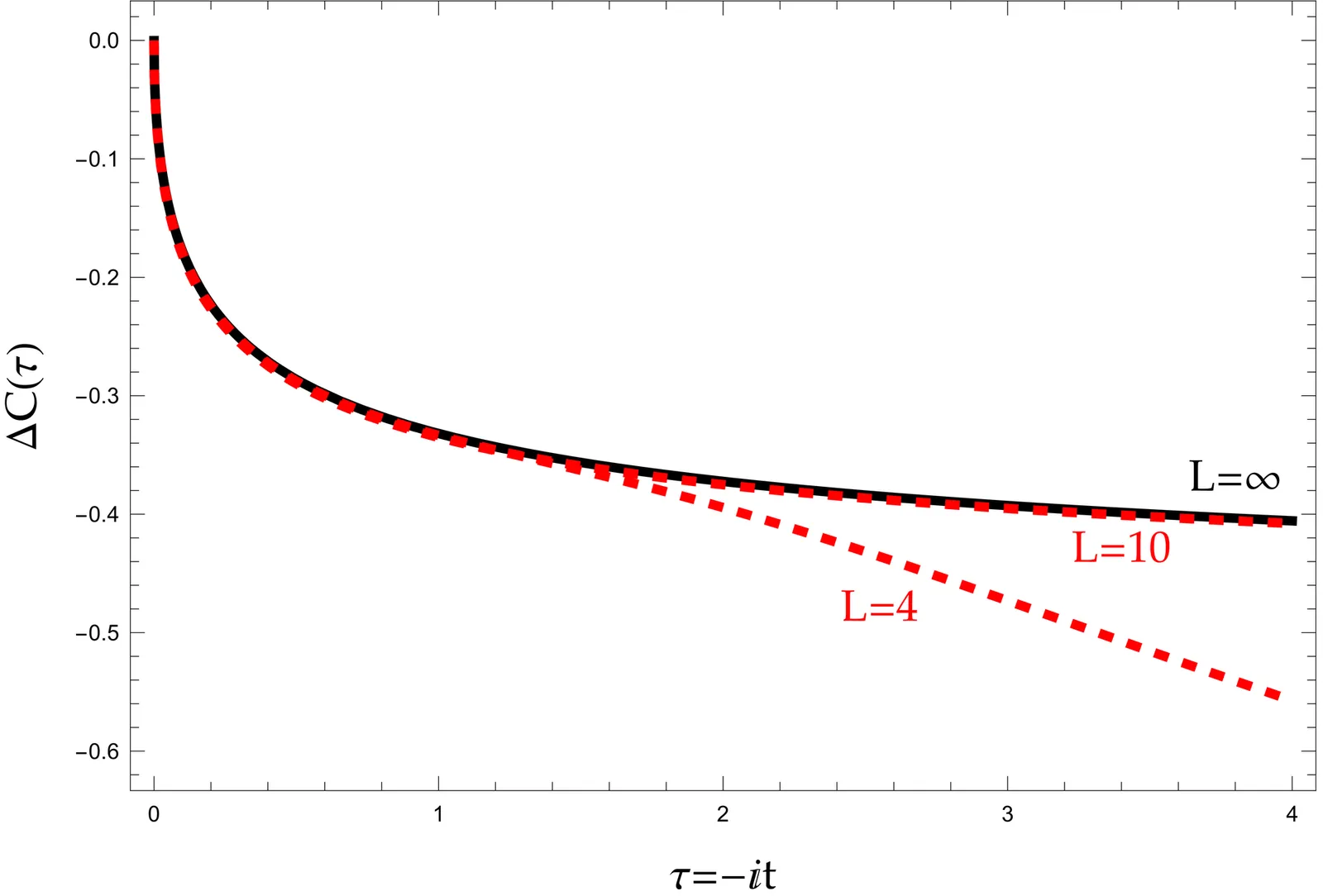

We investigate the feasibility of extracting infinite-volume scattering phase shifts on quantum computers in a simple one-dimensional quantum mechanical model, using the formalism established in Ref.~\cite{Guo:2023ecc} that relates the integrated correlation functions (ICF) for a trapped system to the infinite-volume scattering phase shifts through a weighted integral. The system is first discretized in a finite box with periodic boundary conditions, and the formalism in real time is verified by employing a contact interaction potential with exact solutions. Quantum circuits are then designed and constructed to implement the formalism on current quantum computing architectures. To overcome the fast oscillatory behavior of the integrated correlation functions in real-time simulation, different methods of post-data analysis are proposed and discussed. Test results on IBM hardware show that good agreement can be achieved with two qubits, but complete failure ensues with three qubits due to two-qubit gate operation errors and thermal relaxation errors.

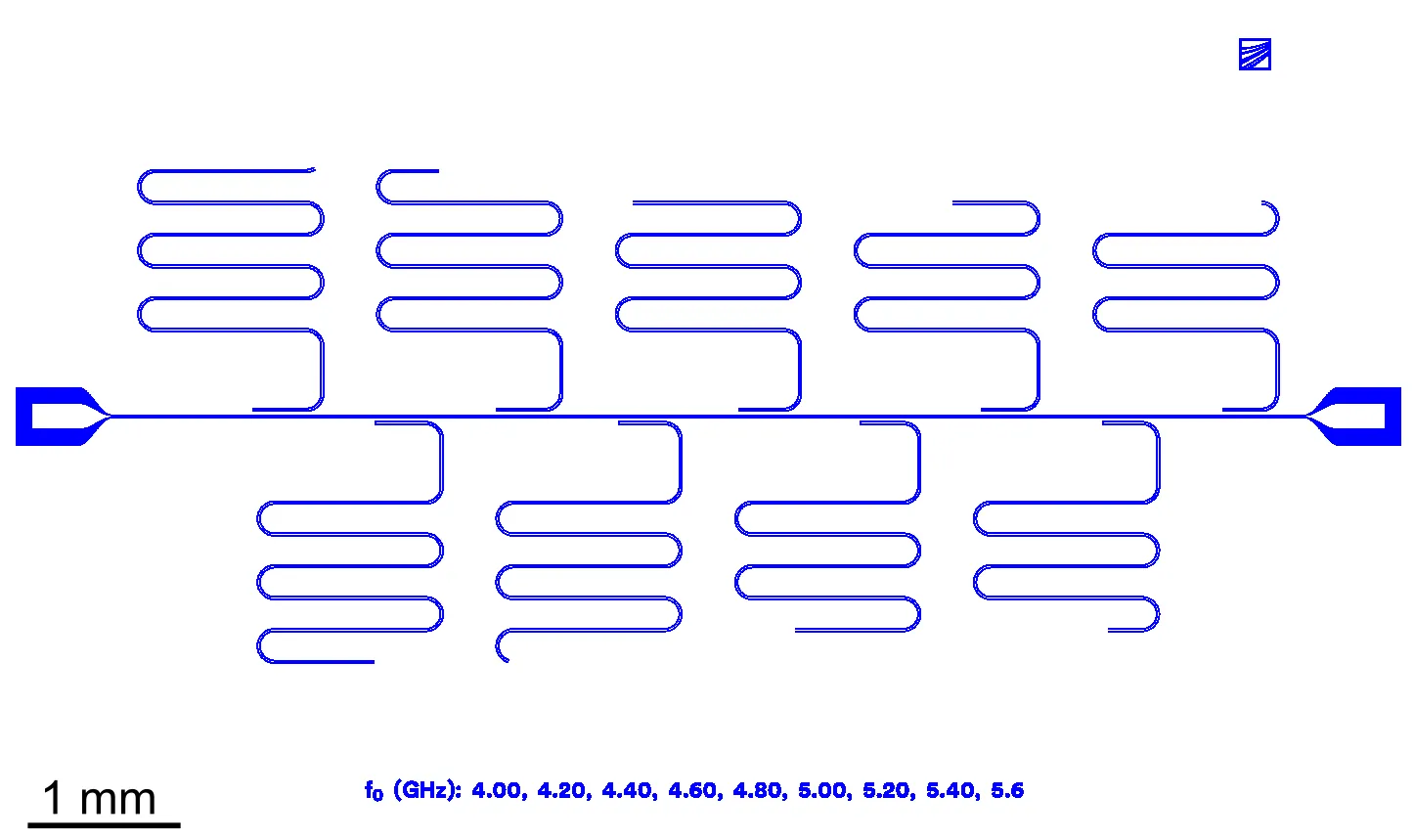

Aluminum remains the central material for superconducting qubits, and considerable effort has been devoted to optimizing its deposition and patterning for quantum devices. However, while post-processing of Nb- and Ta-based resonators has been widely explored, primarily focusing on oxide removal using buffered oxide etch (BOE), post-treatment strategies for Al resonators remain underdeveloped. This challenge becomes particularly relevant for industry-scale fabrication with multichip bonding, where delays between sample preparation and cooldown require surface treatments that preserve low dielectric loss during extended exposure to ambient conditions. In this work, we investigate surface modification approaches for Al resonators subjected to a 24-hour delay prior to cryogenic measurement. Passivation using self-limiting oxygen and fluorine chemistries was evaluated utilizing different plasma processes. Remote oxygen plasma treatment reduced dielectric losses, in contrast to direct plasma, likely due to additional ashing of residual resist despite the formation of a thicker oxide layer on both Si and Al surfaces. A fluorine-based plasma process was developed that passivated the Al surface with fluorine for subsequent BOE treatment. However, increasing fluorine incorporation in the aluminum oxide correlated with higher loss, identifying fluorine as an unsuitable passivation material for Al resonators. Finally, selective oxide removal using HF vapor and phosphoric acid was assessed for surface preparation. HF vapor selectively etched SiO2 while preserving Al2O3, whereas phosphoric acid exhibited the opposite selectivity. Sequential application of both etches yielded dielectric losses as low as $δ_\mathrm{LP} = 5.2 \times 10^{-7}$ ($Q_\mathrm{i} \approx 1.9\,\mathrm{M}$) in the single photon regime, demonstrating a promising pathway for robust Al-based resonator fabrication.

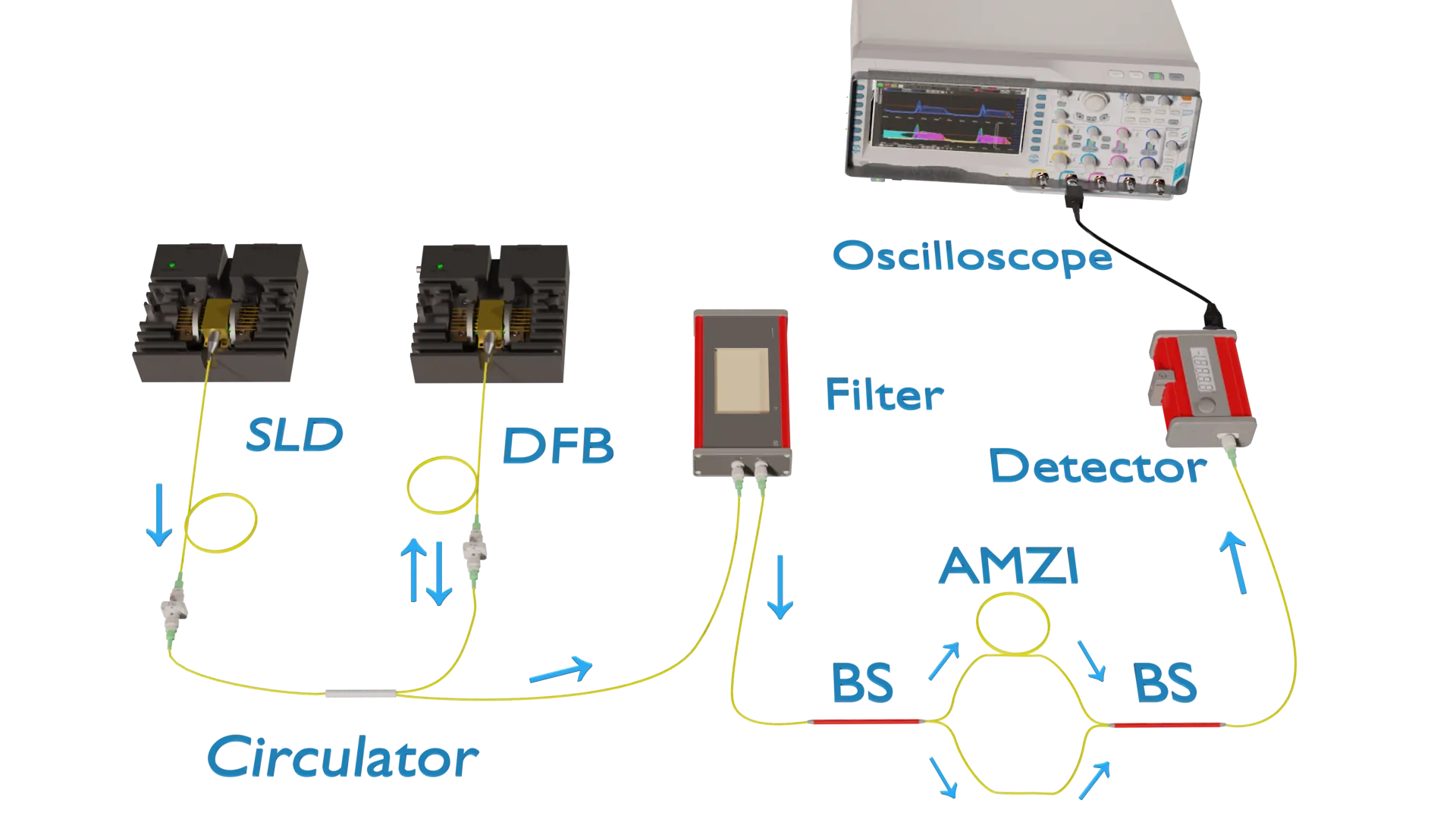

Gain-switching laser diodes is a well-established technique for generating optical pulses with random phases, where the quantum randomness arises naturally from spontaneous emission. However, the maximum switching rate is limited by phase diffusion: at high repetition rates, residual photons in the cavity seed subsequent pulses, leading to phase correlations, which degrade randomness. We present a method to overcome this limitation by employing an external source of spontaneous emission in conjunction with the laser. Our results show that this approach effectively removes interpulse phase correlations and restores phase randomization at repetition rates as high as 10 GHz. This technique opens new opportunities for high-rate quantum key distribution and quantum random number generation.

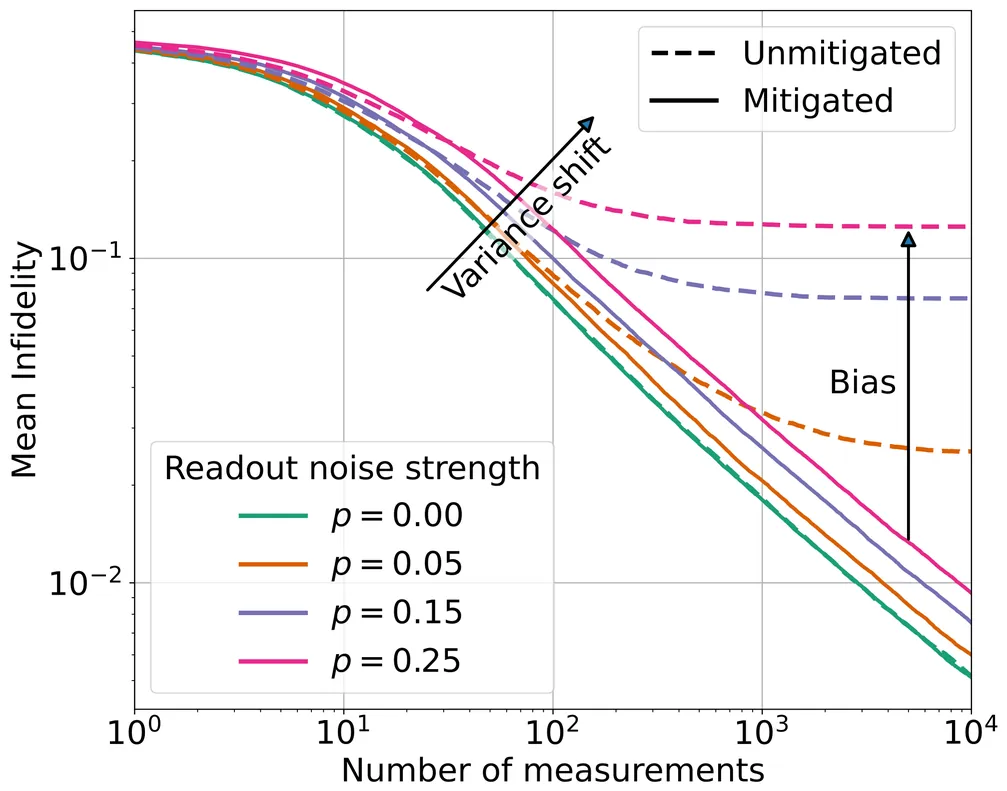

Assumption-free reconstruction of quantum states from measurements is essential for benchmarking and certifying quantum devices, but it remains difficult due to the extensive measurement statistics and experimental resources it demands. An approach to alleviating these demands is provided by adaptive measurement strategies, which can yield up to a quadratic improvement in reconstruction accuracy for pure states by dynamically optimizing measurement settings during data acquisition. A key open question is whether these asymptotic advantages remain in realistic experiments, where readout is inevitably noisy. In this work, we analyze the impact of readout noise on adaptive quantum state tomography with readout-error mitigation, focusing on the challenging regime of reconstructing pure states using mixed-state estimators. Using analytical arguments based on Fisher information optimization and extensive numerical simulations using Bayesian inference, we show that any nonzero readout noise eliminates the asymptotic quadratic scaling advantage of adaptive strategies. We numerically investigate the behavior for finite measurement statistics for single- and two-qubit systems with exact readout-error mitigation and find a gradual transition from ideal to sub-optimal scaling. We furthermore investigate realistic scenarios where detector tomography is performed with a limited number of state copies for calibration, showing that insufficient detector characterization leads to estimator bias and limited reconstruction accuracy. Although our result imposes an upper bound on the reconstruction accuracy that can be achieved with adaptive strategies, we nevertheless observe numerically a constant-factor gain in reconstruction accuracy, which becomes larger as the readout noise decreases. This indicates potential practical benefits in using adaptive measurement strategies in well-calibrated experiments.

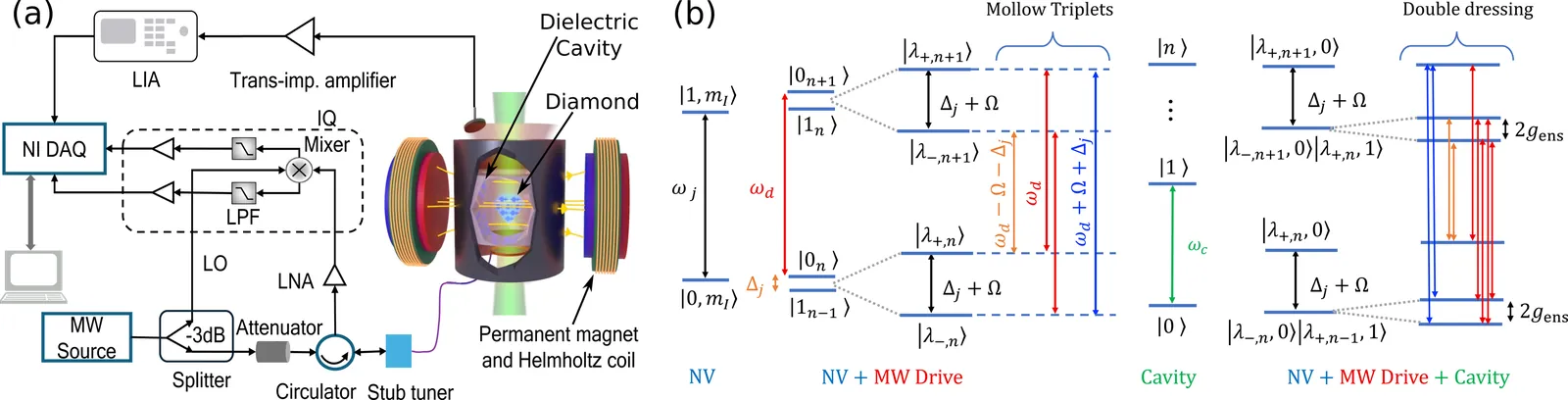

We report a cavity-enabled solid-state magnetometer based on an NV ensemble coupled with a dielectric cavity, achieving 12 pT/$\sqrt{\rm{Hz}}$ sensitivity and a nearly threefold gain from multispectral features. The features originate from cavity-induced splitting of the NV hyperfine levels and leverage robust quantum coherence in the doubly dressed states of the system to achieve high sensitivity. We project simulated near-term sensitivities approaching 100 fT/$\sqrt{\rm{Hz}}$, close to the Johnson-Nyquist limit. Our results establish frequency multiplexing as a new operational paradigm, offering a robust and scalable quantum resource for metrology under ambient conditions.

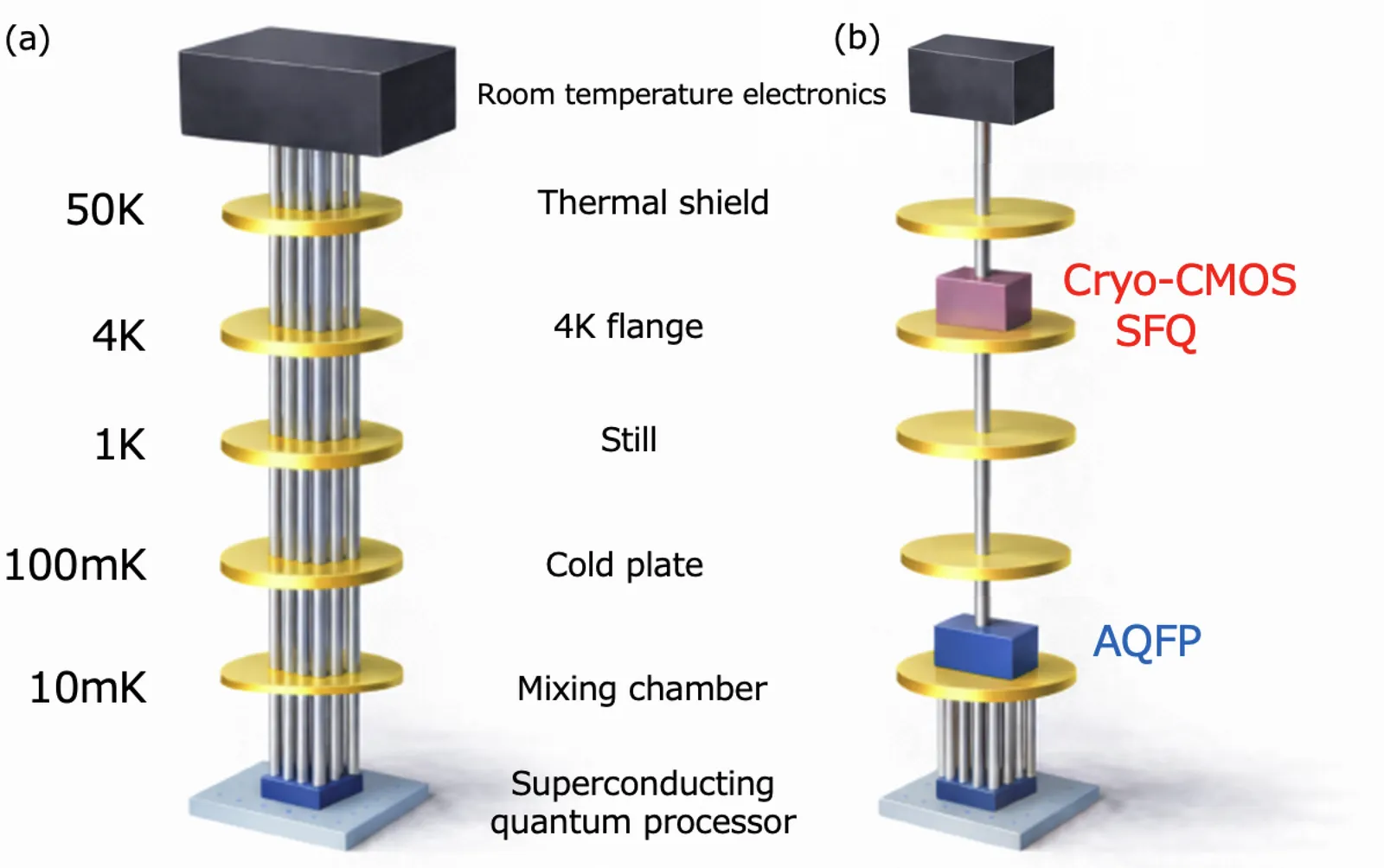

Scaling superconducting quantum computers to the fault-tolerant regime calls for a commensurate scaling of the classical control and readout stack. Today's systems largely rely on room-temperature, rack-based instrumentation connected to dilution-refrigerator cryostats through many coaxial cables. Looking ahead, superconducting fault-tolerant quantum computers (FTQCs) will likely adopt a heterogeneous quantum-classical architecture that places selected electronics at cryogenic stages -- for example, cryo-CMOS at 4~K and superconducting digital logic at 4~K and/or mK stages -- to curb wiring and thermal-load overheads. This review distills key requirements, surveys representative room-temperature and cryogenic approaches, and provides a transparent first-order accounting framework for cryoelectronics. Using an RSA-2048-scale benchmark as a concrete reference point, we illustrate how scaling targets motivate constraints on multiplexing and stage-wise cryogenic power, and discuss implications for functional partitioning across room-temperature electronics, cryo-CMOS, and superconducting logic.

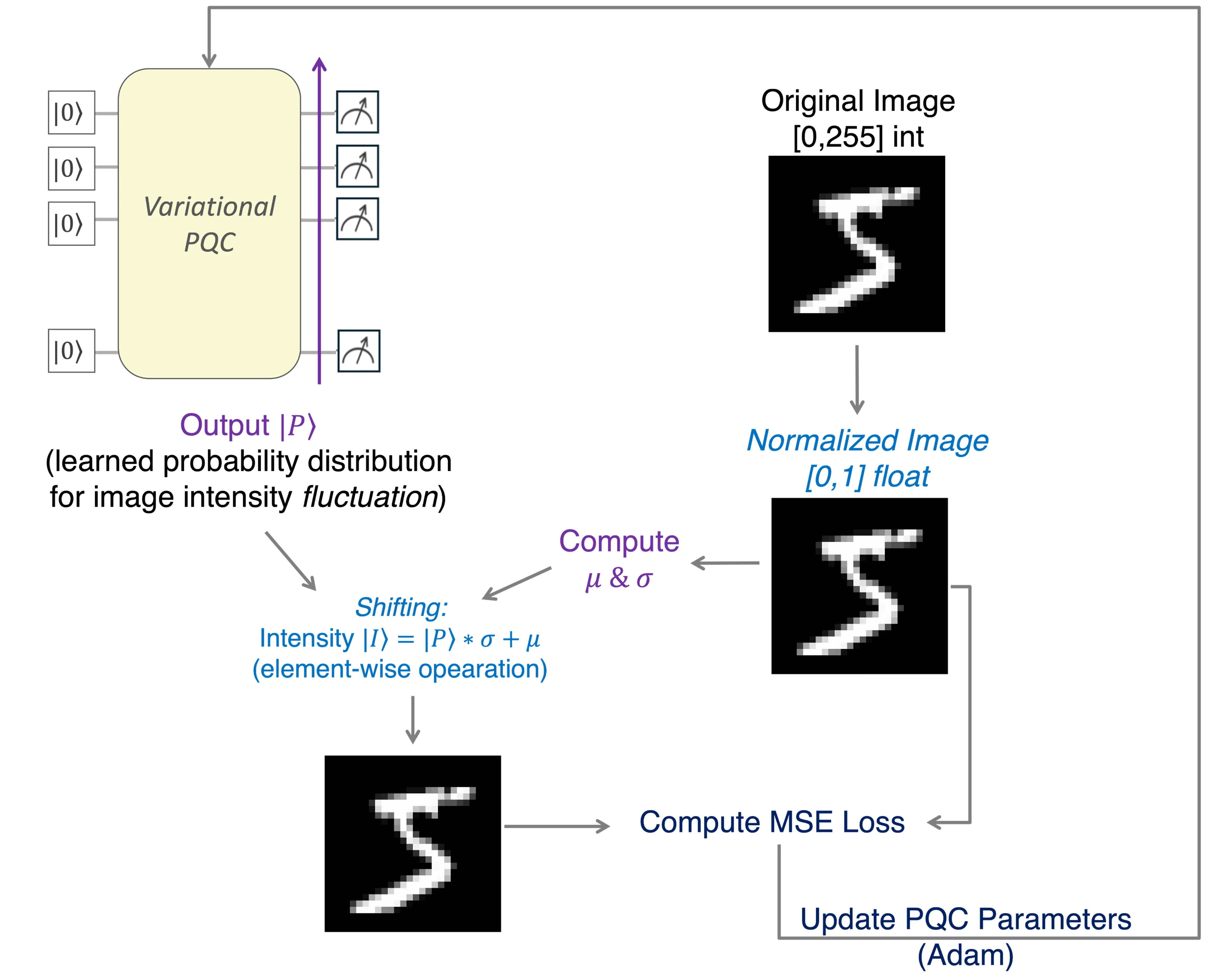

We present MPM-QIR, a variational-quantum-circuit (VQC) framework for classical image compression and representation whose core objective is to achieve equal or better reconstruction quality at a lower Parameter Compression Ratio (PCR). The method aligns a generative VQC's measurement-probability distribution with normalized pixel intensities and learns positional information implicitly via an ordered mapping to the flattened pixel array, thus eliminating explicit coordinate qubits and tying compression efficiency directly to circuit (ansatz) complexity. A bidirectional convolutional architecture induces long-range entanglement at shallow depth, capturing global image correlations with fewer parameters. Under a unified protocol, the approach attains PSNR $\geq$ 30 dB with lower PCR across benchmarks: MNIST 31.80 dB / SSIM 0.81 at PCR 0.69, Fashion-MNIST 31.30 dB / 0.91 at PCR 0.83, and CIFAR-10 31.56 dB / 0.97 at PCR 0.84. Overall, this compression-first design improves parameter efficiency, validates VQCs as direct and effective generative models for classical image compression, and is amenable to two-stage pipelines with classical codecs and to extensions beyond 2D imagery.
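As a rough illustration of the quantities in the abstract above, the following sketch maps an image to a probability distribution over its flattened pixel indices and scores a reconstruction with the standard PSNR formula. The sum-to-one normalization and the helper names `image_to_distribution` and `distribution_to_image` are assumptions made for illustration; the abstract does not specify the normalization, the circuit, or how PCR is computed, so none of those are modeled here.

```python
import numpy as np

def image_to_distribution(image):
    """Flatten an image in row-major order and normalize intensities to sum to 1.

    Assumption: sum normalization (requires a nonzero total intensity).
    Returns the target distribution and the scale needed to undo it.
    """
    flat = np.asarray(image, dtype=float).ravel()
    total = flat.sum()
    return flat / total, total

def distribution_to_image(probs, total, shape):
    """Invert image_to_distribution: rescale probabilities and restore the shape."""
    return (np.asarray(probs, dtype=float) * total).reshape(shape)

def psnr(reference, reconstruction, max_val=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(max_val^2 / MSE)."""
    ref = np.asarray(reference, dtype=float)
    rec = np.asarray(reconstruction, dtype=float)
    mse = np.mean((ref - rec) ** 2)
    if mse == 0.0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / mse))
```

In this picture, index `k` of the distribution corresponds to the `k`-th pixel of the flattened array, which is how position can be carried by ordering alone rather than by explicit coordinate registers.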

Grover's algorithm is a cornerstone of quantum search algorithms, offering quadratic speedup for unstructured problems. However, limited qubit counts and noise in today's noisy intermediate-scale quantum (NISQ) devices hinder large-scale hardware validation, making efficient classical simulation essential for algorithm development and hardware assessment. We present an iterative Grover simulation framework based on matrix product states (MPS) to efficiently simulate large-scale Grover's algorithm. Within the NVIDIA CUDA-Q environment, we compare iterative and common (non-iterative) Grover's circuits across statevector and MPS backends. On the MPS backend at 29 qubits, the iterative Grover's circuit runs about 15x faster than the common (non-iterative) Grover's circuit, and about 3-4x faster than the statevector backend. In sampling experiments, Grover's circuits demonstrate strong low-shot stability: as the qubit number increases beyond 13, a single-shot measurement still closely mirrors the results from 4,096 shots, indicating reliable estimates with minimal sampling and significant potential to cut measurement costs. Overall, an iterative MPS design delivers speed and scalability for Grover's circuit simulation, enabling practical large-scale implementations.
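For orientation, here is a minimal dense-statevector sketch of textbook Grover search with a single marked item, written in plain NumPy. It is not the MPS-based framework or the CUDA-Q code described above; the function name and the choice of one marked basis state are assumptions for illustration. It does show why a single shot can suffice at larger sizes: the success probability after about $\frac{π}{4}\sqrt{N}$ iterations is close to 1.

```python
import numpy as np

def grover_success_probability(n_qubits, marked, iterations=None):
    """Simulate Grover's search for one marked basis state on a dense statevector.

    Returns (iterations used, probability of measuring the marked state).
    """
    dim = 2 ** n_qubits
    if iterations is None:
        # Standard iteration count for a single marked item among N = 2^n.
        iterations = int(np.floor(np.pi / 4 * np.sqrt(dim)))
    # Uniform superposition over all basis states (amplitudes stay real).
    state = np.full(dim, 1.0 / np.sqrt(dim))
    for _ in range(iterations):
        state[marked] *= -1.0                # oracle: phase flip on the marked state
        state = 2.0 * state.mean() - state   # diffusion: inversion about the mean
    return iterations, float(state[marked] ** 2)
```

The memory cost of this dense approach grows as $2^n$, which is the limitation that tensor-network backends such as MPS aim to sidestep.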

We compare three proof techniques for composable finite-size security of quantum key distribution under collective attacks, with emphasis on how the resulting secret-key rates behave at practically relevant block lengths. As a benchmark, we consider the BB84 protocol and evaluate finite-size key-rate estimates obtained from entropic uncertainty relations (EUR), from the asymptotic equipartition property (AEP), and from a direct finite-block analysis based on the conditional min-entropy, which we refer to as the finite-size min-entropy (FME) approach. For BB84 we show that the EUR-based bound provides the most favorable performance across the considered parameter range, while the AEP bound is asymptotically tight but can become overly pessimistic at moderate and small block sizes, where it may fail to certify a positive key. The FME approach remains effective in this small-block regime, yielding nonzero rates in situations where the AEP estimate vanishes, although it is not asymptotically optimal for BB84. These results motivate the use of FME-type analyses for continuous-variable protocols in settings where tight EUR-based bounds are unavailable, notably for coherent-state schemes where current finite-size analyses typically rely on AEP-style corrections.

Topological quantum sensing leverages unique topological features to suppress noise and improve the precision of parameter estimation, emerging as a promising tool in both fundamental research and practical application. In this Letter, we propose a sensing protocol that exploits the dynamics of topological quantum walks incorporating localized defects. Unlike conventional schemes that rely on topological protection to suppress disorder and defects, our protocol harnesses the evolution time as a resource to enable precise estimation of the defect parameter. By utilizing topologically nontrivial properties of the quantum walks, the sensing precision can approach the Heisenberg limit. We further demonstrate the performance and robustness of the protocol through Bayesian estimation. Our results show that this approach maintains high precision over a broad range of parameters and exhibits strong robustness against disorder, offering a practical pathway for topologically enhanced quantum metrology.

Loophole-free quantum nonlocality often demands experiments with high complexity (defined by all parties' settings and outcomes) and multiple efficient detectors. Here, we identify the fundamental efficiency and complexity thresholds for quantum steering using two-qubit entangled states. Remarkably, it requires only one photon detector on the untrusted side, with efficiency $ε> 1/X$, where $X \geq 2$ is the number of settings on that side. This threshold applies to all pure entangled states, in contrast to analogous Bell-nonlocality tests, which require almost unentangled states to be loss-tolerant. We confirm these predictions in a minimal-complexity ($X = 2$ for the untrusted party and a single three-outcome measurement for the trusted party), detection-loophole-free photonic experiment with $ε= (51.6 \pm 0.4)\% $.

2601.03734

We investigate the computational hardness of estimating the quantum $α$-Rényi entropy ${\rm S}^{\tt R}_α(ρ) = \frac{\ln {\rm Tr}(ρ^α)}{1-α}$ and the quantum $q$-Tsallis entropy ${\rm S}^{\tt T}_q(ρ) = \frac{1-{\rm Tr}(ρ^q)}{q-1}$, both converging to the von Neumann entropy as the order approaches $1$. The promise problems Quantum $α$-Rényi Entropy Approximation (RényiQEA$_α$) and Quantum $q$-Tsallis Entropy Approximation (TsallisQEA$_q$) ask whether ${\rm S}^{\tt R}_α(ρ)$ or ${\rm S}^{\tt T}_q(ρ)$, respectively, is at least $τ_{\tt Y}$ or at most $τ_{\tt N}$, where $τ_{\tt Y} - τ_{\tt N}$ is typically a positive constant. Previous hardness results cover only the von Neumann entropy (order $1$) and some cases of the quantum $q$-Tsallis entropy, while existing approaches do not readily extend to other orders. We establish that for all positive real orders, the rank-$2$ variants Rank2RényiQEA$_α$ and Rank2TsallisQEA$_q$ are ${\sf BQP}$-hard. Combined with prior (rank-dependent) quantum query algorithms in Wang, Guan, Liu, Zhang, and Ying (TIT 2024), Wang, Zhang, and Li (TIT 2024), and Liu and Wang (SODA 2025), our results imply:

- For all real orders $α> 0$ and $0 < q \leq 1$, LowRankRényiQEA$_α$ and LowRankTsallisQEA$_q$ are ${\sf BQP}$-complete, where both are restricted versions of RényiQEA$_α$ and TsallisQEA$_q$ with $ρ$ of polynomial rank.
- For all real orders $q>1$, TsallisQEA$_q$ is ${\sf BQP}$-complete.

Our hardness results stem from reductions based on new inequalities relating the $α$-Rényi or $q$-Tsallis binary entropies of different orders, where the reductions differ substantially from previous approaches, and the inequalities are also of independent interest.
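The two entropy definitions above can be evaluated directly from the spectrum of $ρ$. The sketch below is a classical reference computation by full diagonalization, not one of the quantum algorithms cited in the abstract. The function names, the eigenvalue cutoff `tol`, and the fallback to the von Neumann entropy at order exactly $1$ are assumptions for illustration.

```python
import numpy as np

def _spectrum(rho, tol=1e-12):
    """Eigenvalues of a Hermitian density matrix, dropping numerically zero ones.

    Dropping zeros is harmless for positive orders, since 0**order == 0.
    """
    evals = np.linalg.eigvalsh(np.asarray(rho))
    return evals[evals > tol]

def von_neumann_entropy(rho):
    """S(rho) = -Tr(rho ln rho), in nats."""
    p = _spectrum(rho)
    return float(-np.sum(p * np.log(p)))

def renyi_entropy(rho, alpha):
    """S^R_alpha(rho) = ln Tr(rho^alpha) / (1 - alpha) for alpha > 0."""
    if np.isclose(alpha, 1.0):
        return von_neumann_entropy(rho)  # limiting value at order 1
    p = _spectrum(rho)
    return float(np.log(np.sum(p ** alpha)) / (1.0 - alpha))

def tsallis_entropy(rho, q):
    """S^T_q(rho) = (1 - Tr(rho^q)) / (q - 1) for q > 0."""
    if np.isclose(q, 1.0):
        return von_neumann_entropy(rho)  # limiting value at order 1
    p = _spectrum(rho)
    return float((1.0 - np.sum(p ** q)) / (q - 1.0))
```

For a rank-2 state such as `np.diag([0.75, 0.25])`, both quantities at order $2$ depend only on the purity ${\rm Tr}(ρ^2) = 0.625$.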

We investigate double-interval entanglement measures, specifically reflected entropy, mutual information, and logarithmic negativity, in quasiparticle excited states for classical, bosonic, and fermionic systems. We develop an algorithm that efficiently calculates these measures from density matrices expressed in a non-orthonormal basis, enabling straightforward numerical implementation. We find a universal additivity property that emerges at large momentum differences, where the entanglement measures for states with distinct quasiparticle sets equal the sum of their individual contributions. The classical limit arises as a special case of this additivity, with both bosonic and fermionic results converging to classical behavior when all momentum differences are large.

Bias-tailored codes such as the XZZX surface code and the domain wall color code achieve high dephasing-biased thresholds because, in the infinite-bias limit, their $Z$ syndromes decouple into one-dimensional repetition-like chains; the $X^3Z^3$ Floquet code shows an analogous strip-wise structure for detector events in spacetime. We capture this common mechanism by defining strip-symmetric biased codes, a class of static stabilizer and dynamical (Floquet) codes for which, under pure dephasing and perfect measurements, each elementary $Z$ fault is confined to a strip and the Z-detector--fault incidence matrix is block diagonal. For such codes the Z-detector hypergraph decomposes into independent strip components and maximum-likelihood $Z$ decoding factorizes across strips, yielding complexity savings for matching-based decoders. We characterize strip symmetry via per-strip stabilizer products, viewed as a $\mathbb{Z}_2$ 1-form symmetry, place XZZX, the domain wall color code, and $X^3Z^3$ in this framework, and introduce synthetic strip-symmetric detector models and domain-wise Clifford constructions that serve as design tools for new bias-tailored Floquet codes.

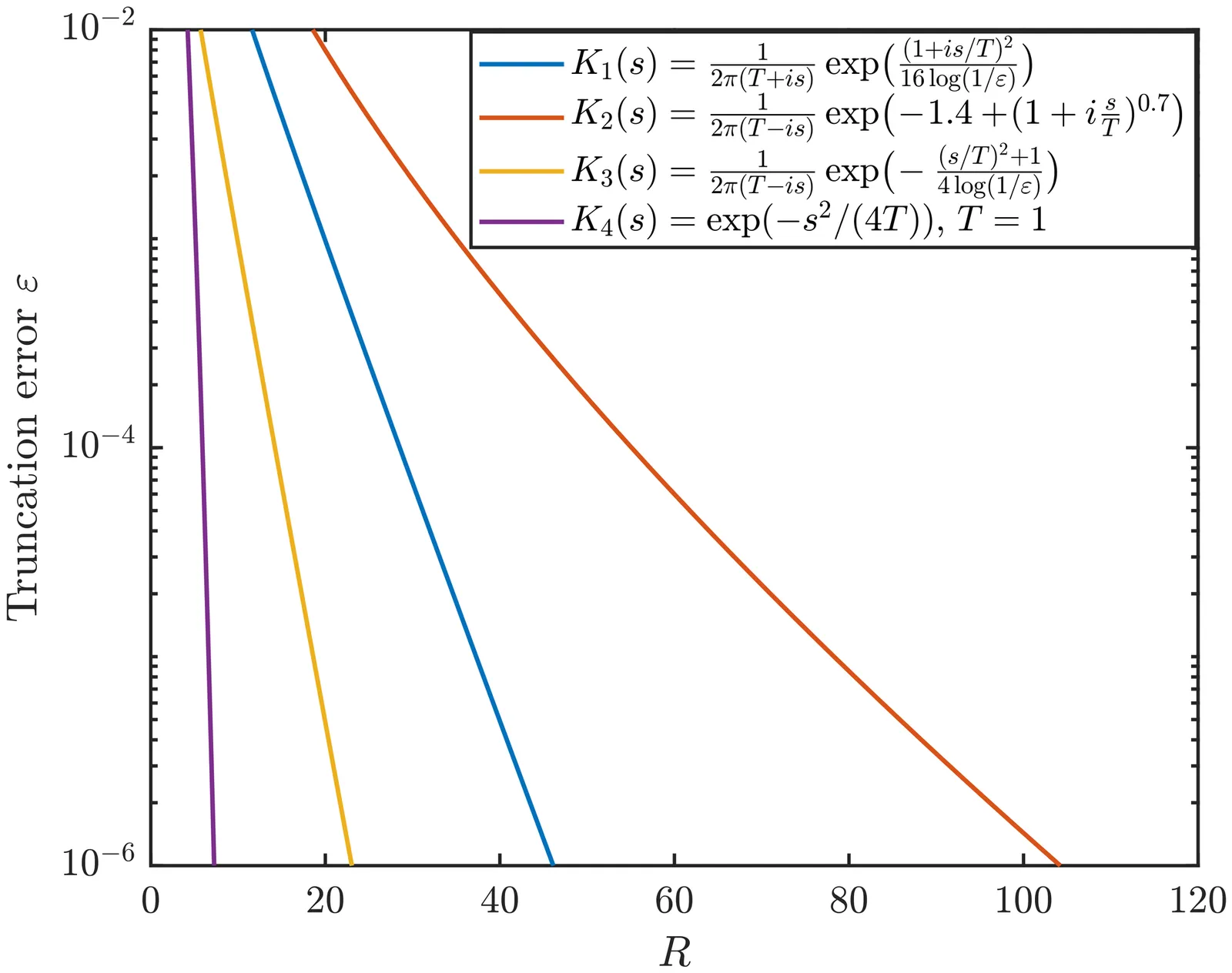

We present a quantum algorithm for simulating dissipative diffusion dynamics generated by positive semidefinite operators of the form $A=L^\dagger L$, a structure that arises naturally in standard discretizations of elliptic operators. Our main tool is the Kannai transform, which represents the diffusion semigroup $e^{-TA}$ as a Gaussian-weighted superposition of unitary wave propagators. This representation leads to a linear-combination-of-unitaries implementation with a Gaussian tail and yields query complexity $\tilde{\mathcal{O}}(\sqrt{\|A\| T \log(1/\varepsilon)})$, up to standard dependence on state-preparation and output norms, improving the scaling in $\|A\|, T$ and $\varepsilon$ compared with generic Hamiltonian-simulation-based methods. We instantiate the method for the heat equation and biharmonic diffusion under non-periodic physical boundary conditions, and we further use it as a subroutine for constant-coefficient linear parabolic surrogates arising in entropy-penalization schemes for viscous Hamilton--Jacobi equations. In the long-time regime, the same framework yields a structured quantum linear solver for $A\mathbf{x}=\mathbf{b}$ with $A=L^\dagger L$, achieving $\tilde{\mathcal{O}}(κ^{3/2}\log^2(1/\varepsilon))$ queries and improving the condition-number dependence over standard quantum linear-system algorithms in this factorized setting.
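The representation behind this approach rests on the scalar Gaussian identity $e^{-Tω^2} = \frac{1}{\sqrt{πT}} \int_0^\infty e^{-s^2/(4T)} \cos(sω)\, ds$, applied with $ω = \sqrt{A}$. The sketch below checks that identity numerically for a small matrix $A = L^\dagger L$ using classical diagonalization and a truncated trapezoid rule. It is only a sanity check of the formula, not the quantum algorithm: the function name, the truncation `s_max_factor`, and the grid size are assumptions, and on a quantum device $\cos(s\sqrt{A})$ would come from unitary wave propagation rather than an eigendecomposition.

```python
import numpy as np

def heat_semigroup_via_kannai(A, T, s_max_factor=12.0, n_points=4001):
    """Approximate exp(-T A) for Hermitian PSD A as a Gaussian-weighted
    integral of cos(s sqrt(A)) over s in [0, s_max]."""
    eigvals, V = np.linalg.eigh(A)
    omega = np.sqrt(np.clip(eigvals, 0.0, None))   # spectrum of sqrt(A)

    # Truncate where the Gaussian weight exp(-s^2 / 4T) is ~ exp(-36).
    s = np.linspace(0.0, s_max_factor * np.sqrt(T), n_points)
    ds = s[1] - s[0]

    # Gaussian weights with trapezoid end-point corrections.
    weights = np.exp(-s ** 2 / (4.0 * T)) / np.sqrt(np.pi * T)
    weights[0] *= 0.5
    weights[-1] *= 0.5

    # For each eigenvalue, integrate weight(s) * cos(s * omega) over s.
    cos_vals = np.cos(np.outer(s, omega))          # shape (n_points, dim)
    diag = ds * (weights @ cos_vals)               # shape (dim,)

    # Rebuild the matrix function in the eigenbasis of A.
    return (V * diag) @ V.conj().T
```

Because the Gaussian weight decays rapidly, the integral can be truncated at $s = O(\sqrt{T \log(1/\varepsilon)})$, which is consistent with the square-root dependence on $T$ in the stated query complexity.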

We show that Weyl's abandoned idea of local scale invariance has a natural realization at the quantum level in pilot-wave (de Broglie-Bohm) theory. We obtain the Weyl covariant derivative by complexifying the electromagnetic gauge coupling parameter. The resultant non-hermiticity has a natural interpretation in terms of local scale invariance of the quantum state in pilot-wave theory. The conserved current density is modified from $|ψ|^2$ to the local scale invariant, trajectory-dependent ratio $|ψ|^2/ \mathbf{1}^2[\mathcal{C}]$, where $\mathbf 1[\mathcal C]$ is a scale factor that depends on the pilot-wave trajectory $\mathcal C$ in configuration space. Our approach is general, and we implement it for the Schrödinger, Pauli, and Dirac equations coupled to an external electromagnetic field. We also implement it in quantum field theory for the case of a quantized axion field interacting with a quantized electromagnetic field. We discuss the equilibrium probability density and show that the corresponding trajectories are unique.

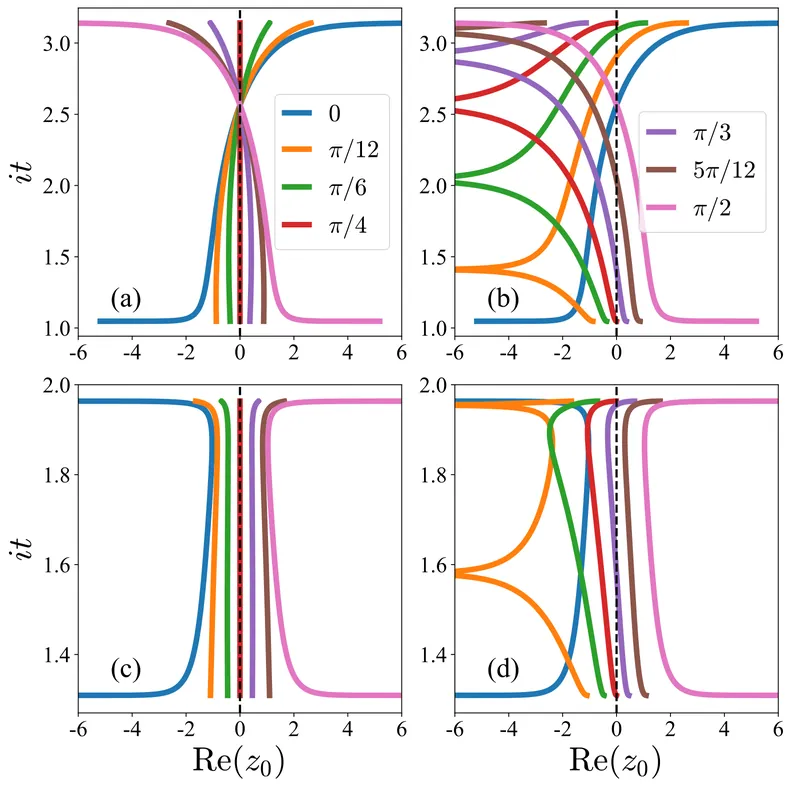

We propose a protocol to tailor dynamical quantum phase transitions (DQPTs) by applying double-mode squeezing to the initial state in the XY chain. The effect of squeezing depends critically on the system's symmetry and parameters. When the squeezing operator breaks particle-hole symmetry (PHS), DQPTs become highly tunable, allowing one to either induce transitions within a single phase or suppress them. Remarkably, when PHS is preserved and the squeezing strength reaches $r=π/4$, a universal class of DQPTs emerges, independent of the quench path. This universality is characterized by two key features: (i) the collapse of all Fisher zeros onto the real-time axis, and (ii) the saturation of intermode entanglement to its maximum in each $(k,-k)$ mode pair. Moreover, the critical momenta governing the DQPTs coincide exactly with the modes attaining the maximal entanglement. At this universal point, the dynamical phase vanishes, leading to a purely geometric evolution marked by $π$-jumps in the Pancharatnam geometric phase. Our work establishes initial-state squeezing as a versatile tool for tailoring far-from-equilibrium criticality and reveals a direct link between entanglement saturation and universal nonanalytic dynamics.

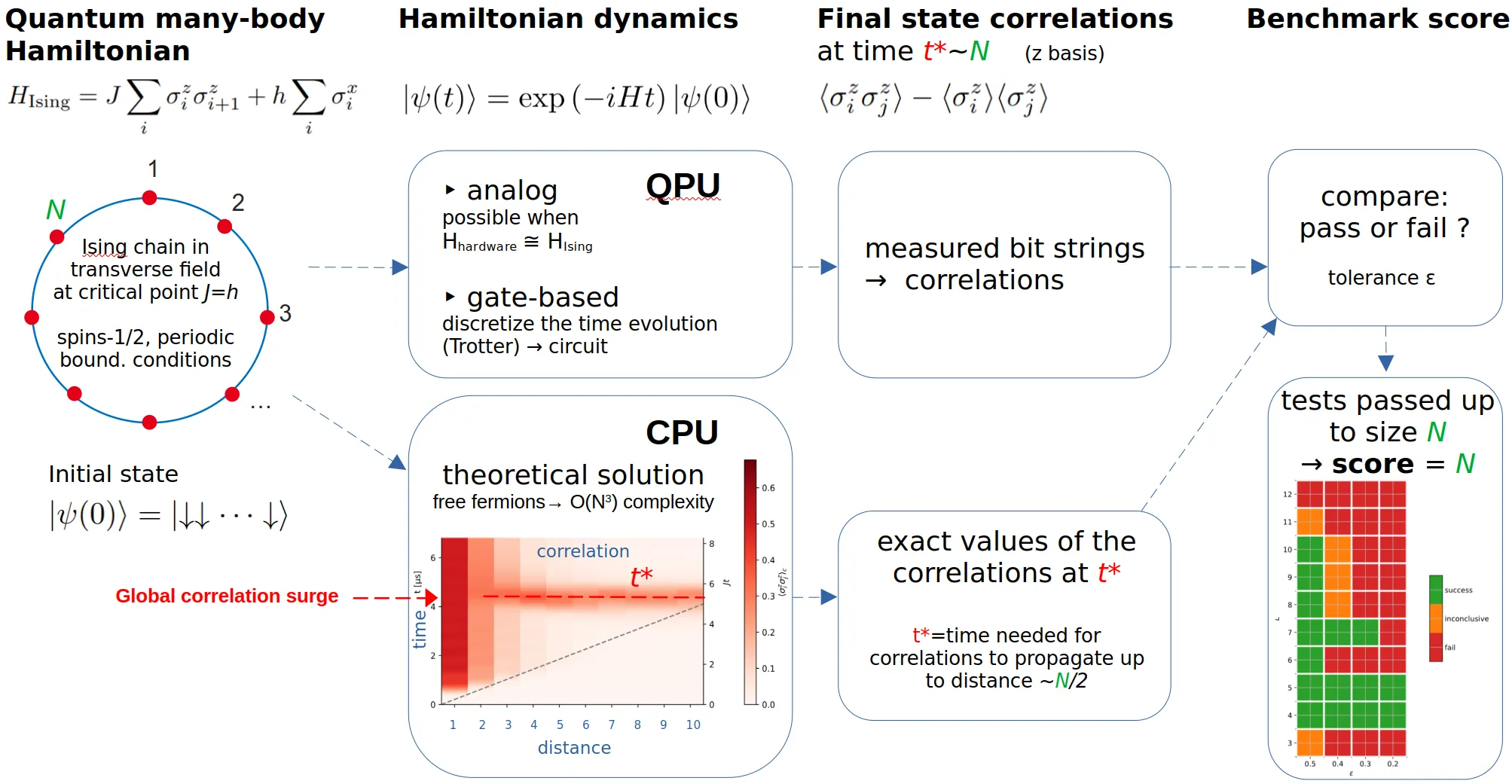

We propose the Many-body Quantum Score (MBQS), a practical and scalable application-level benchmark protocol designed to evaluate the capabilities of quantum processing units (QPUs)--both gate-based and analog--for simulating many-body quantum dynamics. MBQS quantifies performance by identifying the maximum number of qubits with which a QPU can reliably reproduce correlation functions of the transverse-field Ising model following a specific quantum quench. This paper presents the MBQS protocol and highlights its design principles, supported by analytical insights, classical simulations, and experimental data. It also displays results obtained with Ruby, an analog QPU based on Rydberg atoms developed by the Pasqal company. These findings demonstrate MBQS's potential as a robust and informative tool for benchmarking near-term quantum devices for many-body physics.