Economic Theory

Microeconomic theory, game theory, and mechanism design


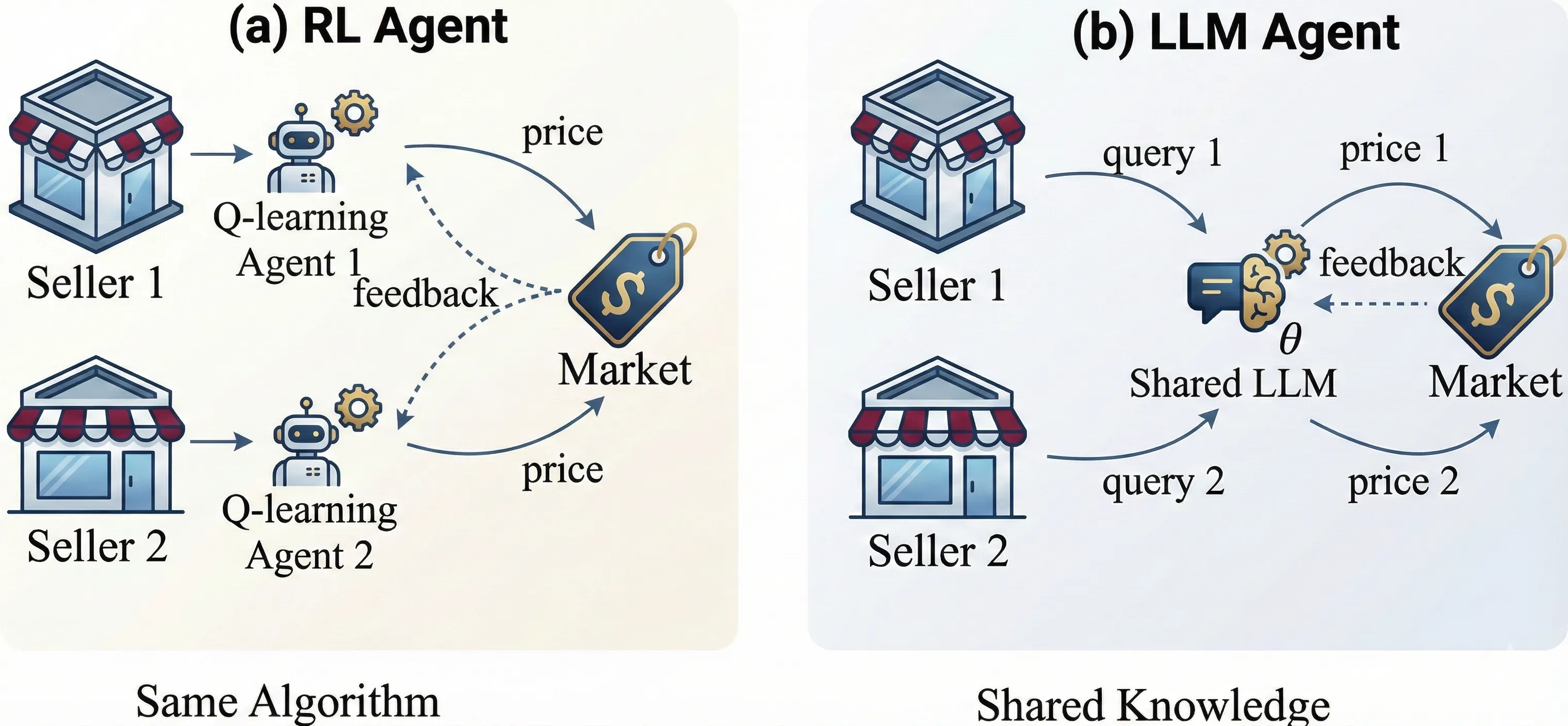

We study how delegating pricing to large language models (LLMs) can facilitate collusion in a duopoly when both sellers rely on the same pre-trained model. The LLM is characterized by (i) a propensity parameter capturing its internal bias toward high-price recommendations and (ii) an output-fidelity parameter measuring how tightly outputs track that bias; the propensity evolves through retraining. We show that configuring LLMs for robustness and reproducibility can induce collusion via a phase transition: there exists a critical output-fidelity threshold that pins down long-run behavior. Below it, competitive pricing is the unique long-run outcome. Above it, the system is bistable, with competitive and collusive pricing both locally stable and the realized outcome determined by the model's initial preference. The collusive regime resembles tacit collusion: prices are elevated on average, yet occasional low-price recommendations provide plausible deniability. With perfect fidelity, full collusion emerges from any interior initial condition. For finite training batches of size $b$, infrequent retraining (driven by computational costs) further amplifies collusion: conditional on starting in the collusive basin, the probability of collusion approaches one as $b$ grows, since larger batches dampen stochastic fluctuations that might otherwise tip the system toward competition. The indeterminacy region shrinks at rate $O(1/\sqrt{b})$.
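The batch-size effect rests on a standard sampling fact. Suppose retraining sees the empirical share of high-price recommendations in a batch of b independent draws. That share then has standard deviation sqrt(p(1 - p)/b), so the fluctuations that could tip the propensity out of the collusive basin shrink like 1/sqrt(b). The sketch below assumes i.i.d. Bernoulli recommendations with a hypothetical high-price probability `p`. It illustrates only the scaling and is not the paper's retraining dynamics.

```python
import math
import random


def batch_share_sd(p: float, b: int) -> float:
    """Exact standard deviation of the high-price share in a batch of b i.i.d. draws."""
    return math.sqrt(p * (1.0 - p) / b)


def simulated_share_sd(p: float, b: int, trials: int = 2000, seed: int = 0) -> float:
    """Monte Carlo check of the same quantity under the hypothetical Bernoulli model."""
    rng = random.Random(seed)
    shares = [sum(rng.random() < p for _ in range(b)) / b for _ in range(trials)]
    mean = sum(shares) / trials
    return math.sqrt(sum((s - mean) ** 2 for s in shares) / trials)


# Quadrupling the batch size halves the typical fluctuation of the retraining signal.
for b in (25, 100, 400):
    print(b, round(batch_share_sd(0.7, b), 4), round(simulated_share_sd(0.7, b), 4))
```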

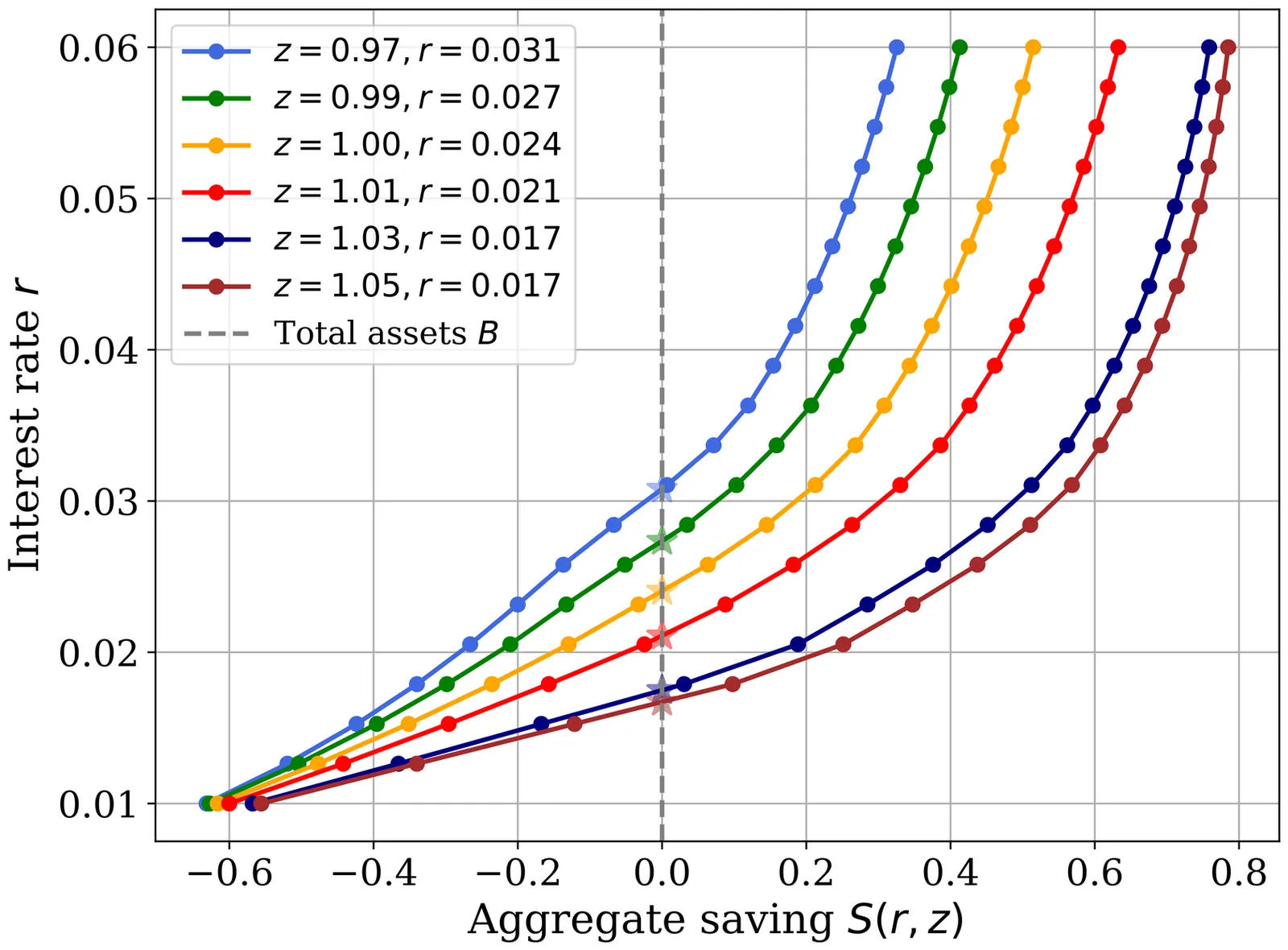

We present a new approach to formulating and solving heterogeneous agent models with aggregate risk. We replace the cross-sectional distribution with low-dimensional prices as state variables and let agents learn equilibrium price dynamics directly from simulated paths. To do so, we introduce a structural reinforcement learning (SRL) method which treats prices via simulation while exploiting agents' structural knowledge of their own individual dynamics. Our SRL method yields a general and highly efficient global solution method for heterogeneous agent models that sidesteps the Master equation and handles problems traditional methods struggle with, in particular nontrivial market-clearing conditions. We illustrate the approach in the Krusell-Smith model, the Huggett model with aggregate shocks, and a HANK model with a forward-looking Phillips curve, all of which we solve globally within minutes.

2512.10203

We study Sybil manipulation in BRACE, a competitive equilibrium mechanism for combinatorial exchanges, by treating identity creation as a finite perturbation of the empirical distribution of reported types. Under standard regularity assumptions on the excess demand map and smoothness of principal utilities, we obtain explicit linear bounds on the price and welfare deviations induced by a bounded Sybil invasion. Using these bounds, we prove a sharp contrast: strategyproofness in the large holds if and only if each principal's share of identities vanishes, whereas any principal with a persistent positive share can construct deviations yielding strictly positive limiting gains. We further show that the feasibility of BRACE fails when the population of Sybils is unbounded, and we provide a precise cost threshold that disincentivizes such attacks in large markets.

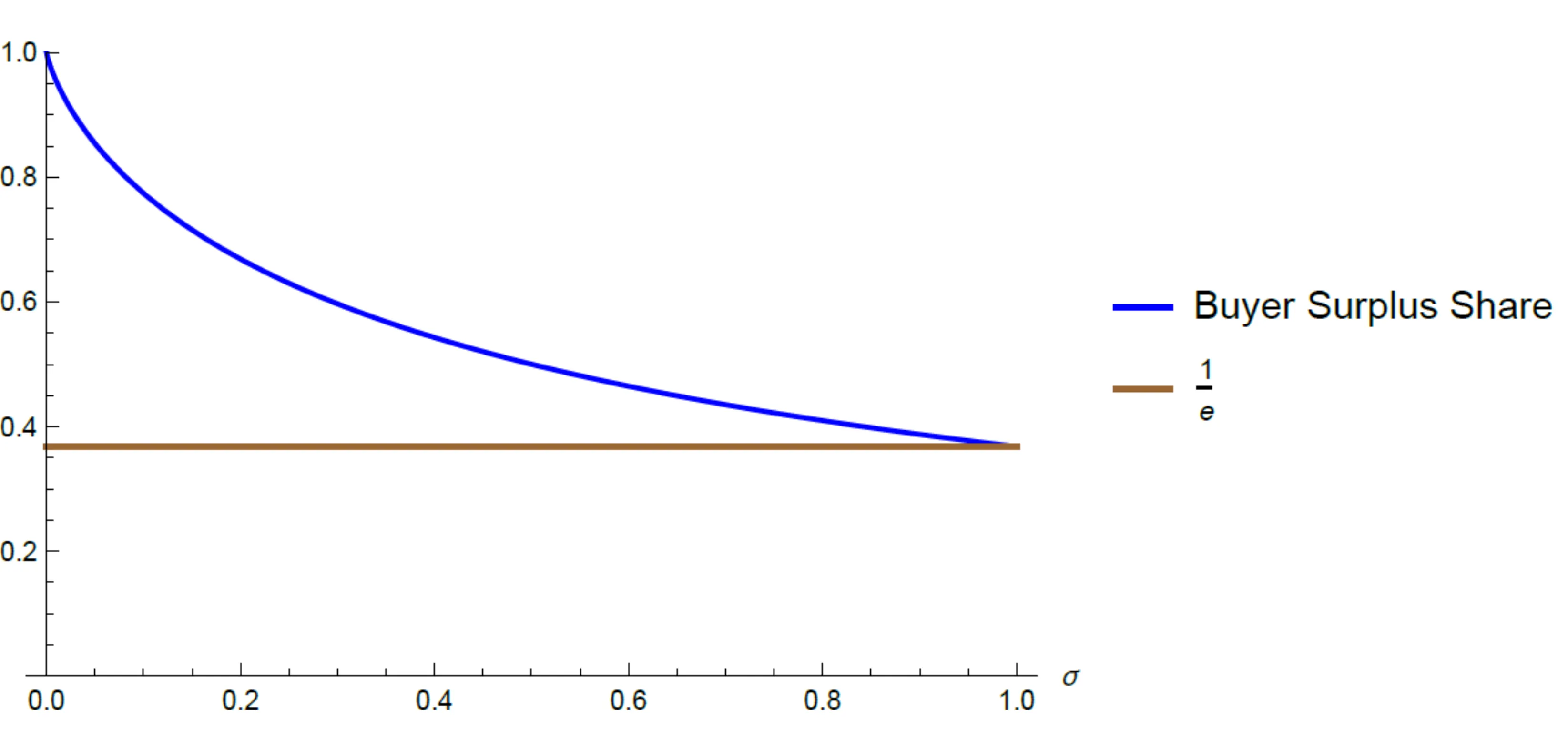

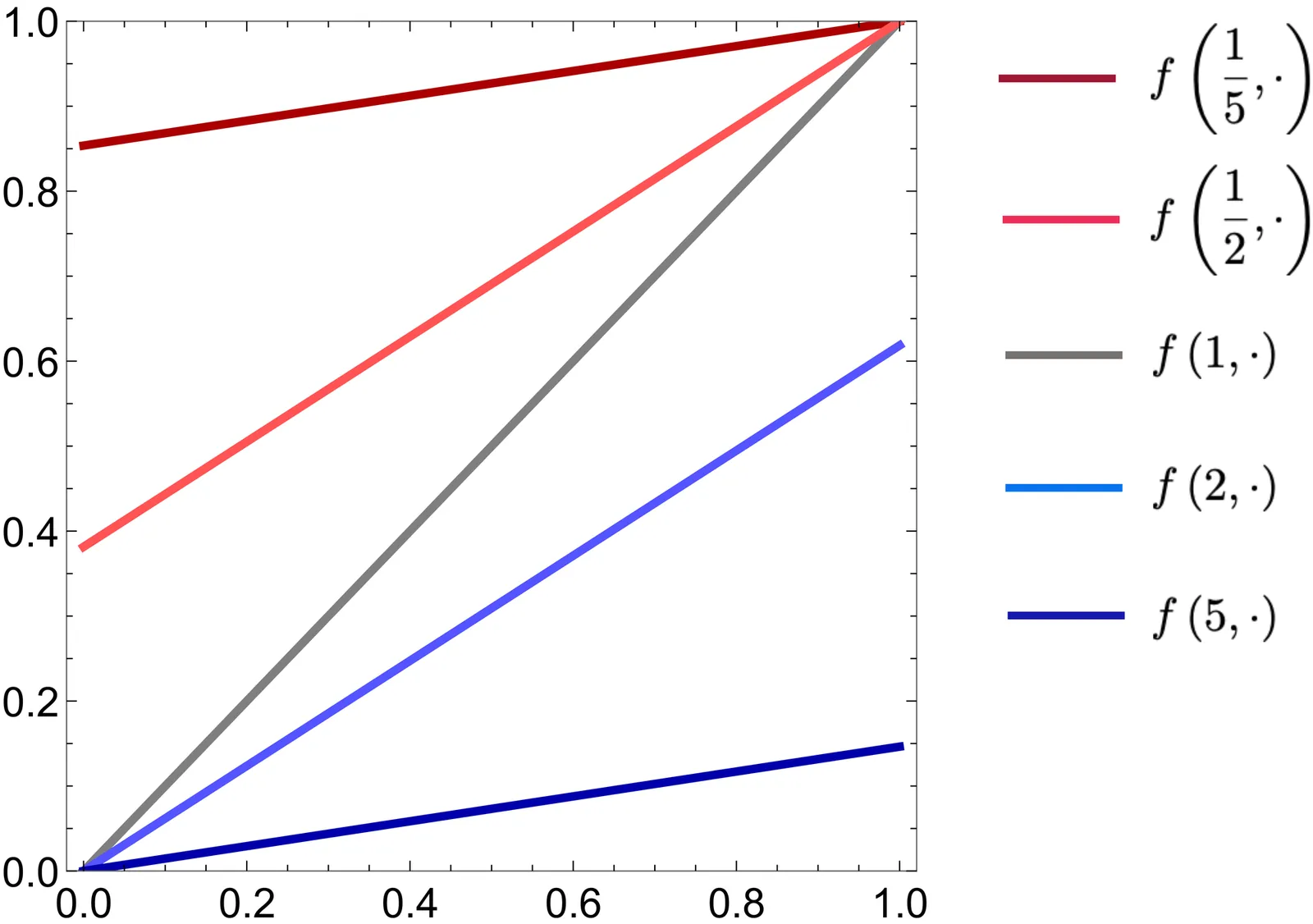

How should a buyer design procurement mechanisms when suppliers' costs are unknown and the buyer has no prior belief about them? We demonstrate that simple mechanisms, which share a constant fraction of the buyer's utility with the seller, allow the buyer to realize a guaranteed positive fraction of the efficient social surplus across all possible costs. Moreover, a judicious choice of the share, based on the known demand, attains the largest surplus-ratio guarantee achievable by any mechanism, however complex and nonlinear, uniformly across all cost functions. Similar results hold in related nonlinear pricing and optimal regulation problems.
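The toy instance below makes the share mechanism concrete. It uses purely hypothetical functional forms:

- The buyer's utility from quantity q is u(q) = 2*sqrt(q).
- The seller's cost is c(q) = q.
- The buyer pays the seller a fixed share alpha of u(q).

The seller then chooses q to maximize alpha*u(q) - c(q), and the sketch compares the buyer's surplus with the efficient surplus. It grid-searches a single cost function and is not the paper's worst-case analysis over all costs.

```python
import math
from typing import Callable


def share_mechanism_outcome(alpha: float,
                            u: Callable[[float], float],
                            c: Callable[[float], float],
                            q_max: float = 4.0,
                            steps: int = 40_000) -> tuple[float, float]:
    """Return (buyer surplus, efficient surplus) on a quantity grid over [0, q_max]."""
    grid = [q_max * i / steps for i in range(steps + 1)]
    # The seller best-responds to the share contract: maximize alpha*u(q) - c(q).
    q_seller = max(grid, key=lambda q: alpha * u(q) - c(q))
    buyer_surplus = (1.0 - alpha) * u(q_seller)
    # Benchmark: total surplus at the quantity a fully informed planner would pick.
    efficient_surplus = max(u(q) - c(q) for q in grid)
    return buyer_surplus, efficient_surplus


def utility(q: float) -> float:
    return 2.0 * math.sqrt(q)  # hypothetical buyer utility


def cost(q: float) -> float:
    return q  # hypothetical seller cost


for alpha in (0.25, 0.5, 0.75):
    buyer, efficient = share_mechanism_outcome(alpha, utility, cost)
    print(f"alpha={alpha}: buyer keeps {buyer / efficient:.3f} of the efficient surplus")
```

In this instance the seller supplies q = alpha^2, so the buyer's surplus ratio is 2*alpha*(1 - alpha), which peaks at alpha = 1/2. The abstract's point is different: it concerns choosing the share so that the guarantee holds against every cost function at once.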

2512.07798

We study optimal auction design in an independent private values environment where bidders can endogenously, but at a cost, improve their information about their own valuations. The optimal mechanism has two stages: in stage 1, bidders register an information acquisition plan and pay a transfer; in stage 2, they bid, and allocation and payments are determined. We show that the revenue-optimal stage-2 rule is the Vickrey-Clarke-Groves (VCG) mechanism, while stage-1 transfers implement the optimal screening of types and absorb information rents consistent with incentive compatibility and participation. Because the designer commits to VCG ex post, the pre-auction information game becomes a potential game, so equilibrium information choices maximize expected welfare; the stage-1 fee schedule then transfers an optimal amount of payoff without conditioning on unverifiable cost scales. The design is robust to asymmetric primitives and accommodates a wide range of information technologies, providing a simple implementation that unifies efficiency and optimal revenue in environments with endogenous information acquisition.
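The stage-2 rule is VCG. The sketch below computes VCG with Clarke pivot payments by brute force. It assumes a hypothetical setting with k identical items and unit-demand bidders; with k = 1 it reduces to the second-price auction. It covers only the stage-2 allocation and payments, not the stage-1 registration fees.

```python
from itertools import combinations


def vcg_unit_demand(bids: list[float], k: int) -> tuple[list[int], list[float]]:
    """VCG (Clarke pivot) for k identical items, each bidder wanting at most one.

    The allocation maximizes reported welfare. Bidder i pays the welfare the
    others would get without i minus the welfare the others get with i present.
    """
    n = len(bids)

    def best(bidders: list[int]) -> tuple[float, tuple[int, ...]]:
        # Brute-force welfare maximization over sets of at most k winners.
        best_val, best_set = 0.0, ()
        for size in range(1, min(k, len(bidders)) + 1):
            for s in combinations(bidders, size):
                val = sum(bids[j] for j in s)
                if val > best_val:
                    best_val, best_set = val, s
        return best_val, best_set

    _, winners = best(list(range(n)))
    payments = [0.0] * n
    for i in winners:
        others = [j for j in range(n) if j != i]
        welfare_without_i, _ = best(others)
        welfare_others_with_i = sum(bids[j] for j in winners if j != i)
        payments[i] = welfare_without_i - welfare_others_with_i
    return sorted(winners), payments


print(vcg_unit_demand([10, 7, 3], k=1))  # second-price: the top bidder pays 7
print(vcg_unit_demand([10, 7, 3], k=2))  # each winner pays the third-highest bid
```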

2512.03930

An axiomatic characterization of Nash equilibrium is provided for games in normal form. The Nash equilibrium correspondence is shown to be fully characterized by four simple and intuitive axioms, two of which are inspired by contraction and expansion consistency properties from the literature on abstract choice theory. The axiomatization applies to Nash equilibria in pure and mixed strategies alike, to games with strategy sets of any cardinality, and it does not require that players' preferences have a utility or expected utility representation.
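The axioms characterize the standard Nash equilibrium correspondence. As a concrete reference point, the sketch below enumerates the pure-strategy Nash equilibria of a finite normal-form game given as a payoff table. It assumes numeric payoffs, whereas the axiomatization itself does not require a utility representation.

```python
from itertools import product


def pure_nash_equilibria(payoffs: dict[tuple[str, ...], tuple[float, ...]],
                         strategies: list[list[str]]) -> list[tuple[str, ...]]:
    """Return all pure-strategy profiles where no player gains by deviating unilaterally."""
    equilibria = []
    for profile in product(*strategies):
        stable = True
        for i, options in enumerate(strategies):
            current = payoffs[profile][i]
            for alt in options:
                deviation = profile[:i] + (alt,) + profile[i + 1:]
                if payoffs[deviation][i] > current:
                    stable = False
                    break
            if not stable:
                break
        if stable:
            equilibria.append(profile)
    return equilibria


# Prisoner's dilemma with hypothetical payoffs: C = cooperate, D = defect.
pd = {
    ("C", "C"): (3, 3), ("C", "D"): (0, 5),
    ("D", "C"): (5, 0), ("D", "D"): (1, 1),
}
print(pure_nash_equilibria(pd, [["C", "D"], ["C", "D"]]))
```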

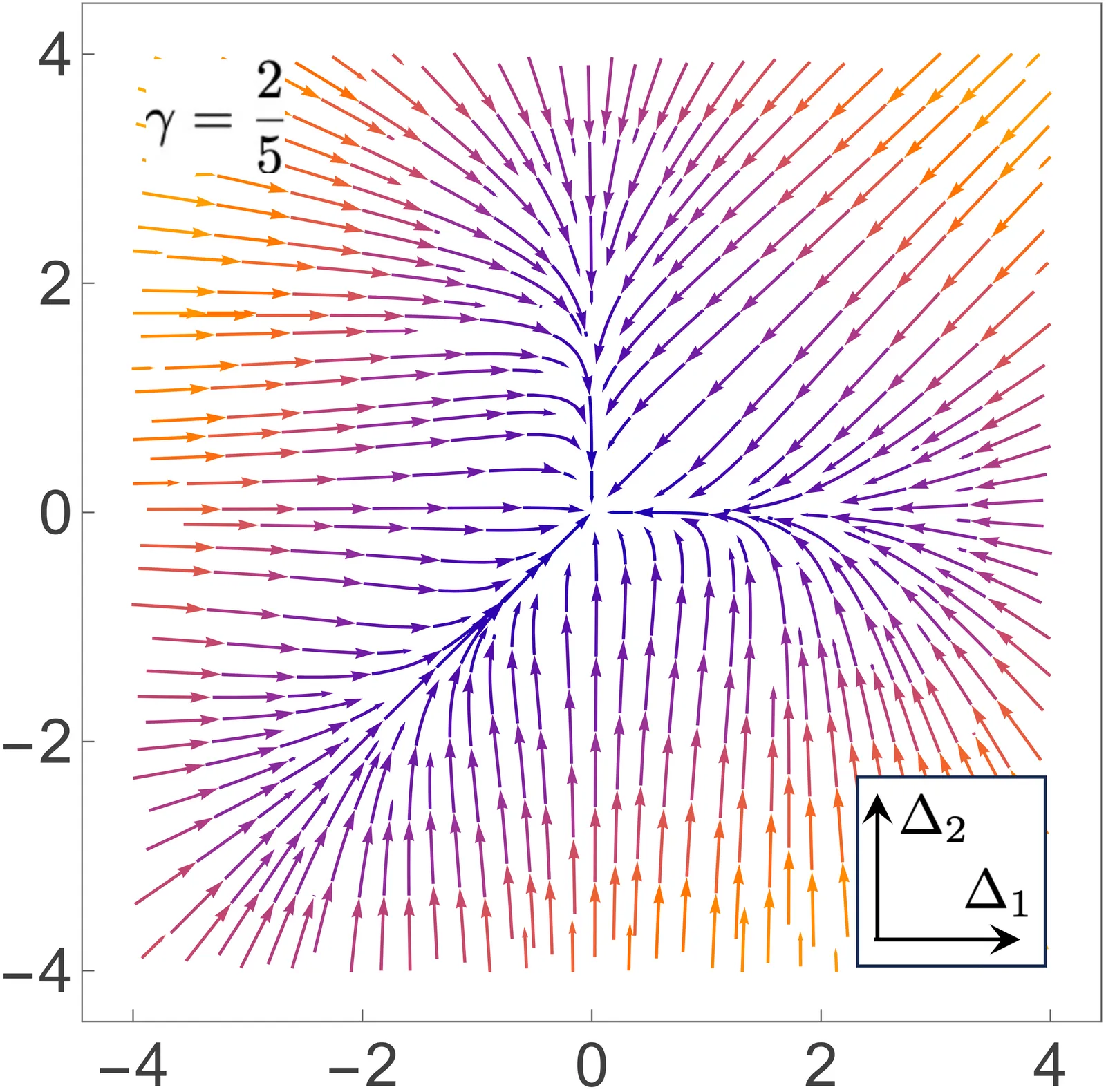

In a paper published in The Economic Journal in 2000, Weisbuch et al. introduced a model of buyers' preferences for the various sellers in over-the-counter (OTC) fish markets. Although this model has become an archetype of economic conceptualization that combines bounded rationality and myopic reasoning, the literature on its asymptotic behaviour has remained scarce. In this paper, we carry out a mathematical analysis of the dynamics and fully characterize it in the simplest case of homogeneous buyer populations. Using elements of the theory of cooperative dynamical systems, we prove that, regardless of the number of sellers and the parameter values, for almost every initial condition the trajectory asymptotically approaches a stationary state. Moreover, for sufficiently simple distributions of the sellers' attractiveness, we determine all stationary states and their parameter-dependent stability. This analysis shows that, in most cases, the asymptotic preferences are ordered in the same way as the sellers' attractiveness. However, depending on the parameters, there also exist robust functioning modes in which the sellers with the highest preference are not the ones that provide the highest profit.
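The sketch below simulates the homogeneous-buyer dynamics. It assumes the commonly cited form of the Weisbuch et al. model:

- Each buyer's preference J_j for seller j decays at rate gamma.
- The preference is reinforced by the profit pi_j earned when visiting j.
- Buyers visit sellers with logit probabilities exp(beta*J_j) / sum_k exp(beta*J_k).

The deterministic expected-value update and all parameter values are illustrative assumptions. At a stationary state, J_j = pi_j * p_j / gamma.

```python
import math


def visit_probabilities(J: list[float], beta: float) -> list[float]:
    """Logit choice probabilities; subtract the max for numerical stability."""
    m = max(J)
    weights = [math.exp(beta * (j - m)) for j in J]
    total = sum(weights)
    return [w / total for w in weights]


def step(J: list[float], pi: list[float], beta: float, gamma: float) -> list[float]:
    """One expected-value update: decay old preference, reinforce by expected profit."""
    p = visit_probabilities(J, beta)
    return [(1.0 - gamma) * Jj + pij * pj for Jj, pij, pj in zip(J, pi, p)]


def simulate(pi: list[float], beta: float, gamma: float,
             J0: list[float], iterations: int = 3000) -> list[float]:
    """Iterate the update from the initial preferences J0."""
    J = list(J0)
    for _ in range(iterations):
        J = step(J, pi, beta, gamma)
    return J


pi = [1.0, 2.0]  # hypothetical seller attractiveness (profit per visit)
J = simulate(pi, beta=0.05, gamma=0.1, J0=[0.0, 0.0])
print(J, visit_probabilities(J, 0.05))
```

With the small beta used here the stationary state is unique. Larger beta values are where the multiple stationary states and parameter-dependent stability discussed above can appear.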

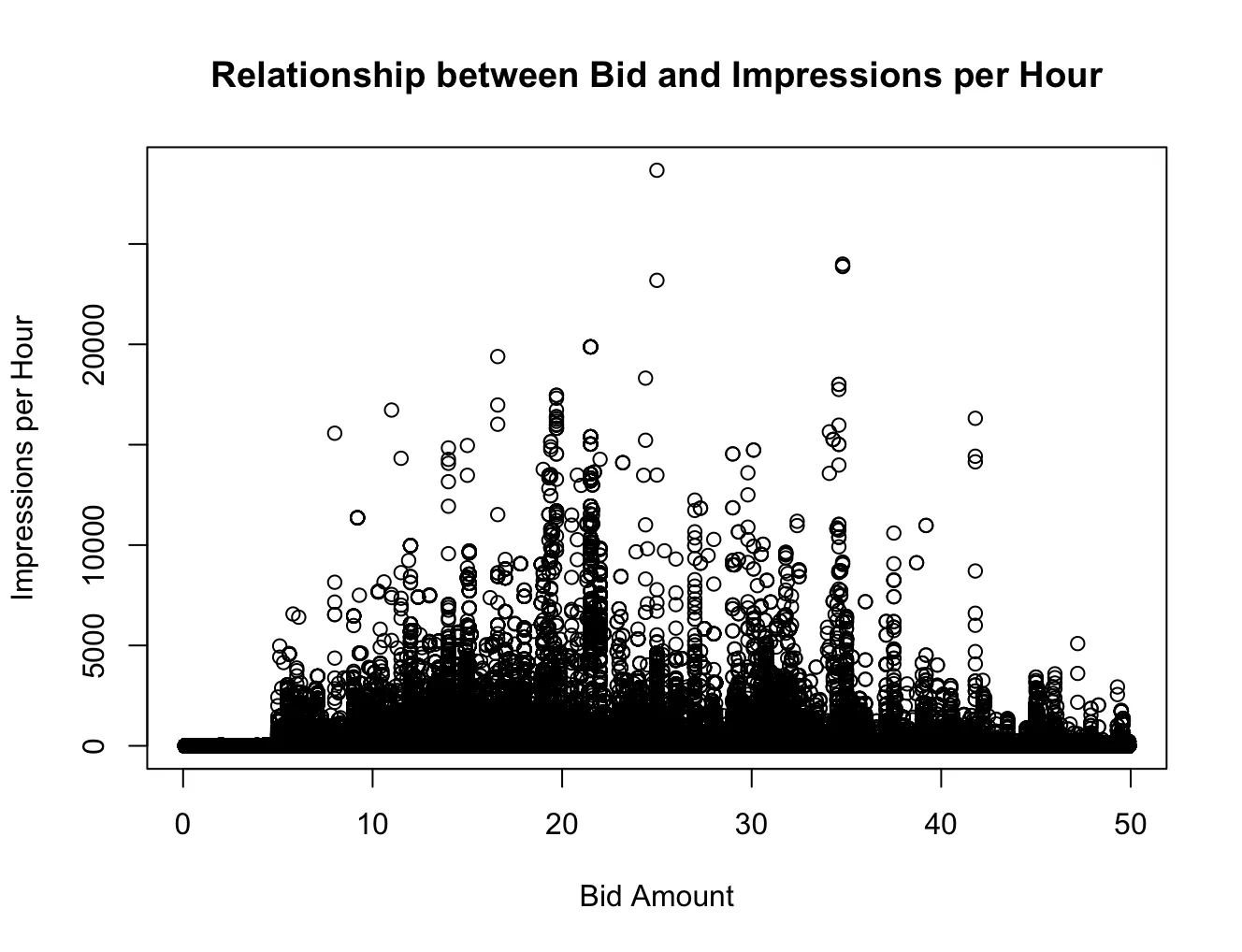

Traditional auction theory posits that, in ascending auctions, bid value is positively correlated with the probability of securing the auctioned object. However, under uncertainty and incomplete information, as is characteristic of real-time advertising markets, truthful bidding may not be a dominant strategy or yield a pure-strategy Nash equilibrium. Real-Time Bidding (RTB) platforms operationalize impression-level auctions via programmatic interfaces, where advertisers compete in first-price auction settings and often resort to bid shading, i.e., strategically submitting bids below their private valuations to optimize payoff. This paper empirically investigates bid shading behaviors and strategic adaptation using large-scale RTB auction data from the Yahoo Webscope dataset. Integrating Minority Game Theory with clustering algorithms and variance-scaling diagnostics, we analyze equilibrium bidding behavior across temporally segmented impression markets. Our results reveal the emergence of minority-based bidding strategies, wherein agents partition hourly ad slots into submarkets and place bids where they anticipate being in the numerical minority. This strategic heterogeneity reduces expenditure while increasing win probability, functioning as an endogenous bid-shading mechanism. The analysis highlights the computational and economic implications of minority strategies in shaping bidder dynamics and pricing outcomes in decentralized, high-frequency auction environments.
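The minority-based reading builds on the minority game. The sketch below implements the basic Challet-Zhang minority game together with the standard variance diagnostic sigma^2/N, reported against alpha = 2^m/N. The number of agents, memory length, scoring rule, and tie-breaking rule are illustrative assumptions. This is not the paper's RTB pipeline or its clustering step.

```python
import random


def minority_game(n_agents: int = 101, memory: int = 3, n_strategies: int = 2,
                  rounds: int = 2000, seed: int = 1) -> list[int]:
    """Basic Challet-Zhang minority game; returns the attendance A(t) per round.

    Each agent holds fixed random lookup-table strategies mapping the last
    `memory` winning sides to an action in {-1, +1}. It plays its best-scoring
    strategy, with ties going to the first.
    """
    if n_agents % 2 == 0:
        raise ValueError("use an odd number of agents so a strict minority exists")
    rng = random.Random(seed)
    n_hist = 2 ** memory
    strategies = [[[rng.choice((-1, 1)) for _ in range(n_hist)]
                   for _ in range(n_strategies)] for _ in range(n_agents)]
    scores = [[0] * n_strategies for _ in range(n_agents)]
    history = rng.randrange(n_hist)
    attendance = []
    for _ in range(rounds):
        actions = []
        for agent in range(n_agents):
            best = max(range(n_strategies), key=lambda s: scores[agent][s])
            actions.append(strategies[agent][best][history])
        A = sum(actions)
        winner = -1 if A > 0 else 1  # the minority side wins
        for agent in range(n_agents):
            for s in range(n_strategies):
                if strategies[agent][s][history] == winner:
                    scores[agent][s] += 1  # virtual payoff for would-be winners
        history = ((history << 1) | (winner == 1)) % n_hist
        attendance.append(A)
    return attendance


att = minority_game()
mean = sum(att) / len(att)
sigma2_per_agent = sum((a - mean) ** 2 for a in att) / len(att) / 101
print(f"sigma^2/N = {sigma2_per_agent:.3f} at alpha = 2^m/N = {2 ** 3 / 101:.3f}")
```

Agents flipping independent fair coins would give sigma^2/N = 1. Values below 1 therefore indicate that the agents coordinate better than chance.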

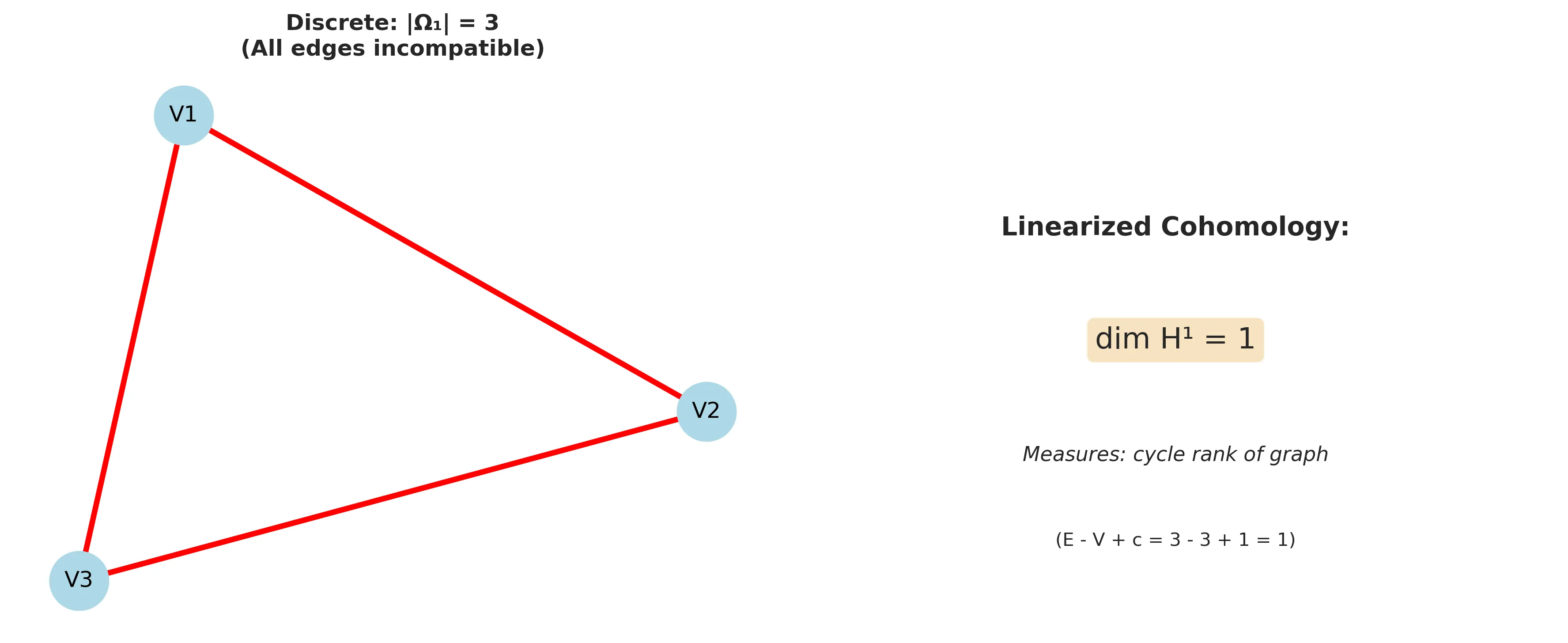

We introduce a graph-theoretic framework based on discrete sheaves to diagnose and localize inconsistencies in preference aggregation. Unlike traditional linearization methods (e.g., HodgeRank), this approach preserves the discrete structure of ordinal preferences and, via the Obstruction Locus, identifies which specific voter interactions cause aggregation failure, information that global methods cannot provide. We formalize the Incompatibility Index to quantify these local conflicts and examine their behavior under stochastic variation using the Mallows model. Additionally, we develop a rigorous sheaf-theoretic pushforward operation to model voter merging, implemented via a polynomial-time constraint DAG algorithm. We demonstrate that graph quotients transform distributed edge conflicts into local impossibilities (empty stalks), providing a topological characterization of how aggregation paradoxes persist across scales.
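The stochastic experiments use the Mallows model, in which the probability of a ranking decays geometrically in its Kendall tau distance from a reference ranking. One standard way to sample it is the repeated insertion model. The sketch below is an illustrative implementation with a hypothetical dispersion parameter `phi` in [0, 1], not the paper's code.

```python
import random


def sample_mallows(reference: list, phi: float, rng: random.Random) -> list:
    """Draw one ranking from a Mallows model via the repeated insertion model.

    The i-th reference item (1-based) is inserted at position j in {1, ..., i}
    with probability proportional to phi**(i - j). Inserting at the end adds no
    inversions, and each step further left adds one. phi = 0 returns the
    reference ranking; phi = 1 gives the uniform distribution.
    """
    ranking: list = []
    for i, item in enumerate(reference, start=1):
        weights = [phi ** (i - j) for j in range(1, i + 1)]
        j = rng.choices(range(1, i + 1), weights=weights)[0]
        ranking.insert(j - 1, item)
    return ranking


def kendall_tau_distance(a: list, b: list) -> int:
    """Number of item pairs ordered differently by rankings a and b."""
    pos = {item: k for k, item in enumerate(b)}
    seq = [pos[item] for item in a]
    return sum(1 for x in range(len(seq)) for y in range(x + 1, len(seq)) if seq[x] > seq[y])


# A hypothetical profile of five voters drawn around the reference A > B > C > D.
rng = random.Random(42)
reference = ["A", "B", "C", "D"]
profile = [sample_mallows(reference, phi=0.4, rng=rng) for _ in range(5)]
print([kendall_tau_distance(r, reference) for r in profile])
```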

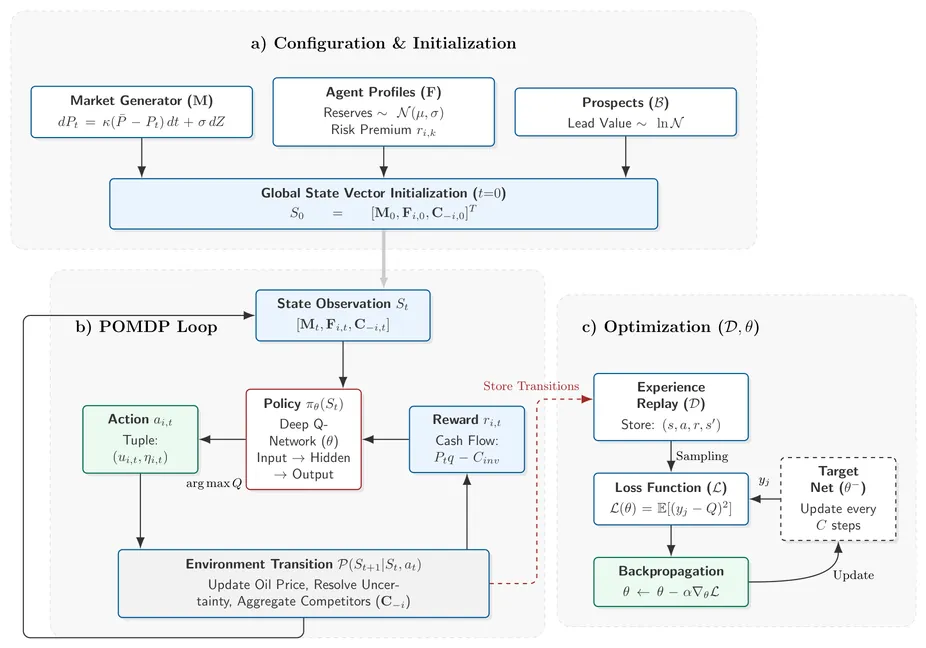

Our work investigates the economic efficiency of the prevailing "ladder-step" investment strategy in oil and gas exploration, which advocates the incremental acquisition of geological information throughout the project lifecycle. Using a multi-agent Deep Reinforcement Learning (DRL) framework, we model an alternative strategy that prioritizes the early acquisition of high-quality information assets. We simulate the entire upstream value chain (competitive bidding, exploration, and development phases) to evaluate the economic impact of this approach relative to traditional methods. Our results demonstrate that front-loading information investment significantly reduces the costs associated with redundant data acquisition and enhances the precision of reserve valuation. Specifically, we find that the alternative strategy outperforms traditional methods in highly competitive environments by mitigating the "winner's curse" through more accurate bidding. Furthermore, the economic benefits are most pronounced during the development phase, where superior data quality minimizes capital misallocation. These findings suggest that optimal investment timing is structurally dependent on market competition rather than solely on price volatility, offering a new paradigm for capital allocation in extractive industries.
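The winner's curse is easy to reproduce. When several bidders hold unbiased but noisy estimates of a common value, the highest estimate is biased upward, and the bias grows with both the noise and the number of bidders. The Monte Carlo sketch below assumes hypothetical Gaussian estimation noise. It illustrates why more precise information curbs overbidding but is not the paper's DRL model.

```python
import random
import statistics


def winners_curse(n_bidders: int, noise_sd: float, trials: int = 10_000,
                  true_value: float = 100.0, seed: int = 0) -> float:
    """Average amount by which the highest of n unbiased value estimates overshoots.

    Each bidder observes true_value + N(0, noise_sd^2). If the highest estimate
    wins and the bidder naively bids it, this is the winner's expected overpayment.
    """
    rng = random.Random(seed)
    overshoot = []
    for _ in range(trials):
        estimates = [rng.gauss(true_value, noise_sd) for _ in range(n_bidders)]
        overshoot.append(max(estimates) - true_value)
    return statistics.fmean(overshoot)


# Sharper information (smaller noise) shrinks the curse; more rivals enlarge it.
for sd in (2.0, 1.0, 0.5):
    print(sd, [round(winners_curse(n, sd), 3) for n in (2, 5, 10)])
```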

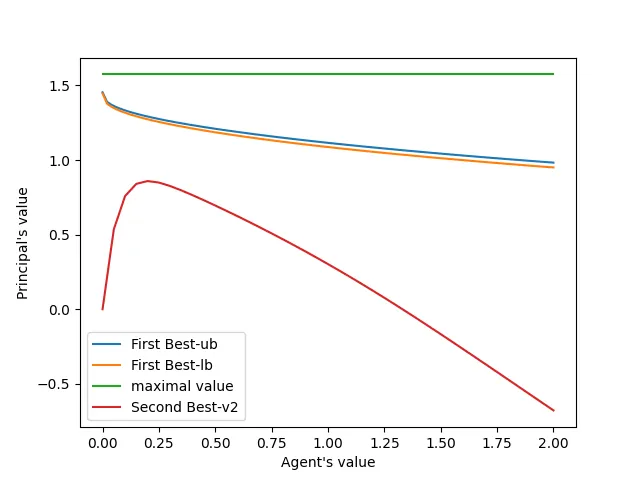

We study a continuous-time contracting model in which a principal hires a risk-averse agent to manage a project over a finite horizon and provides sequential payments whose timing is endogenously determined. The resulting nonzero-sum interaction between the principal and the agent is reformulated as a mixed control and stopping problem. Using numerical simulations, we investigate how factors such as the relative impatience of the parties and the number of bonus payments influence the principal's value and the structure of the optimal bonus payment scheme. A notable finding is that, in some contractual environments, the principal optimally offers a sign-on bonus to front-load incentives.

Electric power systems are increasingly turning to energy storage systems to balance supply and demand. But how much storage is required? What is the optimal volume of storage in a power system, and on what does it depend? In addition, what form of hedge contracts do storage facilities require? We answer these questions in the special case in which the uncertainty in the power system involves successive draws of an independent and identically distributed random variable. We characterize the conditions for the optimal operation of, and investment in, storage and show how these conditions can be understood graphically using price-duration curves. We also characterize the optimal hedge contracts for storage units.

This paper provides a unified approach to characterize the set of all feasible signals subject to privacy constraints. The Blackwell frontier of feasible signals can be decomposed into minimum informative signals achieving the Blackwell frontier of privacy variables, and conditionally privacy-preserving signals. A complete characterization of the minimum informative signals is then provided. We apply the framework to ex-post privacy (including differential and inferential privacy) and to constraints on posterior means of arbitrary statistics.

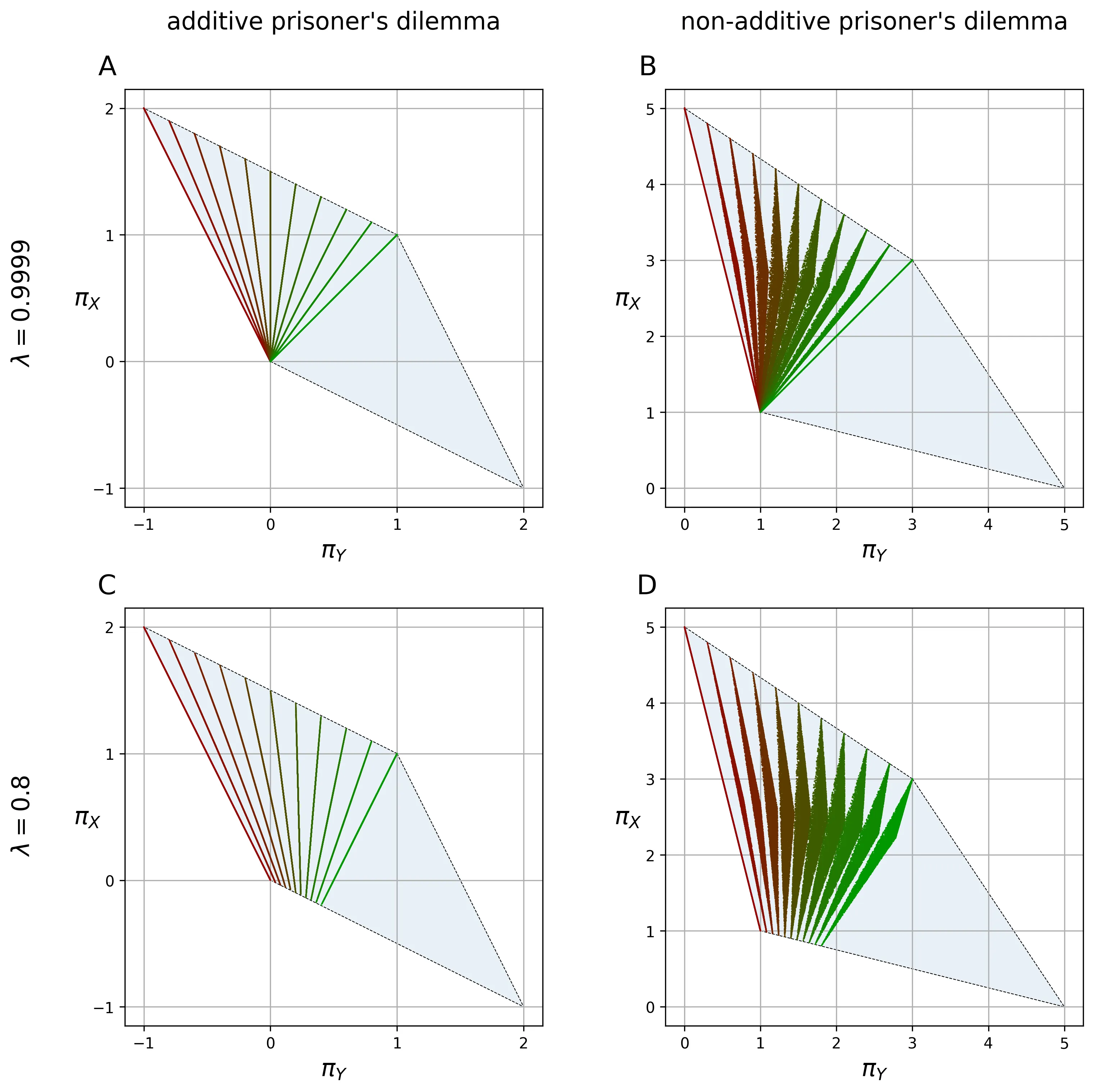

Originating in evolutionary game theory, the class of "zero-determinant" strategies enables a player to unilaterally enforce linear payoff relationships in simple repeated games. An upshot of this kind of payoff constraint is that it can shape the opponent's incentives in a predetermined way; for example, a player can ensure that both agents receive equal payoffs. While such strategies have been extensively studied in infinite-horizon games, their extensions to discounted games, nonlinear payoff relationships, richer strategic environments, and behaviors with long memory remain incompletely understood. In this paper, we provide necessary and sufficient conditions for a player to enforce arbitrary payoff relationships (linear or nonlinear), in expectation, in discounted games. These conditions characterize precisely which payoff relationships are enforceable using strategies of arbitrary complexity. Our main result establishes that any such enforceable relationship can in fact be implemented using a simple two-point reactive learning strategy, which conditions on the opponent's most recent action and the player's own previous mixed action, using information from only one round into the past; in other words, this tractable class is universal within expectation-enforcing strategies. For additive payoff constraints, we show that enforcement is possible using even simpler (reactive) strategies that depend solely on the opponent's last move. As examples, we apply these results to characterize extortionate, generous, equalizer, and fair strategies in the iterated prisoner's dilemma, the asymmetric donation game, the nonlinear donation game, and the hawk-dove game, identifying precisely when each class of strategy is enforceable and with what minimum discount factor.
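For intuition, the sketch below uses the classic undiscounted (limit-of-means) case from Press and Dyson rather than the discounted setting studied here. In the iterated prisoner's dilemma with payoffs (R, S, T, P) = (3, 0, 5, 1), the memory-one equalizer strategy p = (2/3, 0, 2/3, 1/3) pins the opponent's long-run average payoff at 2, whatever memory-one strategy the opponent uses. The code computes the stationary distribution by power iteration and checks this. The opponent strategies are arbitrary examples.

```python
# Outcomes ordered (CC, CD, DC, DD) from X's view: X's move first, then Y's.
S_X = [3.0, 0.0, 5.0, 1.0]  # X's payoffs (R, S, T, P)
S_Y = [3.0, 5.0, 0.0, 1.0]  # Y's payoffs in the same outcome order
EQUALIZER = [2 / 3, 0.0, 2 / 3, 1 / 3]  # Press-Dyson equalizer for X


def stationary_distribution(p: list[float], q: list[float],
                            iterations: int = 10_000) -> list[float]:
    """Long-run outcome frequencies when X plays memory-one p and Y plays memory-one q.

    p[k] and q[k] are cooperation probabilities after outcome k, each indexed
    from the player's own perspective (own move first).
    """
    y_view = [0, 2, 1, 3]  # X's CD is Y's DC, and vice versa
    v = [0.25] * 4
    for _ in range(iterations):
        nxt = [0.0] * 4
        for k in range(4):
            px, qy = p[k], q[y_view[k]]
            nxt[0] += v[k] * px * qy
            nxt[1] += v[k] * px * (1 - qy)
            nxt[2] += v[k] * (1 - px) * qy
            nxt[3] += v[k] * (1 - px) * (1 - qy)
        v = nxt
    return v


for q in ([1, 1, 1, 1], [0, 0, 0, 0], [0.9, 0.2, 0.6, 0.4]):
    v = stationary_distribution(EQUALIZER, q)
    print(q, round(sum(vi * s for vi, s in zip(v, S_Y)), 6))
```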

2511.16357

We study decentralized markets for goods whose utility perishes over time, with compute as a primary motivation. Recent advances in reproducible and verifiable execution allow jobs to pause, verify, and resume across heterogeneous hardware, which lets us treat compute as time-indexed capacity rather than bespoke bundles. We design an automated market maker (AMM) that posts an hourly price as a concave function of load, defined as the ratio of current demand to a "floor supply" (providers willing to work at a preset floor). This mechanism decouples price discovery from allocation and yields transparent, low-latency trading. We establish the existence and uniqueness of equilibrium quotes and give conditions under which the equilibrium is admissible (i.e., active supply weakly exceeds demand). To align incentives, we pair a premium-sharing pool (base cost plus a pro rata share of contemporaneous surplus) with a Cheapest Feasible Matching (CFM) rule; under mild assumptions, providers optimally stake early and fully and report their costs truthfully. Although CFM is simple and computationally efficient, we show that it attains bounded worst-case regret relative to an optimal benchmark.

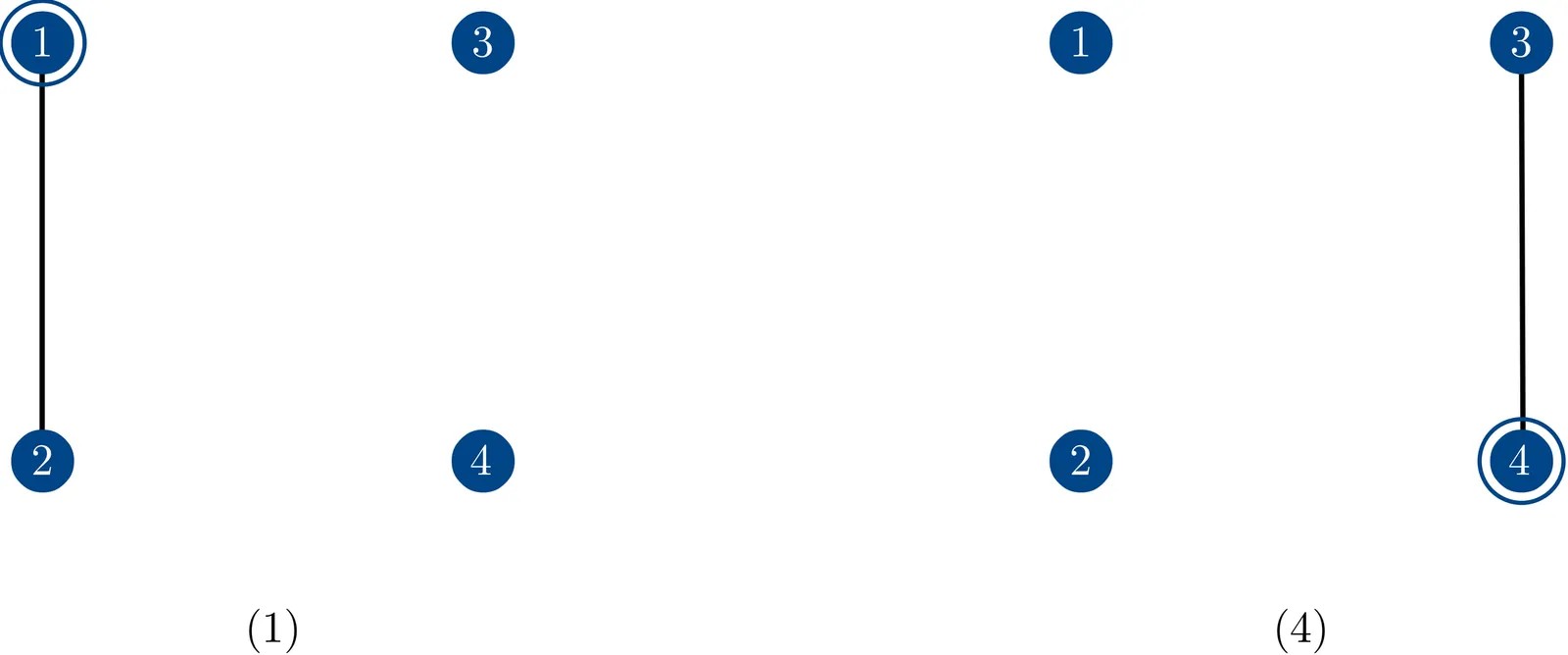

A planner wants to select one agent out of n agents on the basis of a binary characteristic that is commonly known to all agents but is not observed by the planner. Any two agents are either friends, enemies, or impartial toward each other. An agent's most preferred outcome is that she be selected. If she is not selected, she prefers that a friend be selected; if neither she nor a friend is selected, she prefers that an impartial agent be selected. Finally, her least preferred outcome is that an enemy be selected. The planner wants to design a dominant strategy incentive compatible (DSIC) mechanism in order to be able to choose a desirable agent. We derive sufficient conditions for the existence of efficient DSIC mechanisms when the planner knows the bilateral relationships between agents. We also show that if the planner does not know these relationships, then no efficient DSIC mechanism exists, and we compare the relative efficiency of two "second-best" DSIC mechanisms. Finally, we obtain sharp characterization results when the network of friends and enemies satisfies structural balance.
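The sharp results assume structural balance. The sketch below shows one way to test for it. It treats impartial pairs as absent edges, which is our assumption about how impartiality enters the signed network. It then uses the classical fact that a signed network is balanced exactly when the agents split into two camps, with friends within a camp and enemies across camps.

```python
from collections import deque


def is_structurally_balanced(n: int, relations: dict[tuple[int, int], int]) -> bool:
    """Check structural balance of a signed network on agents 0..n-1.

    `relations` maps an unordered pair (i, j) to +1 (friends) or -1 (enemies);
    impartial pairs are simply omitted (an assumption of this sketch). The check
    is a BFS two-coloring: friends must share a camp, enemies must not.
    """
    adj: list[list[tuple[int, int]]] = [[] for _ in range(n)]
    for (i, j), sign in relations.items():
        adj[i].append((j, sign))
        adj[j].append((i, sign))
    camp: list = [None] * n
    for start in range(n):
        if camp[start] is not None:
            continue
        camp[start] = 0
        queue = deque([start])
        while queue:
            u = queue.popleft()
            for v, sign in adj[u]:
                want = camp[u] if sign > 0 else 1 - camp[u]
                if camp[v] is None:
                    camp[v] = want
                    queue.append(v)
                elif camp[v] != want:
                    return False  # found a cycle with an odd number of enemy ties
    return True


# "The enemy of my enemy is my friend" is balanced; a lone enemy tie in a triangle is not.
print(is_structurally_balanced(3, {(0, 1): -1, (1, 2): -1, (0, 2): 1}))
print(is_structurally_balanced(3, {(0, 1): 1, (1, 2): 1, (0, 2): -1}))
```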

Given the combined evidence of bounded rationality, limited information, and short-term optimization, over-the-counter (OTC) fresh product markets provide an ideal setting in which to develop a behavioural approach to the analysis of microeconomic systems. Aiming to characterize, via rigorous mathematical analysis, the main features of the spontaneous organization and functioning of such markets, we introduce and study a stylized dynamical model for the time evolution of buyer populations and of prices/attractiveness at each wholesaler. The dynamics is governed by immediate reactions of the actors to changes in basic indicators. Buyers are influenced by some degree of loyalty to their regular suppliers, yet at times they also prospect for potentially better offers. Sellers, on the other hand, primarily aim to maximise their profit, yet they may also be prone to improving their competitiveness in case of a clientele deficit. Our results reveal that, despite being governed by simple and immediate rules, the competition between sellers self-regulates over time. It confines the dispersion of both prices and clientele volumes, as well as the mean clientele volume, to bounded ranges. It also generates oscillatory behaviours that prevent any seller from permanently dominating its competitors (or from being dominated forever). Long-term behaviours are also investigated, with a focus on asymptotic convergence to an equilibrium, as can be expected for a standard functioning mode. In particular, in the simplest case of 2 competing sellers, a normal-form-like analysis proves that such convergence holds provided that buyers' loyalty is sufficiently high or sellers' reactivity is sufficiently low. In other words, this result identifies the characteristics of the system that are responsible for long-term stability and the asymptotic damping of the oscillations.

Apportionment refers to the well-studied problem of allocating legislative seats among parties or groups with different entitlements. We present a multi-level generalization of apportionment where the groups form a hierarchical structure, which gives rise to stronger versions of the upper and lower quota notions. We show that running Adams' method level-by-level satisfies upper quota, while running Jefferson's method or the quota method level-by-level guarantees lower quota. Moreover, we prove that both quota notions can always be fulfilled simultaneously.
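The level-by-level results build on the classic single-level divisor methods. The sketch below implements Jefferson's and Adams' methods in their highest-averages form, using hypothetical vote counts, along with a check of the ordinary (single-level) quota conditions. The multi-level procedure would apply such a routine at each level of the hierarchy; that extension is not shown here.

```python
import math


def divisor_apportionment(votes: list[float], seats: int, method: str) -> list[int]:
    """Single-level divisor apportionment via highest averages.

    Jefferson awards each next seat to the largest votes / (s + 1). Adams awards
    it to the largest votes / s, so every group gets a first seat before any
    group gets a second. Ties go to the lower index.
    """
    if method not in ("jefferson", "adams"):
        raise ValueError("method must be 'jefferson' or 'adams'")
    alloc = [0] * len(votes)

    def priority(i: int) -> float:
        if method == "jefferson":
            return votes[i] / (alloc[i] + 1)
        return float("inf") if alloc[i] == 0 else votes[i] / alloc[i]

    for _ in range(seats):
        alloc[max(range(len(votes)), key=priority)] += 1
    return alloc


def quota_check(votes: list[float], seats: int, alloc: list[int]) -> tuple[bool, bool]:
    """Return (lower quota holds, upper quota holds) for a single-level allocation."""
    total = sum(votes)
    quotas = [v * seats / total for v in votes]
    lower = all(a >= math.floor(q) for a, q in zip(alloc, quotas))
    upper = all(a <= math.ceil(q) for a, q in zip(alloc, quotas))
    return lower, upper


votes = [100, 80, 30, 20]  # hypothetical entitlements
for m in ("jefferson", "adams"):
    alloc = divisor_apportionment(votes, 8, m)
    print(m, alloc, quota_check(votes, 8, alloc))
```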

2511.13736

Classic Rock-Paper-Scissors (RPS) has seen many variants and generalizations in the past several years. In a previous paper, we defined playability and balance for games and used these definitions to show that different forms of imbalance agree on the most balanced and least balanced forms of playable two-player n-object RPS games, referred to as (2,n)-RPS. We reintroduce these definitions here and show that, given a conjecture, the generalization of this game to m < 50 players is a strongly playable RPS game. We also show that this game maximizes these forms of imbalance in the limit as the number of players goes to infinity.
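The sketch below builds one common balanced construction of a two-player n-object RPS game, for odd n. Each object beats the (n - 1)/2 objects that follow it cyclically, so every object wins and loses against the same number of rivals. For n = 3 this is ordinary RPS up to relabeling. Whether this construction coincides with the paper's most balanced form is not claimed here, and the paper's playability and balance measures are not implemented.

```python
def beats(a: int, b: int, n: int) -> bool:
    """Cyclic n-object RPS (n odd): object a beats the (n - 1) // 2 objects after it."""
    if n % 2 == 0:
        raise ValueError("this balanced construction needs an odd number of objects")
    return 1 <= (b - a) % n <= (n - 1) // 2


def outcome_matrix(n: int) -> list[list[int]]:
    """+1 if the row object beats the column object, -1 if it loses, 0 for a tie."""
    return [[0 if a == b else (1 if beats(a, b, n) else -1) for b in range(n)]
            for a in range(n)]


for row in outcome_matrix(5):
    print(row)  # every row sums to zero: as many wins as losses
```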

2511.03145

We consider fair and consistent extensions of the Shapley value for games with externalities. Based on the restriction identified by Casajus et al. (2024, Games Econ. Behavior 147, 88-146), we define balanced contributions, Sobolev's consistency, and Hart and Mas-Colell's consistency for games with externalities, and we show that these properties lead to characterizations of the generalization of the Shapley value introduced by Macho-Stadler et al. (2007, J. Econ. Theory 135, 339-356), that parallel important characterizations of the Shapley value.
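For reference, the classic Shapley value that these results generalize can be computed exactly for small games by averaging marginal contributions over all orderings of the players. The sketch below handles transferable-utility games without externalities only. Games with externalities would need the Macho-Stadler et al. extension, which is not shown. The glove game is a hypothetical example.

```python
from fractions import Fraction
from itertools import permutations
from typing import Callable, FrozenSet


def shapley_value(n: int, v: Callable[[FrozenSet[int]], Fraction]) -> list[Fraction]:
    """Exact Shapley value: average marginal contribution over all n! arrival orders."""
    totals = [Fraction(0)] * n
    orders = list(permutations(range(n)))
    for order in orders:
        coalition: set[int] = set()
        for player in order:
            before = v(frozenset(coalition))
            coalition.add(player)
            totals[player] += v(frozenset(coalition)) - before
    return [t / len(orders) for t in totals]


def glove_game(s: FrozenSet[int]) -> Fraction:
    """Player 0 holds a left glove; players 1 and 2 hold right gloves; a pair is worth 1."""
    return Fraction(1) if 0 in s and (1 in s or 2 in s) else Fraction(0)


print(shapley_value(3, glove_game))  # the scarce left glove captures most of the value
```

Enumerating all n! orders keeps the code short but limits it to a handful of players.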